Years ago, when troubleshooting performance, I stumbled across this Microsoft documentation on parallel query processing that says:

Certain types of statements cannot be processed in parallel unless they contain clauses, however. For example, UPDATE, INSERT, and DELETE are not normally processed in parallel even if the related query meets the criteria. But if the UPDATE or DELETE statements contain a WHERE clause, or an INSERT statement contains a SELECT clause, WHERE and SELECT can be executed in parallel. Changes are applied serially to the database in these cases.

Thank goodness I bookmarked it, because I moved on with my life and totally forgot about it until recently. I had to troubleshoot a couple of different slow deletes, and in both cases, parallelism questions came up. To help tell the story, I’ve reconstructed versions of them with the large Stack Overflow database.

I figured I should blog about this because I polled y’all on Twitter, and you weren’t sure about it either:

Do DELETE queries go parallel in SQL Server? (Assume just one big table in the query, no joins, no subqueries.) Poll:

— Brent Ozar (@BrentO) August 27, 2020

And there was even disagreement between those of y’all who WERE sure, hahaha, so let’s walk through it.

Problem 1: Cleaning Out a Table

Say you need to periodically erase the contents of a table and start over, and your app doesn’t have the permissions required to do a truncate table. Right now, all you’re doing is a simple delete:

|

1 |

DELETE dbo.Posts; |

The execution plan isn’t pretty:

For this demo, I’m using the 40 million row, 105GB Posts table, and it’s got 13 indexes on it. This database is the starting point for the Mastering classes – I drop different indexes on it depending on which lab folks are doing – and it happens to nicely replicate the kind of problem the client faced.

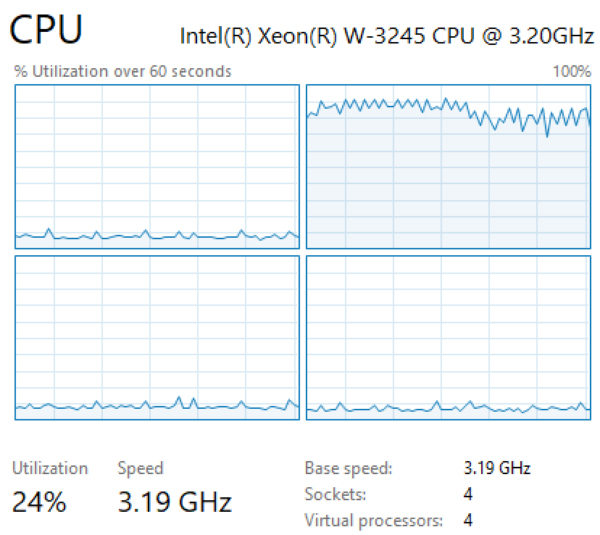

The plan has no parallelism operators, which in turn heats up one CPU core, which then sets 148,723 query bucks on fire:

Watching the live query plans with sp_BlitzWho, we can see SQL Server working through the plan, one index at a time, sorting millions of rows of data. One index at a time. Two minutes, Turkish.

This query is single-threaded, but…could we get a parallel operator anywhere in the plan, and if so, will the query go faster? Well, we can kinda sorta gain parallelism by injecting a meaningless filter that matches all rows. In that case, SQL Server uses parallelism to “seek” on an index, discovers that all rows match, and then sets about doing the delete. However, there’s no parallelism in the delete operation itself, just during the meaningless operation to “find” the rows that match, which is of course all of them. The delete doesn’t finish any faster.

Anyhoo, setting aside stunt examples, back to the problem itself. I can totally understand the developer’s frustration because with this kind of query, you would think that SQL Server would:

- Take out an exclusive lock on the table

- Start deleting the contents of all of the indexes in parallel (since every row in every index has to be deleted, and we have an exclusive lock on the table, so it’s not like we need to worry about Halloween protection)

In that case, it helps to reset perspectives and say, “The real problem here isn’t parallelism: we just shouldn’t be doing all this work at all, let alone spreading it across multiple cores.” Better solutions for this particular problem:

- Use K. Brian Kelley’s post on truncating tables with the least permissions, and then ideally, use a cert-signed stored procedure to do the dirty deed

- If you can’t truncate the table, drop it and create a new one in its place – which has all kinds of problems around security, foreign keys, objects that may have been added to the table (like indexes and triggers)

- If you can’t do any of that, at least drop all the indexes first, then recreate them after the table is empty (which is what we ended up doing in this case, bizarrely, because it presented the least security issues for that particular situation – sometimes the right solution isn’t politically feasible)

Problem 2: Cleaning Out Parts of a Table

Truncating was a no-brainer there, but things got tougher with the second query. Say we have another table – I’ll use the 66M row, 22GB Comments table in this example. Say we regularly run a clean-out project that removes all comments whose score is lower than the overall average:

|

1 2 3 |

DELETE c FROM dbo.Comments c WHERE c.Score < (SELECT AVG(Score * 1.0) FROM dbo.Comments); |

And say we don’t want to add a Score index just for the delete process, because the app wouldn’t use it for any other purpose. The plan starts with two scans of the Comments table – one scan to find the average score, and another scan to find the rows we want to delete:

And the whole plan goes single-threaded despite costing 49,345 Query Bucks. Ouch. (Amusingly, it’s very easy to get parallelism in delete queries like this if you reference other tables, like if I deleted all Comments rows with a lower score than the average Posts score.)

In this case, it made sense to break the query up into two phases:

- Find the average Comments score (and that query plan goes parallel, plus probably makes sense to do separately ahead of time anyway)

- Delete all comments with a score lower than that (that query plan stays single-threaded, but it doesn’t really matter – it’s just a heck of a lot of work)

That change alone got us across the finish line in that case.

If Those Solutions Hadn’t Been Enough

For further tuning, we could think about solutions like:

- Partitioning by Score ranges

- Designing two separate tables: one where all new Comments go into, and another where Comments are moved into after they meet a certain Score threshold, and then truncating the low-score table to speed the delete process.

- Instead of deleting, building a CommentsToKeep table, migrating over the desired rows, dropping the old Comments table, and renaming CommentsToKeep as Comments

All of those solutions have a heck of a lot of drawbacks, though – and the main point of this post was just to point out that just because something is a lot of work doesn’t mean that it’s going to go parallel.

If You’re Interested In This Kinda Thing

You’ll probably also like Paul White’s series on the Halloween Problem and the new Using Batches to Do A Lot of Work Without Blocking module of my Mastering Query Tuning class. In addition, here’s a fun thought exercise: if you have to do a lot of these deletes on different tables, are you better off running them:

- Serially, one after another, in a long command/batch/proc, or

- In parallel, in different sessions, each using different CPU cores?

The answer would depend on things like:

- How fast your IO subsystem is – like whether one core can max it out

- What kind of HA/DR solution you’re using – as in, do you really want to flood your multi-subnet AG with 100GB of logged changes in a matter of minutes

- Whether blocking will rear its ugly head – like if you have foreign keys or triggers

12 Comments. Leave new

Thanks for pointing out the HA/DR aspect of this, it’s often neglected until it’s too late. Cue Jeff Goldblum’s “your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should”.

🙂

Not strictly parallelism related, but I would always do a DELETE of that size in a batch of 5,000 rows and loop. Why? Because a wise man once told me to do it that way to avoid locking the entire table.

A (hopefully) wiser-me now knows that this 5,000 figure relates to the number of locks that trigger escalation, rather than number of rows, but still, performing deletes from large tables in smaller batches, perhaps with a short WAIT in between each loop, gives me that warm fuzzy feeling that my massive DELETE isn’t causing anyone else too much pain.

erasing by chunks is also not useful because it allows you to have smaller transactions and therefore reduce the overall (when we do not have a very well performing I/O subsystem, that is, almost always? :-))

You did not mention partition switching (every table has at least one partition) as possible solution – just create a copy of the table (in the same file group) and the indexes.

After this run a ALTER TABLE SWITCH PARTITION 1 TO

Drawback is of course that you need again ALTER permissions for the tables (and if you have those you could do a simple truncate again).

PS: TRUNCATE supports since 2016 the WITH (PARTITIONS x) “Parameter” that you can use to truncate only the specified partition (if the table is partitioned of course)

Thomas – which problem are you suggesting that it solves?

In problem #1, the client didn’t have permissions to truncate a table. Even with partitioning, they still don’t, so the problem remains.

In problem #2, are you suggesting that they would somehow partition by a number that they can’t predict? How do you predict the average of something before the data goes in?

My reply was because of your suggestion “If you can’t truncate the table, drop it and create a new one in its place – which has all kinds of problems around security, foreign keys, objects that may have been added to the table (like indexes and triggers)”.

You don’t need to partition a table (with a fake partition function) to use partition switching. It works with every table (ok, I never tested it with at #temptable).

So if you want to use your idea with a second table but don’t want to bother with FKs, MS_Description, Permissions, Constraints etc. you could just create the table and the indexes (it is not very hard to script both) and use the partition switching to “delete” all the stuff.

Right, but you still have to do deletes, which raises all the problems with churning transaction log activity over to replicas/secondaries.

I might be nitpicking here but, if you are grabbing the average score across all comments, wouldn’t you be eventually backing yourself into a corner whereby the average score is going up and more comments are removed as a result?

I really appreciated this article and I just wanted to check if there was a bit of logic I’d missed or a safety catch I’d overlooked?

Michael – I just used that as an example of the kind of work the client was doing. The client isn’t Stack Overflow, and the client isn’t using this database. It’s just a demo query to help you understand the kind of work that’s being done. Hope that helps!

Thanks Brent!

Wouldn’t be a problem for LakeWobegon.stackexchange.com, where all posts are above average.

HAHAHA, I love that radio show.