Oh God, he’s talking about statistics again

Yeah, but this should be less annoying than the other times. And much shorter.

You see, I hear grousing.

Updating statistics was bringin’ us down, man. Harshing our mellow. The statistics would just update, man, and it would take like… Forever, man. Man.

But no one would actually be able to tell me how long it took. Though many stacks of Necronomicons were wheel-barrowed out and sworn upon for various incantations of “it was faster, we timed it” and “yes, performance improved” and “no, nothing else was different”.

What I would believe, because it’s totally believable, is that perhaps statistics updated, and some plans recompiled, and that recompiling the plans made things take longer.

Okay, fair enough. But no one ever says that. I wish someone would so I could take one look at an execution plan, verify that it looks like Nyarlathotep eating the NYC skyline, and say “yeah, that’d probably take a few to compile a plan for, let’s try to figure out how to break that into smaller pieces”.

Or, you know, something else reasonable.

Where am I going with this? Oh yeah. I measured. With Extended Events. So I’m extra angry about having to use those things again. XML is a hostile datatype. Don’t let the cute and cuddly creatures on those O’Reilly books fool you. Here’s the setup for the XE session.

|

1 2 3 4 5 6 7 |

CREATE EVENT SESSION [StatsGather] ON SERVER ADD EVENT sqlserver.auto_stats( ACTION(sqlos.task_time,sqlserver.database_id,sqlserver.database_name,sqlserver.plan_handle,sqlserver.session_id,sqlserver.tsql_stack) WHERE ([package0].[equal_uint64]([sqlserver].[session_id],(52)) AND [sqlserver].[is_system]=(0))) ADD TARGET package0.event_file(SET filename=N'C:\temp\StatsGather',max_rollover_files=(10)) WITH (MAX_MEMORY=4096 KB,EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,MAX_DISPATCH_LATENCY=30 SECONDS,MAX_EVENT_SIZE=0 KB,MEMORY_PARTITION_MODE=NONE,TRACK_CAUSALITY=OFF,STARTUP_STATE=OFF) GO |

Then, I ran the same type of workload that I ran to get my statistics thresholds for automatic updates. Except, of course, this time I’m only looking at how long each update took. Not when it happened. We already know that. If you want the query I used to parse the session data, it’ll be at the end of the post. I’d rather spend your ever-shortening attention spans getting to the point.

Here’s the point:

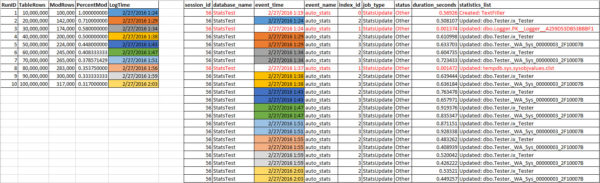

Updating statistics, even on some pretty good sized tables, didn’t really take that long. Everything is color-coded, so you can see the row count, how many rows were modified, etc. right next to the corresponding event time and timing. The statistics in red text have nothing to do with our tests, but I left them in there for completeness. They took absolutely minuscule amounts of time.

For the really big tables, which were all in the 10 million to 100 million row range, the statistics update itself never took more than 1 second. It stuck right around the half second mark aside from a few times, in the middle oddly, which I’m blaming on:

- AWS

- Clouds

- Disks

- Juggalos.

Now, how you proceed depends on a few things.

- Do you update statistics often enough to not need to have automatic updates?

- Are your update routines using FULLSCAN? (auto stats updates sample a percentage of the table)

- Do you not have data movement during the day (presumably when recompiling queries would be user-facing)?

- Can you not afford an occasional half second statistics update?

- Do your queries not benefit from updated statistics?

If you answer yes, yes, no, no, no, you’re not just singing about a dozen Amy Winehouse songs at once, you also might be in a situation crazy enough to warrant turning auto-update stats off.

Thanks for reading!

Begin code!

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 |

IF OBJECT_ID('tempdb..#StatsGather') IS NOT NULL DROP TABLE [#StatsGather]; CREATE TABLE [#StatsGather] ( [ID] INT IDENTITY(1, 1) NOT NULL , [WaitsXML] XML , CONSTRAINT [PK_StatsGather] PRIMARY KEY CLUSTERED ( [ID] ) ); INSERT [#StatsGather] ( [WaitsXML] ) SELECT CONVERT(XML, [event_data]) AS [WaitsXML] FROM [sys].[fn_xe_file_target_read_file]('C:\temp\StatsGather*.xel', NULL, NULL, NULL) CREATE PRIMARY XML INDEX [StatsGatherXML] ON [#StatsGather]([WaitsXML]); CREATE XML INDEX [StatsGatherXMLPath] ON [#StatsGather]([WaitsXML]) USING XML INDEX [StatsGatherXML] FOR VALUE; WITH x1 AS ( SELECT [sw].[WaitsXML].[value]('(event/action[@name="session_id"]/value)[1]', 'BIGINT') AS [session_id] , DB_NAME([sw].[WaitsXML].[value]('(event/action[@name="database_id"]/value)[1]', 'BIGINT')) AS [database_name] , [sw].[WaitsXML].[value]('(/event/@timestamp)[1]', 'DATETIME2(7)') AS [event_time], [sw].[WaitsXML].[value]('(/event/@name)[1]', 'VARCHAR(MAX)') AS [event_name], [sw].[WaitsXML].[value]('(/event/data[@name="index_id"]/value)[1]', 'BIGINT') AS [index_id], [sw].[WaitsXML].[value]('(/event/data[@name="job_type"]/text)[1]', 'VARCHAR(MAX)') AS [job_type], [sw].[WaitsXML].[value]('(/event/data[@name="status"]/text)[1]', 'VARCHAR(MAX)') AS [status], [sw].[WaitsXML].[value]('(/event/data[@name="duration"]/value)[1]', 'BIGINT') / 1000000. AS [duration], [sw].[WaitsXML].[value]('(/event/data[@name="statistics_list"]/value)[1]', 'VARCHAR(MAX)') AS [statistics_list] FROM [#StatsGather] AS [sw] ) SELECT [x1].[session_id] , [x1].[database_name] , [x1].[event_time] , [x1].[event_name] , [x1].[index_id] , [x1].[job_type] , [x1].[status] , [x1].[duration] , [x1].[statistics_list] FROM x1 WHERE [x1].[duration] > 0 ORDER BY [x1].[event_time] |

Brent says: if you’re thinking, “Oh, but my tables have more than a hundred million rows, so stats updates would take way longer,” then it’s time to think about regularly updating your stats during maintenance windows anyway. As long as you’re doing that say, weekly, then what are the odds that you’re going to trip the stats update threshold during the week on a billion row table? And if you do, it’s time to think about partitioned views.

15 Comments. Leave new

Great Post Erik. I work in a sharepoint environment would you recommend leaving

Auto Update Statics On? Any advice is appreciated. Thank you.

You should do exactly what the documentation for SharePoint tells you to do.

This post, tells it pretty good, James 😉

https://blogs.msdn.microsoft.com/chunliu/2011/11/17/auto-update-statistics-and-auto-create-statistics-on-or-off-for-sharepoint-2010-databases/

Ha! Juggalos probably use update stats async…..

Hi Erik,

I really good question is when server decides that plan is old and throw it to the trash bin?

Say, you have a 100 million rows in your table and have auto-update statistics on (big mistake in my opinion).

Say, you store user activities and during a day you change a million of rows.

When your auto-update stats run by default it will sample some range of data from your table.

The question is – what range? Today’s one where all data distribution was changed dramatically, or yesterday’s one that is static now and never be changed?

You see the point – even without considering the fact that you may have your key plans destroyed in the middle of the busy day, your default sampling could also be bad enough to not detecting changes that require recompiling.

That’s why I against auto-update stats in most of the cases and always prefer to do it manually with specific sampling.

Indeed! And if you read the stuff between the title and the comments, I point those things out as situations where turning the feature off is fine.

Yep 🙂 Sampling is a question that always makes me curious – what exactly is sampling?

BOL says that sampling based on data distribution – but how server knows data distribution if it was changed since last stats update? And if it wasn’t – what’s the point to resample it?

Ideally it should check what pages were changed or added since last stats update and use at least half of them for sampling but I didn’t find any evidence that it’s working that way.

What I found instead (at least for SQL2008R2) is that if you changing and working with a little portion of your data in a big table – there are good chances that default sampling never detects it.

Partitioning can help here – but it’s another big story 🙂

Bit too long for a comment, so I’ll work on a follow up post. Can’t promise an air date for it at the moment though.

We, will be waiting on that post, with big expectations, Erik 😉 heh

But if I’m not mistaken, I believe that an resample is based on the last sample size,

whereas an default sample size, is based on a fraction, of the size of an table,

which can be bit of a pain, if your table size is very large, lets say 200GB

Or am I totally off, here ???

Will be nice to read.

Few years ago I raised this question with MS support and after a few emails got a polite version of “don’t be a smart ass and use hints or partitioning if your sample is not sampling what you would like to sample” 🙂

As with TABLESAMPLE, samples are taken at the page level which is why you can get varying numbers of rows sampled in the sys.dm_db_stats_properties() DMF for tables of the same number of rows with different widths/fillfactors/etc.

Conor Cunningham has noted that this process “isn’t entirely random” (https://blogs.msdn.microsoft.com/conor_cunningham_msft/2008/07/24/statistics-sample-rates/) and that the pages that are used to sample data are the same for each run. When I read that, I tested with another XE (physical_page_read) and confirmed that the same pages were read (for the same configuration) over 100 iterations.

Yeah, I have spoken with Conor about it in 2014, he did a mistake saying that we can ask him anything about SQL Server 🙂 and this was my second question – “How to sample only that part of table that I want to be sampled”.

His advice was “You can’t, do either fullscan or use hints”.

Hints usually works but ideally I would like to have something like SAMPLE 10%…STARTING FROM….TO….

Very good post. Thanks!

Hey Erik,

I have a question about a situation that I believe is related… I recently moved a copy of a database from SQL 2016 Enterprise edition to Azure SQL DB, and as part of that, I didn’t copy over stats. I then ran a query which took a little over 10 minutes, then on a subsequent run took 16 seconds, then after copying over the stats from the original DB, went down to 2 seconds.

I had attributed the time taken on the original run to be down to updating stats, though I wasn’t measuring it, so can’t be sure. I did have stats io/time on though and almost all of the time taken was due to the time to compile the query, with only about 7 seconds of CPU time to actually execute it. Is there something else that would explain that, or do you think it would be related to updating stats? I would just copy over the DB again to get it to the same point I started at and try again, but it’s about 160GB, so it’s not trivial. The query also hit quite a few tables, so I’m not sure of all of the stats it would have updated.

Paul — for longer Q&A, you should post questions on http://dba.stackexchange.com

It’s really hard to help people with stuff like this in blog comments.

Thanks!