I Feel Sorry for Untrained Developers Using Entity Framework.

Most of the time, I love Entity Framework, and ORMs in general. These tools make it easier for companies to ship applications. Are the apps perfect? Of course not – but they’re good enough to get to market, bring in revenue to pay salaries, and move a company forwards.

However, just like any tool, if you don’t know how to use it, you’re gonna get hurt.

One classic example popped up again last month with a client who’d used EF Core to design their database for them. The developers just had to say which columns were numbers, dates, or strings, and EF Core handled the rest.

But if you create a string without specifying its length, EF defaults to using NVARCHAR(MAX). That is not a bug. That is by design, and it’s explained in the documentation:

The wording on that really pisses me off because NO, IT IS NOT SQL SERVER DOING THIS MAPPING. There is absolutely nothing in the database engine that’s saying strings are nvarchar(max). This is an Entity Framework problem, and stop pointing the blame at the innocent database engine. (Update 2025/01/10 – the documentation has been updated by Erik Ejlskov Jensen to point out that no, this isn’t a SQL Server problem.)

The documentation goes on to explain how you can manually set the right column lengths, and I’ve sat through several development conference sessions that emphasize how important it is for developers to do that. The problem here is that most folks don’t read the documentation, let alone attend conferences to learn how to use their tools. (I don’t blame the folks – I blame the companies who are pressuring developers to ship quickly without training.)

Demoing One of the Problems It Causes

Let’s create a table with two string columns – one NVARCHAR(100) and one NVARCHAR(MAX). Then, let’s load a million rows into it, putting the same contents in both the short and long columns.

Transact-SQL

|

1 2 3 4 5 6 7 8 9 10 |

DROP TABLE IF EXISTS dbo.Test; CREATE TABLE dbo.Test (Id INT PRIMARY KEY CLUSTERED, ShortString NVARCHAR(100), LongString NVARCHAR(MAX)); GO INSERT INTO dbo.Test(Id, ShortString, LongString) SELECT value, N'Brent Ozar', N'Brent Ozar' FROM generate_series(1,1000000); |

Then, we’ll run identical queries against the short & long string version:

Transact-SQL

|

1 2 3 4 5 6 7 |

SELECT TOP 250 ShortString, Id FROM dbo.Test ORDER BY ShortString; SELECT TOP 250 LongString, Id FROM dbo.Test ORDER BY LongString; |

And review their actual execution plans:

The bottom query is the one that hits the NVARCHAR(MAX) column. Your first signs of danger are the yellow bang on the SELECT, and the 99% query cost estimate on the second query, indicating that SQL Server thinks the NVARCHAR(MAX) one is going to be quite a bit more expensive. However, as is often the case with SQL Server, the really big danger isn’t even shown visually.

Hover your mouse over each SELECT operator, and you’ll get a popup tooltip. One of the lines on that tooltip will say Memory Grant. Here’s the one for the NVARCHAR(100) query:

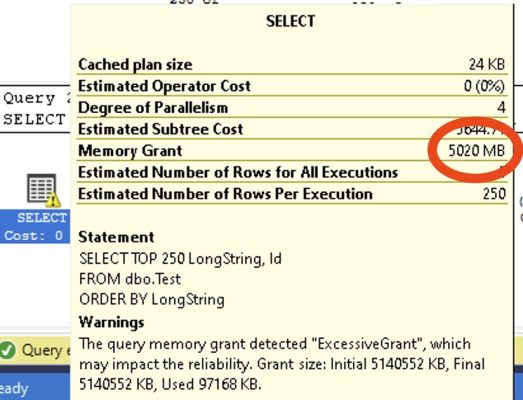

When the datatype is NVARCHAR(100), SQL Server allocates 210MB of memory to run the query because it believes it won’t need too much memory to sort that small of a column. However, check the same metric on the NVARCHAR(MAX) query:

Because SQL Server thinks that the contents of NVARCHAR(MAX) columns are larger, this query gets a 5GB memory grant, 24x larger. Depending on your server size and workloads, this can be a real problem because the more queries you have running simultaneously, the quicker your database server will run out of memory.

There’s a Fast Fix. It Doesn’t Work Here.

If you frequently search or order by a column, all you have to do is index it, right? Let’s try it:

The ShortString index gets created – but SQL Server can’t create an index on LongString because we can’t use NVARCHAR(MAX) as a key column in an index.

That’s a bummer, and you could argue that it’s a SQL Server limitation that could be fixed. For example, you can create a computed column on a shorter version of the column, and index that:

Transact-SQL

|

1 2 3 4 5 6 7 8 |

CREATE OR ALTER VIEW dbo.Test_View WITH SCHEMABINDING AS SELECT Id, CAST(LongString AS NVARCHAR(100)) AS LongString_Truncated FROM dbo.Test; GO CREATE UNIQUE CLUSTERED INDEX CLIX ON dbo.Test_View (LongString_Truncated, Id); |

There’s nothing to say that SQL Server couldn’t do similar work in order to index abridged versions of NVARCHAR(MAX) columns when it could check to see if there any truly long values in that column. It just doesn’t, though, and I understand that it would require Microsoft to do some work.

It’s much easier for Microsoft to say, “Yo, EF developers, read the docs and you’ll never have problems like this.” To some extent, that’s fair, because I can see how someone would expect people to be well-trained on the tools they use every day to do their jobs. This is only one tiny example of the many, many problems you can hit with Entity Framework if you don’t get trained on how to use it first.

If you’re looking for training resources, start with anything by Julie Lerman in whatever way you enjoy consuming training material – videos, books, etc.

Related

Hi! I’m Brent Ozar.

I make Microsoft SQL Server go faster. I love teaching, travel, cars, and laughing. I’m based out of Las Vegas. He/him. I teach SQL Server training classes, or if you haven’t got time for the pain, I’m available for consulting too.

Get Free SQL Stuff

"*" indicates required fields

23 Comments. Leave new

Love it! Nice simple test that leaves no doubt. Thanks, Brent!

Glad you liked it, sir!

what’s even more funny is some people expect EF will give system better performance.

EF and performance hardly go hand in hand.

I have seen some project where they use GUID as primary key, nvarchar to store even integer value in DB. Making the column very unintuitive.

Scaling up by hyperscaling the DB in Azure in hope for better performance.

“Your scientists were so preoccupied with whether or not they could, they didn’t stop to think if they should.”

Just because EF can create a database doesn’t mean you should let it.

EF creating the database schema is not a problem of you’re very explicit in telling it what you want.

“The wording on that really pisses me off because NO, IT IS NOT SQL SERVER DOING THIS MAPPING. There is absolutely nothing in the database engine that’s saying strings are nvarchar(max). This is an Entity Framework problem, and stop pointing the blame at the innocent database engine.”

Channel that anger and create a PR against the docs. There’s a little pencil at the top of the page that lets you do that. Might they reject that for any of myriad reasons? Sure. But might they not? One way to find out.

Or – or, hear me out – or – I could go on with my life. 😉

This was an article long overdue for writing. Great that, in true Brent Ozar fashion, you created a beautiful example that demonstrates the problem, and provide a great resource on how we can better ourselves as developers.

You know what I always say – “When in doubt, engineers will max it out”

Also part of the problem is that database developers are overlooked in favor of “full-stack” developers, who are supposed to know everything about everything 🙂

This is just the tip of the EF/ORM iceberg, my brother (a software developer (C# mostly)) and myself have seen some atrocious queries coming out of EF (and other ORMs) retrieving thousands or even millions of rows to check the existence of a single row.

EF/ORMs are great when used properly and yes reading documentation is 100% part of that process

I have a love/hate relationship with EF.

On one hand, it writes better SQL than most web or fullstack developers and will generally not face absolute atrocities in TSQL with EF and helps devs avoid logical bugs. It is also AMAZING for database schema source control.

On the other hand, it doesn’t write particularly efficient SQL, it can be a little difficult to read in complex queries and when a performance issue is found to be from a query, there is nothing I can do about it and usually nothing I can express to the developer to help make it more efficient if they don’t already know how to tune it.

My willingness to read documentation, buy books, attend conferences, etc is a considerable part of my success as a consultant.

So, I’m grateful that most people can’t be bothered. (Although I do try to get customers to go to do all of the above. It almost never works).

Why not replacing EF by AI? Dictate AI what you want, paste stuff to get code generated, etc. The do the code review and go through a second pass to refine the stuff. Of course you need to know what you are doing but the result is controlled and readable, you can “teach” AI the naming conventions and the coding style so you do not end with totally unreadable names and code.

AI is like an advanced secretary, typing lot of codes faster than you can do. EF is a black box.

lemme just stop you right there

🙂

The critical design flaw here is in EF, and it was in allowing a default mapping of string to a sql server data type. When a developer tries to generate a schema from that, they should get an error, and the tool should tell them they HAVE to decorate the string property with an attribute before they can proceed.

To be fair, nvarchar(max) IS the logical mapping of .net string onto sql server data types, but the entirely predictable human behavior is that people will follow the path of least resistance. So when that path leads to somewhere bad, it needs to be blocked.

My guess is that this was internally debated years ago on the EF Core team, and the overriding goal was to maximize adoption. So they didn’t want to make all their demos and examples cluttered with more attributes. In the beginning, it’s worse to have a reputation for having a steep learning curve. But in the long run, it’s worse to have a reputation for being full of foot guns.

[…] Brent Ozar points out one issue you might run into when using Entity Framework: […]

Just FYI, the docs have been updated (I created a PR to calm you down)

I’ll offer a hilarious example of misusing EF from Microsoft’s Identity codebase ( https://github.com/aspnet/AspNetIdentity/blob/main/src/Microsoft.AspNet.Identity.EntityFramework/UserStore.cs ):

public virtual Task FindByNameAsync(string userName)

{

ThrowIfDisposed();

return GetUserAggregateAsync(u => u.UserName.ToUpper() == userName.ToUpper());

}

To a non-DBA, it’s reasonable to assume that ToUpper() does not impact the algorithmic complexity of the search. However, what actually happens is that EF generates a WHERE clause that looks something like this:

WHERE UPPER(username) = UPPER(@username) .

And now SQL cannot use the index on the username column. This can easily turn a 10ms query into a 10s query.

Dapper Micro ORM is similarly dangerous. In client c# code, you can lazily chose not to specify column type for passed params and Dapper will happily declare those as varchar(max) in the generated TSQL. Wish this would flag a compiler warning.

The DBA can’t really fix these but he can at least educate the developers.

Great blog post.

I had this kind of problem, and I showed the max length of the column and I purpose developers to use it as reference.