Troubleshooting Mysterious Blocking Caused By sp_getapplock

I’m kinda weird. I get excited when I’m troubleshooting a SQL Server problem, and I keep hitting walls.

I’ll give you an example. A client came to me because they were struggling with sporadic performance problems in Azure SQL DB, and nothing seemed to make sense:

- sp_BlitzFirst @SinceStartup = 1 showed very clearly that their top wait, by a long shot, was blocking. Hundreds of hours of it in a week.

- sp_BlitzIndex showed the “Aggressive Indexes” warning on a single table, but… only tens of minutes of locking, nowhere near the level the database was seeing overall.

- sp_BlitzCache @SortOrder = ‘duration’ showed a couple queries with the “Long Running, Low CPU” warning, and they did indeed have blocking involved, but … pretty minor stuff. Plus, their plan cache was nearly useless due to a ton of unparameterized queries pouring through constantly, overwhelming Azure SQL DB’s limited plan cache.

- sp_Blitz wasn’t reporting any deadlocks, either. (sp_BlitzLock doesn’t work in Azure SQL DB at the moment because Microsoft’s no longer running the default system health XE session up there. They turned that off in order to save money on hosting costs, and passed the savings on to… wait… hmm)

- As a last-ditch hail-Mary, I ran sp_BlitzWho repeatedly, trying to catch the blocking happening in action. No dice – the odds that I’d catch it live weren’t great anyway.

During a bio break, I unloaded the dishwasher (it’s what I do) and made a mental list of things that would cause blocking, but not on indexes, and not show up in the plan cache. And that’s when it hit me, just as I was unloading the wine glasses. (Don’t judge me: I drink a lot, and I don’t have time for hand-washing.)

sp_getapplock lets developers use SQL Server’s locking mechanisms for their own purposes, unrelated to tables. Let’s say you have an ETL process, and you want to make sure that it can only be run from one session at a time. You’d start the process by running:

Transact-SQL

|

1 2 3 4 |

EXEC sp_getapplock @Resource = 'ETL Process', @LockMode = 'exclusive', @LockOwner = 'session' |

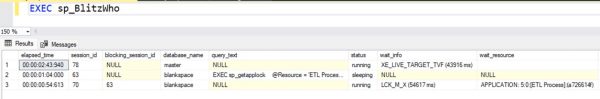

That’s kinda like saying BEGIN TRAN, but for entire processes. If someone else tries to run the same query and grab a lock from their session, they’ll get blocked. Here’s what it looks like from sp_BlitzWho:

Session 63 grabbed the lock first, and session 70 is trying to get it, but they’re waiting on LCK_M_X because 63 has an eXclusive lock on it. Note that 63’s query_text shows sp_getapplock – that’s where the troubleshooting Eureka moment hits.

When session 63 is done, it can release the lock like this:

Transact-SQL

|

1 2 3 |

EXEC sp_releaseapplock @Resource = 'ETL Process', @LockOwner = 'session' |

If you work like I do, troubleshooting things after they’ve happened, without the ability to set things up in advance of the problem, this is extraordinarily hard to track down.

Once you know about the problem, you can monitor blocked processes in Azure SQL DB (shout out to Etienne Lopes for a well-written, start-to-finish tutorial, and you should subscribe to his blog.) Etienne uses a file target there, but I used a ring buffer target because I didn’t have access to the client’s Azure file systems. The ring buffer target is way easier to set up:

Transact-SQL

|

1 2 3 4 5 6 7 8 |

CREATE EVENT SESSION [BlockedProcesses] ON DATABASE ADD EVENT sqlserver.blocked_process_report() ADD TARGET package0.ring_buffer(SET max_memory=(10240)) WITH (STARTUP_STATE=ON) GO ALTER EVENT SESSION [BlockedProcesses] ON DATABASE STATE = START; GO |

The problem is that Azure SQL DB’s blocked process threshold is 20 seconds, and can’t be changed. <sigh> For high-volume, short-duration blocking, you’ll be better off rapidly running sp_BlitzWho and logging it to table:

Transact-SQL

|

1 2 3 4 |

EXEC sp_BlitzWho @OutputDatabaseName = 'mydbname', @OutputSchemaName = 'dbo', @OutputTableName = 'BlitzWho' |

Once you’ve identified that there’s a blocking chain led by sp_getapplock, the database side of the tuning work is done. There’s no database-side “tuning” on blocking like this: it’s up to the developers to use conventional transaction-based tuning processes, but in whatever app code is calling sp_getapplock. The app is essentially running its own transaction, so the developers need to:

- Minimize the setup work done inside the transaction: do all set-up work like fetching config data ahead of time, before the transaction starts

- Minimize post-processing work inside the transaction: do logging later, after releasing the lock

- Minimize row-by-row processing: do work in sets, touching each table as few times as possible, not row-by-row inserts/updates/deletes

- Minimize round trips between the app server and database server: ideally, prepare everything as a package and send it off to a stored procedure to do all the committing quickly, eliminating all round trips (and then rip out sp_getapplock while you’re at it)

With those tips, sp_getapplock can be completely fine. It’s not like sp_getapplock is inherently bad, any more than BEGIN TRAN is bad. It’s just that detecting its long transactions is way harder.

Related

Hi! I’m Brent Ozar.

I make Microsoft SQL Server go faster. I love teaching, travel, cars, and laughing. I’m based out of Las Vegas. He/him. I teach SQL Server training classes, or if you haven’t got time for the pain, I’m available for consulting too.

Get Free SQL Stuff

"*" indicates required fields

9 Comments. Leave new

I think that at some point in the future a blery eyed DBA will read this in the small hours of the night on day four of a near down critical system and thank you deeply for this post . . . and then go on to name their first child after you . . . or maybe just their next dog.

Hahaha, I hope so!

Wow, like a diamond in a lock, no I mean, rock! This is a difficult thing to hunt around on managed servers. These are fantastic tips, thank you for sharing this. After this post, you should get another bottle if it’s the right time.

You’re welcome, my pleasure!

I something like this situation a few years back, where an application was using several threads in an application pool to pool a Jobs table. All but one thread was blocked. The lead developer changed the application and the blocking disappeared. Oddly enough, no one but me was affected since database monitoring was showing the situation as idle blocking. Ironically, this idle blocking kept me far from idle when I saw it.

Thanks Brent!

My pleasure!

[…] Brent Ozar has an epiphany: […]

I’ve faced this because a monitoring tool was complaining more than often about a “blocking issue” with the sp…what finally was pointed to the excesive use of a .Net Task Scheduler named Hangfire… the difficult part was that no one was complaining of the blocking except just me, the lonely DBA.

.. if only I’ve had this article a few years back….

Me too, Andrês. It was a Hangfire app and I was the only one noticing it. My monitoring tool Solar Winds DPA couldn’t distinguish between active and idle blocking to I got alert noise from it.