Pinal Dave recently ignited a storm of controversy when he quizzed readers about which one of these would be faster on AdventureWorks2019:

|

1 2 3 4 5 6 7 8 9 |

SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID IN (1, 2, 3); SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 1 OR CustomerID = 2 OR CustomerID = 3; |

I laughed so hard when I saw the storm of responses on Twitter. People sure do get passionate about this kind of thing. If you ever wanna witness patience and generosity in action, look at Pinal’s responses to this tweet.

While the answer for the default AdventureWorks2019 database is that they perform identically, I wanted to extend the quiz a little.

Does columnstore change the answer?

Folks who’ve been through my Fundamentals of Columnstore class will remember that queries are performed differently when a columnstore index is involved – sometimes better, sometimes worse. (Usually better, though.)

We’ll start by dropping the existing indexes FKs, and triggers, then creating a clustered columnstore index on Sales.SalesOrderHeader, then running the two queries:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 |

USE [AdventureWorks2019] GO DROP INDEX [IX_SalesOrderHeader_SalesPersonID] ON [Sales].[SalesOrderHeader] DROP INDEX [IX_SalesOrderHeader_CustomerID] ON [Sales].[SalesOrderHeader] DROP INDEX [AK_SalesOrderHeader_SalesOrderNumber] ON [Sales].[SalesOrderHeader] DROP INDEX [AK_SalesOrderHeader_rowguid] ON [Sales].[SalesOrderHeader] GO ALTER TABLE [Sales].[SalesOrderHeaderSalesReason] DROP CONSTRAINT [FK_SalesOrderHeaderSalesReason_SalesOrderHeader_SalesOrderID] ALTER TABLE [Sales].[SalesOrderDetail] DROP CONSTRAINT [FK_SalesOrderDetail_SalesOrderHeader_SalesOrderID] GO ALTER TABLE [Sales].[SalesOrderHeader] DROP CONSTRAINT [PK_SalesOrderHeader_SalesOrderID] WITH ( ONLINE = OFF ) DROP TRIGGER [Sales].[uSalesOrderHeader] GO CREATE CLUSTERED COLUMNSTORE INDEX CCI ON Sales.SalesOrderHeader; GO SET STATISTICS IO ON; GO SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID IN (1, 2, 3); SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 1 OR CustomerID = 2 OR CustomerID = 3; |

And then compare the logical reads done by both queries:

|

1 2 3 4 5 6 7 8 9 10 11 |

(0 rows affected) Table 'SalesOrderHeader'. Scan count 1, logical reads 0, lob logical reads 731 Table 'SalesOrderHeader'. Segment reads 1, segment skipped 0. (1 row affected) (0 rows affected) Table 'SalesOrderHeader'. Scan count 1, logical reads 0, lob logical reads 731 Table 'SalesOrderHeader'. Segment reads 1, segment skipped 0. (1 row affected) |

Both queries do 731 logical reads. (For clarity, I’ve removed a lot of the irrelevant IO stats.)

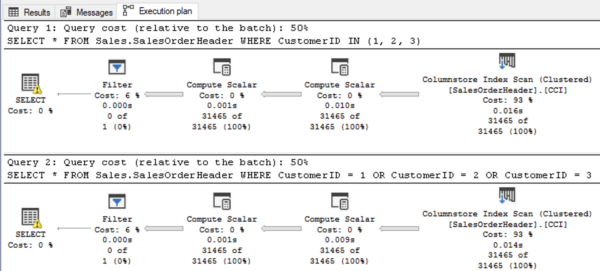

That means the queries are performing identically in terms of reads, and their execution plans both look the same too:

Both plans perform equally.

They both suck.

They’re both retrieving all of the rows in SalesOrderHeader – even though no rows actually match our query! If you test this against the default rowstore indexes in AdventureWorks2019, you’re going to come away with the conclusion that columnstore is worse than rowstore – and frankly, for many kinds of queries, that’s true.

Does SQL Server 2022 compat level fix that?

AdventureWorks2019 ships in compat level 150 (2019). Let’s switch over to 160 (2022), and try that again. Here are the new logical reads:

|

1 2 3 4 5 6 7 8 9 10 11 |

(0 rows affected) Table 'SalesOrderHeader'. Scan count 1, logical reads 0, lob logical reads 0 Table 'SalesOrderHeader'. Segment reads 0, segment skipped 1. (1 row affected) (0 rows affected) Table 'SalesOrderHeader'. Scan count 1, logical reads 0, lob logical reads 0 Table 'SalesOrderHeader'. Segment reads 0, segment skipped 1. (1 row affected) |

BOTH QUERIES DO ZERO READS. ZERO.

That’s because SQL Server 2022 (in 2022 compat) is able to skip the segments altogether. Note the line “segment skipped 1”, as opposed to the 2019 version which actually read the data.

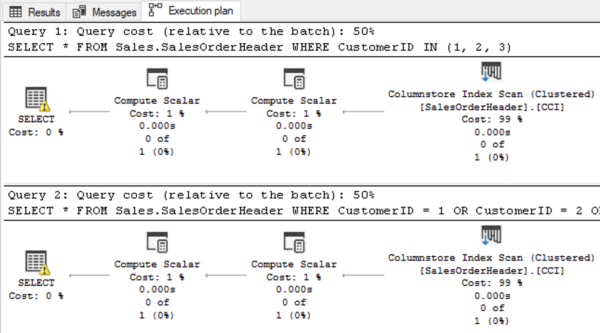

How? Well, the 2022 execution plans look different than the 2019 versions:

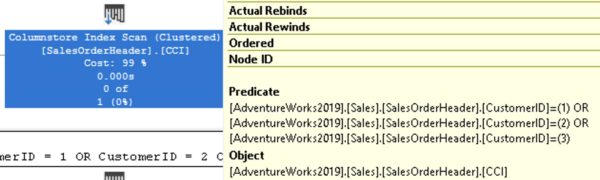

These new simpler plans don’t have a filter because the data’s already being filtered earlier in the plan, at the index scan level. If you hover your mouse over the Columnstore Index Scan operators, you’ll see a new Predicate option that we didn’t have before:

This is called “predicate pushdown”, and it’s something that Microsoft has been gradually adding in to more and more columnstore plans over the years. They’ve been pretty good about tracking what’s new in columnstore with each version, but I gotta confess that I actually thought integer predicate pushdowns were already happening, hahaha. It might be a regression in 2022, but frankly, I don’t have multiple machines handy with different versions to test it. (I’m finishing up my Norway vacation, getting ready to fly back to the US, but Pinal’s blog post tickled me so much that I had to bang this post out quickly.)

So in summary, when you’re using columnstore indexes, what’s faster, IN or OR? The answer: 160 compatibility level.

19 Comments. Leave new

No worries, integer pushdown into columnstore index scan has indeed been available for a long time already.

My guess is that it’s the OR predicate (either explicit in the query or implied by IN) that threw the optimizer off in older versions. Queries with OR are always a lot harder to optimize than when AND is used.

Hugo – hmm, no, as I demonstrate in the post, we are NOT getting integer pushdown on SQL Server 2022 with AdventureWorks2019 running in 2019 compat level.

If I understand predicate pushdown correctly, I can induce it on SQL 2019 after running your setup script to create the columnstore index with the ff query: SELECT * FROM Sales.SalesOrderHeader WHERE CustomerID = 1 and [Status] = 1. Which I think is what Hugo was getting at; the presence of a disjunctive predicate (either explicitly with OR or implicitly with IN) inhibited the pushdown.

That said, it’s great that the behavior in SQL 2022 is better in that respect.

Ah! There we go, that makes sense.

I’m quite sure that the second query in the demo script is performing better.

But just because it’s pulling data from cache thanks to the dirty work of pushing it by the previous query.

Ahah… Just kidding 🙂

It worth waiting the release of the final chapter of this trilogy on : “Exists vs IN, the ultimate challenge”.

(I know the killer is the butler).

Federico – well, Pinal wrote chapter 1, and because I wanted to say more, I wrote chapter 2. Sounds like there’s something you want to say about the topic on EXISTS, so tag, you’re it! Let’s see what you do for chapter 3.

There’s a 3rd option worth considering….dare I say it? Yes a table function.

Since this type of query in my shop would go against dimension tables where I don’t use columnstore indexes I’m interested in seeing performance of the existing indexes on the tables using different options.

I changed the ids to ones that exist.

Total logical reads is now 96 + 3 for the 3 inserts. But cost estimate goes from .0592677 to .0108805 and the cost distribution across the 2 indexes changes significantly.

Might this be a more scalable option if an application needs to query a lot more than just 3 customers – even if logical IOs don’t change?

SELECT *

FROM Sales.SalesOrderHeader

WHERE CustomerID = 29825

OR CustomerID = 29672

OR CustomerID = 29734;

DECLARE @CustIds TABLE (

CustomerID INT)

INSERT INTO @CustIds VALUES(29825)

INSERT INTO @CustIds VALUES(29672)

INSERT INTO @CustIds VALUES(29734)

SELECT *

FROM Sales.SalesOrderHeader soh

INNER JOIN @CustIds AS CID ON CID.CustomerID = soh.CustomerID;

Hmm, can we stick to the IDs used for the other queries to compare? Otherwise I’m not sure how this is relevant. Thanks!

Sorry I meant to say table valued parameter.

Sticking to the non-existent ids as requested….

DECLARE @CustIds TABLE (

CustomerID INT)

INSERT INTO @CustIds VALUES(1)

INSERT INTO @CustIds VALUES(2)

INSERT INTO @CustIds VALUES(3)

SELECT *

FROM Sales.SalesOrderHeader soh

INNER JOIN @CustIds AS CID ON CID.CustomerID = soh.CustomerID;

Table ‘#A0CD519F’. Scan count 1, logical reads 1

It adds 1 read for every insert.

And the cost is also actually higher with this method when the ids are not found. The cost went from .0065707 to > .01. However when customer ids are found it went down from .05 to .01. I would argue the test for non-existent customers may be misleading since looking for existing customer ids seems an edge case need and it appears to have a different result when comparing options.

There is of course another option as well – with large volume of ids you would usually put in a temp table. However a test with 100 ids resulted in significantly more logical reads even after indexing the temp table.

My conclusion is IN list and OR are best options for performance compared to either table valued parameter or temp table.

The limiting factor for scaling this to a large list then seems to be sheer volume of sql you’re submitting. For our use case we need to get a list of ids within an Azure LogicApp from a flat file, then use the list to query our database. If it’s possible with LogicApp, loading it into temp table with persistent session for the eventual query would seem the most reasonable solution.

Joining to a real or a temporary table (regardless if it is a #temp-Table or a @table_variable) is usually not a good idea, because the SQL server has absolut no idea, what really is in it (even with up to date statistics) and can’t do partition / segment eliminations

So make it permanent if that’s a problem. I’ve created a permanent table for this purpose before – if you don’t commit the rows it’s surprisingly fast and scales very well.

But this whole discussion of course was really just academic because #1) who queries for non-existent ids in a list? Anything’s possible but pretty unlikely scenario. And then there is #2) it doesn’t scale and can fail. If you have a list I’m guessing it’s dynamic and maybe could grow large? I know ours can. Check out Microsoft documentation and possible errors with long IN lists and its recommendation to use a table to store the ids…hmm what a concept…

https://learn.microsoft.com/en-us/sql/t-sql/language-elements/in-transact-sql?view=sql-server-ver16

This says….

Explicitly including an extremely large number of values (many thousands of values separated by commas) within the parentheses, in an IN clause can consume resources and return errors 8623 or 8632. To work around this problem, store the items in the IN list in a table, and use a SELECT subquery within an IN clause.

Error 8623:

The query processor ran out of internal resources and could not produce a query plan. This is a rare event and only expected for extremely complex queries or queries that reference a very large number of tables or partitions. Please simplify the query. If you believe you have received this message in error, contact Customer Support Services for more information.

Error 8632:

Internal error: An expression services limit has been reached. Please look for potentially complex expressions in your query, and try to simplify them.

When i create a non-clustered columnstore index on all columns and deactivate other secondary indexes, even on compat 140, the pushdown predicate works (on the non-clustered columnstore index).

IN and OR queries return the same plan :

|–Compute Scalar(DEFINE:([AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderNumber]=[AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderNumber], [AdventureWorks2019].[Sales].[SalesOrderHeader].[TotalDue]=[AdventureWorks2019].[Sales].[SalesOrderHeader].[TotalDue]))

|–Nested Loops(Inner Join, OUTER REFERENCES:([AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderID]))

|–Compute Scalar(DEFINE:([AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderNumber]=isnull(N’SO’+CONVERT(nvarchar(23),[AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderID],0),N’*** ERROR ***’), [AdventureWorks2019].[Sales].[SalesOrderHeader].[TotalDue]=isnull([AdventureWorks2019].[Sales].[SalesOrderHeader].[SubTotal]+[AdventureWorks2019].[Sales].[SalesOrderHeader].[TaxAmt]+[AdventureWorks2019].[Sales].[SalesOrderHeader].[Freight],($0.0000))))

| |–Index Scan(OBJECT:([AdventureWorks2019].[Sales].[SalesOrderHeader].[NonClusteredColumnStoreIndex-20230912-004643]), WHERE:([AdventureWorks2019].[Sales].[SalesOrderHeader].[CustomerID]=(1) OR [AdventureWorks2019].[Sales].[SalesOrderHeader].[CustomerID]=(2) OR [AdventureWorks2019].[Sales].[SalesOrderHeader].[CustomerID]=(3)))

|–Clustered Index Seek(OBJECT:([AdventureWorks2019].[Sales].[SalesOrderHeader].[PK_SalesOrderHeader_SalesOrderID]), SEEK:([AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderID]=[AdventureWorks2019].[Sales].[SalesOrderHeader].[SalesOrderID]) LOOKUP ORDERED FORWARD)

You also might want to try putting a NULL in the list. There are other products in which the IN() . It’s very nicely optimized. First we start the list if it’s long enough to justify this, then we remove any duplicates and finally the predicate does a binary search on the remaining values. That’s bit fancy, but it leads to some pretty weird behavior in the larger SQLs. There’s usually a critical link from the list triggers this optimization, so you had some absurd dummy values into the mix to force the length and thus the optimization.

Howdy sir, good to see you!

Maybe a bit off topic (just comes to my mind) – the whole predicate pushdown / elimination stuff in SQL 2022 (and earlier) is still not perfect.

Easiest example:

have a large data warehouse table which is *partitioned* by an DATETIME2(0) column (e.g. the order_date).

When you now do a

SELECT COUNT(*)

FROM dbo.orders AS o

WHERE o.order_date BETWEEN ‘20230101’ AND ‘20230131 23:59:59’

It does use the filter as predicate (this part works) and does segment elimination on the ColumnStore Index, but it does no partition elimination.

SELECT COUNT(*)

FROM dbo.orders AS o

WHERE o.order_date BETWEEN CAST(‘20230101’ AS DATETIME2(0)) AND CAST(‘20230131 23:59:59’ AS DATETIME2(0))

on the other hand works perfect with partition elimination and is MUCH faster (500 ms instead of 3000 ms; 32 scans vs. 569 scans, 76k vs. 1.3 mio reads, no WORKTABLE involved etc.)

So – particularly when working with non-integer columns – ensure, that you ALWAYS use the exact data type for filtering

Ooowee! That’s terrible. Sorry to hear that!

I remember a particular case where a dynamic SQL script had thousands of comma separated values in the “IN” list.

I wanted to replace the IN clause with a derived table, similar to a table variable. Eg.

Instead of “WHERE CustomerID IN (1, 2, 3)”

use “WHERE CustomerID IN (select CustomerID from (values (1),(2),(3)) as value(CustomerID)

I would think this would create a better query plan.

Regards, Ignacio

Then by all means, go for it! Experiment and prove out your hypothesis. That’s how you learn!

Oops, there is a missing “)” at the end of the script…