When I’m doing a SQL Server health check, one of the things I like showing clients is a list of their top tables by size.

When I’m doing a SQL Server health check, one of the things I like showing clients is a list of their top tables by size.

Inevitably, there are a bunch of tables that:

- Tables whose names start with “temp” or “backup” or “just_in_case”

- Tables with no clustered index that were clearly created with a select-into, and then forgotten about

- Tables that haven’t been accessed in months or years

We have some chuckles, make a quick calculation, and realize that our database is 10%-20% larger than it needs to be. That means our backups are taking 10-20% longer, corruption checking, restore testing, backup space, disaster recovery test time, you name it – it’s all 10-20% higher.

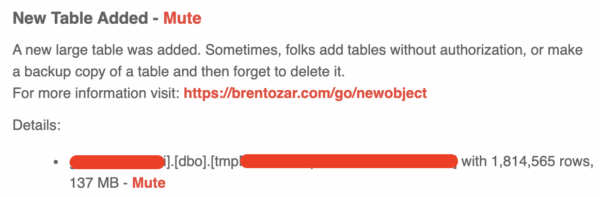

So now if SQL ConstantCare® sees a new table with at least a million rows, we give you a heads-up in your daily email:

This is such a good example of what SQL ConstantCare® is all about: giving you practical insight, not just raw metrics.

4 Comments. Leave new

I would like to be able to use ConstantCare but our servers live in a secure environment.

Is supporting that market segment on your radar?

No, not at all – your best bet there would be a more expensive traditional monitoring app.

I am using a similar approach for a number of years already and based on what I experienced I also include in my checks schema names like tmp,temp, delete, trash, etc. I also include table names that include dates, like dbo.customers_20100101.

I have a database on my Production Server called “Archived Data”. Every few months, I run a script manually (so I can verify the tables affected), which copies all of the tables that have names starting with an underscore, or have _bak, _temp, _bk, _backup, or an 8 digit date as a suffix to the Archived Data database and then, after confirming that the table was copied successfully, it Truncates and then Drops the original Production database table.

This allows me to recover the tables, if they are actually needed (never happened yet, but it is possible that something gets missed), and it minimizes the time that Production backups take.

A side benefit is that, because I really will be clearing them out of Production, it allows me to make backup tables of the whole table, rather than a subset of the data. This often allows me to check exactly when a specific field update was made even if the record only has a basic Last_Modified_Date field.

I do the Truncate/Drop because these tables are so large, a straight Drop will generate huge transaction logs that are completely pointless in this situation (that is why I check that the table WAS copied successfully first)… but your mileage may vary on that decision.