Get Yourself Together

This is one of the most common patterns that I see in stored procedures. I’m going to simplify things a bit, but hopefully you’ll get enough to identify it when you’re looking at your own code.

Here’s the stored procedure:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

CREATE OR ALTER PROCEDURE dbo.you_are_not_clever ( @cd DATETIME NULL) AS BEGIN DECLARE @id INT; SELECT TOP 1 @id = u.Id FROM dbo.Users AS u WHERE ( u.CreationDate >= @cd OR @cd IS NULL ) ORDER BY u.Reputation DESC; SELECT p.OwnerUserId, pt.Type, SUM(p.Score) AS ScoreSum FROM dbo.Posts AS p JOIN dbo.PostTypes AS pt ON p.PostTypeId = pt.Id WHERE p.OwnerUserId = @id GROUP BY p.OwnerUserId, pt.Type; END; |

There’s a lot of perceived cleverness in here.

The problem is that SQL Server doesn’t think you’re very clever.

Pattern 1: Assigning The Variable

Leaving aside that optional parameters aren’t SARGable, something even stranger happens here.

And I know what you’re thinking (because you didn’t read the post I just linked to), that you can get the “right” plan by adding a recompile hint.

Let’s test that out.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 |

DECLARE @id INT; DECLARE @cd DATETIME = '2017-01-01'; SELECT TOP 1 @id = u.Id --Assigning the id to a variable FROM dbo.Users AS u WHERE ( u.CreationDate >= @cd OR @cd IS NULL ) ORDER BY u.Reputation DESC; SELECT TOP 1 u.Id -- Not sssigning the id to a variable FROM dbo.Users AS u WHERE ( u.CreationDate >= @cd OR @cd IS NULL ) ORDER BY u.Reputation DESC OPTION ( RECOMPILE ); |

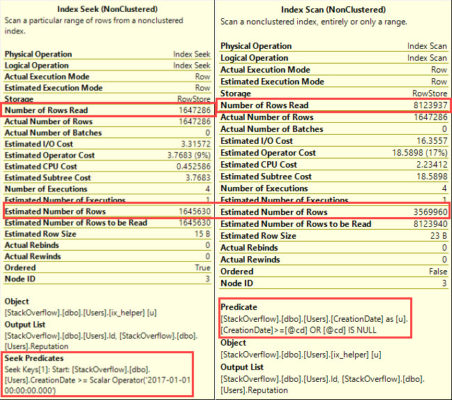

Here are the plans, for all you nice people NOT WORKING AT WORK.

Just like in the post I linked up there (that you still haven’t read yet — for SHAME), the first plan refuses to recognize that an index might make things better.

The second plan does, but as usual, the missing index request is rather underwhelming.

But what’s more interesting, is that even with a recompile hint, The Optimizer doesn’t ‘sniff’ the variable value in the first query that assigns to @id.

This only happens in queries that test if a variable is null, like the pattern above, and it becomes more obvious with a better index in place.

|

1 |

CREATE INDEX ix_helper ON dbo.Users(CreationDate, Reputation DESC) |

We’ve got it all! A seek vs a scan, a bad estimate! Probably some other stuff!

Pattern 2: Using That Assigned Variable

The Great And Powerful Kendra blogged about a related item a while back. But it seems like you crazy kids either didn’t get the message, or think it doesn’t apply in stored procedures, but it does.

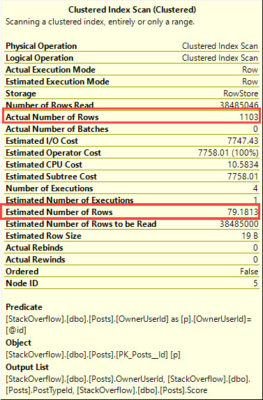

If we look at the plan generated, the cardinality estimate gets the density vector treatment just like Kendra described.

In my histogram, the values plug in below, and give us 79.181327288022

|

1 |

SELECT (38485046 * 2.057457E-06) AS lousy |

This gets worse when a user with a lot of data, where other parts of the plan start to go downhill.

How To Avoid These Problems

Both of the problems in these patterns can be avoided with dynamic SQL, or sub stored procedures.

Unfortunately, IF branching doesn’t work the way you’d hope.

If you’re ever confused by bad query plans that crop up in a stored procedure, sneaky stuff like this can totally be to blame.

Thanks for reading!

15 Comments. Leave new

What’s a sub-stored procedure?

James — tsk! You didn’t click on the link at the end. Do that and lemme know if you still have a question.

ahh, now I see!

Would it make any difference to use explicit values for the catch-all case?

eg

WHERE ( u.CreationDate >= @cd OR @cd = ‘1-jan-1990’ )

In a lot of cases I have optional parameters for identity columns so I have used default values of 0 for the parameter instead of NULL.

Heh heh heh, no, that would usually get you pretty bad query plans as well – but definitely try it out to see if it makes a difference in your case. Try doing only the specific values (not the catch-alls) and see how the query plan changes.

To be honest, something about the point of what I’m looking at in this post is going right past my brain in a morning pre-coffee fog that I’m like “What am I looking at? What am I doing wrong now?” BUT it all looks far too familiar at the same time.

Those links tho. How on earth am I performance tuning SQL every single day and I entirely overlook or forget these time and time again? I’m sure I’ve read all of this in the past, but Local Variable issue? Forgot it. Optional Parameters? Yep, not in the brain cells that I go to when tuning. Branching logic? I should totally know this, but that’s not up there either. AND I’M WORKING WITH PROCEDURES THAT DO THIS RIGHT NOW. Sigh.

Thanks for the refresher guys. I seriously need to build a profiling map of tuning – from the logic flow of the engine, and from all these gotchyas, and revisit Brent’s beginner tuning vids. This is so valuable as a reminder I really appreciate and I really appreciate it.

Part of me wants to know how we’re x versions of SQL Server along though, and these known issues (like not getting Missing Indexes in these cases) aren’t baked in with solutions. That same part of me wonders why the UI still has options for Boost SQL Server Priority, and doesn’t come with some simple UX around setting Max DOP, Cost for Parallelism, Memory Max, etc that make any kind of sense.

Did I just really appreciate it twice? Well I really, really appreciate it. Also, time for coffee.

Andrew — I triple appreciate your comments! This is exactly the kind of stuff that makes writing about SQL Server worthwhile.

Thanks!

Hey Erik, just as a follow up to this – Over my year here I’ve done some work query tuning off and on in between everything else, and I’ve taken the server from ~100k CPU ms threshold on our lovely monitoring tool down to below 5k regularly, with some spikes. There was large reads all over the place.

Anyway, I’ve been tracking down the stragglers that were still performing badly, but were small enough I hadn’t gotten to yet… I just got a query down from 3s/2m reads, to 4ms/~1,200 reads. And I would have looked at this query before and wondered how come?! Well, I looked at it and just knew. It was because we used a variable to test “field > @intPreviousCompletionValue”.

I threw a OPTION (RECOMPILE) on it, and it was as fast as I could have hoped. Then I wondered how to do without that, and it ended up being two parts, and the second part was being done first because the filter didn’t know how many rows it was returning (from a table of millions of rows). I ended up doing the filtered part out to a temp table (and it was a @temp, not a #temp solely because we would only expect a few rows anyway) – and because the estimates were better (even if 1 row/100 row – its closer than “possibly millions!”), we ended up with the speeds I mentioned before.

This blog post has had that impact, my dude.

Right on. Keep that up and Microsoft will fire the robots!

great, can you please explain me sub-stored procedure

Harshil — read the links!

Thank you for the visual. This is a very helpful post!!

In combating miles of OR logic related to optional parameters over the last 20-odd years, I’ve also found that one alternative is to reassign the parameter to account for NULL with a base value to replace the NULL and satisfy what the OR would accomplish. It is not always an option but when it is the resulting plan sees a big improvement.

Apparently I should have read ahead to the Sniffed NUlls and Magic Numbers article which hadn’t been published yet when this was. To clarify what I said above I was looking to get rid of the OR. That doesn’t help the cardinality of the scalar. However, it may result in better index choices by simplifying the filter logic.