Sharing Is Caring

When everything is going well, and queries are behaving responsibly, one need hardly think about memory grants.

The problem becomes itself when queries start to over and under estimate their practical needs.

Second Hand Emotion

Queries ask for memory to do stuff. Memory is a shared resource.

What kind of stuff? Well, usually to sort or hash data.

How much memory the optimizer asks for is dependent on:

- Number of rows

- Row goals

- Data types and sizes

- If the query is going parallel

- Max server memory

- Any memory limiting query hints

- Resource governor settings

If you need to do something with 10 rows of VARCHAR(10) data, the amount of memory you need will generally be estimated to be much lower than if you need to do something with 10 rows of VARCHAR(100) data.

Underestimating

If I have this query plan.

|

1 2 3 4 5 |

SELECT u.DisplayName FROM dbo.Users AS u WHERE u.CreationDate >= '2016-10-11' AND u.CreationDate < '2016-10-12' ORDER BY u.CreationDate; |

The sort spills, with only 1968KB of memory granted.

Is this when you should worry about spills? Definitely not.

But it’s easy to see how we can influence the memory grant with One Weird Trick And Basically You’re Kidding Me.

|

1 2 3 4 5 |

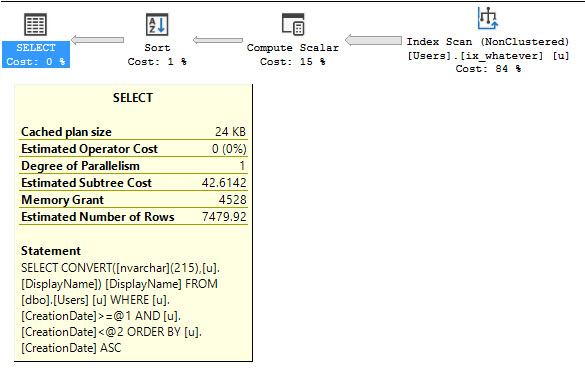

SELECT CONVERT(NVARCHAR(215), u.DisplayName) AS DisplayName FROM dbo.Users AS u WHERE u.CreationDate >= '2016-10-11' AND u.CreationDate < '2016-10-12' ORDER BY u.CreationDate; |

The important thing to pay attention to here is that we’re not sorting BY a column with a large data type. We only have to sort some data by a column that has no index to support the sort order.

It’s like when you highlight an entire Excel file and order it by a single column. The whole sheet winds up sorted by that one column.

SQL Server has to do the same thing, and it wants to do it in memory, if possible. Spilling to disk stinks.

The DisplayName column is defined as NVARCHAR(40), but if we CONVERT it to have a length of 215 (this was the just the lowest number that it stopped spilling at, there’s nothing special about an NVARCHAR(215), generally) we get a larger memory grant.

We get this plan.

Lying about that causes the optimizer to ask for a large enough grant for nothing to spill.

This doesn’t mean you should litter every query spilling to disk with CONVERTS. The more appropriate response might be a better index that supports the sort.

Or just not asking for sorted data.

But still, this query underestimated it’s practical needs. It chose the wrong stall, to coin a phrase.

Overestimating

It’s possible for the optimizer to choose unwisely in the other direction, as well.

If we change our query to this:

|

1 2 3 4 5 |

SELECT CONVERT(NVARCHAR(4000), u.DisplayName) AS DisplayName FROM dbo.Users AS u WHERE u.CreationDate >= '2008-12-25' AND u.CreationDate < '2008-12-26' ORDER BY u.CreationDate; |

We wind up with another kind of warning! An excessive grant. Again, at this size, I wouldn’t worry about it. But there it is.

If you have a lot of queries asking for much larger grants than they’re using, you can wind up with a whole bunch of queries with big needs waiting on a bunch of queries with much lesser needs.

You’rein trouble, in other words.

You may even think SQL Server has a memory leak.

Rain Dance

SQL Server only has a limited amount of memory to do stuff, which means that when it’s given out all the memory it can, you end up with queries waiting on memory to do stuff.

Not just memory consuming stuff, either. They’ll hang out waiting for memory to start running, or even compile.

This is something you want to control, because you may have queries stealing memory space from the buffer pool, or the plan cache.

Which is worse depends on what sort of shape your server is in.

- If you’re already under provisioned for memory, the large grants can hurt more

- If you have plenty of memory, but maybe sup par (no 3PAR jokes, here) storage, spills to disk may be worse

Too Many Things

What causes this to happen?

- Poor cardinality estimates

- Parameter sniffing

- Badly defined data types

- Not eliminating rows early in the query

- Selecting more columns than you need

- Selecting all your columns at once

You can’t index for every aspect of every query, and you can’t have infinite RAM.

Yet.

Thanks for reading!

13 Comments. Leave new

Do these concerns apply to the use of Temp Tables?

Sean — are you asking if temp tables need memory grants to sort and hash data too?

Yes, basically, what I’m concerned with is seeing temp tables like the following in some of the home-grown scripts in the environment.

CREATE TABLE #MyFooTable

(

[FirstName] NVARCHAR(MAX),

[LastName] NVARCHAR(MAX)

)

Instead of strictly defining the Data Type to be NVARCHAR(50) or what-have-you.

Yes, that can be bad too.

“can’t have infinite RAM”

Challenge accepted!

Dmitry — I keep asking Brent for it, and he keeps laughing at me.

You have to start small. As for half infinity first.

positive or negative infinity ? 😉

I’m confused … I’m not particularly clever at these things so please bear with me.

The NVARCHAR(215) had a memory grant of 4528, and we know the query tries to use more than 1968KB (it spilt to disk with this figure) … yet on the excessive memory grant it requested 1536KB and used 16KB. Isn’t the excessive memory grant (NVARCHAR(4000)) [1536KB] actually requesting less space than the NVARCHAR(215) [1968KB]? #Confuzzled

Read the code closely. They’re looking at different date ranges.

I saw the date ranges, I just didn’t pay enough attention to them to pick up that they were significantly different. Sorry 🙂

In an emergency I use DBCC FREEPROCCACHE and it often works wonders for me.

Another thing that could cause over-estimation of memory is having the wrong “Minimum memory per query” value at the instance (sp_configure ‘min memory per query (KB)’). If you set it too high, SQL may try to do larger memory grants than what the queries would need, causing semaphore waits all over the place.

Actually I would suggest you guys do a test on this scenarion with some crazy value like 10 or 20gb, and if that repeats for you maybe add a check on SP_BLITZ (no idea how to suggest this).