Users are horrible, I know

You can just never rely on them to pass in reasonable search strings. When you write stored procedures to retrieve information for them, it’s really tempting to define all your string variables as MAX length. As long as the actual data type, N/VARCHAR matches, you’re cool, right? Avoid that pesky implicit conversion and live another day.

Well, not really. Let’s test it out. We’ll be using StackOverflow, and hitting the Users table. We have a column, DisplayName, that’s an NVARCHAR(40), which sets us up well enough to demo.

My Favorite Martian

There’s a user with the handle Eggs McLaren, which cracks me up. It reminds me of this old Red Stripe commercial. I use him for all my demos, even though he doesn’t really exist.

So what happens when we go looking for Eggs?

|

1 2 3 4 5 6 |

DECLARE @DisplayName NVARCHAR(MAX) = 'Eggs McLaren' SELECT * FROM dbo.Users AS u WHERE u.DisplayName = @DisplayName GO |

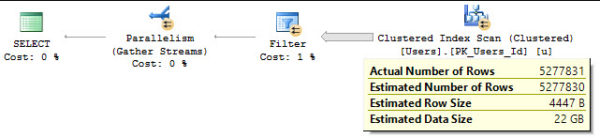

We get the correct results, but the execution plan has some bad news for us.

In short, we read everything in the clustered index. This could be mitigated with a smaller index, sure, but you’d still read all 5.2 million rows of it, and pass them into a filter operator. I’m using the clustered index here to highlight why this can be extra bad. We read and passed 22 GB of data into that filter operator, just to get one row out.

Why is this bad, and when is it different?

SQL Server makes many good and successful attempts at something called predicate pushdown, or predicate pushing. This is where certain filter conditions are applied directly to the data access operation. It can sometimes prevent reading all the rows in a table, depending on index structure and if you’re searching on an equality vs. a range, or something else.

What it’s really good for is limiting data movement. When rows are filtered at access time, you avoid needing to pass them all to a separate operator in order to reduce them to the rows you’re actually interested in. Fun for you! Be extra cautious of filter operators happening really late in your execution plans.

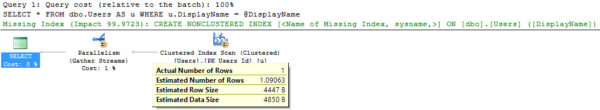

Even adjusting the variable type to NVARCHAR(4000) gets us there. If your users need to pass in search strings longer than 4000 characters, you have some serious thinking to do.

|

1 2 3 4 5 6 |

DECLARE @DisplayName NVARCHAR(4000) = 'Eggs McLaren' SELECT * FROM dbo.Users AS u WHERE u.DisplayName = @DisplayName GO |

Rather than 22 GB, we’re looking at passing 4850 bytes to the next operator. This seems much more optimal to me. Next up is figuring out which columns we actually need, so we’re not running SELECT * every time.

That’s another bummer.

Thanks for reading!

23 Comments. Leave new

Hi Erik,

10/10 post, would rep IRL.

Can you explain or link us to some stuff that explains why the query engine is able to push the predicate down when switching to nvarchar(4000) but not with nvarchar(max)?

P.S. do you know of any good gyms in Seattle? Preferably one without a lunk alarm. Asking for a friend.

You know, I’ve never seen anything on that. My guess is lack of hip drive.

If you see a guy named Conor Cunningham walking around Seattle, you can ask him while he laughs at your 1RM.

http://sqlblog.com/blogs/paul_white/archive/2012/04/28/query-optimizer-deep-dive-part-1.aspx

This is a great series of articles, but a cursory ctrl+f for MAX doesn’t turn anything up. Was there something in particular here you wanted to point out?

He asked out predicate pushdown. Paul White discusses this on several occasions. These are a few of the articles I found on it. This particular article from Paul covers a lot of other topics as well, but it is interesting to see how it works.

http://www.theboreddba.com/Categories/FunWithFlags/Revealing-Predicates-in-Execution-Plans-(TF-9130).aspx

https://www.google.com/

Yes, I did use Google. I was just sharing topics on what was asked in reference to your article. Apologies if I upset you. Was just trying to help.

It’s totally fine to do that, but just posting a link without context is sort of spammy.

Very helpful post. I was in a meeting yesterday where a developer asked me the ramifications of using nvarchar(max) vs nvarchar(255) and I wasn’t sure so I told him I’d look it up and get back to him. I sent him a link to your post this morning! I usually learn something new every week from you guys.

Thanks!

Glad it helped!

Where is the rest of “MAX Data Types Do WHAT”? I have been trying to educate my developers that MAX anything runs slower. They will listen to Brent because they know him and respect his publications.

>> If your users need to pass in search strings longer than 4000 characters, you have some serious thinking to do.

But how about when you’re passing in to SSRS an “all items in dropdown” parameter? SSRS then passes in all items in a list. This could easily surpass 4000.

Is there a way to change a SSRS “all” parameter to something that could be caught and then use dynamic SQL to leave off the column in the WHERE clause?

Hi Pat,

I couldn’t tell you which end of SSRS is up. I’ve never touched it, and hope I never have to.

Use Tableau instead? 😀

Thanks!

Yes Pat, you could populate the dropdown with an “All” alternative at the very top and handle this in the T-SQL for the dataset eg. “… where (o.code = @p_code or @p_code = ‘ALL_CODES’).

this is the correct solution.

Hmmm, the SSRS parameter has an option for “allow multiple values”. Then the dropdown is automatically populated with a “select all” option. The dropdown appears as a checked listbox. I wonder if there’s a way to not allow multiple values and manually populate a select all to pass a chosen parameter, and still allow multiple values to be chosen?

I assume the predicate push down will also get bypassed if the reverse happens, i.e. the column is an NVarChar(MAX) and the sting is NVarChar(40).

If you capitalize it all funky like that, who knows? 😉

Yeah yeah, you aren’t the first one to give me crap over that. :p

Not sure where I picked that habit up.

Nice! Thanks!

I tried to reproduce this and could reproduce a plan difference but not any actual performance difference when measured against dm_exec_requests with buffers cleared and plan cache cleared.

Is there a script you can share that reproduces the dbo.Users object and associated indexes and data? I am happy to share my script too to see if we can get to the same point.

[…] forget that they also prevent predicate pushdown, and they can really mess with memory […]