Editors Note: this post was updated in June 2014 to link to an online index rebuild bug that can cause corruption.

Once up on a time, there was a database server with 500GB of data and a heavy read workload of dynamic queries. Data was updated frequently throughout the day and index tuning was a serious challenge. At the best of times, performance was dicey.

Then things went bad

Application performance plummeted. Lots of code changes had been released recently, data was growing rapidly, and the hardware wasn’t the absolute freshest. There was no single smoking gun– there were 20 smoking guns!

A team was formed of developers and IT staff to tackle the performance issue. Early in the process they reviewed maintenance on the database server. Someone asked about index fragmentation. The DBA manager said, “Of course we’re handling fragmentation!” But a few queries were run and some large, seriously fragmented indexes were discovered in production.

The DBA explained that fragmentation wasn’t the problem. She didn’t have automated index maintenance set up, but she periodically manually defragmented indexes that were more than 75% fragmented.

Bad, meet ugly

At this point the whole performance team flipped out. Trust disappeared. Managers squirmed. More managers were called in. The DBA tried to change the subject, but it was just too late. More than a week was wasted over Fragmentation-Gate. It was a huge, embarrassing distraction, and it solved nothing.

Here’s the deal– the DBA was right. Fragmentation wasn’t the root cause of the performance problem. The DBA was a super smart person and very talented at performance tuning, too! (And no, I’m not secretly talking about myself here– this is not the “royal she”.) But she made a strategic miscalculation: she should have set up occasional automated index maintenance to align with her team’s normal practices and standards.

Why you need automated index maintenance

When performance gets bad, one of the very first things people look at is whether systems involved are configured according to best practices. If you’re not following a best practice, you need to have a really good reason for it.

Regular index maintenance still has a lot of merit: even in Shangri-La, where your data all fits into memory and your storage system is a rockstar with random IO, index maintenance can help make sure that you don’t have a lot of empty space wasting loads of memory.

It’s still a good idea to automate index maintenance. Absolutely don’t go too crazy with it– monitor the runtime and IO use and run it only at low volume times to make sure it helps more than it hurts. Be careful, but don’t skip it.

How much downtime can you spare?

Before you implement index maintenance, find out how much time tables can be offline in each of your databases. Then, figure out what operations you want to use.

To Rebuild or Reorganize: That is the Question

First off: ‘Reorganize’ and ‘Rebuild’ are two different operations that each reduce fragmentation in an index. They work differently toward the same end. You don’t need to run both against the same index. (I sometimes find that people are doing both against every index in a maintenance plan. That’s just double the work and NOT double the fun.)

Rebuild: An index ‘rebuild’ creates a fresh, sparkling new structure for the index. If the index is disabled, rebuilding brings it back to life. You can apply a new fillfactor when you rebuild an index. If you cancel a rebuild operation midway, it must roll back (and if it’s being done offline, that can take a while).

Reorganize: This option is more lightweight. It runs through the leaf level of the index, and as it goes it fixes physical ordering of pages and also compacts pages to apply any previously set fillfactor settings. This operation is always online, and if you cancel it then it’s able to just stop where it is (it doesn’t have a giant operation to rollback).

Factors to consider:

- Standard Edition rebuilds ain’t awesome. If you’ve got SQL Server Standard Edition, index rebuilds are always an offline operation. Bad news: they’re also single-threaded. (Ouch!)

- Enterprise Edition rebuilds have gotchas. With SQL Server Enterprise Edition, you can specify an online rebuild — unless the index contains large object types. (This restriction is relaxed somewhat in SQL Server 2012). You can also use parallelism when creating or rebuilding an index— and that can save a whole lot of time. Even with an online rebuild, a schema modification lock (SCH-M) is needed at the time the fresh new index is put in place. This is an exclusive lock and in highly concurrent environments, getting it can be a big (blocking) problem.

- There’s a bug in SQL Server 2012 Enterprise Edition Rebuilds that can cause corruption. If you’re running SQL Server 2012 SP1 – SP2, parallel online index rebuilds can cause corruption. Read about your options here.

- Rebuilding partitioned tables is especially tricky. You can rebuild an entire partitioned index online– but nobody really wants to do that because they’re huge! The whole idea behind horizontal partitioning is to break data into more manageable chunks, right? Unfortunately, partition level rebuilds are offline until SQL Server 2014.

- Reorganizing can be pretty cool. ‘Reorganizing’ an index is always an online op, no matter what edition of SQL Server you’re using. It doesn’t require a schema mod lock, so it can provide better concurrency. Reorganizing only defragments the leaf level of the index. On large tables it can take longer than a rebuild would take, too. But as I said above, it’s nice that you can reorganize for a while and then stop without facing a massive rollback.

SQL SERVER 2014: WAIT_AT_LOW_PRIORITY, MAX_DURATION, AND ABORT_AFTER_WAIT

I’m really excited about new index rebuild options that are shipping in SQL Server 2014. Check *this* out:

|

1 2 3 4 5 6 7 |

ALTER INDEX OhSoFragmented ON dbo.MyTable REBUILD WITH ( ONLINE = ON (WAIT_AT_LOW_PRIORITY (MAX_DURATION= 5, ABORT_AFTER_WAIT=BLOCKERS) ) ); |

So we’ve got new tools for those concurrency problems I was talking about with online rebuilds. Now, we can say how long we’re willing to wait to get that schema modification lock (in minutes), and if we have to keep waiting what should happen. (Options: kill those who are blocking us, kill ourself, or do nothing.) Obviously there are some situations where just killing the blockers could be a terrible problem, but I’m interested to experiment with this.

You Didn’t Answer the Question: Do I Use Rebuild or Reorganize?

Yeah, I totally dodged that question, didn’t I?

If you have a regularly scheduled downtime every weekend, you’re probably fine with straight up index rebuilds, even if you have Standard Edition. Single threaded offline index maintenance may not be the hottest thing in the world, but hey, if you’ve got time for it then embrace the simplicity.

If you have Enterprise Edition, embrace parallel index rebuilds– and use the ONLINE option for indexes that allow it if people need to access the database during your maintenance window.

If you have database mirroring or AlwaysOn Availability Groups, tread with caution– particularly with rebuilds. It’s easy to generate a ton of IO with index maintenance, and it could mean putting your secondaries or mirror so far behind that they can’t catch up.

Maintenance plans or custom scripts?

You can go the easy way and use SQL Server Maintenance Plans, but unfortunately they’re very simplistic: you can only say “rebuild all the indexes” or “reorganize all the indexes”. You cannot say, “If the index is 45% or more fragmented, rebuild it– otherwise do nothing.” If you don’t spend much time with SQL Server and you’ve got downtime available every weekend, this can be a decent option.

If you need to minimize downtime, custom index maintenance scripts are the way to go. Our favorite: Ola Hallengren’s maintenance scripts. These are super flexible, well documented, and … free! The scripts have all sorts of cool options like time boxing and statistics maintenance.

Some tips for using Ola Hallengren’s index maintenance scripts:

- Download and configure them on a test instance first. There’s a lot of options on parameters, and you’ll need to play with them.

- Get used the ‘cmdexec’ job step types. When you install the scripts you’ll see that the SQL Server Agent jobs run index maintenance using a call to sqlcmd.exe in an MSDOS style step. That’s by design!

- Use the examples on the website. If you scroll to the bottom of the index maintenance page you’ll find all sorts of examples showing how to get the procedure to do different useful things.

Find out when maintenance fails

Don’t forget to make sure that your maintenance jobs are successfully logging their progress. Set up Database Mail and operators so jobs let you know if they fail.

Tell your boss you did a good thing

Finally, write up a quick summary of what you did, why you chose custom scripts or maintenance plans, and why. Share it with your manager and explain that you’ve set up automated index maintenance as a proactive step.

Having your manager know you’re taking the time to follow best practices certainly won’t hurt– and one of these days, it just might help you out. (Even if it’s just by keeping everyone from following a red herring.)

109 Comments. Leave new

Hi,

Firstly thanks to you and all of the team at Brent Ozar for all the SQL Server Knowledge you continue to share with the community.

Not to prejudge it’s content but I’m just wondering how your excellent blog aligns with Brent’s forthcoming webast “Why Index Fragmentation Doesn’t Matter”?

Should we be rebuilding our indexes or not?

Thanks,

George

Haha! You’ll have to attend Brent’s webcast to see. I do agree that index maintenance is very often too overhyped and also misunderstood. I’ve found many cases where the maintenance has had a worse effect than the problem it’s trying to solve.

Webcast times are awkward for me over here in Belfast, Northern Ireland but I always make sure I catch up with the recording of the session.

Yes i’ll be very interested to find out what the definitive index maintenance solution is!

Also by way of feedback the pricing of your online training videos is very reasonable and I hope to be able to convince my company to fork out for your index training ones real soon!

I did see Brent’s webcast “Why Index Fragmentation Doesn’t Matter” yesterday and he makes a lot of good points. Turning off ongoing index maintenance is something I now need to give consideration to. But the information in your blog does seem to somewhat conflict. I guess the big question is: when does it make sense to defrag on in a modern shared SAN environment which as Brent put it is fragmented by design?

This actually is a question for Brent as well, but I am waiting for his blog on the webcast to be posted so I can ask the question there.

Hi Don. Let’s step back and ask, “What’s the problem you’re trying to solve?”

During the webcast, I took an extreme position in order to get people to think about how aggressively they defrag/rebuild. Defrags and rebuilds aren’t free, as I explained in the video, so as you’re thinking about the frequency, make sure you’re paying the right price and getting the right result.

the disks are fragmented in a SAN environment, not SQL indexes??

Rob – can you rephrase your question? I’m not quite sure what you’re asking.

Hi,

Yeah, I was referring to the fact that we’re potentially talking about fragmentation in two different environments…..SAN and SQL, which are obviously two completely different things.

Thanks for the post!

Could you please elaborate on the case of database mirroring or AlwaysOn Availability Groups?

It’s clear that rebuilding generates a lot of transaction log activity that will take time to apply on the secondary, but is there an actual danger of breaking the mirroring?

Hey Alex,

With all types of replication products I’ve worked with and heard of (database mirroring, availability groups, transactional replication, SAN replication, filter driver replication technologies), there’s always a way that you can push so much data churn into the system that your secondary gets so far behind that you have to reset everything and start over.

Things get so bogged down that perhaps things *could* catch up, but you’re so far out of SLA that it’s faster to reinitialize/resnap/etc. The process of just handling a vast amount of “deltas” is worse than just starting over in these cases.

For availability groups and mirroring, yes, I do know of specific cases where this scenario has been caused by index rebuilds. Of course the scenarios vary in terms of geographic distance between the partners, throughput, server and storage builds. But it’s not terribly uncommon.

Kendra

I see, thanks.

Would you recommend some settings to prevent it, perhaps limiting the parallelism of the rebuilds?

Though I suppose the way to go for a new setup is first to monitor and see if any action is required.

Yeah, there’s lots you can tweak — you can leave a few minutes between commands so that things have time to catch up, do partition level rebuilds (offline, only if using partitioning), and timebox the whole operation so that you only do a smaller amount of work. And of course limiting what you’re targeting (by level of fragmentation, purpose) helps. And you could potentially reorganize for a while and then manually stop the command in some instances.

Great points, thanks!

It’s been a while since my last comment — and since then I’ve found myself pretty much agreeing with the DBA described in the article. Fragmentation isn’t the problem (and even Brent has such an article).

Due to the SCH-M locks, the I/O load, and the AlwaysOn Availability Groups issues that index maintenance causes, I decided to simply disable the index maintenance. As our system is 24/7 and highly concurrent, this actually improved performance (combined with the usual query tuning and offloading to readable replicas).

However, it still bothers me — we now have high fragmentation in most of the main tables, which means we’re doing extra I/O and taking up extra RAM.

But reducing that, temporarily, using index maintenance costs a lot of I/O by itself, and with locking and replica issues.

Would you say that in this situation it would be best to just continue to skip index maintenance?

Or perhaps only run short reorgs, which should have the least negative impact?

Hi Alex,

We’re at the end of the allowable threaded comments/ “reply chain” for this particular comment. I can reply to this through the WordPress GUI, but WordPress will force you to start a new comment on this post if you’d like to respond. (Just an FYI in case the missing “reply” looks weird.)

You’re in a special place with your application. You’ve got a complex high availability in place and 24×7 customers. Your comment basically says, “there’s no obvious choice here, only judgment calls.”

That’s correct, and it’s you who has to make the judgment call. For me to tell you what’s right in your situation I’d need a lot more information, the type of detailed data that I’d only get over a multi-day consulting engagement.

So I can’t tell you not to worry. It’s incredibly valuable to periodically reassess the situation and decide if it’s time to customize your maintenance to do something better. That’s what keeps you on the lookout for issues like index rebuilds potentially causing corruption in some versions of SQL Server (like we just found out about in the 2012 SP1 line).

Wow, it’s been a while. Something important to add: reorganize often generates orders of magnitude more transaction log than rebuild! This is disastrous for AG/mirroring, and may not be worth the restartability.

Reference:

http://blogs.msdn.com/b/timchapman/archive/2012/09/28/index-rebuild-vs-reorganize-the-transaction-log-edition.aspx

Kendra…you read my mind on my exact issue… as I just setup a bcp site with always on and mirroring with trans repl… it took the maintenance and introduced a whole bunch of issue with TLogs getting HUGE.. and the rebuilds of indexes tanked me a few times…I have yet to dig myself out given I am still the new guy on the block and my learning is a daily event!

Good Read for sure… thx

-Zero

I am a SysAdmin and a NetAdmin… which does NOT mean I’m a DBAdmin (despite what hiring software seems to think).

Your article gave relevance to the TechNet articles and other articles written towards a DBA audience. It allowed me to know that I could Reorganize my database uh… things… tables? whatever–without taking down my monitoring software’s DB.

So thanks for writing something a non-DBA could grok!

You’re welcome, glad we could help!

I am testing Index fragmentation on 35gb Database setting my Log size to 8gb i have used ola Hallengren’s scripts and tryied Custom scripts but the transaction log is filling up . Do we need to increase th Transaction log or is there any other way to make sure Transaction log is not filling up. Thanks for your resposne.

Padhu – yes, rebuilding indexes is a logged operation, so depending on your individual server’s setup, you may need to try using simple recovery mode, increasing the log size, doing transaction log backups more often, etc.

Brent, Thanks for your response, I do not want to set database to simple recovery mode as we will be loosing point of recovery features and since this is OLTP System it is critical to have point of recovery. I am good to increase size for 35gb but i do have database which is 600gb and believe we need around 100% database size for Transaction Log as index are droped and re-created during rebuild process. I do tryied taking multiple Transaction log backup as Index fragmentation job was running but still it didn’t help, Your response and help is much appreciated.

I’ve noticed my databases reserved space has started growing more, but the used space has slightly grown is about the same typically after we run our maintenace plan (index rebuild, reorg, update stats). One thing we did a while back is set our maintenance plan to run index rebuilds for two of our largest tables before it was runing reorg operations. This was mostly due to the fact a reorg was taking a long time versus a rebuild. Could that have caused our sudden increase in reserved space to increase?

Yes– absolutely. REBUILD requires temporary space in the datafile for building that nice, polished new structure whereas REORGANIZE fixes things up as it goes, and doesn’t need a bunch of extra space. It’s healthy to allow the database to keep that unused space in place so you don’t get into a deadly cycle of shrinking (which causes fragmentation) and then regrowing when you do maintenance… only to trigger another shrink that causes more fragmentation.

There’s a good writeup of all the considerations around the temporary space issue in Books Online here: http://technet.microsoft.com/en-us/library/ms179542.aspx

Kendra,

Thanks Kendra for the quick response. I figured the data files would grow when an index rebuild was being issued, but what’s strange to me is our DB is made up of multiple data files not sure if that matters total size 1,301 GB reserved 1,245 used after we ran the index rebuild on the two tables it jumped up to 1,317 reserved 1,207 used. Wait think I figured it out.. index 1 is 65 Gigs, and index 2 is 77 Gigs in size. When I force a rebuild it needs to expand the data file to accomodate the size at which time it will initiate a rebuild. Is that accurate.. guess I’ll revert the maintenance plan back to not force rebuilds.

Another problem with rebuilds if you don’t have a maintenance window – even with SQL Enterprise and online rebuilds: any transaction started with snapshot isolation when the index rebuilds will fail with error 3961 if it accesses the rebuilt table again

i.e.

TX 1 TX 2

begin snapshot tran

select data from T

rebuild index on T online

rebuild complete

select data from T again: 3961

Well, the formatting got lost; begin snapshot, select data, select data again should be TX 1. rebuild index, rebuild complete should be TX2

Great tips out here. Good stuff!

On a rebuild completion will it update stats ? Does re-org update stats also ?

Rebuilds update stats, defrags do not.

Cheers Brent 😉

We are thinking of purchasing an all flash array for our data center [for SQL, Exchange, VMware, etc.] and since flash is 20x faster than regular disk [and I know this may seem like a silly question]…..but is a re-org/rebuild still needed if your entire database is lightning fast even with heavy fragmentation?

Not a silly question at all!

Even on traditional hardware, most of the read and write activity ends up being random on modern database systems due to the number of databases, the way storage is configured, and lots of things like that.

Occasional defragmentation may still be desirable in some cases– it does compact pages and help eliminate wasted space on the pages that physical fragmentation can cause, which can still be valuable for making data more dense (and therefore making your memory more efficient, also). But I like where you’re going to try to minimize maintenance: that can absolutely help in maximizing performance, too!

What is the real process behind the rebuild and reorganize? in the storage level.

which one is more faster and why?

Hi Manjesh. For questions like that, check out Books Online. You’d be amazed at what you can learn in the manual – it’s really well-written these days. For even more internal details, check out the book SQL Server 2012 Internals by Kalen Delaney & friends.

I could not imagine what would happen to me if I let the indexes get to 75% fragmentation. Wouldn’t be good for sure so I’m not getting it. Maybe because its such a small database I am not sure. I have larger tables than that.

If your databases aren’t large and most of your workload is random reads and writes (which is true for many OLTP databases), honestly you might not notice much of a difference. In the example I’m describing here, there was no measurable difference after all of the maintenance was standardized and fixed, and it wasn’t even a small database.

For what I would call our primary database which is currently about 6.5TB it processes around 500,000 claims a day which come in in the form of files (X12). There is some OLTP but much what goes on is reporting. However the picture is so much more complicated. I can point at so many issues. I many times suggested we hire you guys to tell Management these issues because it would have more impact coming from an outsider.

Regarding the indexes, if they get too fragmented on some of these large table the performance gets very bad. I’m talking 20%. Even if I do not create a covering index and a look-up is required it can have a severe impact on some of these. So I end up with really too many indexes. So there are problems on top of problems it gets somewhat complicated.

So for re-indexing I run a job 3 days a week. On the two weekday night jobs the threshold for re-indexing is 20% but it is 10% on Sunday mornings. I need a large transaction log drive and i have put logic in the script to monitor the size and go to sleep if it reaches a certain size. Yes I have no choice but to shrink it at that point. Oh and yes I do have table partitioning and as a job step before I get into the primary script i reorg partitions. However partitioning seems to be a performance hit for us to be honest, and mys testing has shown we get better performance if I remove the partitioning.

One more thing I have noticed that reorganizing seems to create more impact on replication than simply altering an index. I believe that is because altering the index you can sort in TEMPDB which is not replicated

So that’s part of my story and observation.

Wow, 20% My guess would be that the index maintenance is improving performance by changing something other than the fragmentation.

I did a couple videos on how index maintenance can have OTHER side effects that can really boost performance here: https://www.brentozar.com/archive/2014/04/index-fragmentation-bad-statistics-arent-always-problem-video/

If that’s the case, there might be faster ways to handle that than index maintenance.

If the index maintenance is working well for you then it’s not the worst thing in the world. I don’t want to make it sound like it’s *bad*. But if the index maintenance starts taking too long and there’s issues with it in running an 24×7 environment, then it might be interesting to look deeper.

Ok I will check out the video. But tell me doesn’t index fragmentation = diskIO? Because there is no doubt my disk are overloaded. If i have 25% fragmentation in a table that is 1TB that’s a good deal of IO.

Not necessarily. Most OLTP databases already have random IO/lookups, and don’t heavily use sequential scans. If you’ve got more than one database with a file on the same drive, you’ve also got a random IO pattern even if they’re scanning and more than one is active. Brent goes even deeper in this video: https://www.brentozar.com/archive/2013/09/why-index-fragmentation-doesnt-matter-video/

I do think that periodic index maintenance can help remove wasted empty space on pages. It’s just that historically index maintenance has been overly encouraged and credited with more benefits than it really has.

I have had a good deal of time now to work with this and I would say overall you are right but there are some other things I find that are factors. 1. Disk speed is a factor on this from what I see and in particular when the more SSD i get the less I need to re-index. Also being on a VNX I think I upset my Fast Cache when I re-index. Very soon we are moving to Pure Storage all SSD storage. 2. Very large tables. In this area I think I was getting the advantage from reindexing because when I did the indexes I would get statistics updates. The Built in Auto Update was not enough. So I started updating stats based on number of rows changed and stopped rebuilding indexes as much. It seemed to even out. So that goes to your point of something else being affected by the index updates.

Kyle – yeah, zooming out a little, the faster your storage is, and the less your queries wait on storage, the less that external fragmentation seems to be an issue to end users.

Internal fragmentation (empty space on pages) can still be an issue if scanning more pages takes your queries longer to run, but of course even that gets reduced the faster your storage is. (I’m not saying people with fast storage never need to do index maintenance, but just that the symptoms appear much lesser to end users. Kinda like how people with fast metabolisms don’t need to exercise to stay thin, but they probably should exercise for other reasons.

Hi Kendra,

Possibly a silly question. If, as Brent mentioned, index rebuilding may or may not be necessary, and as you’ve sort of said in this comment; is it still deemed a “best practice” to have SOME form of index maintenance or is “best practice” now a case of “Well, do what you need to do based on your underlying infrastructure and database structure”?

I seem to think that BOL used to imply that index maintenance was critical, however, I get the impression that Microsoft assume that everybody lives on a physical server with physical disks plugged in, and not everybody has access to an enterprise level SAN 😉

Regards,

Andy

Hi Andy,

Not a silly question. There’s two issues with index fragmentation – pages out of order, and empty space in the index. Defragmenting both re-orders the pages as well as “compacts” and removes empty space (depending on fillfactor).

There’s a lot of argument out there about whether out of order pages slow things down.

But most people agree that large amounts of empty space in an index isn’t desirable for most cases. I’ve seen 10GB indexes with 5GB free space in them. The sql server query optimizer considers page count, and also reading more pages requires more CPU and takes longer.

So regardless of page order, it’s still a best practice to do some index maintenance.

Kendra

Hi Kendra,

We have Instances with very large number DBs and couple of the DBs is big close to 1TB.

We are using OLA Hallengren script for Index Maintenance, in which it first updates statistics for all DB then runs Index maintenance. We also set time limit around 14 hours. So what happened is, within 14 hours it is able to finish only half of the DBs and when next time it runs again the same half of them gets done, though there are many times it finishes for all Dbs.

So I am thinking may be run the maintenance script in two different schedules by splitting the DBs and also a thought of editing the script to move the DBs that are done to the bottom of the table where it sets the selected & completed bits and when next time runs it will not rerun the same one and will resume from where it left last time.

Do you come across anything like that and what is your suggestion.

Thank you in advance.

Van

My first suggestion would be to question the assumption that you need to do index maintenance on 1 TB of data on a regular basis.

Thanks for your quick response, yes we do need index maintenance on that big DB on a regular basis since there is lot of activity is going on that DB for nightly batch process in big Financial firm. We do archive them quarterly, but still it is too big.

Also on my previous comment there is a mistake, actually OLA script, updates the column statistics and depends on the fragmentation level it runs the Index Rebuild or Reorganize & update Statistics.

Thank you

Van

OK– so to be really blunt here, you aren’t saying that you know you really need to do all this reindexing. You believe you do, but you don’t know specifically what will happen if you don’t. So you’re hitting multiple terabytes with a massive amount of IO and it takes a while.

This is a common mistake people make: they reindex out of fear. It’s going to take some careful analysis and work to figure out how to do less and get your maintenance done faster, and possibly some custom coding on your part. There’s no magic answer I can give to fix that.

Thanks Kendra!

I was excited to see the new WAIT_AT_LOW_PRIORITY option for index rebuilds in 2014 as we constantly have SCH_M / SCH_S blocking issues on our AlwaysOn Secondary node when Index rebuilds are happening on the Primary and a long running query is holding a SCH_S on the same object on the secondary.

I ran a few tests today thinking that this new option would clear the blocking on the Secondary but it hasn’t worked 🙁 I used the following as a reference : http://www.sqldiablo.com/2012/08/01/alwayson-availability-groups-isolation-levels-selects-blocking-writers/ and did the following:

ON SECONDARY KICK OFF LONG RUNNING QUERY:

USE [AdventureWorks2012]

select

NumbersTable.Number

from AdventureWorks2012.dbo.ErrorLog el

cross apply (

select

Number

from

(

select

row_number() over (order by s1.name) as number

from AdventureWorks2012.sys.sysobjects s1

cross apply AdventureWorks2012.sys.sysobjects s2

cross apply AdventureWorks2012.sys.sysobjects s3

cross apply AdventureWorks2012.sys.sysobjects s4

cross apply AdventureWorks2012.sys.sysobjects s5

) as InnerNumbersTable

) NumbersTable

group by NumbersTable.Number

order by NumbersTable.Number desc;

ON PRIMARY REBUILD INDEX WITH NEW WAIT_AT_LOW_PRIORITY OPTION:

USE [AdventureWorks2012]

GO

ALTER INDEX [NCI_ErrorNumber_ErrorSeverity_ErrorState] ON [dbo].[ErrorLog] REBUILD PARTITION = ALL

WITH (ONLINE = ON (WAIT_AT_LOW_PRIORITY (MAX_DURATION= 2 MINUTES, ABORT_AFTER_WAIT=BLOCKERS)));

GO

When I check the Secondary I can see blocking as the long running query has a SCM_S lock and the Index rebuild is waiting on a SCH_M lock.

However…..

I wait and wait but the blocking doesn’t clear. I was expecting the long running query to be killed after 2 minutes to allow the Index Rebuild to happen.

Either this is not meant to resolve the AlwaysOn blocking issues or I am not doing the test right… Would be great to hear if anyone has managed to get this one to work.

Thanks!

Ooo, great comment Jack!

I believe that you are seeing the expected behavior and that the ALTER INDEX command’s new option is designed to only abort blockers on the primary: but I agree, I don’t think that’s clear in the documentation! (I tend to think of that new feature as being for things like partition switching in and out and not specifically for AGs, which is maybe why I’d never thought of it in the way you were testing.)

Thanks Kendra! However, I just died a little inside 🙁 I was triumphantly telling our Report Team that 2014 has solved this issue and I just needed to run this test to confirm and we were set to upgrade non-production to 2014 from 2012.

I would imagine there has to be a lot of people out there experiencing blocking on AlwaysOn for the same reason – what a shame the SQL Server Team didn’t take this opportunity to resolve it.

I guess I will have to develop my own in house solution for this issue (pain). Maybe I’ll roll it out along with SQL Server 2014 😉

I hate to be the bad news bears, but just in case you haven’t seen it, there’s also this:

https://www.brentozar.com/archive/2014/01/careful-adding-indexes-with-alwayson-availability-groups/

Longrunning transactions on secondary replicas can also block ghost cleanup from running in the primary and cause other perf issues, so having some way to detect and alert on those queries can come in handy.

Yep – have a comment even on that post too 🙂

Our analysts run queries on the secondary that can last hours (they have a standard of SQL I like to call horrorSQL).

And as the source for the Report Team’s ETL’s is the Primary node they want us to run our index rebuilds during the day at the same time the analysts are online – hence why we hit this issue so much.

Got it. Honestly, in your situation, I would ask, “Why even rebuild?” I’d try to reorganize (as rarely as I can get away with) and perhaps supplement with periodic statistics updates if needed. Reorganize is still pretty darn good– yeah, it only addresses leaf level fragmentation, but it still compacts pages and reclaims wasted space in them, and that’s pretty important. Why not just do that?

(Note: We’re at the end of allowed comment replies on this particular comment thread, so you may need to start a new comment chain to reply back.)

Added this AGENT JOB to the Secondary which will give me what I want 🙂

DECLARE @BlockingSessionID tinyint

–Get the SPID of a Blocking Session holding a SCH_S when a SCH_M lock is trying to execute

SELECT @BlockingSessionID = blocking_session_id

FROM sys.dm_exec_requests

WHERE wait_type = ‘LCK_M_SCH_M’ AND blocking_session_id IS NOT NULL AND ((wait_time/1000)/60) >=2

–Kill the session

IF @BlockingSessionID IS NOT NULL

BEGIN

DECLARE @SQLCommand varchar(64)

SET @SQLCommand = ‘KILL ‘ + CAST(@BlockingSessionID as varchar(4))

EXEC (@SQLCommand)

END

–Returned to the user on SSMS:

–Msg 0, Level 11, State 0, Line 0

–A severe error occurred on the current command. The results, if any, should be discarded.

Thanks again Kendra. I think you may be on to something. We have the following in place for our maintenence (from Ola’s script):

@FragmentationLow nvarchar(max) = NULL,

@FragmentationMedium nvarchar(max) = ‘INDEX_REORGANIZE,INDEX_REBUILD_ONLINE,INDEX_REBUILD_OFFLINE’,

@FragmentationHigh nvarchar(max) = ‘INDEX_REBUILD_ONLINE,INDEX_REBUILD_OFFLINE’,

@FragmentationLevel1 int = 30,

@FragmentationLevel2 int = 70

When i go through my log table I can see quite a lot on indexes over 70% fragmented and get REBUILT (I run the script across each DB once a week).

Would you recommend looking at FILL FACTOR to try and get the fragmentation level down?

Also I know the Report Team have Index Drop and Creates built into their ETL Loads – might be time for us to have a little chat 🙂

Any final thoughts on Stats updates across a DataWarehouse? I see so much conflicting advice out there on this topic in a Very Large Table (Billions of Rows) environment.

Your help today has been great!

Fillfactor might be an option. I don’t like to lower it across the board, but when you’ve got frequently fragmented indexes, that’s a sign it might work.

I’d also look at the size of the fragmented indexes and consider bumping up @PageCountLevel. The default level of 1000 pages (~8MB) isn’t super high. I’d just be curious to know how many of the frequently fragmented tables are close to that.

For the ETL drop and create, man, that’s a hard one: it can be so much better to do that when loading data! But if it’s part of an AG, man, that makes it more rough. You’ve got to be in full recovery model and can’t get minimal logging, and all that data’s got to get churned over to the secondary server. For warehouses I always want to really know what the RPO and RTO are, and if there’s any way I can get away with simple recovery model for staging databases for performance reasons!

On the stats update, yeah– it’s a hard one. I’ve seen billion row warehouses where stats were updated once a day right after data loads with default sampling and that was just fine, and others which were much more sensitive and needed more special treatment. It does vary quite a lot by environment.

The biggest advice I’ve learned the hard way is not to get into updating stats with fullscan or adding filtered stats or super fine tuning parts of it unless you can clearly document what queries are improved by the change and by how much. If you can’t then it becomes difficult for others to support and understand. (Been there!)

So really with the stats question, I would look at things like your top queries in sp_BlitzCache and work through tuning top queries and making them faster, and only worry about the stats as you work through problems and can document why you’re making decisions. Later on that will help someone else (or even you) figure out if that maintenance is choice is still the best one.

Kendra I would like to try and see how fragmentation affects disk reads. For instance if I turn Statstics IO on and run a query would I see changes in reads as fragmentation grows? Would I have to free the cache to do so? Perhaps the DMV sys.dm_io_virtual_file_stats wold be the way to do that? Any idea’s?

Thanks

Oh, I think this might actually make a good post on its own. Here are some quick notes:

If it compacts the pages, you’ll see fewer reads, because there’s simply fewer pages in the index. This is one of the reasons why I think it’s probably good for most folks with active systems to at least periodically run index maintenance, even if some people only need to do it quite rarely.

You can use the avg_page_space_used_in_percent column in sys.dm_db_index_physical_stats to estimate how much space that would be if a large table was scanned.

For queries you can run yourself: Statistics IO will break it down and show you logical and which of those were physical vs readahead.

For a dev system: For sys.dm_io_virtual_file_stats, that’s physical reads, so for testing measuring that way you’d have to run DBCC DROPCLEANBUFFERS (clean pages) and CHECKPOINT (dirty pages). <- Disclaimer for readers, be very careful, don't blow away your buffers in production, that'll impact your performance! For production: My favorite way of looking at this actually asking, "How much space in my memory is wasted in the middle of the day?" (Or whatever your most active time is.) You can see the free space in memory in sys.dm_os_buffer_descriptors. Query you can modify for that easily in BOL here.

Where it gets tricksy: You might actually see fewer reads due to an execution plan change brought on as a side effect, rather than the actual compaction of the pages. This makes showing it with a sample of a lot of queries in a production workload very difficult, unless you’re painstakingly comparing the plans and data hasn’t been coming in.

Sorry to crash the thread, but I was intrigued by “How much space in my memory is wasted in the middle of the day?”

Is it something like this?

SELECT mem_space_wasted_mb = SUM(CONVERT(BIGINT, free_space_in_bytes)) / 1024 / 1024

,mem_space_wasted_percent = SUM(CONVERT(BIGINT, free_space_in_bytes)) * 1.0 / COUNT(*) / 8096

FROM sys.dm_os_buffer_descriptors

Are there any rule-of-thumb numbers for how much these should be, perhaps by type of system? (I’m probably hoping for too much here…)

It sounds to me that it could be perfectly reasonable for a system to “waste” a lot of memory on page free space as part of normal operations (even before taking into consideration cost of the I/O load that index maintenance generates).

Thanks!

One interesting thing is that the wasted space is not entirely from index fragmentation: it might be from heaps (you can get weird trapped space in these), or it might be from having rebuilt a lot of indexes with a LOWER fillfactor.

And you’re totally right that there’s always going to be some of this. When it gets to be around 25% of all the memory on the server, that’s where I start thinking “we can probably do better.” But sometimes index maintenance with a low fillfactor is actually more of a part of the cause than the solution, oddly enough!

Found this too (a bit late 🙂 )

http://www.sqlskills.com/blogs/paul/performance-issues-from-wasted-buffer-pool-memory/

I have been advised by a contracted SQL Server expert that, after any change in # of CPUs and/or available memory, I should reorganize all indexes and then update all statistics or SQL Server will not make the full use of the new resources. The reason given is that the indexes and statistics are partly based on available CPU and memory resources. I have not read that advice anywhere and am looking for a second source before making it part of my practices. Does that advice make sense to you?

Oh, wow, I haven’t heard that one before either!

That advice doesn’t make sense to me. Changing the amount of memory available, the number of CPUs available, or the settings that control those (max server memory, cost threshold for parallelism, max degree of parallelism) can absolutely change your query plans. But as soon as any of those things are changed, SQL Server knows that it has to reconsider all the execution plans: no index maintenance or statistics update is required to trigger that.

The contents or quality of your indexes and statistics shouldn’t vary based on the number of processors or the amount of memory you have. Those things can totally impact how long it takes to rebuild an index, for example, but the quality / contents of the index should be the same at the end either way.

Hope this helps!

Added again coz entered the incorrect email address mistake…

What would be the impact if rebuild Index job stopped?

Using SQL standards edition (64-bit)

Possibly there would be no impact. Possibly queries might perform differently after a while.

Thanks Kendra…

I thought if I run built-in rebuild index from maintenance plan and stop the job (or cancelled) then possibly index get corrupted that in running at the time got stopped.

Possibly queries might perform differently after a while. – is this for all of the queries running for the database after stop the job?

Any impact on table data?

If you never run index maintenance, potentially your tables could become very fragmented and have a lot of empty space in them after a long time. That could change performance, that’s all I’m saying.

Thanks Kendra 🙂 … appreciate quick reply.

Hi,

During online index rebuild, occasionally getting login failed error “Login failed for user ‘XXXX’. Reason: Failed to open the database ‘XXXX’ configured in the login object while revalidating the login on the connection.” And once the error happens, none of the databases in the server is accessible till the index rebuild of the specific index complete and all connections timed out.

This is with SQL Server 2012 SP2 (with latest updates) with Always On and Transactional Replication, index maintenance using Ola’s scripts. Appreciate any advice on troubleshooting.

I would start with our first responder kit checklist to gather more information about what’s going on and assess it: https://www.brentozar.com/responder/

Hello,

I’ve tried rebuilding and or reorganizing indexes on my test machine. I have a ETL process that always runs extremely long the next day after the rebuild(or reorganize).On average it adds 3+ hrs to the process the next day(around 4.5 hrs total).

After the first day it returns to normal time(around 1.5 hrs). I’ve validated there isn’t any locking, blocking or other processes running that could affect it. I’m starting to think its related to the cache plans but I’d like to identify how index rebuilds cause that issue.

Thanks!

Eric – I can’t quite tell if you’ve got a question in here or not, but if you do, check out http://dba.stackexchange.com. Try to give enough details that you’ve got a specific question in there.

I am hoping you are still reading this thread, here is my problem.

I have a converted mainframe application that sadly still using cursors, everywhere! So we have enterprise edition SQL and we are doing all maintenance plans with online, and we try to use reorganize as much as we can. Sorry for the long intro, here is my question… If I am doing “SET LOCK_TIMEOUT 600000; ALTER INDEX [INDEX_NAME] ON [DATABASE].[dbo].[DB_TABLE] REORGANIZE WITH (LOB_COMPACTION = ON)” would that cause “Could not complete cursor operation because the table schema changed after the cursor was declared”.

As we are getting that above error occasionally with the maintenance plans we have setup. Hopefully someone can tell me if reorg’s do technically affect the cursor process and if they do, I will have to do online rebuilds of everything.

Thanks for your help.

Adam – for random comments unrelated to a blog post, your best bet is http://DBA.StackExchange.com.

Hello, thanks for the article, very helpful – read it several times))

But I’ve run into a problem, maybe you could help with a piece of advice.

I have 1.7TB database wich is mirrored in asynchronous mode.

The rebuild job is run against the database every night.

The logic is simple – run select from sys.dm_db_index_physical_stats(db_id, null null etc) into a temp table(it runs for 2 hours!). and if i sum the size of indexes (page_count*8/1024) i get around 1,2 TB of indexes. I guess, that’s beacuse the clustered index is data itself =/

judging on the avg_fragmentation_in_percent value it reorganaizes or rebuilds indexes online row by row.

one morning i came to work and saw, that the hard drive with logs is full. that happened, because of the mirroring. principal transfered all the data from transactional log, but as mirror the mirror hadn’t restored it yet, the principal’s log couldn’t be freed with log backup.

after that i addded a line into a cycle after each index rebuild to check space on hard drive and wait for ten minutes then try again.

then the job took too long. it didn’t fit into single night. online rebuild causes no long lasting locks, but affects performance, so it’s not desired to run it during the day.

then i decided to split the job into 2 parts. one night indexes, that are less than 30GB, second – greater than 30GB. no luck. none of them fited into night.

i guess, the preblem is, as i’ve mentioned, that the select from sys.dm_db_index_physical_stats last for two hours and it runs twice during job – at the begining to form a temp table to maintain indexes, and in the end to from a report to send via email.

I am a bit confused, what are my options. Continue splitting the job into smaller chunks? Or maybe there’s a faster way to collect info on indexes rather than using table-function? Should offline rebuild be faster? Or maybe hardcode all indexes and rebuild them anyway?

Thanks in advance!

Egor – lemme zoom back a little. Why are you doing index rebuilds every night on a 1.7TB database?

Well, that’s what i got from previous DBA. I’ve been on current position for only a couple months, and there is plenty of other stuff to get into.

Soooo, I guess I should rebuild them to zero fragmentation. And then look how fast they get fragmented again?

But anyway, in case of rebuild/reorganaize i spend 4 hours to just get the stats of indexes.

Well, I solved the problem. Maybe it’ll help somebody:

I’ve splitted up all indexes in 4 equal parts (300 GB each). 1 part per night. So all indexes get refreshed every 4 days.

Step by step i check index fragmentation and Judging on it i choose to do rebuild or reorgonize.

In case of rebuild, I make sure that (log disk free space) + (log free space) > (current index size) + (log disk size * 0.1). So that after rebuild I have at least 10% of free space on log disk. Else I skip that index, and throw an alert into notification mail. But, i think it is possible to use WAIT FOR DELAY to let principal send the log and take full backup (if time limits are not the issue), and than try again.

Unicorns –is this site a joke or what….. Can we dispense with the silliness please. It hides the message.

Troll – yes, this entire site is a joke. All of it. Especially this line.

Serious –this reads like a 13 year old wrote it. You guys need to dispense with foolery. A little is fine –but who reads 1000 line posts like this —NO ONE. Cut it down –edit it for content and clarity. Put a joke or two in –but serious DBA don’t ascribe to unicorn references. It’s makes us look like bafoons. Well, not me because I wouldn’t be caught dead acknowledging this junk.

Troll – you’re right. Nobody is going to read this site. Our tens of thousands of subscribers and followers are surely just bots trying to sell us Viagra. I can totally see why you, fnguy4545@yahoo.com, wouldn’t be caught dead acknowledging this junk by doing things like commenting on the blog post.

You’ve convinced me. I’m ending the joke here. We’ll never post again. We surely wouldn’t want to look like bafoons who can’t spell the word buffoon. Thank you so much for turning my life around. Today is gonna be a brand new day!

Oh but his parents where alcoholics.

Your email address will not be published. Required fields are marked *

So if you don’t like someone you’ll post their email? Shame on you. Kim Kardashian has millions of followers — don’t equate followers with intelligence. The two aren’t mutually inclusive.

Wow, lots of trolls out there! I’m sorry 🙁 I love your posts and humor!! Keep it up

btw…Ola’s script is leaving giant indexes severely fragmented – I’m talking 10s of thousands of pages with like 97% fragmentation. I suspect it doesn’t handle partitioned tables but that’s only a guess and I haven’t dug into his code. It picks up the index has an issue but then does reorganize on just one partition leaving the rest fragmented. We are contacting him to see if he knows there appears to be significant bug in the code.

I’m curious how your checking for this fragmentation at the partition level or the entire table and if your using the option with the utility @PartitionLevel=’Y’

The DBA is using the partitionlevel=’Y’ and it is finding the partitioned indexes that are fragmenting but its often only doing just a single partition and then for some reason does it again the next run even though the table hasn’t changed and it also leaves the entire index as showing still up to 99.8% fragmented! But other times it missed the index entirely and other times its doing a single partition when in fact all the partitions need to be done. I’ve noticed no actual pattern to it – sometimes it missed indexes on partitioned tables and other times it misses non-partitioned table indexes it misses. Unfortunately our DBA trusted the scripts (because everyone uses it and trusts it) and didn’t monitor actual fragmentation – I noticed it wasn’t working correctly only after performance problems drove me into researching the cause. btw…we’re on version 2012.

I disagree with Troll…. (sorry dude)

Seriously, thanks for posting this. We’re a small team (at work) and I’ve been looking at our indexes lately and seeing that some have gotten quite fragmented. We aren’t experiencing any performance issues.

We’re not dealing with huge databases (45GBs… and growing steady), but I think it’s likely prudent for us to have some measure of index maintenance in place.

Just trying to find what the best plan of attack is to put around the heavier use times…etc.

I’ve read the whole thing… lot’s of great stuff in the article and in the comments.

An update from the front lines of Troll slaying…or was that goblins? I seem to have trouble telling them apart until my sword glows…

It seems Ola’s script does do its job as advertised after all! The issue seems to be a little thing called running using 0% fill factor which was causing a coolant leak. So batch updates were causing re-fragmentation of the warp coils right away leading me to assume the script was at fault. I don’t know Jim, I’m just a country architect and this is a big database but the good part is Scotty saw the error and is reconfiguring the Ola’s warp coil to see if the ship can get back up to warp speed soon.

And to think it all started with Kendra’s blog that got me asking questions about fill factors…someday you will read this and know how the warp coil explosion was avoided. Is it possible Kendra time travelled back to this point in time just for this very purpose? We may never know.

I thought i am the only dba who does a lot of weed. Seems, i was mistaken…

Great Post.

Thanks for the info!

Hi kendra,

I have 1TB size database .what happens when a index reorg job is running on a table at the same time index rebuild job starts which is going to rebuild the same table index. Does rebuild job supersede index reorg? Appreciate your help on this.

Shetha – why don’t you give it a shot, and see if one blocks the other? That’s one of the neatest ways to learn in SQL Server – try it yourself in your development environment and see what happens, rather than asking someone else to do it for you. Enjoy the journey!

Hi Brent,

In my many years of DBA work, I’ve never seen the following behavior:

In SQL 2014 Express edition (12.0.2269.0), I have a Fragmented index at 54% (4.5M rows) and Stays fragmented at 54% after a rebuild.

The only way I was able to fix the situation was to drop and recreate the index.

Have you ever witnessed a similar behavior? Can it be as simple as upgrading to SP2, although I didn’t find any direct correlation with the SP1 and SP2 release notes?

John – your best bet for questions is to head over to a Q&A site like http://dba.stackexchange.com. That way you can get a rapid answer from the next available person rather than waiting for a single person who might not have had that same experience.

Did that 😉

got some interesting answers.

Not sure I fully understand (trying to reproduce in lab), if you are interested: http://dba.stackexchange.com/questions/145546/fragmented-index-not-defragmenting-after-an-index-rebuild

Thank you for all the great articles

Hi Kendra,

I have a question on index reporg..so basically we have reorg job followed by update statistics job that runs every night and are successfull.But still i see fragmentation > 80% for many small tables.The reorg job is set to run for indexes which have pagecount > 500.Since those are small tables page count is not more than 200.

How do i get the fragmentation reduced?Does this fragmentation really cause slowness to the application?

Heck no.

Sravanthi – for questions, head on over to https://dba.stackexchange.com.

Sure, thanks!

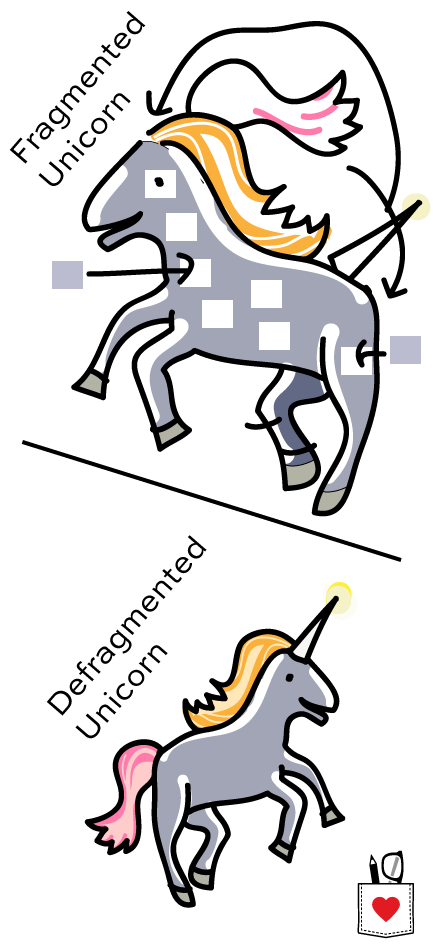

Perfect illustration with the picture. A picture is worth 1000 words! Well done!

[…] Explanation:Reorganize: This option is more lightweight compared to rebuild. It runs through the leaf level of the index, andas it goes it fixes physical ordering of pages and also compacts pages to apply any previously set fillfactorsettings. This operation is always online, and if you cancel it then it’s able to just stop where it is (it doesn’t havea giant operation to rollback). https://www.brentozar.com/archive/2013/09/index-maintenance-sql-server-rebuild-reorganize/ […]

I know this is an older thread but I’ve been doing a whole lot of experimenting with indexes lately…

Yes, it’s true… Reorganize will compact pages according to the FILL FACTOR. You have to be careful how you read that, though. Reorganize will always try to do things within the space allocated. What that means is that it will compact any pages that have a % of page fullness LESS than the FILL FACTOR but, as a whole, it will NOT allocate more space if the average % of page fullness is MORE than the FILL FACTOR. That means that if one of the reasons that you’re trying to do a defrag is to allocate more space according to the Fill Factor to help prevent mid index page splits, Reorganize does nothing for you and the page splits will continue and actually get a whole lot worse as the pages continue to fill over time. In such cases where the average % of page fullness is more than the Fill Factor for the index, then a Reorganize is a total waste of time, CPU, IO, and log file space. You should do a Rebuild instead for cases like these.

I’ll also state that if you have an index with a 100% Fill Factor and it needs to be defragmented, DON’T DO IT UNTIL YOU HAVE DETERMINED WHAT THE FILL FACTOR SHOULD BE! Defragging indexes with a 100% Fill Factor will cause huge numbers of bad page splits as soon as people start inserting new rows and the % of fragmentation will almost immediately jump to over 99%. It’s actually worse than shrinking your database every night.

Hi, Brent Ozar!

Thank you for the great article!

You mentioned that “if you cancel it then it’s able to just stop where it is”. Does it mean: if you stop the reorganize process than you can resume it from the place where you stopped it? Or the next run starts from scratch like you didn’t run “reorganize” before and there is no outcome from the previous “reorganize” operation?

@Nick,

The truth is that if you have something that’s fragmenting, you should probably not be using REORGANIZE on it at all. REORGANIZE doesn’t work the way most people thing it does. It only compresses indexes UP to the Fill Factor. It will never make space above the Fill Factor.

What that means is that REORGANIZE is being used at the worst possible time there is,., when you need free space in the index and you’re removing it, instead,

[…] QUESTION 2You administer a Microsoft SQL Server 2014 database that contains a table named AccountTransaction.You discover that query performance on the table is poor due to fragmentation on theIDX_AccountTransaction_AccountCode non-clustered index. You need to defragment the index. You also need toensure that user queries are able to usethe index during the defragmenting process.Which Transact-SQL batch should you use?A. ALTER INDEX IDX_AccountTransaction_AccountCode ONAccountTransaction.AccountCode REORGANIZEB. ALTER INDEX ALL ON AccountTransaction REBUILDC. ALTER INDEX IDX_AccountTransaction_AccountCode ONAccountTransaction.AccountCode REBUILDD. CREATE INDEX IDXAccountTransactionAccountCode ONAccountTransaction.AccountCode WITH DROPEXISTINGCorrect Answer: AReorganize: This option is more lightweight compared to rebuild. It runs through the leaf level of the index, and as itgoes it fixes physical ordering of pages and also compacts pages to apply any previously set fillfactor settings. Thisoperation is always online, and if you cancel it then it\’s able to just stop where it is (it doesn\’t have a giant operation torollback).References: https://www.brentozar.com/archive/2013/09/index-maintenance-sql-server-rebuild-reorganize/ […]