Great volumes have been written about table partitioning. It’s a complex feature and you can read for days to just understand how you might apply it. But will it improve performance for you? Table partitioning produces great benefits for some applications, but causes giant headaches for others.

How do you know if you should invest your time in table partitioning?

I can help.

SQL Server Table Partitioning: The Basics

Table partitioning allows tables or indexes to be stored in multiple physical sections— a partitioned index is like one large index made up of multiple little indexes. Each chunk, or partition, has the same columns– just a different range of rows. Table partitioning is “transparent”. This means a partitioned heap, clustered index, or non-clustered index can be referenced as a single structure although it’s stored in independent physical partitions. In other words, in theory you don’t need to change any code in the calling applications. (Is this true in reality? More on this later.)

An Textbook Example of Table Partitioning

Contoso Corporation’s Froyo Division has a 2TB database named FroyoReports. Each day, 10 million rows of sales data are loaded into a table named FroyoSales. Contoso Corp has employees worldwide who query the data using SQL Server Reporting Services. Reports are run against FroyoReports 24 x 7, although there is a two hour window each day where there is significantly lighter load. 95% of reports run are against the most recent two months of data, and the DBA team controls and can tune the queries run by each report. The Froyo DBA team needs to maintain only 13 months of data in the FroyoSales table. As a safety precaution, they prefer to keep three additional months of data online, but do not want reports to access the older data.

Life used to be tough for the Froyo DBA team. Each night as data was loaded, reports repeatedly blocked inserts. To help alleviate blocking, some reports were modified to have NOLOCK hints. This meant that sometimes reports contained partial data for the most recent day, which caused problems. Users were never really sure when data was finished loading and when it was safe to run reports. The Froyo DBA team deleted old data on weekends, and that process also had problems. There was additional blocking and deletes slowed performance significantly. To solve these problems, the Froyo team implemented table partitioning. They partitioned the FroyoSales table by date.

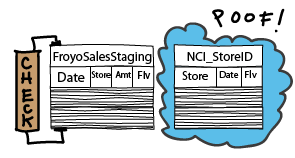

Each night, the Froyo team loads data with an automated process. First, it loads new fact data into a fresh, empty table named FroyoSalesStaging.

Next, it adds indexes and constraints to FroyoSalesStaging so its structure matches Froyo sales.

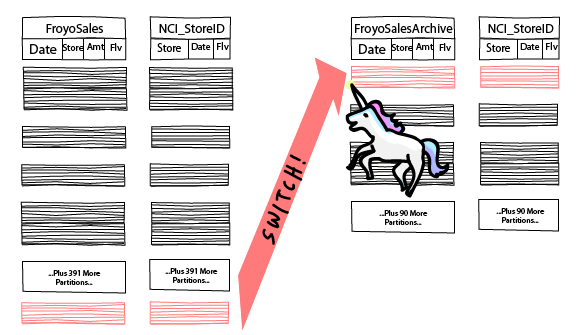

Then, they switch the single partition out of FroyoSalesStaging and into the partitioned table FroyoSales. (This involves a few commands to prepare the metadata for the partitioned table prior to the switch– we’re going for an overview here.) This switch takes a brief moment, then all the new data is visible to users.

The Froyo team has also automated how they remove old data. Each night they switch the oldest day– which is now past their 13 month limit– out from FroyoSales and into a table named FroyoSalesArchive. (Similarly, there’s a few commands to clean up metadata for FroyoSales after the switch out.)

Handy, isn’t it?

The Main Features of Table Partitioning— And the Gotchas

Here are the big attractions for table partitioning, along with the fine print.

SWITCH That Partition

As you can see above, a whole partition can be switched into the table or switched out, allowing for extremely fast loading and removal of large amounts of data. This is, in my opinion, the biggest benefit of partitioning.

There are a couple of gotchas to be aware of. Switching in and switching out partitions can be very fast, but an exclusive lock— Called ‘SCH-M’, or Schema Modification lock— is required. This means you can get blocked from loading or removing data from your table— potentially for a very long time. Also, all of your enabled non-clustered indexes must be “partition aligned” to switch a partition in. This means the partitioning key must be part of each of those indexes. If you need to maintain uniqueness on a set of columns that doesn’t include the partitioning key (which is often the case in OLTP environments), this can pose a problem.

Query Performance on Partitioned Tables: Partition Elimination and Beyond

SQL Server tries to identify when it can use limited parts of a partitioned table. The SQL Server query optimizer may direct a query to only a single partition, multiple partitions, or the whole table. Using fewer partitions than the entire table is called “partition elimination.”

Statistics are maintained for the entire partitioned table or index— you don’t get additional steps in your histogram for each partition. This means that the SQL Server Query optimizer may still have a very hard time knowing how much data is going to be returned by your query, and this difficulty will increase as your table grows. The result may be slow queries.

Queries will perform better when you specify the partitioning key in the criteria (aka the “where clause”). So, although partitioning is “transparent,” for existing applications, query tuning will almost always be required.

Partition Management

Individual partitions may:

- Be rebuilt individually, for clustered and nonclustered indexes alike.

- Be set to read-only, via their filegroup— gives you options to optimize backups

- Live on different disk sub-systems — less frequently accessed data can sit on slow disk. Frequently accessed data can sit on faster disk. All this within the same table! You can move a partition to faster or slower disk online with some virtualization and SAN solutions.

There’s a few things to be aware of:

- You want to be careful about splitting partitions— performance can be very slow.

- In SQL Server 2005 and 2008, individual partitions may be rebuilt offline only. An entire partitioned index may be rebuilt online— but that’s a bummer if your database is 24×7.

- Setting a filegroup to read-only doesn’t eliminate lock management overhead— that’s only true for a read-only database.

Columnstore Indexes and Table Partitioning

Columnstore indexes are a really hot feature in SQL Server 2012. These are columnar indexes optimized for blazing fast performance. Although these indexes will be read-only, partitions may be switched in to columnstore indexes.

When Is a Table Big Enough to Partition?

After covering the basics of table partitioning, this is usually the first question people have: “Is my table big enough?” My response is: “Let’s talk about why you’re interested in table partitioning. What is the problem you’re experiencing?”

Tell Me Where Your Table Hurts

When people investigate table partitioning in SQL Server, usually they’re having a problem scaling up their database. What you are experiencing may take many different forms. The problem can contain one or more of the following:

- “Slow” queries that return small amounts of data

- “Slow” queries that return large amounts of data

- “Slow” loading of data

- Blocking between readers and writers (inserts or updates)

- Long-running index maintenance jobs (or an inability to run them at all because they would take so long)

“Slow” is of course highly relative. Here it means “my users are complaining” or “my webserver is timing out” or “something is failing and paging me in the middle of the night.” Often, the tables in question are being used for a mixture of OLTP activity and reporting activity.

My approach is to talk to the team and find out what the experience of the problem is like. Literally, “What keeps you up at night about this table?”

How Is Your Overall Health?

Prescribing table partitioning is like recommending significant surgery— you don’t want someone to go under the knife unless it’s the best way to make things better. I look at the overall health of the system. Where are the current bottlenecks? What are we waiting on? How healthy are the individual components? How are the queries currently performing, and what do the query plans look like? What patterns are in use in the queries which are running? I also look at the structure of the tables and indexes in the context of the queries.

What Performance Characteristics Do You Need?

How many records do you want to load a day? How many records will you be deleting a day in six months? How many new clients is your business expecting to be bringing on, and what is the estimated impact that will have on reads and writes on your system? The number of expected clients can be tricky to translate to database activity. A SQL Server health check can produce some metrics for current activity that can be used for projections.

How Many Queries Can You Tune?

Whether or not you have the flexibility to tune queries is a big differentiator in how you choose to scale up your application. On the one hand, table partitioning is “transparent” because the name of the partitioned objects doesn’t change. On the other hand, you want to tune queries to get partition elimination and the best possible query plans after you partition— and sometimes you need to get a little creative. The structure of your tables and how queries are currently written will play a huge role if you have a limited (or no) ability to tune queries.

What’s The Best Approach to Scaling Your Application?

Here’s my secret: I don’t answer the question of “Should I use table partitioning?” Instead, I answer the question “What is the best way to scale this application?”

The right approach for your scalability problem may contain table partitioning— perhaps by itself, perhaps in combination with other technologies. In the right application, table partitioning can be truly awesome. But we also may be able to scale your application up in another way— perhaps more cheaply, perhaps more quickly, or perhaps in a way that includes built-in geo-diversity. It all depends on your database health, performance requirements, budget, and flexibility.

Still Interested?

If you got this far and you’re still interested in table partitioning, maybe it’s the right fit for you!

Before you start designing a table partitioning strategy, or if you’re trying to troubleshoot why your partitioned tables aren’t working as fast as you expect, check out our SQL Server table partitioning resources page.

Want help? Talk to Brent for free.

The problem probably isn’t fragmentation – you keep defragmenting and the problems keep coming back.

Our 3-day SQL Critical Care® is a quick, easy process that gets to the root cause of your database health and performance pains.

Learn more, see sample deliverables, and book a free 30-minute call with Brent.

157 Comments. Leave new

There are application implications when a large transcriptional table is partitioned. The unique key is now partitioned align, and unique index calls and foreign key relationships need to include the partitioned aligned column. Even worse, you can no longer guarantee a unique identifier on the table just by itself, unless the unique identifier is the only unique index and is also the partition scheme. However, most partitioning strategies involve date+timestamp in order to exercise sliding window.

I wish SQL Server enhanced their partitioned tables to include Global Indexes, like the ones supported in Oracle for over 10 years now. It would be completely transparent for the application.

For readers who are new to partitioning, I would just add that you can make a unique index which doesn’t include the partition key– but that index is “non-aligned” with the partitioned table.

Having one or more non-aligned indexes enabled on a partitioned table means that swapping partitions in and out no longer works. And that’s one of the biggest features of partitioned tables, so that’s a bummer!

This is where people get into the really hard choices when they implement partitioning on databases that serve an OLTP role, or a mixed OLTP role. I don’t personally think that’s always a bad idea– it really depends on the use case and looking at all the options— but there typically aren’t nearly as many hard choices to make when it comes to reporting/ warehousing style of databases.

I expect to see partitioning usage increase with columnstore indexes becoming available. it’s definitely going to be interesting!

For EDWs and Data Marts the implementation of partitioning is a no-brainer. Staging to table to partition switch-in is one of the best methods of appending data to the facts. Same goes for sliding windows for data archival.

Going back to OLTP, I have come across solutions that loaded in excess of 20 million rows per table per day that were part of well normalized schema. The application was making calls that read any rows of those table for the last N days. Because our backup windows increased and due to the fact that storage was limited, we needed to re-architect to support partitioning on these tables. It was a challenge, but once in place it made data archiving an easy task, and allowed us to tier the storage for Read-Only filegroups/partitions. Backups windows decreased as it was only taking care of Read-Write Filegroups.

Partitioning for column-store indexes are a must IMO. It will allow the DBA to create them on a staging table before switching in into the master table. Columnar-store indexes are not updatable as you already know, and any addition to the master table via DML will require a drop and recreation of the column-store index. Not ideal when you have billions of rows.

Kendra:

Thanks for the good partitioning overview.

Should “The Froyo DBA team needs to maintain only 13 of data in the FroyoSales table.” be “The Froyo DBA team needs to maintain only 13 months of data in the FroyoSales table.”?

It absolutely should! I made the correction. Thanks for letting me know.

how partitioning table can solve read write lock issue you described in “An Textbook Example”, can u be more specific ?

because as far as i know insert operations basiclly don’t block reads(on increasing clustered index), update operations block reads with key lock but same story after partitioning .delete operations wont block reads either if you avoid lock escalation.

Hi Zack,

Partitioning doesn’t change the behavior of isolation levels. The trick is that when you’re loading data into the staging table, none of the reports are reading from it yet– that staging table is completely separate. The only issue with locking you’re going to hit on the partitioned table is when you “switch in”. That requires the schema modification lock, so there could be blocking there– but it is one small, atomic, fast operation.

Hope this helps.

Kendra: Excellent Article, for our oragnization database we were thinking and few discussions whether or not to go for Partitioning, this article helps

Kendra what are the plusses and minuses to using Hash partitioning on OLTP tables with large numbers of inserts on a daily basis?

Hi KC,

This is a great question– and the answer could be considerably lengthy. I think it’ll make a great blog post. To give the “biggest picture” one-size-fits-all-schemas/apps answer, the first thing I would think about is this:

What are the application requirements in terms of reading? When I’m partitioning data, I have to pick a partitioning key. If speed of targeted reads is critical as well, then I will need to have a meaningful key that I can identify quickly and use to do partition eliminations on my reads. So, depending on what I need to do with the data after I write it, I may have a lot to consider there.

Other things to consider are just how many writes you need to do over time. If we’re talking table partitioning alone, we’re talking about tables in the same database. That means we only get one transaction log file, and there’s only ever going to be so much we can do with one log, for example– logs have limits. But before we ever get to that limit, we might hit limits with our storage, with our processor power, etc– all depends on that hardware.

So, long story short, things like this are why I like to step back and say, “What are our eventual goals?” and really dig into what the application needs to do and work from that direction. I might end up at a solution involving table partitioning, but I might end up with other designs as well.

Thanks for the great comment– that’s a really rich question and considerations for pros and cons there will make a great post. Stay tuned.

Hi Kendra,

You said in your article that “You want to be careful about splitting partitions— performance can be very slow.” That is definitely true and I find myself confronted with this problem, having to extract one year worth of data from a yearly partitioned table that hasn’t been maintain (and now the last partition contains 3 years instead of one). Is there anything that can be done to speed up this process? Would shrinking and / or reorganizing the filegroup before starting the split help? How about compressing the partition before the split?

Thanks

How about dribbling the rows out, say 1 or 2% a day? If the table hasn’t been maintained for 2 years, another month or two shouldn’t kill you.

Kendra, do you know about cheaper alternatives for partitioning other then upgrading to the expensive Enterprise Edition?

I hear about Rewius (www.rewius.com) which claim to provide partitioning on Standard Edition database, Do you have experience with their software?

I did this once very effectively in sql 2000 simply by creating an “archive” table. I copied the last 90 days worth of data into a new table with the same structure, then I renamed both tables, the old one was now called DataArchive, and the new one renamed DataCurrent. Then I just had sql agent move the daily records every night into the archive. The queries had to be modified to query current and archive records with a UNION, but I wrote SQL stored procedures to handle it automatically. worked great for a long time.

Clark – you can make your life even easier by checking out partitioned views.

Performance is very important with any application.If your database tables have millions of records then a simple SQL query will take 3-4 mins.but ideal time for a query should be at max 5 sec. Now the Question comes “How can improve performance with large databases.“

See this article http://techathon.mytechlabs.com/performance-tuning-while-working-with-large-database/

It’s funny– performance is important with the databases we get called in to look at, but there’s lots of databases where it’s not a big deal. I also find that a few million rows is pretty small change these days!

Table partitioning is best way to improve database performance with large databases.If your table contain millions of records then this is highly recommended you should use partitioning.In this article, I will explain what is partitioning and how can implement partitioning with database.

see: http://techathon.mytechlabs.com/table-partitioning-with-database/

I can’t disagree with your leading sentence more. Table partitioning can also be the best way to DESTROY application performance in large databases. Please keep that in mind.

Hello Kendra,

Could you tell us a bit more about how partitioning could destroy application performance in some cases. Do you mean by that too much partitioning ?

Thank you.

Hi,

I mean just because of the partitioning itself. Table partitioning is a pretty complicated thing for SQL Server to handle and it changes query optimization and join strategies.

Check out this blog post by Paul White one some query issues involving a partitioned table. This is just one example, but it shows how complex it can be: http://sqlblog.com/blogs/paul_white/archive/2013/06/17/improving-partitioned-table-join-performance.aspx

The worst situation is when people don’t expect that partitioning can hurt performance. They implement it, then application performance gets slow. People then have the task of figuring out if the table partitioning is the cause of the performance problem (in part, or in whole), or is just a bystander, and it’s a very tough situation. So definitely tread with care.

Kendra

Ok so i understand that theory is not enough is table partitioning. Execution plan analysis and maybe some modifications in the queries are required to reach the best performance.

Thanks.

Yes, exactly! Here’s another interesting example– it’s a Connect Item called “Partition Table using min/max functions and Top N – Index selection and performance”. The workarounds tab gives some examples of the kinds of rewrites that might be needed.

http://connect.microsoft.com/SQLServer/feedback/details/240968/partition-table-using-min-max-functions-and-top-n-index-selection-and-performance

Know thy queries helps when deciding how to set up partitions. If we don’t know what the queries are going to be, it’s maybe better to hold off on partitions until we do. If the queries are really unpredictable, maybe try something else. When done right and in the right situation, partitions are awesome.

I agree with Kendra. Contrary to what many believe, Table Partitioning is absolutely NOT the best way to improve database performance unless each and every query is setup to take advantage of the partitioning column. For example, if you’ve partitioned an audit table by month on the “DateCreated” column, then the only way that a query can take advantage of the partitioning is if the WHERE clause has a date range criteria for the DateCreated column. If you’re searching for something else, then you’ll be searching across all months and partitioning isn’t going to help at all. Instead, you need a good index to support the query and you need well written code that’s actually capable of using the index properly just as if the table weren’t partitioned.

To wit, partitioning is hardly ever done for reasons of performance of code. Rather, partitioning is done to ease backup requirements (especially if static partitions are set to ReadOnly), allow for “Piecemeal Restores”, and to make index maintenance take less time and require fewer resources because you can rebuild/reorg the indexes by partition and then only on those partitions that actually need it.

If you want better performance, design better tables, design better indexes, and most importantly, write better code. Partitioning alone just isn’t going to do it for you and then it’ll help only in very specific cases depending on the partitioning column you’ve chose.

Sorry, one thing to add.

Partitioning can help performance by spreading the load out across multiple disks. Anytime you can get more spindles and their separate read-write heads involved, you will usually see some performance improvement. That doesn’t happen very much, nowadays, because a lot of SANs recommend that you let the SAN handle such things. Even on SANS where you can assign logical disks to particular physical disks, most SAN manufacturers recommend that the SAN will be able to do a better job than such manual setups would do or the SAN administrators just don’t want to do such a thing.

As a bit of a sidebar, it’s almost a shame that hard disks have gotten so large because you used to be able to get a whole lot more spindles/RW heads involved than you can today.

You guys talk about partitioning a data warehouse fact table and using partition switch to load data into it. Almost always fact tables are loaded daily in a datawarehouse. Is that common to partition the fact table by day to be able to using switching?

Yep, a day is a common choice for the partitioning ‘grain’. The version of SQL Server will impact the number of partitions you can have at a given time. Originally only 1,000 partitions were allowed in a partitioned object.

As of SQL Server 2008 SP2 / SQL Server 2008 R2 SP1 and higher versions, there is support for 15,000 partitions per object, but it needs to be enabled.

So basically, yes, daily is common, but if you need to keep more than 1,000 days then you may require some special configuration. (Although of course that could be true at other grains as well.) More info is here: http://msdn.microsoft.com/en-us/library/gg981694.aspx

For a multi fact table data warehouse, if each partition is stored in its own file group with at least one file, wouldn’t that create thousands of data files for the database? It sounds kind of scary 🙂

If you followed that recipe, you could indeed end up with thousands of data files– and in turn that could create some very slow startup times for your database.

You have options about where you want to put your partitions. Many partitions can be mapped to the same filegroup (and a filegroup can have one or many files). You could go to the other extreme and have thousands of partitions all on the primary filegroup on a single file, too. All those partitions could be from one or more partitioned objects.

The “partition scheme” is where you map out how the partitions are laid out on filegroups. And it can certainly be tricky to figure out what’s going to perform best in an environment, depending on how the partitions are used and what kinds of storage you have available.

You can have more than one object share a partition scheme, by the way– it’s pretty flexible which is great but it makes the choices complex!

[Edit: added a couple more details about all the options]

You mentioned that having many file groups/data files will impact database start up. Is that the only disadvantage? What are some of the advantages of having multiple file groups when doing partitioning or even having one file group per partition. I say that because most examples I have come across, the table is partitioned by month and each month is stored in its own file group. The one advantage I see in that is the fact that you could make older file groups read-only and reduce backup times. Is that the only advantage?

Storage cost and performance also factor in. Often only the more recent portions of data are queried regularly — ideally I’ll keep those on faster storage. Much older data may need to be online, but if storage costs are an issue I might want to keep that on cheaper, slower storage.

Depending on what type of storage is in play, getting this all to work without having lots of downtime can be tricky, but there are some cool tricks if you’re using a SAN or virtualization. (And sometimes having a bit of downtime on parts of the data to get this done is perfectly fine, too.)

Filegroups also allow more benefits/options when it comes to backup and restore. If my older partitions are not actively being written to, I can mark their filegroups read-only and then back them up more infrequently– thereby reducing space, time and resources needed for backups.

All of this stuff adds complexity on the DBA side to make sure that you’re still getting the perf you need and that backups are being done properly. But it can be very cool.

Edit: I should say that the startup time issue with lots of files does take a bit of work. We’re not talking about 20 files causing that issue. On most production sized boxes it’s going to be thousands of files (across all the databases). But it can definitely happen.

Thanks for the quick reply Kendra, much appreciated.

So basically, the solution will really depend on specific requriments. Here is what we are looking at 🙂

– We have multiple large fact tables in our data warehouse (over 100 GB each)

– We need to apply a retention policy and drop/archive old data

– We load fact tables daily

– The retention could be applied monthly or quuarterly

– We are hoping to reduce the backup window and index management tasks

at a high level what sort of design would you recommend in terms of partition grain and number of filegroups/files. Based on your previous comments I was thinking of partitioing by day to improve loading of fact tables and creating monthly file groups for each fact tables so that older file groups could be marked as read-only therefore helping the backup process. In terms of retention, if it is done every month, we would need to switch all daily partition within that historical month to staging tables and drop/move them.

There’s just so much that goes into it that there’s no way I can make a recommendation based on a conversation or blog comments. I would want to start off before the assumption that table partitioning is the right fit here– there might be a different schema option that could work and be better for licensing and support.

I certainly don’t mean to blow off your question in any way. This kind of architectural recommendation is something that typically takes a multi-day engagement to make because of all the factors that are involved.

No problem. Thanks anyways!

Hi Kendra,

One general question about file groups when table partitioning is implemented: is it common to create/drop file groups and files dynamically using scripts which maintain table partitions? In other words are file groups normally pre-created and maintained when needed or dynamically created and dropped by scheduled scripts when new data comes in?

Most people pre-create a certain amount of partitions, and have an automated process that regularly checks and pre-creates more. That way if for some reason the process doesn’t run for a while you don’t end up with a bunch of data in the wrong place.

Where have you posted your helper functions for partitioning? They look very useful.

Hi Jim!

Glad the views look good!

Those will get posted with the video from today’s webcast. Look for them later this week. (I’m my own Audio Visual team and processing the video takes a little time.)

I’ll link everything up from our master partitioning page https://www.brentozar.com/go/partitioning when that goes live, too.

Edit: I originally wrote “functions” instead of “views”. Maybe that coffee was TOO good this morning.

Hey Kendra,

Nice blog, but I got a question for you.

Would you start with partitions in place if you know that your data is going to grow faster than you can respond?

The system I am working on has the potential to grow into the Terabyte size in less than a year.

It is a Mulit-Tennant system where the main partition key would be the “CompanyID”. That would then exend into customer and orders etc. And this is a transactional system.

Just wonder what you think of this situation?

Thanks!

David

If your partition ID is CompanyID and you say it’s a transactional system, I’m guessing that you’re not implementing table partitioning for switching (loading data externally and moving in a whole partition quickly / moving a whole partition out quickly).

If this is the case and you’re trying to partition for performance, I would step back and examine if it’s really the best fit for you architecturally. There may be other designs that accomplish your goals without making performance tuning so difficult over time. I would factor in not only application performance, but also RPO, RTO, and scalability across servers/licensing over time into the decision. There are lots of other options, many of which don’t lock you into EE on every SQL Server instance (including DR), and which might address your problems.

But I absolutely agree that if you know data is going to grow fast, it’s important to ask these questions early on! I would just recommend evaluating lots of different options than table partitioning since it’s such a tricky beast for performance in OLTP.

Hi Kendra,

Thanks for the response. I realize there are other methods. I am more in favor of distributing the data via filegroups and better indexing. The reason to look a partitioning is that the may be up tp 8,000 concurrent users from 15000 companies. Each of those companies may have large volumes of data in key tables. And we are tied to a response time SLA to populate the screen content.

This is a Medical office type application on the cloud. Something like the appointment table can have hundreds of million rows. Other tables are as large or even larger.

My original plan was to have the application read from a Data Mart and have all transactions pass through a separate OLTP process server that would valifdate the transaction and then pump it into the data mart.

Then the idea came up for partitioning the tables for faster select perfomance from the data mart came into the discussion. I still am not convinced, as you aren’t, that we should partition, but I have to chase the idea down and see.

While we are currently still on SQL Server 2008 R2, it occurs to me that moving to 2012 with the in-memory capability would be a better solution. But maybe not.

David

Hi Kendra,

I have a database that is taking up approximately 66% of space on a network drive that also hosts other databases. The culprit is one table which is currently 40GB in size. I was considering table partitioning as a solution to archive off some of this data onto a different file group with more space available. The table is primarily used for reads. The data is to be retained, so I don’t really have the option of purging historical records. If I have understood things correctly, am I correct in saying table partitioning would be a reasonable solution in this instance?

Thanks.

I’d step back and take a really hard look at what problems you’re trying to solve– whether it’s performance, backup and restore, or manageability. There are ways to solve problems in all of these areas without using table partitioning that are much simpler to manage in the long term than table partitioning– and also don’t necessarily come with the Enterprise Edition requirement. I’d definitely evaluate all the options, especially for a table as small as 40GB. That’s not very large by modern standards, really.

Thanks for your prompt response Kendra. The problem I am trying to resolve is to find a way of managing the growth of a database, which is taking up a lot of space on our existing server. Not worried at all about performance, back up/restores etc at this current stage. We have Enterprise Edition in our Live environment, which is why I was initially considering this option. Do you mind suggesting any alternative as opposed to the perceived “sledge hammer” approach I was considering? Thanks again.

Sure. If the database is important and I wanted consistent performance, I’d consider moving the whole thing to alternate storage. I’d also look hard at the table and indexes and identify how much of that space may be in unused or duplicate nonclustered indexes— there might be a way to reduce the space significantly just by adjusting your indexing.

You do have the ability to move entire tables or indexes to a new filegroup without partitioning them. Just be careful mixing storage sources if you need to ensure consistent performance. That holds true whether or not you’re using partitioning, of course.

If you’re open to EE features, you could also look at data and row compression. Like Table Partitioning, using these features means that you can’t restore a backup to a Standard Edition instance, which can sometimes impact DR, so just include that in your planning if any of the EE features turn out to be the best solution.

Excellent!! Thanks for those recommendations. Will use you sp_BlitzIndex to check now! 🙂

Can you do online rebuilds of individual partitions in SQL Server 2012?

This is a great question. It’s a great question partly because I’m pretty sure this isn’t answered in Books Online anymore (I looked and couldn’t find it in the likely places), and I actually thought this feature had made it into 2012. I was sure I’d tested it, and it worked. But I was wrong.

In a test database on SQL Server 2012 against a partitioned table named dbo.OrdersDaily, this command:

alter index PKOrdersDaily on dbo.OrdersDaily REBUILD partition=5 with (online=on);

Returns:

Msg 155, Level 15, State 1, Line 1

‘online’ is not a recognized ALTER INDEX REBUILD PARTITION option.

Online rebuild works for the entire index, offline rebuild works for the partition.

The good news is this is promised for 2014 RTM: http://blogs.technet.com/b/dataplatforminsider/archive/2013/08/16/improved-application-availability-during-online-operations-in-sql-server-2014.aspx

Thanks for your answer, Kendra. I had looked in the Books Online and was unable to find anything either.

Hi Kendra,

If this is the case then there is no use of partioning indexes.

I m having an application where on daily basis 3 GB of data gets inserted.Also in one table we are having more than 130+ cr of records.should i partition these tables as data gets inserted 24* 7 in these tables.

I’m not sure what you mean by “if this is the case”. There are certainly great uses for table partitioning– it’s just not a magical performance enhancer that works no matter how your application is written. It *can* be used to solve real problems when it’s a good fit for the problems and the right steps are taken to implement the feature.

There’s no way that I or anyone else can tell you if partitioning would be beneficial for your app based on 50 words in a blog comment. It’s not “always good” or “always bad” or “definitely helpful” based on any specific amount of data sizes or rowcounts.

I just ran into a very interesting situation upgrading from SQL 2005 to SQL2008R2 with a partitioned table. A query I wrote to test the new servers performance brought the 2008 server to its knees while the 2005 server handled it fine. As it turned out it appears to be in the way 2008 handled the query with the partitioning. 2005 Scanned each partition one at a time then put it back together wile 2008 processed it as one item. This was over my head I had no idea but someone gave me a hand with it as I was not sure at all what was going on.

http://technet.microsoft.com/en-us/library/ms345599%28v=sql.105%29.aspx

Hi Kendra,

I have a requirement to load a very large flat file into the SQL Server tables. I am considering the technique to load the data into smaller temp tables and then partition switching them into the main table. My query is how would the SSIS (or any other tool) know what data to load into a particular temp table. For ex., if I create a temp table in a filegroup only to hold customers from ID 1000 to 2000, then how can I make sure that one instance of SSIS will load only that data into the table while other instances are loading data to other partitions?

Thanks

SSIS won’t know that, actually. That’s up to you to code.

Hi there,

When you create a staging table, it has an independent name. You have to code whatever application that’s loading data to know the proper name of the staging table, and also code any validation checks to make sure that data is going to the right place.

Kendra

Hi Kendra & Brent,

Thanks for the response. My problem is we have a single very large file with all of the data. Now if I have to create temporary staging tables to hold different parts of the data (based on the partition scheme I choose) I would also like to run the SSIS to load all the temp tables in parallel. This means I have to use a conditional split so a particular instance loads only that data which should go into the temp table it is hitting. Is this not a performance hit? Is there a better way to handle this?

Thanks

Hey there,

Terminology may be holding us up. You can take a big heap and move it onto a partition scheme by creating a clustered index on the table on the partition scheme. This can be useful for initially creating a partitioned object. You could also get really creative and do this when loading data and switch partitions from table to table.

Now, whether this is advisable or not due to performance is something we’re not going to be able to cover in blog comments– and the same for which way to load the data is best. It’s just way out of scope for what we can do here.

Kendra

Great article! Thank you!

We are thinking of implementing a three-tier storage solution for an OLTP table that currently has over 2.2 billion records and over 1 TB of data. Today’s transactions would go into non-partitioned tblTranToday housed in 1.ndf on a RAID 10 SSD-based SAN LUN. The next 18-21 months would go into tblTransCurrent and be housed in 2.ndf on a RAID 10 HDD-based SAN LUN. The next 4*M quarters + N years would go into tblTransArchive and be housed in 3.ndf on less expensive RAID 50 HDD-based SAN LUN. All three ndf’s would be in the same secondary file group. In the early morning, the previous day’s transactions would be moved (via inserts and deletes in batched transactions) to tblTransCurrent. On the first day of each month, a job would merge the daily partitions of the prior month. Every three months, the job would also merge the oldest three months into the prior quarter’s partition, and switch it to tblTransArchive.

My question (at last) is: Will this quarterly switch work across files in different LUNs? They are in the same file group, per requirements, but I don’t see how the switch can occur via meta-data alone, since the data is in physically different LUNs.

Hi Jeff,

You are exactly right– for partitioning switching, source and target must be on the same filegroup! A good little summary of the rules is here: http://technet.microsoft.com/en-us/library/ms191160(v=sql.105).aspx

For the portions of the data on SAN storage, it’s theoretically possible that pieces of data could be migrated at the SAN level from faster storage to slower storage. However, in reality that requires a huge amount of coordination between multiple teams and is tricky to automate– so it’s not something that I find people are really able to do as a regular process.

There is also no shortcut to what you’re trying to accomplish with backing up and restoring filegroups– it just doesn’t work that way.

This is very big picture, but in your situation it’d be worth evaluating whether you can put the archive data into a separate database (read-only when not being updated, and in the simple recovery model) with its own backup plan, and potentially work partitioned views into the mix to keep individual tables reasonably small.

Kendra

Hi Kendra,

Thank you for your prompt response!

I realize now that what I was trying to do simply won’t work. I had forgotten how files within a filegroup work. You cannot specify that the hot table goes exclusively on the ndf on the fast storage and the cold tables on the ndf on the slow storage. It just doesn’t work that way. Essentially the multiple files in a file group allow you to make a poor man’s striped RAID. If I had an ndf in each of three physical storage devices, my three tables would be spread across the three storage devices, which of course is not the trick I had intended. But I think that even if it were possible to store entire tables in their own ndf’s and put all the ndf’s in a single filegroup, it wouldn’t make sense to quickly switch partitions between files on different storage devices.

Hi Kendra,

We are planning to implement the partition in our application.

In the application we have related data stored in multiple tables (around 7 tables) all the tables are having running number as clustered index.We are planning to create a new column called Partition Id (1,2,3,4,5 etc) in all the related tables , will create partition tables based on that partition id and that the related tables will also have the same partition ids so that we can fetch all the related data from same partition table.

Our application will have more than 25-30 concurrent insert/update/delete on a same table.Currently all our Select/update and delete logic are based on the clustered index. If we remove the clustered index from the running number(which is our current PK) and make it as the new column (partition id) will it impact the performance.Will it lock the tables and give timeouts/deadlocks errors?

Hi Sagesh. Why not give it a shot in your development environment? We’re huge fans of testing out things like this so you can learn how it works first-hand. That way you’ll see the exact execution plans for your own database schemas.

Thanks Brent for your quick reply.

I have solved the issue by adding Partition column into my existing clustered index.

Previously execution plan was showing “Key lookup” for the partition column after adding the column to the clustered index now it’s coming with clustered index seek.

Hi Sagesh,

Definitely test things out as Brent said. I’d recommend starting with a prototype and then doing a full sized test before you ever hit production (minimum).

One piece of information that should be helpful to you: Your clustering key must contain the partitioning key, but it does not need to be identical to it (or even lead with it).

Nobody can tell you in a blog comment what will perform well and won’t suffer deadlocks based on a short description, though– you’ll have to work with it.

Best of luck!

Kendra

What I have seen is a massive performance hit with the partitioned tables I have been testing. For instance if I select top 100 [columns ] from table where id > 22222. order by recorddate Because the table is not partitioned by the date but by the id, it does a massive costly sort to put it all back together from the partitions. Recorddate is indexed but by partition Recorddate..

Yep, I’m not surprised. Table partitioning doesn’t make queries faster, and it makes them harder to tune.

This connect bug proves that it’s not just you: http://connect.microsoft.com/SQLServer/feedback/details/240968/partition-table-using-min-max-functions-and-top-n-index-selection-and-performance

Hi Kendra,

I’m surprised at your statement above that partitioning doesn’t make queries faster. I thought it made some faster and some slower.

Consider the common case of an unpartitioned table (ID, Date, colX, colY) clustered on an identity PK (ID) If it is later partitioned on Date, clustered on Date and ID (for uniqueness), with a NC PK on (ID, Date), then queries filtered on Date can be much faster due to partition elimination. Queries filtered on ID will be a little slower since the DBMS first uses the PK to get the Date and then goes to the clustered index to get the remainder of the data (colX and colY).

My testing seems consistent with this reasoning. I’m getting logical reads 5 – 30% of unpartitioned logical reads when queries are filtered on Date. Isn’t this what you’d expect?

If you’re not partitioning, you can tune indexes to achieve as good or better query performance– and it’s MUCH simpler because you don’t have to worry about the aligned/non-aligned index mess with partitioning.

Never partition to make queries faster. Sometimes you can get partition elimination to work for you, but even getting that to work is often a struggle.

The feature is GREAT for batch data loads / removals. Don’t get me wrong. But query performance and index tuning ain’t that much fun with it.

Yes it may make some faster and some slower and imagine what it does to my 130GB (data not counting indexes) table when it forces a full scan. Try building IO to support that.

— I have partition table A (it is partition by each day). It has columnstore index.360 day partition

— I have partition table A_staging with live data updates. 5 day worth of data(day 361, 362, …)

— I have UNIOUN ALL view for above 2 table. So BI can do their query.

Question:

While live data coming to staging table can I create columnstore index on only day 361 partition and switch into table A ?

if not how can you resolve this problem?

is it advisable to create different filegroup for each partition? each partition has atleat 150 GB of data.

is it easier to backup data if it is in different file group for each partition?

Dimple – troubleshooting column store and partitioning is kinda beyond what we can do here on the blog. You’ll want personalized custom advice on things like that, and that’s where our consulting comes in. For help, click on Contact at the top of the site. Thanks!

Kendra,

When the partitioning is in process fora table, can DML operation be performed on that table in parallel?

Thanks.

Hi there,

Switching a partition in or out requires a schema modification lock– and that means nobody else can party with the table.

If you’re implementing partitioning on an existing object, whether or not it was online for read queries would depend on a lot of factors– but it’s not going to allow any other schema modifications while that’s going on.

Hope this helps!

Kendra

Thanks for the quick response.

Per my understanding, if ONLINE = ON is set, then read operations can be performed when partitioning is in progress but insert/update/delete cannot be performed. Is this correct? Also, does it hold true for both 2008 R2 and 2012 both?

No problems. Quick terminology check– what do you mean by “partitioning is in progress”? It’s not clear to me exactly what you’re asking.

I meant when split, switch or merge operations are in process.

Got it. All of those operations will require a schema modification lock at some point– in the case of switch it will need a schema modification lock on the source and target tables. Schema modification (SCH-M) locks are exclusive, and no other operations can happen while they’re ongoing. (Read, write, other mods, nothing.)

Related fact: Even an “online” index rebuild (disregarding partitioning altogether) needs an SCH-M lock at the very end of the operation.

There are some improvements in SQL Server 2014 as to what operations are online and how you can handle the blocking related to SCH-M locks, but the SCH-M locks are still required. More info on that is here: http://blogs.technet.com/b/dataplatforminsider/archive/2013/08/16/improved-application-availability-during-online-operations-in-sql-server-2014.aspx

Thanks for a thorough explanation.

I am planning to automate all the partitioning related activities by using scheduled jobs. However, if an application is accessing (reads or writes) a table to be partitioned then in this case partitioning related operations (split, switch, and merge) won’t succeed (because exclusive lock cannot be obtained). Is that true? If yes, then what is the best way to coordinate partitioning window with the applications to allow the partitioning related maintenance jobs to complete sucessfully?

You’re very welcome.

There’s no perfect built-in solution to the problem– that’s why they added those features in SQL Server 2014 in the article I linked to. (Even those aren’t perfect, but they’re an improvement!) You have to code your operations so that you can detect problems as best you can and react to them if there’s extensive blocking when you’re trying to work the sliding window.

Typically with adding new empty partitions and switching things in, you can make it very fast once you get the exclusive lock. But if there’s very longrunning transactions, even if they’re using nolock, you could be blocked for long periods. So your code has to handle it. (Lock timeout and deadlock priority are tools you can use.)

Not to add extra work, but just because it’s worth doing: I always recommend adding extra code to make sure that the partitions splits and merges you automate are touching empty partitions and aren’t going to trigger slow, painful data movement, by the way. There’s nothing worse than hitting a bug in your code and having it cause a really big ugly slow operation.

I agree. I prefer to keep empty partitions at both ends to avoid data movement as much as possible.

I will come up with a strategy to keep applications informed of when the partitioning jobs are scheduled to run so that data can be cached for that duration in application and for end user, system still appear be be online :).

Thanks again.

I am a DBA looking after a team working on GIS data and I found some good questions and answers.

Hi,

I work on a system where we receive & manipulate a lot of files and deliver to the other systems.

Now this system is being re-used for another product and inorder to avoid dependencies of file delays on the products, table partitioning cocept has come into picture. Is there any other way to handle the same.

If so , please let me know.

It’s very difficult to recommend (or not recommend) table partitioning based on what someone can describe in a blog comment– it’s just much more complicated than that.

Completely unrelated to table partitioning: in general, it’s better to not store files inside the database, but instead to store pointers to the files.

Hallo,

Could or should this be used in data archiving?

I have a database that imports a large ammount of insurance data into a set of related tables. I will need to archive this data. In an ideal world archiving would be simply detaching the ndf file. And Restoring an archive would be simply attaching the ndf file. Is this an advisable approach?

Thanks for a great article…

Nigel…

There’s no supported way to back up or detach a single file or filegroup and restore it to a different database (or a previously restored database that’s been brought online and modified). I really wish that worked!

Kendra,

Nice article, good overview.

However your text about the fact that statistics are maintained for the entire partitioned table or index is not 100% accurate anymore with the introduction of incremental statistics in SQL2014 if I’m right.

I can totally see why you’d think that. Even with incremental statistics, the entire histogram still only gets 200 steps. Sorry to burst your bubble on that one. Ben Nevarez has a good article on that feature here: http://sqlperformance.com/2014/02/sql-statistics/2014-incremental-statistics

Hi Kendra,

Thanks for sharing this article.

We have 8-10 tables which contains 4-6 years of data and application only uses 12-14 recent months of data. Tables are huge and basically considrered as history tables. Backup and restores are huge (in TB) as a result. How can table partioniong help in this scenario?

It might be able to help backups if they helped you use read only filegroups for large parts of the data – for more info on that see Brent’s video here: https://www.brentozar.com/archive/2011/12/sql-server-storage-files-filegroups-video/

Restores are still going to be rough with filegroups.

Partitioned views might allow you to move some of the historical database to another database on the instance, and back it up/restore it separately.

Hi Kendra,

I want to use table partitioning on daily basis with transnational replication. My first thought was to

create a daily job to create partition and file group for the following day but according to MSDN:

ALTER PARTITION FUNCTION is not replicated. I came up with two solutions and I wanted your input:

1. Create in advance a lot of partitions/file groups

2. Create 2 jobs. one for the publisher and one for the subscriber which will create the objects. My only fear here is what will happen if the job will fail to run on the subscriber. In this case if I’m not mistaken the replication will fail since the tables won’t match

Hi there,

Both options have some risk– even if you create a lot of partitions/filegroups in advance, eventually you could run out. And it’s bound to happen right when someone forgets that it’s even an issue 🙂

Combining replication and partitioning is definitely tricky. It kind of makes sense that it’s complicated because they support having different partition schemes on publisher and subscriber (or even no partitioning on the subscriber, so you can replicate to Standard Edition).

My biggest piece of advice would be to have your jobs that manage the partitioning include a lot of checks for both the publisher and the subscriber to make sure that everything is in the right state before it proceeds. It means a lot of coding and extra testing, but because there’s no perfect option, it ends up being needed.

Kendra when your switching out these partitions and I assume you drop your constraints what about all the related data to these records? What do people do with that ?

Oh, it’s easier than you think. You don’t have to drop foreign key constraints for switching.

Here are the rules: “Source and target tables must have the same FOREIGN KEY constraints. If the target table has any FOREIGN KEY constraints, the source table must have the same foreign keys defined on the corresponding columns, and these foreign keys must reference the same primary key as those of the target table.” … https://technet.microsoft.com/en-us/library/ms191160%28v=sql.105%29.aspx

Thanks for that info and link!!

Thanks, nice post

I found a new feature that is called Reference Partition on Oracle 11g. Using reference partitioning, a child table can inherit the partitioning characteristics from a parent table. but i cannot find like this in SQL Server 2014

Is there an equivalent feature in SQL Server?

Can I switch out partition tables with reference partitioning from parent table to child table (table A ,B,C) ?

Thank you very much

Thanks for this extremely super useful article, yet I have a question please 🙂

I’m working in a company that can’t afford the Enterprise edition, and we have a very big table (at least this is how I see it) contains 28 million rows with around 20 columns. Querying that table joined with another couple of tables is really hectic; so I was searching the net to find a way to manually mimic SQL Server table partitioning, and I found the following:

https://www.simple-talk.com/sql/sql-tools/sql-server-partitioning-without-enterprise-edition/

Although that is a solution, I’m wondering if that is an efficient solution, what do you think? I trust your opinion the most 🙂

Hi Abu,

The simpletalk article talks through partitioned views. Those can still work well, and they do work with standard edition. The article mentions one limitation with identity columns– there are some other limitations to read up on – Kyle just linked to a books online below this comment to check out, and also mentioned some query considerations.

Frequently if tables *are* suited for partitioning, using partitioned views can be really desirable even if you have enterprise edition (sometimes in combination with partitioned tables). The member tables of a partitioned view can have different columns, so if you have a large fact table, for example, and older years don’t have a column that was added more recently, that’s OK. You can also use different nonclustered indexes on the member tables of partitioned views.

So overall, still a very relevant feature!

This would be your best way if you have a good column for the constraint.

https://technet.microsoft.com/en-US/library/ms190019(v=SQL.105).aspx

I really don’t think your going to gain much from partitioning unless that column for the constraint is used in most queries. Your developers need to understand it is a well and my experience has been this is a big issue.

Hi.

Bit a noob question but I’m struggling with it slightly in my head (someone else asked me today). What happens if you update the clustered aligned index to a value for to a different partition on different disk.

Presumably if the index doesn’t need to re-balance then it will stay where it is until you rebuild the index?

Not sure why you’d do that on mass in large partitioned table! Probably not a case for partitioning or would need to rebuild the affected partition aligned indexes.

Not sure exactly what you’re asking because the wording is a bit confusing– are you asking if you can move a whole partition from one logical drive to a different one as a maintenance activity?

Or are you asking what happens if you update the partitioning key on a single row so it now belongs in a different partition?

Sorry multi tasking.

Say the table has datekey and it’s clustered index partition aligned by year. If I update datekey for 1 row from 20150101 to be 20141201 and 2015 and 2014 are partitioned onto 2 different filegroups what happens to that row? does it get moved in the update or just when the partitions are rebuilt / re-indexed?

Ah, I see. If you update the partitioning key and the row belongs in a different partition, it has to physically move the row immediately. This is processed internally as a delete/ insert.

Here’s a longer post on it: http://blogs.msdn.com/b/wesleyb/archive/2008/10/09/what-happens-when-i-update-my-partitioning-key.aspx

cool thanks. That was my first intuition but wasn’t sure if it just left it until the B-tree had to reorganise.

Hey is it Possible to create partition Quarterly for year if there is no Date Column on the table?

You have to have a column to partition on. Technically, some people do it without a date column, but in those cases they have an int column that’s a surrogate for a date– and I suspect that’s not what you’re asking about.

Thank you Kendra

was just wondering if there is way. so i cant make partition on table for using date if there is no date or surrogate key available. right ?

Correct. Think about it this way. You must choose one column as the partitioning column. In this case, what would you choose?

This is the first time i m trying Partition so have no clue right now. 🙁

Yeah, it can be tricky. The fact that you have to have one column as your partitioning key is probably the first thing you wanna learn, so you’re on the right track!

Hi Kendra.

Consider the following scenario :

“Tens of millions of new records are loaded into the data warehouse weekly, outside of business hours. Most queries are generated by reports and by cube processing. Data is frequently queried at the day level and occasionally at the month level. You need to partition the table to maximize the performance of queries.”

Can you explain what partitioning level you would implement. By day, week or month?

Jevan – for personalized architecture advice, shoot us an email at help@brentozar.com and we can set up a consulting engagement. Obviously partition design is a little beyond the scope of something you’d want to do in a blog comment. Thanks!

Hi Brent.

Small confession. This was a question on the Microsoft Sql Server exam. The answer was partitioning by day . I was stumped to find any advice as to how they came up with the answer.

I shall look further.

Hehe I remember that question.

The vagueness of some of those questions is sometimes quite funny ;at other times frustrating.

Basically the answer is Day because “Data is frequently queried at the day level and occasionally at the month level”. You’ll get the parallel performance of partitioning across lots of concurrent day queries. Month perhaps won’t be so great but will parallel run that query also however it will need to bring the results back together to aggregate.

As Brent has eluded… real life is a lot complicated than these exams prepare you for. Good luck with your studies.

HA!

Can you turn off Native Archive partitioning once you start using it.

We have a Database that is using it under SQL 2014 eval and we only need standard SQL but once we did hte inplace downgrade the DB wont start as the EE feature is in use.

if I just want to increase performance of insertion of data , I will not make any query

is Creating Partitioned Tables a right action????

Samo – no.

I have a large table with million records and I want to increase performance of insertion and updates I don’t make query’s on it .

is table partitions can fit on this scenario??

Islam – not typically, no. (For reference, a million rows isn’t actually all that much in modern relational databases.)

Thanks for such details and clear explanation.

Just wanted to check on one thing that I am currently updating 45 millions record to the live db where we have already 3 billions of record taking 25 hrs since it is live db where there are many other processes are also running. Also sometimes locking issues also kicks in because of other processes.

Would it be a good Idea to use partitioning for faster insert\update in batch of 1000 records ? I appreciate if there is any alternative if you could share with us.

Thanks.

For a 3-billion-row table, you don’t really want to get architecture advice via a blog comment. That’s where consulting or architecture comes in.

Avery good article – I especially like the doodles!

But when you say “the partitioning key must be part of each of those indexes” it seems to imply (to me at least) that the partition key must form part of the index key in order to allow switching, I don’t think this is the case.

I for one, created a non clustered index on a partitioned table which did not include the partition key as the index key column, however I did create the index on the partition scheme, so I am able to perform switch operations on the table.

What I am curious to know is what part of the the index does the partition column really form a part of? A look in sys.index_columns for that particular index shows the values for partition key column as 0 for “key_ordinal” as well as “is_included_column” but the partition_ordinal = 1 (whatever that means – BOL doesn’t clearly mention)

Nevermind, this technet article answers my earlier question:

https://technet.microsoft.com/en-us/library/ms187526(v=sql.105).aspx

“When partitioning a unique nonclustered index, the index key must contain the partitioning column. When partitioning a nonunique, nonclustered index, SQL Server adds the partitioning column by default as a nonkey (included) column of the index to make sure the index is aligned with the base table. SQL Server does not add the partitioning column to the index if it is already present in the index. ”

but still doesn’t explain why sys.index_columns.is_included_column = 0

Hi Brent,

As most of the existing queries filter on current clustered index column, can you please advise on using an existing clustered index column as partition ID , I know it will be difficult to manage partitions when a int/ID column is used rather than a dateTime column, but i prefer to go through that pain rather than affecting current application/query performance by using a DateTime Partition Column.

I’m seeking this advice only after conducting a thorough review on our current database design ,

please share your thoughts on this strategy.

Appreciate your help.

Regards,

Kiran

Kiran – you nailed it when you said “after conducting a thorough review on our current database design.” That’s exactly the kind of analysis I’d need to do before giving you schema advice. If you’re interested in consulting, click on Consulting at the top of the page and you can learn more about our services. Thanks!

Is there a way to see last update date for each partition. We have a method to see count on each partition and creation date but as example today I might have 1 million records loading into 11th month 2016 partition and then I might have 5k loading into 10th month 2016 and then lets say I have 1k loading into 7th month 2016 partition. I want to see a date that shows those 3 partitions had either records added or updated today. I can store this in my own table like I did for physical partitions (partition views) but wanted to find out if this is anywhere else in a system table. P.S. I use this for loading like SSAS I only process those partitions that have changed today.

Ken – for questions, head on over to http://dba.stackexchange.com.

Hi,

Thank you for a great article. I am not able to view “Table Partitioning” poster. Could you please fix the link or share the right one.

Thank you

That’s no longer available, sorry.

After switch partition , I performed the steps below to prepare for the next load

1. Dropped all the constraints in the staging tables

2. Load data into staging table using bulk insert.

3. Add constraints to the staging tables (This part I noticed the time increasing with number of loads using same data.)

Also, I noticed if I drop and recreate the staging tables then the time remains the same.

Can you please tell me why the time increasing with number of loads if I reuse the staging tables ?

I like this internet site because so much useful material on here : D.

Nice topic, one question, If I have table partition, I guess I can’t put table into In-Memory .

Will it be a problem for ColumnStore Indexes? do I need to create ColumnStore Indexes on partition?

Subir – this post isn’t really about columnstore, sorry.

Isn’t this post outdated when it mention that Partition is only available in standard edition

SQL Curious – that’s kinda like looking at a 2012 newspaper and saying, “This article is outdated because it doesn’t say the president is Donald Trump.”

Sir please i respect donald trump as a president of US bcoz after ofc hrs for 30 mins i watch cartoon as the character of cartoon looks same as donald trump he speak with some good stuff.

Hi Brent,

Thanks, enjoyed your article! I have a question after reading through a lot of the comments.

Can one emphatically state that, ceteris paribus, “Partitioning will always have faster ‘SELECT’ query performance if the underlying tables (or at least the biggest tables by row count) in the JOIN are all partitioned by a unique clustered integer index which is ALWAYS used in the WHERE clause” ?

Never say always. 😉

You should write in such a way that a fresher can also understand

This site isn’t really targeted at freshers – we’re for developers and database administrators.

Your site is like bible. Articles not matter when was published are like mantra and some sentences should be more precised. Especially that post is referenced in https://www.brentozar.com/sql/table-partitioning-resources/

> First of all, this is an Enterprise Edition feature. (Cha-ching! $$$.)

This sentence should be: First of all, this is an Enterprise Edition feature (up to SQL Server 2016 before SP1).

https://docs.microsoft.com/en-us/sql/sql-server/editions-and-components-of-sql-server-2016?view=sql-server-ver15#RDBMSSP

Anyway, I love to read your blog. 🙂

Awww, thanks! Sure, will update that.

This site is actual gold for information for us less well versed in SQL Server but still tasked with taking care of stuff (thanks management for that decision).

Just a question, we have a pretty busy Microsoft BizTalk environment where we replicate out the business activity monitoring data to a separate database server using transactional replication. This has been done because we have an application the is used by support staff to search for specific items. The tables we replicate grows with between 300’000 to 5 million records per day and we retain all data for 12 months. We know that 96.8% of all searches that are being conducted is for data that is less than 7 days old and we have a datetime column in each table for “LastModified”. We have all kinds of weird performance problems with this set up at the moment, the only thing we’ve really done is to throw hardware at the problems and set up indexing (thank god for sp_BlitzIndex), so.

My question then would be, would it be appropriate to use table partitioning on these tables seeing as 96,8% of all searches should really never even have to look at anything older than 7 days, even if the tables can contain 1.8 billion records out of which the search query really is just interested in the last 35 or so million?

The searches are more or less always quite specific and will only return < 50 records from each table.

Johannes – thanks, glad you like the site! Sure, that kind of question is exactly the work I do in my consulting – to hire me, click Consulting at the top of the site.

I think standard edition does support Partitioning after 2016 release

Yes, after 2016 SP1.

We have tables around 60 million row of data. Partitioning is good for these tables or good indexes are sufficient enough?

For personalized architecture recommendations, click Consulting at the top of the page.

Thanks Brent, love the site. We have (25 million row) partitioning on a (600 million row) table and use partition-elimination WHERE statements in all of our queries. Is there any need or advantage to switching old partitions out to an archive table?

Thanks, glad you like the site. For personal advice on your system, click Consulting at the top of the site.

Thanks Brent, sorry, I was trying to ask a general question, just added numbers to show that we need partitioning. Let me try again:

If we are using table partitions, and targeting our queries to specific partitions using partition-elimination parameters, is there any query performance benefit to switching old partitions to an archive table? I’m thinking there probably is for DBA type maintenance activities, but not purely for query performance.

The answer may still be “it depends” as your previous reply implies.

Thanks!

John – I need you to hear me on this. For personal advice on your system, click Consulting at the top of the site. Further replies will not be productive. Thanks for understanding.

I’m a longtime fan of you and your site. I’m partitioning a table with 1.35 billion rows. It’s almost 3tb because it’s wide. It’s on AWS RDS SQL Server 2019 EE. We are using provisioned IOPS SSD storage on the standard RDS D drive. Is it still important to create separate file groups and files for each partition on this device? I’m not sure that aspect is as important with high speed SSD.

You need to update that Partitioning is also part of standard edition, not only enterprise as it was in past SQL versions.

Great catch! Updated.