Everybody wants to know about index fragmentation

It is an inescapable vortex of malaise and confusion. Like that swamp in The Neverending Story that killed the horse. Sorry if you haven’t seen that movie. The horse wasn’t that cool, anyway.

Neither is index fragmentation, but it’s not worth losing sleep over. Or a horse.

I see a lot of people messing with the fill factor of their indexes. Sometimes you gotta. If you use GUIDs for a clustering key, for example. If you don’t lower fill factor from 100, you’re going to spend a lot of time splitting pages when you insert records. GUIDs are hard to run out of, but they’re even harder to put in order.

Setting fill factor under 100 tells SQL to leave free space on index pages at the leaf level for new records to be add to. If you don’t, and a record needs to be added to a page, it will do about a 50/50 split to two other pages.

When does it hurt?

Like most things, not at first. To prove it, let’s rebuild an index at different fill factors, and insert some fragmentation information into a table. It’s pretty easy. Create a table, rebuild the index, insert record to table. I could have done this in a loop, but I’m kind of lazy today.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 |

CREATE TABLE dbo.[FillFactor] ( TableName NVARCHAR(128) NULL, IndexName sysname NULL, index_type_desc NVARCHAR(60) NULL, avg_fragmentation_in_percent FLOAT NULL, page_count BIGINT NULL, fill_factor TINYINT NOT NULL ) ON [PRIMARY]; GO INSERT dbo.[FillFactor] ( TableName, IndexName, index_type_desc, avg_fragmentation_in_percent, page_count, fill_factor ) SELECT OBJECT_NAME(ddips.object_id) AS TableName, i.name AS IndexName, ddips.index_type_desc, ddips.avg_fragmentation_in_percent, ddips.page_count, i.fill_factor FROM sys.dm_db_index_physical_stats(DB_ID(N'StackOverflow'), OBJECT_ID('dbo.Votes'), NULL, NULL, 'LIMITED') AS ddips JOIN sys.tables AS t ON t.object_id = ddips.object_id JOIN sys.indexes AS i ON i.object_id = ddips.object_id AND i.index_id = ddips.index_id; ALTER INDEX PK_Votes ON dbo.Votes REBUILD WITH ( FILLFACTOR = 100 ); ALTER INDEX PK_Votes ON dbo.Votes REBUILD WITH ( FILLFACTOR = 80 ); ALTER INDEX PK_Votes ON dbo.Votes REBUILD WITH ( FILLFACTOR = 60 ); ALTER INDEX PK_Votes ON dbo.Votes REBUILD WITH ( FILLFACTOR = 40 ); ALTER INDEX PK_Votes ON dbo.Votes REBUILD WITH ( FILLFACTOR = 20 ); SELECT ff.TableName, ff.IndexName, ff.index_type_desc, ff.avg_fragmentation_in_percent, ff.page_count, ff.fill_factor FROM dbo.[FillFactor] AS ff ORDER BY ff.fill_factor DESC; |

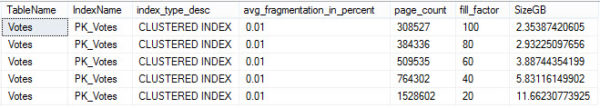

Put on your thinking cap. Fragmentation percent doesn’t budge. Granted, we rebuilt the index, so that’s expected. But look at page counts. Every time we reduce fill factor, page count gets higher. Why does that matter? Each page is 8kb. The more pages are in your index, the more you’re reading from disk into memory. The lower your fill factor, the more blank space you’re reading from disk into memory. You could be wasting a lot of unnecessary space both on disk and in memory by lowering fill factor.

Let’s do math!

Because everyone loves math. Let’s take page count, multiply it by 8, and then divide it by 1024 twice to get the size of each index in GB.

|

1 2 3 4 5 6 7 8 9 |

SELECT ff.TableName, ff.IndexName, ff.index_type_desc, ff.avg_fragmentation_in_percent, ff.page_count, ff.fill_factor, ( ff.page_count * 8. ) / 1024. / 1024. AS SizeGB FROM dbo.[FillFactor] AS ff ORDER BY ff.fill_factor DESC; |

Even reducing this to 80 takes up about an extra 600MB. That can really add up. Granted, disk and memory are cheap, but they’re not infinite. Especially if you’re on Standard Edition.

It’s in everything

It’s not just queries that reading extra pages can slow down. DBCC CHECKDB, backups, and index and statistics maintenance all have to deal with all those pages. Lowering fill factor without good reason puts you in the same boat as index fragmentation does, except regular maintenance won’t “fix” the problem.

You can run sp_BlitzIndex® to help you find indexes that have fill factor set to under 100.

14 Comments. Leave new

Erik this is a great article. I always struggled to find the best value to set the Fill Factor at the server level. My system has lots of OLTP transactions, . I set it between 70 to 80, after reading this article its worth looking at changing it to 85 or 90, to lower my page count.

I agree, but it’s also worth looking at your page splits and how often you rebuild your indexes. Keep in mind that empty space is only allocated at the time you create your index, and does not remain constant. With a higher fill factor, you may need to rebuild more frequently.

Arthur – when you say “looking at your page splits”, can you elaborate on that? Interested to hear where you’re going with that.

Yes, definitely. I’d be worried that in a high OLTP system, an index with a factor of 85 or 90 would fill up in the middle of the day, during high usage times. At that point, it would cause page splits to generate new pages for the new data. I would also be worried that an update to a varchar field, that increased the number of characters in an column to a larger number, would fill a page or cause a split at 90% fill factor.

I guess I skipped the “how” portion of that. I think to measure page splits, I’d want to measure LOP_DELETE_SPLIT log records. I’ve never done this before, this all from what I’ve heard other people talk about.

You can create an Extended Event Session to track mid-page splits.

You can read it in further detail in this article by Jonathan Kehayias : https://www.sqlskills.com/blogs/jonathan/tracking-problematic-pages-splits-in-sql-server-2012-extended-events-no-really-this-time/

Right, bingo – this is waaaaay more complex than it looks, and even just *measuring* this is hard – let alone understanding whether your system is impacted. I’d want to step back and say, “Above and beyond a count of page splits, how are you determining that it’s actually the biggest bottleneck on your system?”

That’s actually complicated and depends on your workload and business requerements. My company uses software to keep track of our website performance. Usually “something” stands out and is taking longer than it should. If it is caused by some maintenance process, i normally start by checking if that particular table and indexes are being rebuilt/reorganized very frequently and check their daily fragmentation (I use Ola Hallengreen maintenance for indexes and everything gets logged to CommandLog), and when necessary I ajust the fill factor (small values at a time, no need to be greedy).

The great thing about using that Extended Event Session is the fact you can pinpoint the problematic indexes, and change only those you consider appropriate, saving storage and cache

Don’t you speak ill of Artax.

Forget Artax.

Falcor was a much cooler mount anyway — he’s a flying mount!

(Yay for WoW references and Never Ending Story references in the same reply!)

Space Age Love Song IS the better Flock of Seagulls song. Just like Alive & Kicking is the better Simple Minds song.

When I was a DBA, I captured fragmentation nightly, and stored it in a history table. I used that table for a few things: if tables had been reorganized, not rebuilt, over the last 90 days, I would rebuild them to get back free space without needing to run physical stats in detailed mode. but I also used it to determine fill factor. if I saw indices that fragmented substantially every day, and verified that it was heavily used for scans, I would consider adjusting the fill factor.

John – uh oh, bad news. A scan doesn’t mean a long-range scan necessarily: http://sqlinthewild.co.za/index.php/2016/01/26/does-an-index-scan-always-read-the-entire-index/

[…] But if I were going to make the decision to rebuild an index, this is the measurement I’d want to use. Because all that unused space can be wasteful. […]