I wish we got a yellow bang on execution plans when SQL Server was making up an estimate out of thin air.

I’ll give you an example: if you compare two columns on the same table, looking to find rows where they’re equal, SQL Server has a hard-coded estimate that 10% of the rows will match.

I’ll query the Stack Overflow database’s Users table to find people whose DisplayName is the same as their Location. To give SQL Server a fighting chance at estimation, I’ll create indexes on both columns, in both orders, just to preemptively head off the folks in the comments section who will suggest it’s a stats problem:

|

1 2 3 4 5 6 7 8 |

CREATE INDEX DisplayName_Location ON dbo.Users(DisplayName, Location); CREATE INDEX Location_DisplayName ON dbo.Users(Location, DisplayName); GO SELECT COUNT(*) FROM dbo.Users WHERE DisplayName = Location; |

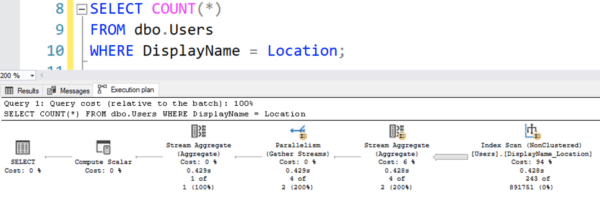

Look at the far right operator of the actual execution plan and compare estimated versus actual rows:

SQL Server brought back 243 rows of an estimated 891,751:

That 891,751 sounds awfully scientific, doesn’t it? Like SQL Server put some serious thought into it? Well, that idealistic dream is shattered when you discover that the full table size just so happens to be 8,917,507 rows:

SQL Server’s estimating that exactly 10% of the rows will have a matching DisplayName and Location. That’s a ridiculous, completely made-up number that has no bearing on reality.

This is hard to spot

even in simple queries.

Let’s say you wanted to find those users with matching DisplayNames & Locations, and find the top 100 reputations amongst them:

|

1 2 3 4 5 6 |

SET STATISTICS IO ON; SELECT TOP 100 u.Reputation, u.DisplayName, u.Location, u.Id FROM dbo.Users u WHERE u.DisplayName = u.Location ORDER BY u.Reputation DESC; |

Let’s also say your Users table has these three indexes:

|

1 2 3 4 5 6 |

CREATE INDEX DisplayName_Location ON dbo.Users(DisplayName, Location); CREATE INDEX Location_DisplayName ON dbo.Users(Location, DisplayName); CREATE INDEX Reputation ON dbo.Users(Reputation); |

Note that none of those three indexes cover the query – because the query needs both DisplayName & Location, AND Reputation.

One approach SQL Server could use would be to scan the clustered index, like this:

That approach does 141,970 logical reads:

Or, because so few users match, SQL Server could scan the DisplayName_Location index, make a list of the 243 users with matching values, and then only do key lookups for those 243 users, like this:

That version of the execution plan does just 49,633 logical reads.

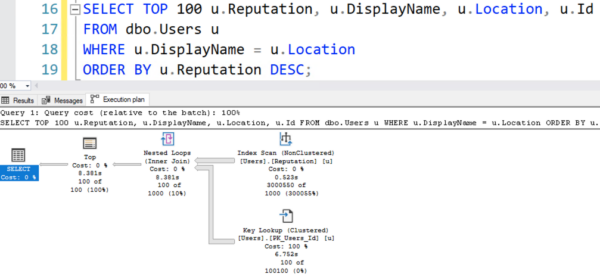

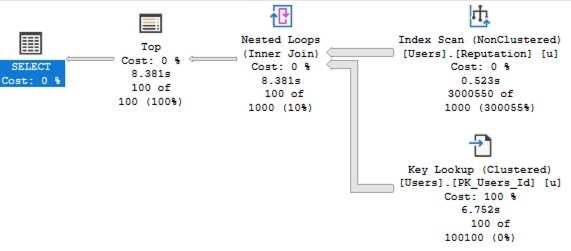

But SQL Server doesn’t do either of those. Let’s take the index hints off and watch the smoke rise:

SQL Server thinks, “Hey, about 10% of the rows have matching DisplayNames and Locations! I’ll use the Reputation index, and I’ll scan it from top to bottom. For each high-ranking User, I’ll go do a key lookup, and I won’t have to look at too many rows before I find 100 that have matching DisplayNames and Locations! Hell, I don’t even have to allocate multiple CPU cores – I can do all this single-threaded because it’s so easy.”

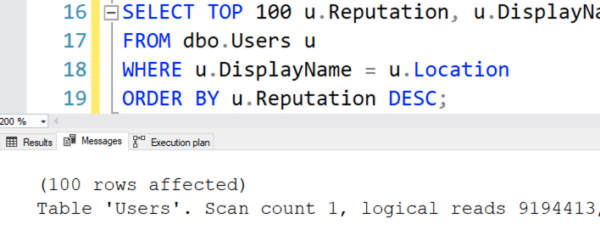

Of course, all of that is wrong, and it leads to:

That’s 9 million reads on an object that only has 140K pages.

So what went wrong? Good luck finding out if you don’t know about the secret 10% estimate. There’s no yellow bang anywhere on this plan, but worse, there’s no indication that the whole plan is based on that wild guess. On a plan like this, most folks start their performance tuning journey by examining the Reputation index trying to figure out why the stats on there are wrong – when in reality, the issue’s something completely different.

And in complex real-world queries with multiple joins? Forget it. This kind of thing is completely stealthy in execution plans.

I wish SQL Server would warn folks about that.

But I know wishes don’t get us anywhere, so I’ll be a good blogger and I’ll finish this blog post with a call to action to upvote a feature request at feedback.azure.com. I’ll just go log in and…

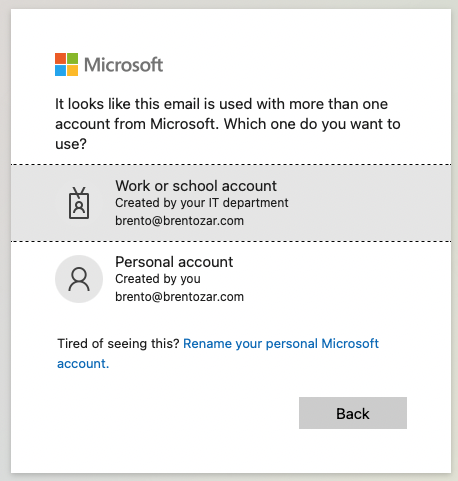

Oh God, my mortal enemy. Let’s try one, and:

Uh, yes, I know it’s taken – it’s taken by me, and I’m trying to log in, and I have the same problem with the other one, too.

It’s almost as if Microsoft doesn’t want to hear feedback from their customers.

14 Comments. Leave new

I can’t help you with your login problems. Every time I try to open a user voice issue, I get a call from a lawyer.

But you can often get a more realistic

column = columnestimate using the “””””legacy””””” CE:OPTION(USE HINT('FORCE_LEGACY_CARDINALITY_ESTIMATION')).Using the 2013 database, I get an estimate of 8573 rows, and the query uses the

DisplayName_Locationindex.If you’re into that sort of thing.

It is so like the guy from Brooklyn to say “the old one was better before they ruined it.”

Is this Mexican PBR?

I found and upvoted one: Add execution plan warning for hard-coded estimations

Found it also, and upvoted too.

Upvoted +1

Swag much? Scientific wild A guess

So what would be the least horrible fix for this do we think? Index hinting is just a different problem waiting to happen.

That one’s beyond the scope of this blog post – I dig into it in the Mastering Query Tuning class.

Updated an commented. To save others from having to do the search, here’s the URL: https://feedback.azure.com/d365community/idea/98070454-3f25-ec11-b6e6-000d3a4f0da0

Thanks Mark

Is there a list somewhere of all these hard-coded estimations?

There have been estimations (ha ha ho ho) over the years, but I’m not aware of any hard-coded (ha ha ho ho) list that’s kept up to date across version changes and compat level changes.

For example, Dave Ballantyne’s session archives at SQLBits.com on the cardinality estimator covered them back in the 2012-2014 days, and Joe Obbish’s user group sessions have covered them as well.

Gah this login with personal or work account thing is the bane of my existence.

Ever try to use 2 different Teams logins on mobile? Don’t!

Because you’ll get a login popup that only allows you to put in an email address… then refreshes to the same page. Forever. Until you clear cache/uninstall.