With new features

I really love kicking the tires to see how they work with existing features, and if they fix existing performance troubleshooting scenarios.

One issue that I see frequently is with local variables. I’m not going to get into Cardinality Estimator math here, we’re just going to look at Adaptive Join plan choice for one scenario.

Understanding local variables

When you use local variables, SQL Server doesn’t look at statistics histograms to come up with cardinality estimates. It uses some magical math based on rows and density.

This magical math is complicated by multiple predicates and ranges.

It’s further complicated by Adaptive Joins. Sort of.

A simple example

The easiest way to look at this is to compare Adaptive Joins with literal values to the same ones using local variables. The results are a little… complicated.

Here are three queries with three literal values. In my copy of the Super User database (the largest Stack Overflow sub-site), I’ve made copies of all the tables and added Clustered ColumnStore indexes to them. That’s the only way to get Adaptive Joins at this point — Column Store has to be involved somewhere along the line.

The last day of data in this dump is from December 11. When I query the data, I’m looking at the last 11 days of data, the last day of data, and then a day where there isn’t any data.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 |

SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= '2016-12-01' GO SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= '2016-12-11' GO SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= '2016-12-12' GO |

I get Adaptive Join plans back for all of these, with accurate estimates. I’m looking at the Live Query Statistics for all these so you can see the row counts on the Users table. You can see this stuff just fine in Actual and Estimated plans, too.

The first two queries that actually return rows estimate that they’ll use a Hash Join, and both choose the Index Scan of the Posts table branch of the plan to execute. The last query chooses the Index Seek branch, and doesn’t end up needing to execute either branch because no rows come out of the Users table. It also estimates that it will use a Nested Loops Join rather than a Hash Join because of the lower number of rows.

Think Locally, Act Mediocrely

If I flip the query around to use local variables, some things change. I’m using all the same dates, here.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

DECLARE @MyLeftFoot DATETIME = '2016-12-01' SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= @MyLeftFoot GO DECLARE @MyLeftFoot DATETIME = '2016-12-11' SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= @MyLeftFoot GO DECLARE @MyLeftFoot DATETIME = '2016-12-12' SELECT u.Id, p.Score FROM dbo.Users_cx AS u JOIN dbo.Posts AS p ON p.OwnerUserId = u.Id WHERE u.LastAccessDate >= @MyLeftFoot GO |

A bunch of stuff changed, mainly with estimates from the Users table. Which makes sense. That’s where u.LastAccessDate is.

Most importantly: they’re all the same now! All three estimated 143,495 rows would match from the Users. And this is where things get interesting.

The second query chooses a different index, but with the same estimated join type (Hash), and the third query bails on the Nested Loops Join it estimated when it gets no rows back.

They both have unused memory grant warnings — only the first query asked for and used its entire grant.

Lesson: Adaptive Plans can still get wonky memory grants with local variables.

But what actually happened?

Exactly what was supposed to happen. The secret is in the thresholds.

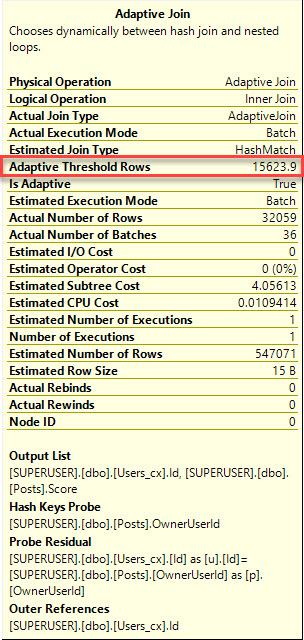

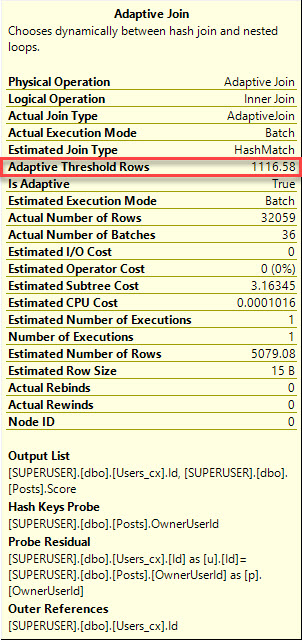

In the local variable plan, the threshold for Join choice is MUCH higher than in the plan for the literal. When the actual rows (1202) doesn’t hit that threshold, the Join type switches to Nested Loops, and likely abandons the memory grant that it asked for when it estimated a Hash Join.

The plan with the literal values has a threshold of 1116 rows for Join choices. We hit 1202 rows, so we get the Hash Join plan.

Is that better or worse?

Well, the real lesson here is that local variables still aren’t a great choice. The Adaptive Join process figures that out now, at least.

I’m not sure if it gives the memory grant back immediately when it figures out it doesn’t need it. That’ll take some digging. Or someone from Microsoft to comment.

Thanks for reading!

Brent says: In the last year, Microsoft has been generous with backporting memory grant info all the way to 2012 via cumulative updates. However, it’s still a relatively new topic for a lot of performance tuners. To learn more about that, check out our past post on An Introduction to Query Memory, and check out sp_BlitzCache’s @SortOrder = ‘memory grant’.

2 Comments. Leave new

> That’s the only way to get Adaptive Joins at this point — Column Store has to be involved somewhere along the line.

That seems quite a roadblock for most shops. Can you do the Itzik trick (slapping an empty filtered non-clustered columnstore index on the table)?

I have that planned for testing when I get back from vacation!