Hold my beer.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 |

CREATE DATABASE [50Ways]; GO ALTER DATABASE [50Ways] SET PAGE_VERIFY NONE; /* Normally a bad idea */ GO USE [50Ways]; GO CREATE TABLE [dbo].[ToLeaveYourLover]([Way] VARCHAR(50)); GO INSERT INTO [dbo].[ToLeaveYourLover]([Way]) VALUES ('Slip out the back, Jack'), ('Make a new plan, Stan'), ('Hop on the bus, Gus'), ('Drop off the key, Lee') GO SELECT * FROM [50Ways]..[ToLeaveYourLover]; /* Yep, we have data */ GO ALTER DATABASE [50Ways] SET OFFLINE WITH ROLLBACK IMMEDIATE; GO |

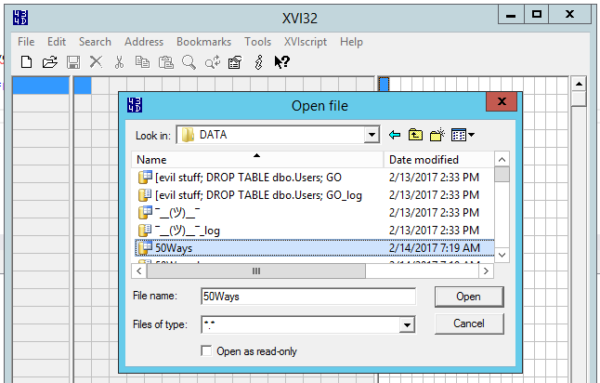

Now, let’s corrupt it. Open it with a hex editor – my personal favorite is the free xvi32 because it doesn’t require installation. Just download it, fire it up (you’ll want to run it as administrator), and open the database’s MDF file:

Next thing you know, you’re looking at the contents of the MDF file. The scientific way to approach this would be to identify the exact 8K page you’re looking for, and jump to that point of the file. However, you have my beer, so I’m trying to finish this quickly so I can get back to that. We’ll just click Search, Find, and look for text:

Presto – now you can see the raw contents of your database. What, you thought SQL Server encrypted it or something? Heck no, Stan’s social security number, credit card number, and disease history are all in there, free for the reading.

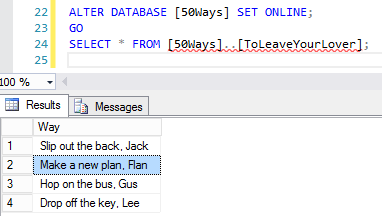

And for the writing, it turns out. Close the Find box, click on the S in Stan your right hand window, and start typing. I’ll change his name to Flan, and click Save. Close XVI32, and bring the database back online:

|

1 2 3 |

ALTER DATABASE [50Ways] SET ONLINE; GO SELECT * FROM [50Ways]..[ToLeaveYourLover]; |

And see what you get:

Or rather, see what you don’t get: no corruption warnings, no dropped connection, no errors. As far as SQL Server is concerned, this is just nice good data. You can even run DBCC CHECKDB, and no errors will be reported.

What about clustered columnstore indexes?

Look, the only reason I’m doing this is because you’re still holding my beer hostage. Same script, but now with a clustered columnstore index:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

CREATE DATABASE [50Ways]; GO ALTER DATABASE [50Ways] SET PAGE_VERIFY NONE; /* Normally a bad idea */ GO USE [50Ways]; GO CREATE TABLE [dbo].[ToLeaveYourLover] ([ID] INT IDENTITY(1,1), [Way] VARCHAR(50), [Guy] VARCHAR(50)); GO CREATE CLUSTERED COLUMNSTORE INDEX cci ON [dbo].[ToLeaveYourLover] GO INSERT INTO [dbo].[ToLeaveYourLover]([Way],[Guy]) VALUES ('Slip out the back','Jack'), ('Make a new plan','Stan'), ('Hop on the bus','Gus'), ('Drop off the key','Lee') GO SELECT * FROM [50Ways]..[ToLeaveYourLover]; /* Yep, we have data */ GO ALTER DATABASE [50Ways] SET OFFLINE WITH ROLLBACK IMMEDIATE; GO |

After the database is offline, fire up your trusty XVI32, search for Stan, and he’s still visible in clear text:

Edit him, turn him into Flan, save the file, bring it back online, and run the SELECT query again:

A clustered columnstore index works just like a regular clustered rowstore index: if you don’t have page verification turned on, no corruption is detected, even when you run CHECKDB.

PAGE_VERIFY is really important.

When I created the database, I threw in this line:

|

1 |

ALTER DATABASE [50Ways] SET PAGE_VERIFY NONE; /* Normally a bad idea */ |

That tells SQL Server not to do any page verification when pages are read or written from disk. You have 3 options:

- NONE – just a dumb, suicidal idea.

- TORN_PAGE_DETECTION – also a dumb, suicidal idea. You can retry this same demo with TORN_PAGE_DETECTION instead of NONE, and you’ll get the exact same results.

- CHECKSUM – SQL Server includes a checksum as it writes each page from here on out, and then checks the page when it’s read.

If I repeat either the rowstore or columnstore demo, but this time change the PAGE_VERIFY NONE to PAGE_VERIFY CHECKSUM, I get a totally different result:

SQL Server didn’t detect the corruption simply by setting the database online – bringing it online didn’t read this particular data page. However, when I read the page, whammo, SQL Server detected that the data on the page didn’t match the checksum.

The checksum isn’t used for data recovery, mind you – only for alerting us that the data is wrong. This isn’t like parity in a RAID array where we can rebuild the data from scratch.

What you need to do next

- Run sp_Blitz, which tells you if any databases don’t have checksum enabled

- Set checksum on those databases

- Do something that causes pages to be written (like rebuilding indexes, but keep in mind that it’s going to generate a ton of transaction log traffic)

- Set up alerts to notify you when a corrupt page is read

- Attach a corrupt database (like the one you just created) to your production SQL Server, and run the SELECT statement, which should trigger the alerts you just created

- Make sure you’re doing CHECKDB regularly from here on out

- Talk to stakeholders about what’s being stored unencrypted in the database

- Give me back my beer

27 Comments. Leave new

interesting. If i understand correctly: the option governs the *writing* of the checksum to page header as well as subsequent read/verification–i.e. if PAGE_VERIFY is NONE, does nothing gets written, checksum-wise, or is a “NULL” / not-present flag written?

i’m wondering about the following situations:

1.) I’m a good DBA and have PAGE_VERIFY = CHECKSUM. All my pages have checksums. Erik comes along and sets the option to NONE, issues a query that updates every page, and then sets the option back to PAGE. Does SQL Server suddenly freak as newly calculated checksums fail to match the pre-Erik checksums, or will Erik have cleared them with his update?

2.) I’m a not-so-good DBA and receive one of the Mr. Robot corruption errors above. I examine the page and decide that i prefer the name “Flan” to “Stan” and prefer the “corrupt” new data (however it got there). Can i cause the pesky Mr. Robot message to go away by temporarily setting PAGE_VERIFY = NONE, updating the corrupt page, and then setting the option back to CHECKSUM?

MMmmmmmmiike:

1. No, you’re not really a good DBA. Regardless, if you set page_verify to none, and then write pages, they’ll have no checksum (the checksum will be cleared). You don’t have to worry about subsequent updates.

2. Ooo, that’s a great question! I’d test it, but in the next post in this series, you’ll see why it won’t matter. (Hint: nonclustered indexes make conflicts a lot trickier.)

> 1. No, you’re not really a good DBA.

Clearly not–Erik has sysadmin on my servers. hey-o!

I wouldn’t have sysadmin if you didn’t keep making your password “xoMrsPeterHookxo”

ha! you’ve cut me to the quick, Darling. but I can’t imagine…

WHAT do you want from me??

and now you get to have that monaco song stuck in your head, too.

That’s a good one. Thanks, BrentO!

I am just wondering if there is any impact on the performance when PAGE_VERIFY is set to CHECKSUM.

P.S.: An extra beer goes to BrentO for the exquisite sense of humor. 😉

Serge – thanks, glad you liked it.

About the performance impact – I dunno, I look at the point of the database as being able to store non-corrupt data. If it goes faster and allows for undetected corruption, is it worth it? It’s kinda like saying that your car will go faster if you take out the seat belts, air bags, bumpers, etc. Sure it will, but…do you really need that extra speed? And if so, I bet you could find better ways of doing performance tuning. 😉

Thanks, Brent! Makes perfect sense to me. Seatbelts and airbags will stay. Aluminum hood and trunk – this is what I have on my Dodge to go faster. :)))

What you’re saying is true, but if it *does* have a relevant performance overhead, and you have a I/O subsystem that already does this kind of checksumming, then it’s kind of a waste.

Eamon – think through that a little deeper. If your storage subsystem detects corruption, one of two things happens: either it fails to deliver any data at all, or it will deliver a known corrupt page (and just report it to the storage admins.)

If it fails to deliver any data (just denies the read attempt for SQL Server), we have a problem because the storage may detect corruption at a larger granularity than just an 8KB page. For example, your storage might mark an entire 256KB area as corrupt when in reality there’s only a smaller area that’s affected. That’s why storage vendors will usually deliver reads even when the data is corrupt.

If it does return known corrupt data, then it’s still up to SQL Server to figure out what parts of the data are corrupt. That’s where checksums come in.

Great theoretical exercise though!

Brent,

I’ve got some servers I need to change from Torn Page Detection to Checksum. I’ve already run (and scheduled) my checkDB’s, and there is no corruption.

If I turn on checksum, and corruption appears, can that error make it out to the end users? I obviously need to make sure my databases can store data without corruption, but I’m not a fan of end users & application analysts kicking down my door when I ‘break the application’.

Maybe I need a test server…

James — for Q&A, head over to dba.stackexchange.com.

Thanks!

Done.

https://dba.stackexchange.com/questions/193522/can-checksum-alerts-make-it-to-the-end-user

cheers.

Extra points for working in a classic Paul Simon tune. Eye-opening article. Thanks!

“Hold my beer.”… how all great blog entries should begin.

Hahaha, thanks!

Heh. Those other database filenames almost slipped me by. 🙂

Thanks for this. If we restore the database to another server, will the checksum data be carried over?

Duke – awesomely, yes!

Saw this pop up on FB – one quick thing – it’s TORN_PAGE_DETECTION not TORN_PAGE_VERIFICATION. Kind of a moot point as it shouldn’t be set to that anyway.

And Paul returns an empty glass…

HAHAHA, that tells you how often I use it, hahaha. Thanks for the catch, sir.

Interesting that the CCI returned the same results. I would think the compression obfuscates the data. Are the row groups still open due to the low number of records? In that case, you’d be testing the same thing as the first scenario.

Hey, what if I want to corrupt the master DB without stopping the SQL server service, Say I want to simulate a disk which went haywire and corrupted my master DB and that too my System tables!!!

Midhun – I wouldn’t recommend trying to repair corrupt system tables in the master database. If you had disks go that bad, fail over to another server and restore your user databases, then work on fixing those.

Hi, is there any way to corrupt one specific page of data in a very large database. The described method is good for small databases. XVI32 cannot handle large files.

I wouldn’t recommend trying to learn corruption detection and repair on a large database because CHECKDB will take a lot longer.