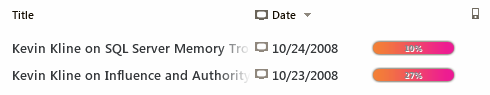

A while back, Bryan Oliver, David Gugick and I went to Redmond to take part in a SQL Server 2008 auditing and compliance lab. JC Cannon and other Microsoft SQL Server 2008 pros talked about the new 2008 features that help DBAs meet their SOX, HIPAA, PCI and other compliance needs.

I’ve dealt with some of these regulations in a limited way before, but I learned a lot from the lab. JC and crew walked us through risk management, governance, and compliance, and then we stepped through a set of lab exercises to put what we’d learned into practice. We were essentially guinea pigs for this whitepaper:

Microsoft SQL Server 2008 Compliance Guide Whitepaper

As somebody who’s gone through the whitepaper start to finish (with a lot of guidance from JC’s team) I can tell you that this is really good stuff. If you work for a public company subject to regulations, if you work with sensitive financial data, or if you work with health care data, you need to read this doc.

If you WANT to go to work in any of those areas, you need to read this doc, because it’s the cheapest way to get compliance training. Read pages 7 through 12, and you’ll be well on your way to understanding the complexity and difficulty of supporting SQL Servers subject to regulation. If you keep reading, you’ll be pleasantly surprised: the whitepaper strikes a good balance between technical content, business content, diagrams, and sample code.

If you’ve got feedback about the whitepaper, or if there are things you’d like to see added, let me know and I can pass your feedback on to JC Cannon. I promise not to take credit for your good ideas, hee hee ho ho.

Update 1/2009 – they’ve also launched a SQL Server Compliance Portal with even more SQL Server training information!