This month, to mark the 20th anniversary of BrentOzar.com, I’m stepping back and looking at the big picture rather than blogging about database problems & solutions like I usually do.

I’m going to generalize a little by decade here to keep things simple.

In 1990-2000, databases were mostly tied to specific development languages. When you developed an application with a Microsoft Access back end, you were very likely using Microsoft Access to store the data, too. If you wrote a web page in Microsoft FrontPage, you were likely using Access or Microsoft SQL Server as the back end.

During 1990-2000, one of our biggest database administration problems was server sprawl. Every time we had a new app, we just spun up a new with a new database. Most of those servers had fairly low load in terms of performance, so as licensing costs went up, we went through consolidation projects to merge multiple databases onto less servers to cut costs. (This continued in the 2001-2010 time frame, especially with the advent of server virtualization.)

In 2001-2010, object-relational-mapping tools (ORMs) tried to abstract the database away. In theory, developers worked with objects, and the ORM layer mapped things to the physical database storage. In theory, some ORMS were cross-platform, saying that you could switch your database back end without rewriting your code. In practice, that didn’t work very well. In the 2000s, our app code language choice still pretty much dictated the most popular database we would use for that particular language. C#? SQL Server. Java? Oracle.

During 2001-2010, Microsoft SQL Server rode the wave of .NET popularity, being the easy choice for C# developers. Microsoft’s strategy to grow SQL Server appeared to be making SQL Server a good fit for other purposes, too. It was hard to grow revenue by convincing PHP developers to switch from MySQL to Microsoft SQL Server – the cost difference just doesn’t make sense.

However, it was much easier to grow revenue by convincing ETL developers to switch from Informatica to SQL Server Integration Services. SSIS was free in the box with SQL Server, so if you were using Informatica to push data into SQL Server…why waste your licensing money on Informatica? Same thing with SQL Server Analysis Services, SQL Server Reporting Services, Filestream/Filetable to use SQL Server as a file server, XML in the database, CLR code to use SQL Server as an application server, and so on.

In 2011-2020, 3 things changed how we pick databases.

In no particular order, all of them being independent:

- Developers got tired of SQL’s rigid requirements and started building open source NoSQL persistence layers (MongoDB and Redis are great examples) to solve specific challenges at a very low cost.

- Cloud providers made it really easy to spin up a new production-quality persistence layer, like one you might not have otherwise had the skill or spare hardware to spin up, and only pay for what you use (in theory.)

- Hosted software-as-a-Service (SaaS) apps meant that often business folks didn’t even have to pick a persistence layer at all. Software vendors that used to have to support their apps on-premises on a variety of databases now just took that decision (and the support) away altogether. Instead of having to pick a database their enterprise customers would be comfortable with, they could use whatever they wanted.

As these 3 things continue to spread – and I use that term in the current tense, because they’re still happening – Microsoft SQL Server is less and less the obvious de-facto choice for C# developers. If you’re building a C# app in the cloud, it might make more sense to use:

- DynamoDB if you just need key/value storage, not a full blown relational database

- Redis if you just need a cache

- Azure SQL DB if you just need a little code in Azure Functions, and you need relations

- PostgreSQL if you want relational at a low cost without being tied into a cloud vendor

- Just a plain ol’ file share if you want to save XML or JSON data for later analysis with a variety of tools

- And so on.

Plus, those 3 things above lowered the barrier to using multiple persistence layers, each for their own specialized purpose. There were so many persistence layers available, at such a seemingly low cost, without having to worry about on-premises support for each of ’em – that developers became more emboldened to use multiple persistence layers even inside a single application.

The architect in me loves this because we get to choose the perfect tool for each task at hand.

The business owner in me is horrified because we’re heading for something completely untenable.

2021-2030: Welcome to Sprawl 2.0.

Data lives everywhere, in redundant copies, none of which speak to each other, and all of which cost us by the byte, by the month.

Performance sucks everywhere, and every query costs us by the byte of data read.

None of these persistence layers (SQL Server included) have good cost management built in for size or performance. Most databases are still in their infancy of telling you which queries are burning the most resources, let alone telling you which queries are costing you the most money.

I got a sneak peek when I was talking to the data warehouse manager at one of my clients. He showed me their architecture diagram for their next-generation data warehouse: Azure Data Factory pulling files from sources, loading them into various databases, dumping flat files into a data lake, queried from Spark and Microsoft Big Data Clusters. He explained which parts were pay-as-you-go services, which parts were VMs, and which parts were in Kubernetes.

Then he said, “Yesterday, my costs quadrupled. The only reason we even found out so quickly is because the accounting team had an alert set up. My team has been working on it for four hours, and they’ve narrowed it down to query workloads (not storage or ADF) – but we’re realizing we don’t even have the skills to answer the question of what queries cost us the most money yesterday, or if they’re new queries, or new users, or changed database structures, or what.”

“We’re just letting people run queries and light money on fire.”

New database servers could solve this, but won’t.

When we solved Sprawl 1.0, we were at least only dealing with a handful of database platforms. We could gather all the SQL Server applications together onto less servers.

With Sprawl 2.0, we have:

- Countless database platforms hosting…

- Countless apps built with different languages and connection libraries

- Living in a mix of different cloud vendors and on-premises

In theory, vendors could:

- Build a single database that masquerades as multiple databases

- Let you connect lots of existing apps down to just one platform

- Build good cost management, making it compelling to executives

- Maybe even build in cost reduction with automated query tuning, making it even more compelling to execs

Azure Cosmos DB is the forerunner here, I think: one database back end, but lots of APIs so that it could act as any number of different NoSQL or SQL servers. In theory – we’re talking 2021-2030 here – Microsoft could gradually enhance it so that you could take applications written for different back ends, and then consolidate them so everything lives in Cosmos DB.

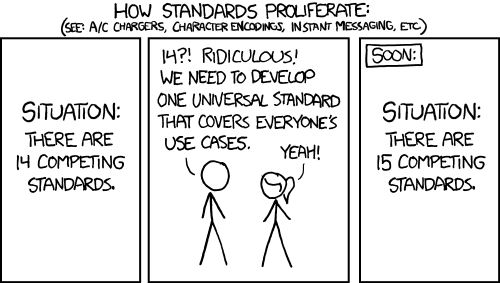

But at the same time, Azure Cosmos DB is yet another new server that we have to manage, calling to mind the classic XKCD cartoon:

Similarly, but way less ambitious, Amazon’s working on Babelfish for Aurora PostgreSQL. The idea is that you’ll put both your Postgres and SQL Server databases in the same place, and connect your applications to it. It’ll service T-SQL queries out of PostgreSQL.

Look, I said it was less ambitious – I didn’t say it wasn’t ambitious.

Babelfish has a hell of an uphill battle because just SQL Server alone has an incredible amount of features, data types, and edge case gotchas. Switching your SQL Server application over to Babelfish on Aurora is going to call to mind the ORM lessons of 2001-2010. Existing applications are complex enough that in most cases, it’s just too hard to forklift their data out of one database, move it over to another, and expect everything to work. With both Cosmos DB and Babelfish, there will be case studies funded by vendors that will gloss over the complexity, but…these migrations will be few and far between.

(Just because someone in the audience will ask – no, Azure Arc doesn’t solve this problem of database sprawl. If anything, it enables sprawl because it encourages self-provisioning of databases & servers & services, both in the cloud and on-premises.)

At the same time that vendors are trying to build one-size-fits-all databases, they’re going to be in a race against sprawl. More new specialized databases will pop up. Existing databases will get new capabilities. The centralized, sprawl-reducing databases won’t be able to keep up.

Sprawl 2.0 is the new normal.

As a database administrator, your job isn’t just to manage the configuration, health, and performance of the database servers you have today.

In 2021-2030, your job is to:

- Watch the options for persistence layers out there, reading developer news sites to see what new options are popping up, where they’re succeeding, and where they’re falling short

- Help your team evaluate new persistence layers by discussing their advantages and disadvantages compared to the persistence layers you’re already using

- Encourage the use of existing persistence layers rather than picking new ones – because every new one that comes in, you’re gonna have to learn to manage it, and you’re not exactly sitting around bored, and the company’s not hiring help for you, either, because the sales pitch on all these new persistence layers is that they’re all self-managing – and you know how that goes

You don’t have to develop a level of expertise on all of the options out there – it’s just too hard, and the new players change too quickly. For example, it’s a waste of your time to try to master a new platform like Cosmos DB if your company isn’t actually using it yet. However, you wanna learn about where it’s a good fit and what its weaknesses are – so that when your developers start talking about using it, you can understand its strengths and weaknesses relative to the ones you’ve already got.

As a blogger, I hate telling you to do something without giving you references to actually do it. In this example, I should be a good blogger and say, “Now go here to learn about Cosmos DB’s strengths and weaknesses.” The problem is that I just don’t have a good list of resources for every persistence layer out there, and I don’t have the time to go build it, either. I can’t even recommend vendor documentation sites because they’re so biased in favor of the product.

I’d leave you with one piece of advice: when you want to learn more about a database, Google for:

- (database name) release notes – and then read about the known weaknesses or bugs.

- (database name) limitations – and look for features that are important to you.

- (database name) site:news.ycombinator.com – because Hacker News surfaces a lot of interesting pro and con stories. You have to take these with a grain of salt – but the same thing is true for vendor documentation, too.

- If it’s available, read the database’s Jepsen analysis to learn about edge case failures

- And finally, if it’s open source, find its Github repo, and read the list of issues. Sort them by most commented, and see what problems the community has run into. Pay particular attention to how long those issues have been open, and what kind of progress the maintainers are making.

Because it’s really easy to start building a database or an online service.

It’s much harder to do it well.

And if you thought it was hard to manage just one, wait til you see how hard it is to manage a whole bunch of half-ass persistence layers.

33 Comments. Leave new

Great article…Everything in the Cloud has made some things easier (more accessible) but also so much more complicated. With how things are architected these days, you can’t just have a few SMEs. Future applications are going to run like the government – massive resources (# of people), inefficient, and expensive. A few years ago, I only needed UI developers, back-end (Java) developers, and a DBA/Database Architect. Now, I need a Cloud Architect, Kubernetes Architect, Security Architect, Storage Architect, etc. with each architect requiring a team of developers and support. Time to check on my 401(k)….

Great article as usual Brent. I would love it if you could take a look at MarkLogic’s offering in the “DataHub” space. Some very interesting and exciting things going on there. It can remove the need for data lakes, allows for quick security, data lineage, harmonization etc. and has simple query methods to slap APIs on top of the data both for inbound/outbound. I would love to hear your thoughts on this DataHub concept as it appears to be the latest buzzword in the EDW industry and data management

This is in no way a plug for MarkLogic, just a great example of what I think could be the next big thing in Next Gen data management.

Thanks Rick! Make sure to read that last section of the post where I explain how to learn more about new databases. Cheers!

I’m enjoying using the memstate (https://memstate.io/) pattern of database management. I rolled my own as it is fairly easy for a personal side project I’m working on. All data changes are append only (event sourcing) and I just load the customer’s data in for 20 minutes or so into memory. I get sub millisecond responses from the server and just queue the data to be saved which saves every 1 second with a bulk insert using sqlite.

I’m not sure what challenges I’ll have because of using this pattern. But it makes working with data dead simple as it is all just C# (or whatever language I’m using that week :-)).

Reading the documentation, Memstate makes an interesting tradeoff.

It simultaneously provides:

• The performance benefits of an in-memory cache

• The ACID benefits of a proper database

Of course, these benefits come at a cost; the memstate approach suffers from abysmal scalability (even worse than relational databases!) . This is an awkward sacrifice to make, since it’s not especially common to simultaneously have a small database and have meaningful performance concerns.

All that said, it looks like a great solution for small projects. I suspect the memstate approach obviates the need for an in-memory cache, which makes for a very clean implementation.

According to their docs you can get servers with high memory capacity and that is how they scale. The creators of memcache make it sound like they are hosting a significant amount of data in-memory.

But if you have data that you can partition then you can probably mitigate some of the pressures of having so much data in memory. And they say to not put large amounts of text into your in-memory data to help keep it more lean.

For the one I implemented I just keep the data in memory for about 20 minutes using ASP.NET Core’s built-in cache code. And mine doesn’t have ACID guarantees, it does what Redis does, saves to disk once every second. And mine can be partitioned by user id if I needed to split it out on multiple servers, But Linode has up to 60 GB memory servers so I’m not sure I would ever need that.

Something changed inside me when it dawned on me about a year ago that I’m not just tuning for performance (a full time job, as we all know!) but I’m now tuning for *cost* as well.

When you start seeing each operation as a nickel, and do the math to realize that op happens 60k times each week, your worldview tilts a little.

Will – exactly! Now, when clients come to me, the financial people are already on board – because when they hire me for performance tuning, they know they’re gonna save money at the same time queries get faster.

Exactly, and well put. Having that realization was like being hit with a hammer, all the ways I looked at load shifted.

It also made me *much* better at my job and gave me some really handy hammers to hit people with to get the backlog adjusted for issues that previously would have been ignored for years. 🙂

I’ve always been cost conscious… it started way back when the dinosaurs still roamed the Earth and I built a sort routine in the early ’80’s on a mainframe that needed it. My first run, which didn’t work the way I wanted it to, cost the company $80 . That was a shedload of money back then… especially for something that didn’t work the way it was supposed to!

The first thing you have to ask yourself when a new technology comes up is: “What problem does it solve?” If nobody knows the answer, drop it. Don’t dive into it just because others do.

The second question is a follow-up question: “Do we actually have this problem?”

Third question: “If so, can we solve this problem in other ways?”

These questions help prevent wasting a lot of time, or worse, bet the company on some hyped technology.

And beware of the urge of engineers to always add more technologies to their resume. Give an engineer money and they will find a way to spend it (and then move on).

If you want to spend a lot of money with little chance of getting it back, cut your applications into twenty pieces, call them microservices, give them each a document database, and make them cooperate via messages. Make sure you hire engineers who have no experience in microservices or document databases or messaging. Don’t do any feasibility studies. You’ll take the company down.

I like the idea of a monolith. But as a company grows the monolith makes it extremely difficult to make changes. Unless you have a ton of money like Facebook or Google to make your monolith more modular and more easily updatable then a microservice architecture begins to make sense. Although, people can go over the top with the microservice architecture in the opposite direction. There needs to be a balance.

Heh… we’re still mostly “on-prem”. I monitor for performance using a bit of home-grown code I wrote and a bit of problem code finally rose to the “Top 1” in my “Dirty Dozen” list. The stored proc was being executed 2-4 times a minute by actions from the front-end. Every execution was consuming 680,000 (~6GB) logical reads to twice that. It was taking about 3 seconds of CPU and duration.

The problem came down to one of those (table1.ColA=table2.ColE OR @Variable IS NULL) “Catch-All” dammits. I changed that to a dynamic query and the reads dropped to 4 (NOT a misprint) and the execution time dropped to about 78ms. It was a simple fix… someone just needed to find the issue and know what to do to fix it.

Alluding to what your good article is about, people think that being able to easily spin up extra hardware (CPU, Memory) is going to fix performance issues like that. They also think that since “T-SQL/SQL Server” is such a bear to learn (it’s not), the resort to other types of databases and other software. In general, they suck at using those, as well, because it’s new, frequently requires learning a new paradigm, and you’ve gotta know that they didn’t actually spend any time learning what they need to know there either.

An so you end up with a very expensive to operate and maintain “Tower of Babel”.

Heh… and, if this occurred in the cloud, just imagine the savings they could have had if they stopped looking at the shine of “fools gold” and spent a little time learning about what they were originally using (T-SQL/SQL Server or whatever) because “Performance is in the code… or not”. 😀

“Change is inevitable… change for the better is not”. And, remember also that “If you want it real bad… that’s normally the way you’ll get it”. 😀

HAHAHA, want it real bad, nice…

Jeff, exactly

Cosmos as front-end is NO SQL Server. Period

Its a 1.0 product and will remain that way for reporting and analytics forever

2MB maximum document size? Break me a Give.

I’ve only been a DBA for about 5 years and it is hard sometimes to grasp the bigger picture without first-hand experience of historical context; this post has cleared up some things for me. As always, thanks Brent!

I was thinking some days ago where vnext sql server will fit in upcoming years.

I remember the releases of sql 12/14 and 16 at the PASS a lot excitations in all people in the room about new features at keynotes !!! Some guys blogged from there all news from vnext !! Could you imagine something like that times in the future for sql on premise ?

The new generation of “data engineers” seems aren’t realising all money that they could burn. The business owners will face a huge challenge !

Excellent analysis Brent !!!

Thanks, glad you liked it!

One thing to add, Cyber Insurance rates are exploding right now as everyone has data breaches. Most of them cant even be called hacking – people are walking right out the digital front door.

Just like the difference between implementing cloud stuff and implementing it well, you can implement it and you can implement it securely. The threat of a security breach may seriously slow Sprawl 2.0.

Totally true, because as we’ve seen in the car insurance business, high insurance rates for teens has completely stopped all irresponsible driving behaviors by young drivers. Similarly, high health insurance rates have completely eliminated smoking and obesity.

Hang on, wait – I’m being told that might not be correct.

again, thanks for the words brent. I’m really scared with my future as a DBA. Im learning now some other tools for data analysis like powerBI, MDX queries, cubes and different databases like mysql, oracle and mongodb and some linux. I feel I lost a lot of time focusing only on slq server that now I feel so empty. i did great stuff on companies I’ve worked and I’ve learned A LOT sql server with you guys. I really hope databases dont die in the future. I NEED MONEY TO EAT AND DON’T DIE ! haha

If it comes to that learn some other tech skill. But it seems like db work will be around for a long time.

Thank god I’m retiring in 4 years. At which point I will do a bit of freelancing, concentrating on those poor souls still using 2005 and 2008 🙂 Well it’s better than working in Asda (or Walmart if you’re on the other side of the pond).

I’m looking at about a year and a half ’til I’m 70 so I’m right there with you on that, Gordon. The thing is, T-SQL isn’t just a job for me… it’s also a hobby. I’d much rather solve problems in T-SQL than solve cross-word puzzles (a metaphor, for sure) to keep my brain active and there’s a bit of extra money coming in instead of (like you say) working at Ada, Walmart, Home Depot, or whatever. And, I don’t actually have to leave the house to do it.

People have been saying that all different types of DBA’s (I’m a bit of a hybrid system/application DBA) are going to go by the wayside and that the world of RDBMS’s will go away “any minute now” for more than 2 decades and yet the need keeps getting stronger because a whole lot of stuff still needs rows and columns (Sidebar: The whole world still secretly relies on spreadsheets as an extension to databases both for input and output :D) in one form or another.

While I look forward to doing other things, I’ll probably never fully retire because I like this stuff way too much. It all helps entertain me without offending folks because I have a “face for radio”. 😀

Jeff – you know, you’ve been really relaxed lately and I figured you must be close to retirement. 😉

Heh… You know me, Brent… it’s only an outward appearance. 😀 You know the old saying when it comes to DBAs and T-SQL… “Today, I shall not “kill””. 😀

As a bit of a sidebar, I’ve decided to practice one of “Peter’s Laws” published as a part of “The Creed of the Sociopathic Obsessive Compulsive”… “If you can’t beat them, join them… then beat them”. 😀 😀 😀 I actually have the poster posted in my computer room if you’d like a picture of it.

HAHAHA, I love it!

Am in agreement with you on the hobby thing, Jeff, hence my intention to keep helping those with older systems to keep ticking along, even if it’s just voluntary work for small businesses and not-for-profit orgs. Wish I’d maintained my COBOL skills from way back in the day though. Reckon I’d be a wealthy man by now.

Interesting article, and I agree with the recent change to sprawl versus the “one database to rule them all” approach. There’s a balance between using appropriate technology versus cost of operations and support (oh, and cost of development and so on, so sort of a three dimensional see-saw).

I would point out that from experience, CosmosDB is a very *poor* implementation of a relational database, let alone a SQL engine. It’s far more of a document (hierarchical) database. It’s also super expensive. I expect it to die over time as people flock to cheaper alternatives like ElasticSearch and MongoDB.

Jeff’s retiring? The end of the world is nigh!

25 years ago, I was told that DB/2 on the mainframe would go away. Today, I still see COBOL applications on mainframes running against DB/2 on mainframes. DB/2 will probably go away someday, but I predict it’ll be about 25 years from now. In the meantime, I do predict a lot of those mainframe workloads will get migrated to the cloud.

Brent-

This post just hit my Feedly. I’ve never been here before but I greatly appreciate what you’ve written. I’m about 15 years into my professional career and started as a DBA. I had a bunch of stops along the way in consulting, architecture, etc and am now a data engineer.

There’s always a technology that’s touted to “Save the Enterprise!!11one” and subsequently put folks out of work at the same time. Hadoop was going to be that thing once.

I practically live in the cloud these days but I don’t write much t-sql so I fear my skills in that realm are starting to diminish. I am thankful for having that background as a DBA. It has helped me so many times in my career. I hope there is still a future for it and being able to write great queries.

Admittedly I’ve drunk the modern architecture Kool-Aid and I’m OK with that because I remember my roots. 🙂

This is a thoughtful piece and it gives me much to consider. Thank you for it.

I am going to go peruse your other posts now. I think you have a new regular reader!

Awww, thanks, glad you liked it!