Our SQL ConstantCare® service lets users send data in about their SQL Servers and get health & performance advice via email. Users can opt in to share their anonymized data to get better recommendations overall.

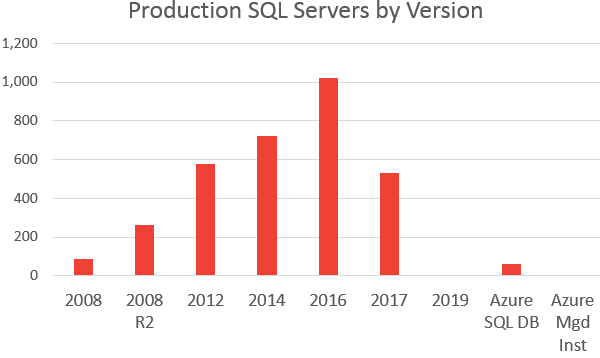

With 3,568 database servers sharing data as of November 28, there’s a lot of useful information in here for the community. Understanding what servers are out there will help you put context around where your own servers rank, and helps the community better build training material that’s relevant for most users. Let’s start with a simple one:

What versions are the most popular?

Or if you’re into percentages:

A few things jump out at me right away:

- SQL Server 2008 has more adoption than Azure SQL DB and Azure Managed Instances combined.

- SQL Server 2019 has only 1 production instance sharing data in the population.

- Almost 50% of the population are already on SQL Server 2016 & 2017. (That’s better than I expected!)

- About 1 in 10 SQL Servers are no longer under support (and don’t even get me started on patching levels.)

Now, I’ll be the first to tell you that SQL ConstantCare® users simply aren’t an accurate representation of the population overall. Quest Spotlight Cloud’s metrics as of 2019/11/28 look a little different: they have 4% of the population on SQL Server 2008, but only 1% of the population on SQL Server 2017. My guess is that that page isn’t kept up to date.

I’m looking forward to trending this data over time to see the rate at which people adopt newer versions of SQL Server. I’m going to publish these quarterly. In the next couple/few, I’ll stick with this same presentation format, but once we’ve got some timeline-friendly data, I’ll look at more interesting ways to present it.

I’m not sure if people who use SQL ConstantCare® are more or less likely to jump to newer versions faster. I’d like to think that you, dear reader, are more likely to be on the cutting edge. However, I might have a couple of different kinds of readers: one conservative group that likes mainstream versions and monitoring tools, and another group that likes cutting edge stuff and doesn’t want SQL ConstantCare®.

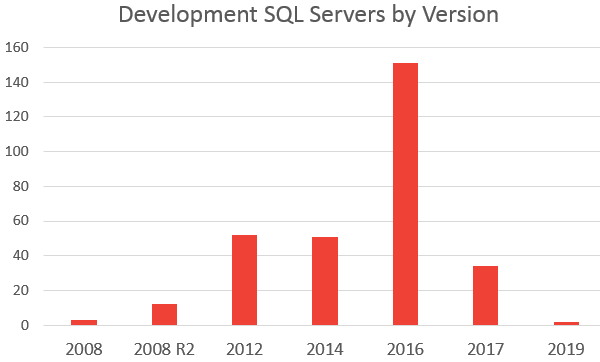

But what about development? Are people maybe testing their apps on newer versions?

What versions are people using in development?

It’s interesting how different this mix is than the last chart! 50% of all Development Edition servers are running SQL Server 2016, and 11% are on 2017.

Twice as many people are using SQL Server 2019 in development as there are in production. (I’ll leave the math to you, dear reader.)

For the rest of the census report, I’m going to focus on just the production servers.

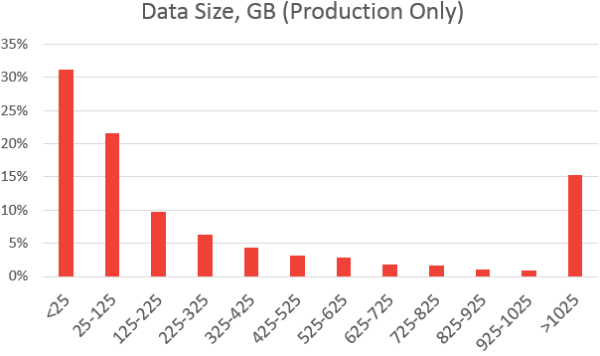

How much data do servers have?

I think this chart really helps to set the rest of them in perspective:

A lot of the SQL ConstantCare® population have what I would consider to be a fairly small server: 30% of them are hosting <25 GB data.

About 15% have 1TB of data or more, but even though that number sounds low, the population is still large. That still gives us about 500 servers’ worth of metadata to help guide folks who are in those larger tiers. There are a dozen instances in the population with over 10TB – good times there.

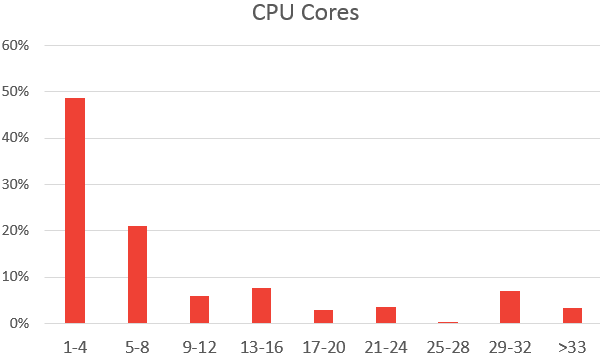

How much hardware do production servers get?

This really helps to reset folks’ expectations around what’s normal for SQL Servers out there. There are a huge, huge amount of production SQL Servers that just don’t need a lot of CPU power. Almost 70% have 1-8 cores, while only 10% of servers have more than 24 CPU cores.

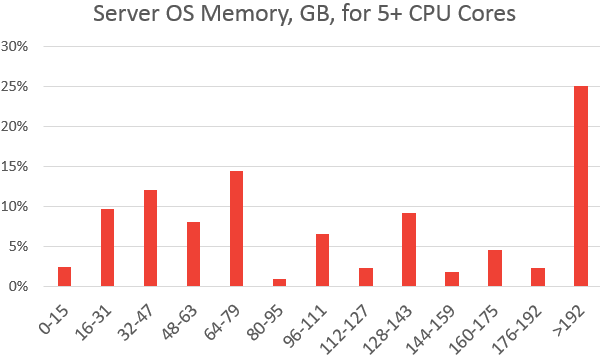

Given that almost 50% of the population have 1-4 cores, that isn’t too surprising. But what if we exclude the folks using 1-4 cores?

Okay, now that’s much more like it: 68% of that population is using 64GB of RAM or more. Good to see folks investing in the resource that has an amazing impact on performance, especially given the SQL Server licensing costs involved.

What questions would you ask the data?

If there’s something you’d like to see analyzed, leave a note in the comments. To make it more likely that I’ll actually run your experiment, include why you want to know it, and even better, your guess as to what the data’s going to say. (That’ll make it a more fun game to play, heh.) I’ll take my favorites and do a followup post with those answers.

44 Comments. Leave new

Out of curiosity, i would like to see different MAXDOP settings between % of servers like how much of those servers have MAXDOP =0, how many have MAXDOP=1, between 1-8 , 8-16, 16-32 or even greater than 32, if possible 🙂 Thanks

Kapil – why? What decisions would you make differently based on that data?

Brent, may be i am not sounding correct. But just wanted to see how many have adapted the new MAXDOP numbers introduced by MS starting SQL 2016 with auto soft numa enabled feature. What decisions would i make i am still not sure as that might need more data like if the servers are actually healthy with those numbers.

Gotcha, but *why* do you want to know that? What are you going to do differently based on the numbers that you see come out of there? I’m confused as to what good this data is going to do for you.

I need to know what settings are applied based on new MAXDOP number as mentioned by MS here https://docs.microsoft.com/en-us/sql/database-engine/configure-windows/configure-the-max-degree-of-parallelism-server-configuration-option?view=sql-server-2016

which seems pretty confusing here for SQL servers starting SQL 2016 and above. Migrated couple of SQL server from 2012 to SQL 2016, seeing some performance hits, just wanted to make sure how others are adjusting MAXDOP based on these new settings

I’d be interested to see data related to patching schedules and versions. How long are people waiting before they patch to the latest and greatest of their SQL version? Is everyone even on the latest version? I’d be interested to see that data across the users mostly because i think our operations team does a great job and I want to see if we’re ahead of the curve or if there’s more we can accomplish to stay up to date. Or perhaps folks are more conservative with applying their patches to production so would that patch upgrade timing differ between the production servers and the development servers? Maybe limit results to look at SQL 2016 since that’s the largest percent of both production and dev servers?

Brandon – the short answer is that no, most shops are nowhere near the most recent patch. If your sysadmins are keeping the server within 30-60 days of the patch releases, you’re way, way ahead of the curve.

Number of databases per server. Just curious from an app perspective and also a DBA maintenance one.

Would also like to see “Number of servers per DBA”

I’d like to know what percentage use AGs and of that, how many use a read only secondary for scaling. I suspect it’ll be a very low percentage (8% and 2%), because I have trouble finding similar cases to my own when researching, but it’d be interesting to confirm

we’re one of those with an AG and read only secondary 🙂

I don’t suppose you got anywhere with supporting UK servers did you?

Rob – no, not really going for the EU market at this time due to the GDPR: https://www.brentozar.com/go/gdpr

Thanks for getting back. I did read that a while ago but thought I’d ask on the off-chance anything had changed.

Brent, if you want a more comprehensive look at what’s out there – based on popularity I figure you could add an option in sp_Blitz to send the basic data needed for sampling back to you free of charge this way you would get a bigger picture as you would see data from servers not under constant care (I presume mostly due to lack of funds) but at least being cared for in some way.

Sassan – nah, I’m not really interested in building the infrastructure for that. sp_Blitz is open source, though – if you’d like to build that, you’re totally welcome to!

I would like to see the cpu and memory analyses broken down by virtual vs physical servers. I work in a virtual environment and have the challenge of getting cores and more importantly memory from the the server admins.

“I feel your pain” – B. Clinton.

Memory and CPU cores are cheap compared to people waiting.

Unlike NASCAR, there are no rewards for getting speed out of stock products (kind of stock)

But cores is a direct licence impact, whereas memory is for ‘free’…

And that is why I love/hate the cloud… when in need we clicked to a bigger vm. And with the PaaS offering from MS it is a simple slider and no license impact (well… capex vs opex…).

Kapil: MaxDOP=1 might be misleading as SharePoint recommends it. I have see two PeopleSoft installs on SQL Server that used it.

If only there was a way to check “Waiting for vendor to certify on SQL 20xx” or “Running unsupported SQL 2008 app and afraid to move to SQL16 with compatibility set to 10.0 because there is no support”

Number of AG servers and hybrid Onprem/Azure v.s. Onprem/AWS v.s. AWS/Azure AG groups would be nice. One company in my SS user group is doing AWS/Azure.

TechnoCaveman – I’m not sure how we would detect whether a SQL Server is on premises in Azure, in AWS, etc.

Hmmm. Thanks.

Let me consult Google or StackExchange. I’m not sure it would violate the J.E.D.I. or other contracts to know that.

Ola has some pointers in his scripts, but it is not very conclusive, the ersion of Azure SQL DB is 12.0.2000.8. But in the end it is still not very conclusive. IaaS Azure vs self-hosted? No clue.

SET @Version = CAST(LEFT(CAST(SERVERPROPERTY(‘ProductVersion’) AS nvarchar(max)),CHARINDEX(‘.’,CAST(SERVERPROPERTY(‘ProductVersion’) AS nvarchar(max))) – 1) + ‘.’ + REPLACE(RIGHT(CAST(SERVERPROPERTY(‘ProductVersion’) AS nvarchar(max)), LEN(CAST(SERVERPROPERTY(‘ProductVersion’) AS nvarchar(max))) – CHARINDEX(‘.’,CAST(SERVERPROPERTY(‘ProductVersion’) AS nvarchar(max)))),’.’,”) AS numeric(18,10))

IF @Version >= 14

BEGIN

SELECT @HostPlatform = host_platform

FROM sys.dm_os_host_info

END

ELSE

BEGIN

SET @HostPlatform = ‘Windows’

END

SET @AmazonRDS = CASE WHEN DB_ID(‘rdsadmin’) IS NOT NULL AND SUSER_SNAME(0x01) = ‘rdsa’ THEN 1 ELSE 0 END

I’m just glad you built decent charts this time 🙂

Zero pies in sight!

Number of SQL Server installations on Linux.

I’d like to see what Page Life Expectancy is. Mine is currently 300, which the internet tells me should be my target, so I’m super happy!

In all seriousness, it would be interesting to know what PLE is as a proportion of total memory. Mine averages around 18,000 for 192GB. I’m not sure there’d be any huge conclusions to draw, just out of curiosity really, as there are so many varying factors.

PLE isn’t really useful when you do daily sampling, though. It varies so much second by second.

Just in case Shaun is being serious rather than joking: On a server with a lot of RAM, a PLE of 300 is not necessarily that great. If you have a 128GB buffer pool, having the pages in that buffer pool expire every 300 seconds means that your server is reading 128GB off of disk (assuming the pages expired because they need to be overwritten) every 5 minutes.

The 300 PLE recommendation is a very old recommendation.

Hi Brian, yes I was joking, fortunately. As I said in the post, the PLE on one of our production SQL Servers averages around 18,000. It drops off a cliff during maintenance windows are you might expect. I’d be very concerned with anything close to 300.

Perhaps this could be an inspiration for a future blog post – “what’s the worst SQL Server advice that still exists across the internet?” (something about table variables being in-memory only springs to mind!)

I’m a big fan of the disable windows firewall or UAC guidance myself. thankfully that seems to be finally dying.

Shaun for some it is no joke.

One production application runs around 300 seconds, sometimes less, during the day.

*Smart* rebuilding of tables with forwarded pages and indexes with fragmentation over ten pages helps but is not even a band aide solution.

What percentage of users are on Enterprise edition and what’s their average stats. From the data you have given I’m going to go with <30%. It seems like there are a lot more smaller servers with less cores than I would have expected, although maybe those with bigger needs have bigger budgets and don't think they need your service.

Tim – oh jeez, I can’t believe I didn’t include that in this post! That’s a great question, and will definitely include that in the followup.

I would like to see a count of numbers of tables, views and stored proc per database.

The reason is to get an idea if people structure their databases in general as I do. The question is partial if they have many databases or a lot in few, and partially if people uses stored proc over views.

My guess is that most of them will have twice as many stored proc than tables. And about one-third of views compared with the number of tables.

Does SQL ConstantCare track VLF counts in transaction logs? If so, how many instances have databases with VLF counts in excess of say 10 per GB on average?

I’m currently putting a process together to automatically manage the VLFs in my platform’s instances as I’m facing a couple of hundred databases with thousands of VLFs in comparatively small log files, and am curious how unique I am in this regard. If there’s a big population of fellow DBAs out there with the same issue, I’d be willing to push my process out there as open source.

I’m not sure if you’ve seen this from the Tiger Team (MS SQL Server Engineering) for VLFs? https://github.com/microsoft/tigertoolbox/blob/master/Fixing-VLFs/README.md

I have indeed, and my process is based around theirs but includes smarter metrics than purely number of VLFs in a given log such as distribution of VLF density throughout the transaction log, as well as how recently growth and shrink activities have occurred.

My intention is make it as automated as possible, and am wondering how much wider an appetite there is for such a tool.

Putting on my product marketing hat for a second: if people don’t know that they’re having a pain, then you have to educate them about the pain, AND educate them about the solution. Solutions like that don’t really have an appetite.

If you saw a thundering amount of questions from people on sites going, “OMG, I have all these VLFs and I can’t figure out how to get rid of ’em,” then there’s an appetite for the tool. I just don’t think most people even know what a VLF is.

I think my first encounter with a VLF was when sp_Blitz told me I had a high number 🙂

https://www.brentozar.com/blitz/high-virtual-log-file-vlf-count/

I suspected that might be the case, so I’d likely push it out as open source but not put much effort into advertising it for now.

Thanks Brent, Philip and Shaun!

No additional questions, just a suitcase full of ‘thanks you’ notes for sharing this data. (Although it could have been a suitcase full of money if it wasn’t for GDPR [crying sound]).

any chance to get the raw data used for these graphs? I’d love to see for example, what percentage per version by say memory size or core count. things like that.

Trav – yes, we’ll be sending it out to the SQL ConstantCare customers who opted into sharing their data as a thank-you for that.