When you run a query, SQL Server guesses how much memory you’re going to need for things like sorts and joins. As your query starts, it gets an allocation of workspace memory, then starts work.

Sometimes SQL Server underestimates the work you’re about to do, and doesn’t grant you enough memory. Say you’re working with a table variable, and SQL Server only estimates it’ll find one row inside – but millions of rows start to come back. Your query doesn’t get granted more memory, oh no – it just spills to disk (TempDB.) What’s worse, if you run that query a hundred times, it’ll spill to disk every single time.

Other times, SQL Server overestimates the work involved in your query, and gives you too much memory. That sounds awesome – but the memory comes at the expensive of other running queries and the buffer pool, leaving you less able to cache data, query plans, and let multiple big queries run at once. Again, SQL Server was kinda death-do-us-part here in the past – it would keep wildly over-granting memory on every execution of the query.

Microsoft knew this was a problem, and they’ve been moving towards a fix:

- In SQL 2012 SP3, we got tools to diagnose memory grants

- In SQL 2016, columnstore users got adaptive memory grants

- In SQL 2017 CU3, we got tools to diagnose TempDB spills

Now, SQL Server 2019 brings adaptive grants to regular queries. Your plain ol’ rowstore tables operating in row (not batch) execution mode get adaptive memory grants. This is the kind of “it just runs faster” feature that everybody can benefit from – although there are a few gotchas.

Let’s see how they work.

We’ll start with the StackOverflow2010 database, the small 10GB version so you can play along at home. I’m going to set the database’s compat level to 150:

|

1 2 3 4 5 6 |

USE [master] GO ALTER DATABASE [StackOverflow2010] SET COMPATIBILITY_LEVEL = 150 GO USE StackOverflow2010; GO |

Then I’m going to run a query that produces an artificially high memory grant, but doesn’t actually use it:

|

1 2 3 4 |

SELECT TOP 101 * FROM dbo.Users ORDER BY DisplayName, Location, WebsiteUrl; GO |

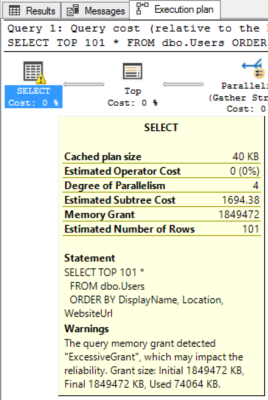

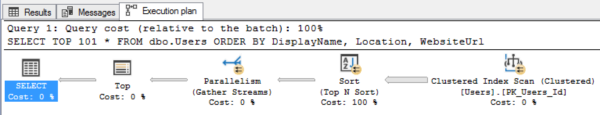

The first time I run the query, the execution plan shows the cowardly yellow bang with a warning about a too-high memory grant:

If you right-click on the SELECT, go into properties, and check out the grant info:

Desired memory is 1.8GB, and feedback isn’t adjusted yet because this is the first execution.

Run it again, and the plan’s warning is gone!

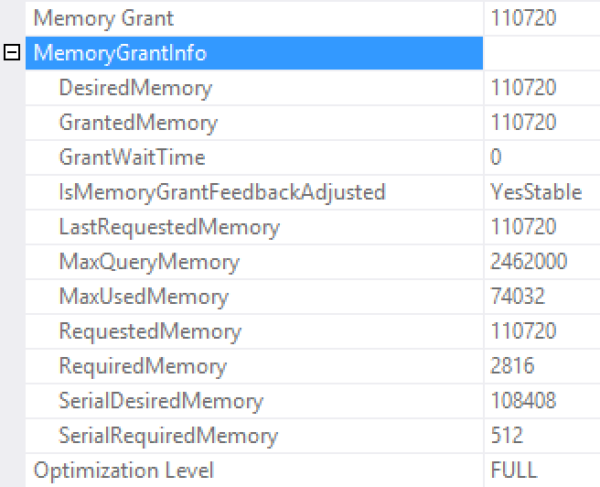

And in the memory grant properties, the memory grant is WAY down. Desired memory is only 111MB, and we see that the memory grant feedback is adjusting:

Put your finger on F5 and hit me baby, one more time, then:

The memory grant has stabilized at 111MB!

This is awesome, but there are drawbacks.

If the grant vacillates too often, like if your query is sensitive to parameter sniffing issues and has wildly different memory usage numbers, SQL Server will try to adjust the grant, but then eventually give up after 32 executions.

If you rebuild your indexes or create new indexes, the grants reset. SQL Server starts its learning curve again – yet another example of why focusing on fragmentation is likely hurting your performance, not helping it.

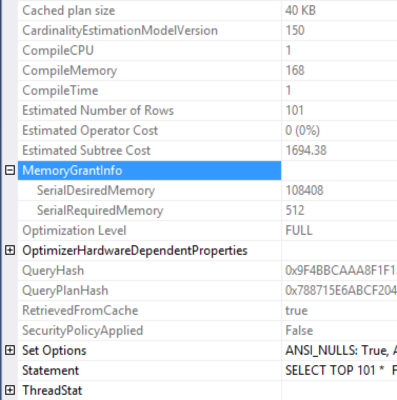

But here’s the really tough part: it’s not easy to see that this is happening, let alone happening in a way that’s beneficial to you. When you’re looking at query plans in the plan cache, their memory metrics are totally devoid of any feedback whatsoever:

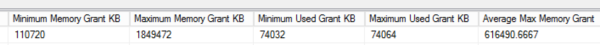

Here, I’m looking at the query plan from the cache (using sp_BlitzCache), and I don’t get any feedback data at all. There’s a small clue if I analyze the wide variations in min and max grants from sp_BlitzCache, which comes from sys.dm_exec_query_stats:

But that’s it. There are no Perfmon counters to show you this is happening. To diagnose problems with it, you’re going to need to bust out Books Online and set up an Extended Events session watching for memory_grant_updated_by_feedback and memory_grant_feedback_loop_disabled.

In summary, I love it. When I explain memory grants in our training classes, people are stunned to find out that they’re not adaptive. SQL Server 2019 just quietly fixes something that’s been a problem for a long, long time, and doesn’t make a big deal of it. I love it – it’s just like the new improvements to table variable estimations. And for more 2019 info, check out Aaron Bertrand’s writeup of what’s new (so far) in SQL Server 2019.

3 Comments. Leave new

I guess you mean 1.8GB desired memory instead of 1.8MB?

I just upgraded a few servers to 2019 RTM-CU18 and with the database in 150 compat mode the IsMemoryGrantFeedbackAdjusted and LastRequestedMemory options are missing. I’ve put a question on dba.stackexchange.com on this and it’s looking like this is an enterprise only feature? is this true? the MS feature matrix says the feedback is enterprise only, but does that mean adaptive stuff is still working under the hood and they’re just hiding the feedback or is there not adaptive grants in Standard edition?

As the documentation states, this is an Enterprise Edition feature.