You’re Crazy

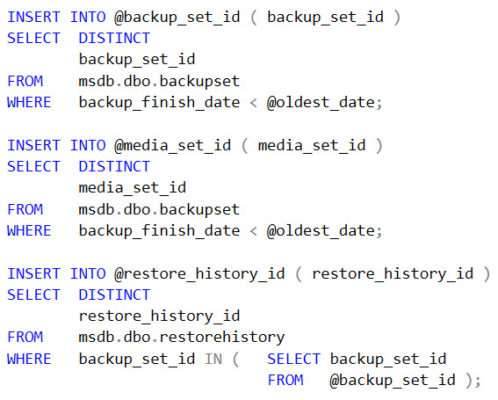

In Part 1, I showed you code that I think has some anti-patterns in it.

In case you didn’t recognize it, it’s actually code that Microsoft wrote. It’s from sp_delete_backuphistory, and it is plum awful.

I have a lot of personal dislike for this one, because after inheriting a server with a 25GB msdb, I nearly crashed it running this proc on one day of backups.

Back then, a really smart guy told me something: If you make a copy of it that uses temp tables instead, it’s a lot faster.

The issues I have with it can be distilled into three things

- Table variables, c’mon

- Running eight different deletes inside one BEGIN TRAN

- TABLE VARIABLES, C’MON

Making Faces

Anyone who has processed data in volume knows how bad table variables can be. The inserts are forced serial, they spill to tempdb a lot, and unless you recompile (which this proc doesnt), you end up with a one row estimate.

Which makes it doubly awkward to trip yourself up inserting to a table variable with a predicate on another table variable. Have mercy.

I have no idea if sticking a PK on any of these might help. Heck, the DML sort might even slow things down.

But it’s not like the optimizer is gonna know you went through the trouble of selecting distinct IDs, otherwise. Table variables get no column level statistics.

This is the thing I like about the temp tables — you can stick an index on them afterwards. In this scenario, I’m in favor of that causing a statement level recompile.

Begin Without End, Amen

The worst thing, though, really is the use of a transaction around eight deletes.

You can have a god awful amount of data in these tables, even in a single day. The server that I had issues with had around 5000 databases on it, getting ~15 minute log backups.

Plus daily fulls and 6 hour Diffs.

Do that math.

The whole time a delete is running, the log backup jobs running couldn’t write to the tables.

If one of them throws an error towards the end, they all have to roll back. Imagine 7 or 8 deletes all rolling back.

Blocking your log backup jobs from writing to tables.

So you can’t take more log backups.

And these are the people who write the product

If you’re an ISV, or someone getting started with SQL Server, you might poke around the code in the system for tips and tricks.

And you’d see stuff like this.

Which may explain some things…

Microsoft — if you’d like some help with SQL Server, click the consulting link at the top of the page!

Thanks for reading!

22 Comments. Leave new

“Hey, Whiz-kid, welcome to Microsoft. Your first task will be pretty simple, you know, just to get your feet wet.”

“Cool, happy to be here, I’ll do anything.”

“We need a stored procedure to clear old backup history from MSDB. Be innovative.”

“On it!”

Two days later.

“Great work, kid! You pass the test. Ready for your real assignment?”

“Absolutely.”

“Let me introduce you to the Entity Framework Team. They’re doing some really great, abstract, innovative stuff. Cutting edge! You’ll fit right in…”

Will Done!

(GET IT?)

Wow… that’s harsh… not wrong but harsh. 🙂

A lot of the sample databases. The code SQL products are not normalized, properly designed or conformed to any kind standards at all. Microsoft is no exception. But in their defense I will say sample database tries to show all the features of your product/SQL, and the application actually has to implement all of them. However, their inability to conform to any kind of ISO rules, basic naming conventions, or any kind of consistency (probably goes parts of the sample database were built by different teams) is a nightmare for someone trying to use them to learn either SQL or the product. Microsoft really ought to take the time to go through this, clean it up and reissue it. People the real world don’t have either the money for the luxury of a sample database that Microsoft as.

Joe — yeah, totally. When AdventureWorks dropped with entirely unnecessary spatial data in the Employee table, I gave up on it.

Every employee is spatial!

(GET IT?)

Nice post! The first time I came across this one was when someone decided to create a maintenance plan to purge the backup history to retain just the last month of data… There was data in msdb dating back 4 years and the worst part was that not only was it using that awful proc but it used just one call to the proc to try and purge the entire 4 years in one go!! Soon got shot of that maintenance plan 🙂

Hah. Oh boy. “I don’t know why this is slow…”

Well they are no longer using Cursors as they did in 2000 and maybe 2005.

Did they fix the indexing for the FK checks?

Maybe not, I looked at 2008 R2 and in the backupset table, the column media_set_id does not have an index on it, therefore if you delete from the [backupmediaset] it has to do a table scan on backupset.

I don’t know if that issue is still there in 2012 or later. Probably it is.

There are a bunch of indexes on backupset now, yeah.

Aw heck, just add an index to those msdb tables. The big explicit tran will get short again :). Just remember to save your custom indexing script, because upgrading SQL Server can send your indexes to the big bit bucket in the sky…

Nice post Erik. Actually one statement from the sp_delete_backuphistory Job was one of the top 10 queries on my prod server (old SQL 2008)…until I added an index on it. Didn’t go that far to look at the sp and make changes to the code though.

Martin — you know it’s funny, I wanted to add an index to msdb, but the table was so big that the poor sloth of a server it was on couldn’t handle it. I had to add it after I deleted all the data.

Ouch!

Hi Erik, enjoyed this series! I have a question – what is it about table variables that you don’t particularly like? When I first started with T-SQL years ago, my initial mentor suggested using them for specific cases where you need to define a finite number of values. Given that I haven’t seen issues with using them throughout my career, I primarily use them to store small sets of relatively static data (< 10 rows) that I need to refer to for conditional operations (almost always IF/ELSE). If it's a larger set of data I need to operate against or use to JOIN on physical tables, I'll use a temp table. But the relatively cleaner syntax is appealing, and I have not noticed any differences on the performance side.

A more recent implementation of mine is where a comma separated list of up to 4 string values is passed to a stored procedure parameter. The strings are no longer than 3 characters each. Based on what exactly is passed, I order them in a particular manner. I run or skip smaller IF/ELSE blocks within the transaction based on the combination that was passed. The values are only used internally by the stored procedure for these conditionals.

An example of the case:

DECLARE @Series varchar(16) = 'EFG,LMN,ABC,HIJ'

DECLARE @SeriesSplit table (SeriesPriority tinyint, Series varchar(3))

INSERT INTO @SeriesSplit (SeriesPriority, Series)

SELECT

ROW_NUMBER() OVER (ORDER BY CASE [value] WHEN 'ABC' THEN 1 WHEN 'EFG' THEN 2 WHEN 'HIJ' THEN 3 WHEN 'LMN' THEN 4 ELSE 5 END) AS 'SeriesPriority'

,[value] AS 'Series'

FROM

string_split(@Series,',')

WHERE

[value] ”

SELECT

SeriesPriority

,Series

FROM

@SeriesSplit

Am I wrong in using a table variable over a temp table here?

I will definitely look into the performance of using a temp table on the full stored procedure to see if I notice any increases in performance or differences in execution plan, but I’ve been satisfied with this usage for some time. Perhaps I’ve navigated the internet where I hadn’t read anything that contradicted my usage up until now, so I may very well be wrong and can quickly improve on various stored procedures and make sure I’m doing so dilligently in the future as well.

Thank you!

Zak — for small amounts of data like that, you likely won’t notice a difference.

Table variables turn rancid with larger data sets. I believe I outlined a few reasons either in this post or the first one.

Thanks!

Hey, I’m speaking up on behalf of plums!

Very few of them are plumb awful! 😉

Dambit.

SSISDB cleanup jobs were obviously written by the same person! And the default config is so sub optimal if you’re running meaningful numbers of jobs every day.

The server that I had issues with had around 5000 databases on it, getting ~15 minute log backups.

Wow. Just wow. I thought having 100 databases on a single server was pushing it.

“They’re all temporary, use-once databases. We can just drop them when we’re done. Don’t need backups or anything.”

-The Original Spec

“Microsoft — if you’d like some help with SQL Server, click the consulting link at the top of the page!”

Seriously this is the funniest thing I have read on the interwebs in the last week. Well done, Erik 🙂