The Humble Heap

If you don’t know this by now, I’m going to shovel it at you:

If you have a table with no clustered index (a Heap), and you delete rows from it, the resulting empty pages may not be deallocated.

You’ll have a table with a bunch of empty pages in it, and those pages will be read whenever the Heap is scanned.

Under “normal” circumstances, you can use a TABLOCK or TABLOCKX hint along with your delete to force the deallocation to happen.

Otherwise, you’re left relying on your delete query possibly escalating to a table level lock, and releasing the pages on its own (absent a rebuild table command).

This is true under any pessimistic isolation level. This is not true under optimistic isolation levels (Snapshot, RCSI).

Demonstrate My Syntax

Here’s some stuff:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 |

USE Crap; SET NOCOUNT ON; ALTER DATABASE Crap SET ALLOW_SNAPSHOT_ISOLATION ON; --Save this for a second test --ALTER DATABASE Crap SET READ_COMMITTED_SNAPSHOT ON; DROP TABLE IF EXISTS dbo.HeapMe; CREATE TABLE dbo.HeapMe ( id INT PRIMARY KEY NONCLUSTERED, filler_bunny CHAR(1000) DEFAULT 'A' ); INSERT dbo.HeapMe WITH(TABLOCKX) ( id ) SELECT x.n FROM ( SELECT TOP 100000 ROW_NUMBER() OVER ( ORDER BY @@DBTS ) AS n FROM sys.messages AS m ) AS x; ALTER TABLE dbo.HeapMe REBUILD; |

We have a Heap with a nonclustered primary key. This sounds misleading, but it’s not. A nonclustered PK is not a substitute for a clustered index.

It’s there to speed up the delete that we’ll run in a minute.

We have a couple ways to look at the Heap.

A query I kinda hate:

|

1 2 3 4 5 6 7 8 9 10 11 |

SELECT DB_NAME(ps.database_id) AS database_name, OBJECT_NAME(ps.object_id) AS table_name, ISNULL(i.name, 'EL HEAPO') AS index_name, ps.page_count, ps.avg_fragmentation_in_percent, ps.avg_page_space_used_in_percent, ps.index_id FROM sys.dm_db_index_physical_stats(DB_ID(N'Crap'), OBJECT_ID(N'dbo.HeapMe'), NULL, NULL, 'SAMPLED') AS ps JOIN sys.indexes AS i ON ps.object_id = i.object_id AND i.index_id = ps.index_id; |

And sp_BlitzIndex:

|

1 2 3 |

EXEC master.dbo.sp_BlitzIndex @DatabaseName = N'Crap', @SchemaName = N'dbo', @TableName = N'HeapMe'; |

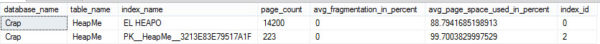

What do we know from looking at these?

- The first query tells us that our heap has 14,200 pages in it, and the NC/PK has 223 pages in it, that there’s no fragmentation (lol I know), and that the average space used for both is pretty high. Our pages are full, in other words.

- sp_BlitzIndex tells us that our Heap is ~111MB, and our NC/PK is 1.8MB. Both have 100k rows in them. It does not regale us with tales of fragmentation.

Deleting Many Singletons

If a delete comes along — alright, so it’s a row-at-a-time delete (stick with me on this) — and deletes every row in the table, what happens?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

--Quote this out to test RCSI SET TRANSACTION ISOLATION LEVEL SNAPSHOT; DECLARE @id INT = 1; WHILE @id <= 100000 BEGIN DELETE hm FROM dbo.HeapMe AS hm WITH (TABLOCKX) WHERE hm.id = @id; SET @id += 1; END; |

I’m doing the delete like this because it will never escalate to a table level lock on its own, but I’m hinting a table level lock for EVERY delete.

You can do this in other ways to avoid them, but this is the path of least resistance.

What do our exploratory queries have to say?

We have 0 rows in the table, but pages are still allocated to it.

In the case of the Heap, all pages remain allocated, and it retains the same size.

In the case of the NC/PK, about 200 pages were deallocated, but not all of them were (yet — more on this later).

So that’s… fun. I’m having fun.

You can get slightly different results without the loop.

If you just delete everything, the Heap will remain with all its pages in tact. The NC/PK will (as far as I can tell) be reduced to a single page.

|

1 2 3 |

SET TRANSACTION ISOLATION LEVEL SNAPSHOT; DELETE hm FROM dbo.HeapMe AS hm WITH (TABLOCKX) |

Oddball

Under both RCSI and SI, if we use a TABLOCK hint instead of TABLOCKX…

The size of, and the number of pages in the NC/PK effectively DOUBLES.

DOUBLES.

>mfw

Repetition Is Everything

I left other code in there if you want to try it at home with other combinations.

You can also try it under Read Committed if you’d like, to see different results where pages are deallocated.

Now, you can fix this by running ALTER TABLE dbo.HeapMe REBUILD;, which will release all the empty pages back.

Deletes Mangle Scans

If we run a couple simple queries, things get awkward.

|

1 2 3 4 5 6 7 8 9 |

SET STATISTICS IO ON; SELECT COUNT_BIG(DISTINCT h.filler_bunny) FROM dbo.HeapMe AS h; SELECT COUNT_BIG(DISTINCT h.id) FROM dbo.HeapMe AS h; SET STATISTICS IO OFF; |

The first query, which needs to access data via the Heap, does a lot of extra work.

The query that hits the PK/NC does significantly less (comparatively)

|

1 2 |

Table 'HeapMe'. Scan count 1, logical reads 14287, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. Table 'HeapMe'. Scan count 1, logical reads 2, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0. |

Q&A

Why does this happen?

My pedestrian explanation is that when the deletes happen, and rows get versioned out to tempdb, the pointers keep the pages allocated just in case.

Why don’t they deallocate afterwards?

It looks like they do deallocate from the NC/PK the next time a CHECKPOINT/Ghost Record Cleanup process runs.

The Heap remains Heapy, with all the pages allocated to it though. It’s as if the table level lock never happened.

Does query isolation level matter?

No, setting it to Serializable, Repeatable Read, or anything else results in the same mess.

Thanks for reading!

6 Comments. Leave new

After I Delete the rows, EL HEAPO still has 14.8% average space used. What’s still on those pages?

Something I find very strange: If you go through the exercise to the end, then try and insert another 10,000 rows into HeapMe, its nearly 10x slower. For me, it took ~2.5 seconds to insert the first time, then ~20. The deletes remain almost the same at ~1:10. I’d expect the inserts to be faster the second time, not slower.

James, you can use DBCC PAGE to find out. I’d wager it’s versioning pointers to tempdb though.

What’s the best solution to deal with this retrospectively ? Will a rebuild of the NC PK do the trick, or would a ‘total cleanup’ amount to rebuilding the the file(group)?

gb — no, unfortunately just rebuilding the PK/NC won’t help. You’d have to rebuild the table, which would also rebuild the PK/NC, and any other NC indexes you’ve got on there.

Great illustrative article, I love seeing huge heap tables when I start new positions, it’s like having a corpse in the corner of the room, you’re not quite sure when it is going to start to cause trouble or how much, you just know it will at some point.

I’ve been seeing large amounts of unused but allocated pages on CLUSTERED indexes within my database requiring REBUILD, ( i think this is because the index regularly receives inserts that are PK collisions) DBCC page shows a repeating pattern of 1 used, 7 or so unused pages in sequential order.