Your boss or client came to you and said, “Give me a quick health check on this SQL Server.”

Step 1: Download & run sp_Blitz.

Go to our download page and get our First Responder Kit. There’s a bunch of scripts and white papers in there, but the one to start with is sp_Blitz.sql. Open that in SSMS, run the script, and it will install sp_Blitz in whatever database you’re in. (I usually put it in the master database, but you don’t have to – it works anywhere.)

Then, run it with these options:

|

1 |

EXEC sp_Blitz @CheckUserDatabaseObjects = 0, @CheckServerInfo = 1; |

These two parameters give you a server-level check without looking inside databases (slowly) for things like heaps and triggers.

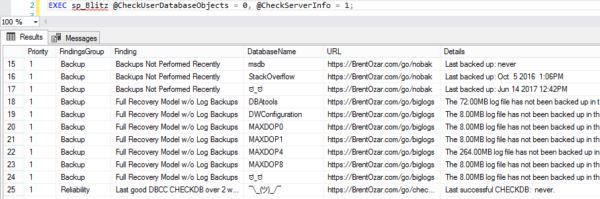

Here’s what the output looks like (click to zoom):

The results are prioritized:

- Priority 1-50 – these are super important. Jump on ’em right away, lest you lose data or suffer huge performance issues. If you’re doing a health check for a client, you should probably include a quote for this work as part of your health check deliverables.

- Priorities 51-150 – solve these later as you get time.

- Priorities 151+ – mostly informational, FYI stuff. You may not even want to do anything about these, but knowing that the SQL Server is configured this way may prevent surprises later. You probably don’t want to include these in the health check.

Each check has a URL that you can copy/paste into a web browser for more details about what the warning means, and what work you’ll need to do in order to fix it.

Step 2: Summarize the health risks.

sp_Blitz is written by DBAs, for DBAs. We try to make it as easy to consume as we can, but if you’re going to present the findings to end users or managers, you may need to spend more time explaining the results.

If you only have ten minutes, run sp_Blitz, copy/paste the results into Excel, and then start hiding or deleting the columns or rows that you don’t need management to see. For example, if you’re a consultant, you probably wanna delete the URL column so your clients don’t see our names all over it.

I know what you’re thinking: “But wait, Brent – you can’t be okay with that.” Sure I am! Your client has already hired you to do this, right? No sense in wasting time reinventing the wheel. That’s why we share this stuff as open source to begin with.

If you have an hour or two, add an executive summary in Word that explains:

- The risks that scare you the most (like what will happen if the SQL Server dies at 5PM on Friday)

- What actions you recommend in order to mitigate those risks (like how to configure better backups or corruption checking)

- How much time (or money) it’ll take for you to perform those actions

Your goal is to make it as easy as possible for the reader to say, “Wow, yeah, that scares me too, and we should definitely spend $X right now to get this taken care of.”

If you have a day, build a PowerPoint presentation, set up a meeting with the stakeholders, and then walk them through the findings. To see examples of that, check out the sample findings from our SQL Critical Care®.

And that’s it.

You don’t have to spend money or read a book or watch a video. Just go download sp_Blitz and get started.

37 Comments. Leave new

For christ sake, Brent it doesnt ske me much of a consultant to take your scripts maybe glen barrys , who is active and various other scripts… The main point is that someone understands rather than blindly runs someone elses script. Really they should call you in togi e them a healthcheck. What if i were a doctor relying onsomeines book or software

Mark – sure, in a perfect world, everyone would call in a professional database administrator to look at every server.

In that perfect world, every server would be perfectly configured, patched correctly, all of the databases would be backed up, we’d never have corruption, and my breath would always smell of roses. (I’d say every blog post commenter would have perfect spelling and grammar, but hey, let’s not go too far.)

Unfortunately, we don’t live in that perfect world, and sometimes people have to work on things they’re not quite qualified to work on. That’s why we build our tools, and why Glenn builds his – to help people conquer servers before bad things happen.

LOL that you bothered to reply to this guy.

😉

” people have to work on things they’re not quite qualified to work on” – This is how we learn. I’ve learned far more fixing slow code/servers/PCs than I ever did watching good code run flawlessly.

It is only after getting the “in sufficient memory for large optimizer” error that I learned there are three optimizer sizes in SQL server.

Error messages are guides to what I did not know.

thanks for all of the work making my job as accidental DBA easier – I have a friend who runs veem backups and not sql backups – how do we get veem to tell SQL that the databases have been backed up which would then make blitz work even better?

Jim – well, kinda bad news for your friend there. It sounds like they’re using VM snapshots, but NOT using Veeam’s built-in SQL Server integration. As a result, they’re only getting dirty (crash-consistent) snaps of the VM, and sometimes the databases will come back as corrupt.

To fix that, have your friend read Veeam’s instructions on how to configure backups differently for SQL Server:

https://helpcenter.veeam.com/evaluation/backup/vsphere/en/sql_backup_and_restore.html

Hope that helps!

Brent

I’m also having Veeam SQL Server backups encouraged to me by our VM admins, and some recovery features look good assuming it is configured correctly as the URL above defines. I already have my own processes that take full backups and copy to another server so I can validate the backup file will restore OK and also run database consistency checks….could I turn off my own transaction log backups, and add a COPY_ONLY to my full job leaving the transaction log chain intact for Veeam able to handle point in time recovery yet also give me a second native backup file? I haven’t had a chance to test this (obviously).

Kyle – for anything regarding backups, you would want to test it yourself. Things can change so fast with third party products, and you don’t wanna gamble.

Thank you Brent – in some ways you are right. Of course this is almost philosophical in that you (and paul randall,glen,aaron bertrand et all) help as much as you can. All in all that is the right thing to do ! I love your expression for being out of your depth….dunning kruger effect anyone

Mark – you’re welcome! Yeah, Paul, Glenn, Aaron Bertrand, Adam Machanic for sp_WhoIsActive, Kendra Little for writing sp_BlitzIndex, Jeremiah Peschka for writing sp_BlitzCache, Ola Hallengren for building his maintenance solutions, the entire DBAtools.io volunteers, I could just go on and on. The SQL Server community is phenomenal at sharing the stuff they’ve built in order to raise everyone’s game.

[…] Health Check. […]

Hi there,

I used sp_Blitz in one of our database server and it is really helpful as we can get a feel how the database is behaving.

One question, is it possible to have sp_Blitz on a separate server and the running it against multiple remote database servers? I’m a bit new on SQL Server, I am an Oracle DBA/Developer transitioning to SQL Server.

Thanks

Chris

Chris – nah, it has to be run on the database itself. Hope that helps!

Thank you very much, Brent. No problem. Really appreciate for replying.

Maybe you can use a 3rd party tool from redgate to execute on multiple remote servers: https://www.red-gate.com/products/dba/sql-multi-script/index but all servers should be on the same network if not maybe you have to switch vpn connections. You can run on all databases executing “sp_msforeachdb ‘sp_blitz'” (if you don´t have sp_msforeachdb on your SQL Servers you can download from https://github.com/BrentOzarULTD/SQL-Server-First-Responder-Kit/blob/dev/sp_foreachdb.sql and add to your master database on each server before use)

[…] rather reuse what’s already out there for example Brent Ozar’s SQL Health Check (https://www.brentozar.com/archive/2017/10/free-sql-server-health-check/). I will use the intial out of the box analysis, running it across a variety of systems to see […]

FYI – 1) These scrips work on the new SQL 2019 CTP

15.0.1200.24. Patch Level: CTP2.2. Edition: Enterprise Evaluation Edition (64-bit). Availability Groups Enabled: 0. Availability Groups Manager Status: 1

2) Run the scripts before some one else does. I thought I had a clean house (doesn’t everyone)

3) Keep learning. Had to google a few things to fix what it found. Why? Fix it fast now to take the course and do SQL Saturdays

we have 32 virtual processor and 199 GB allocated memory for sql In my organization

and current Cost Threshold of Parallelism is set to 20 and Max degree of parallelism set to 2.

Also we have many client databases among one is having huge/heavy transactions and couple of queries get timed out in the application 3 or 4 days once but the same query produce resultset in few seconds if it triggered directly from the SSMS.

Will I get any improvement ,If I increase the Cost Threshold and max degree of parallelism.

Appreciate your help

Diwa – sure, that’s exactly the kind of question I answer as part of my consulting. Click Consulting at the top of the page and you can learn more about my services. Thanks!

When I run step 1 on AWS RDS SQL Server, I got an error

“You do not have permission to run ‘SYS.TRACES’.”

What I should do?

Alex – for support on the scripts, go ahead and hit the readme.txt they came with. Thanks!

Hi!!, i need create a database “dbatools” on every server that i want to check healthy or i can run sp_blitz locally and extract data from remote instances???

Pablo – hit the readme for where to go for support questions. Thanks!

Hi, thanks for reply!, bue i’ve submitted my email on sqlslack.com but i don’t have a user yet….

PLE new record set – 5 seconds. Under 7 seconds sustained.

– SQL 2016 with 14Gb of memory on a 16Gb server running windows server 2012 R2 Standard.

Yes I have the screen shot from Idera DM to prove 5 seconds. No photoshop.

Now to find a similar large machine with an even shorter PLE. Not a good thing. You have probably seen worse.

Errr, first, 16GB isn’t large. My laptop has more.

Second, yes, we do achieve worse (with larger hardware) during our Mastering Server Tuning class. Come on down! You’d love seeing it.

Sorry, I replied in the wrong place.

(sigh) 16Gb is small but getting memory is hard – as if he was taking it out of his kids X-Box. (not implying that is the case. Just really hard. Different bosses, nuff said)

Yes I need to take the class. With November sales there is no reason for me not to.

Just could not believe PLE of 5 seconds.

(leaves to check if PLE is in SP_Blitz)

What about disk busy time? I share the SAN with others?

Once SQL waits are reduced – first place to look – and cxpacket waits are kind of ignored. reducing I/O traffic and disk I/O is preemptive help.

BTW mw work PC home is high end think pad with 32Gb DDR4 memory and dual NVMe SSDs. Better than the production 230Gb database with 16Gb memory. It is a strange corporate world Mr. Ozar as you know. Strangers in a strange land if you grok.

Disk busy time is a made-up metric: it’s disk queue length * 100%. If you have a disk queue length of 1, disk busy time will be 100%. 2, 200%, etc.

Gonna be brutally honest here: it doesn’t sound like you’ve been to a SQL Server training class in the last decade. That number hasn’t been useful since maybe 2000.

Be brutally honest, life is. So are problems – they do not cut me any slack & “I get no respect”

Yes even a pre SQL 6.5, pre Tom Kyte DBA needs a skills tune up.

Without being told “those measurements” like “SQL bucks” for cost of execution have gone the way of the Dodo, PLE, Disk I/O – etc will still be used.

Changing disk cluster size from 4K to 64K really did help. Physics of the system always wins “cause I can’t be changing the laws of physics” – Montgomary Scott.

To paraphrase Sun Tzu “the best disk I/O is the one you do not have to fight”

[yes I’m thinking of how to get my boss to pay for the class]

(sigh) 16Gb is small but getting memory is hard – as if he was taking it out of his kids X-Box.

Yes I need to take the class. With November sales there is no reason for me not to.

Just could not believe PLE of 5 seconds.

(leaves to check if PLE is in SP_Blitz)

Is it fine to run on prod backup – my client/manager to run on prod?

I have created the sp_Blitz on Azure SQL database and apeared following error…

“Msg 40515, Level 15, State 1, Procedure sp_Blitz, Line 16 [Batch Start Line 3]

Reference to database and/or server name in ‘model.sys.objects’ is not supported in this version of SQL Server.”

SQL Server version is ” Microsoft SQL Azure (RTM) – 12.0.2000.8 ”

Is there any solution of it ?

Check the readme, which links to you to what’s supported and what isn’t. sp_Blitz is not supported on Azure SQL DB.

Thank you for the update, Brent. While I initially thought it was supported, do you have any plans to develop an sp_Blitz version that is compatible with Azure SQL DB? I believe many DBAs are eagerly anticipating such an enhancement.

No.