When you change MAXDOP and run RECONFIGURE, SQL Server clears the plan cache. (It’s documented, by the way, as is the rest of the behavior I’m about to show you, but sometimes I like to learn the hard way by running experiments.) Here’s the easiest way to see it – but make sure to only do this on a development server, not in production:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 |

/* See the oldest plan in the cache: */ SELECT TOP 1 * FROM sys.dm_exec_query_stats ORDER BY creation_time GO /* sp_configure has auto-complete, kinda, very convenient. Just check what it was before so you can put it back. */ sp_configure 'max degree', 4 RECONFIGURE GO /* Now check again */ SELECT TOP 1 * FROM sys.dm_exec_query_stats ORDER BY creation_time GO |

When you check again, you’ll see that your plan cache has gotten the Men in Black treatment.

It makes sense that changing parallelism from single-threaded (1) to any other option would require building plans anew, but you might be surprised to see that any change causes it to blow the plan cache – even from, say, 4 to 8.

Or even if you change it from 4 to, uh, 4.

There’s no logic built into RECONFIGURE – it just flat out blows the plan cache if you issue any sp_configure command to alter MAXDOP, regardless of whether the value was changed or not. (Remember that – it’s going to come in handy here in a second.)

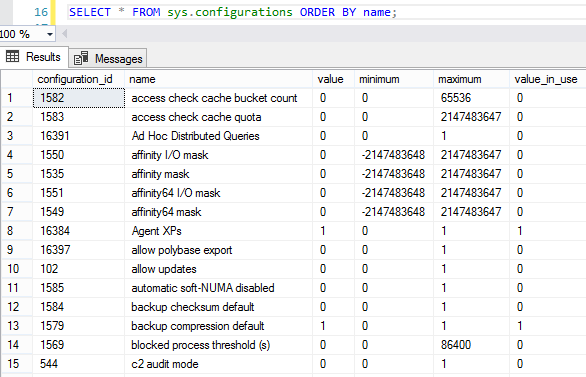

So what about other options? To see the full list, check the sys.configurations table:

Dang, there’s dozens! Clearly, I’m not going to run each of these by hand to write a blog post, dear reader. To the power of dynamic SQL! Knowing that a non-change still blows the plan cache, let’s write dynamic SQL that will:

- Put a query in the plan cache

- Run sp_configure with one of the configuration values, and its maximum value

- Run reconfigure

- Check to see if the plan cache was cleared

- Run sp_configure again with the original value to set it back

DO NOT RUN THIS ON A SERVER YOU CARE ABOUT.

But here it is in case you wanna poke holes in my logic, because I’m pretty sure there’s some:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 |

DECLARE @string_to_execute NVARCHAR(1000), @current_name NVARCHAR(200), @current_value_in_use SQL_VARIANT, @current_maximum SQL_VARIANT; CREATE TABLE #configs (name NVARCHAR(200), value_in_use SQL_VARIANT, maximum SQL_VARIANT, clears_plan_cache BIT); INSERT INTO #configs (name, value_in_use, maximum, clears_plan_cache) SELECT name, value_in_use, maximum, 0 FROM sys.configurations; DECLARE config_cursor CURSOR FOR SELECT name, value_in_use, maximum FROM #configs WHERE name NOT IN ('default language', 'default full-text language', 'min server memory (MB)', 'user options'); OPEN config_cursor; FETCH NEXT FROM config_cursor INTO @current_name, @current_value_in_use, @current_maximum; WHILE @@FETCH_STATUS = 0 BEGIN /* Put something in the plan cache */ /* Run sp_configure to set it to maximum */ SET @string_to_execute = 'sp_configure ''' + @current_name + ''', ''' + CAST(@current_maximum AS NVARCHAR(100)) + '''; RECONFIGURE WITH OVERRIDE;'; EXEC(@string_to_execute); /* Check the plan cache */ IF NOT EXISTS(SELECT * FROM sys.dm_exec_query_stats) UPDATE #configs SET clears_plan_cache = 1 WHERE name = @current_name; /* Run sp_configure to set it back */ SET @string_to_execute = 'sp_configure ''' + @current_name + ''', ''' + CAST(@current_value_in_use AS NVARCHAR(100)) + '''; RECONFIGURE WITH OVERRIDE;'; EXEC(@string_to_execute); FETCH NEXT FROM config_cursor INTO @current_name, @current_value_in_use, @current_maximum; END CLOSE config_cursor; DEALLOCATE config_cursor; SELECT name, clears_plan_cache FROM #configs WHERE clears_plan_cache = 1 ORDER BY name; DROP TABLE #configs; |

The end result:

Voila – these are options should probably only be changed during a maintenance window, and monitor for parameter sniffing issues afterwards.

6 Comments. Leave new

What SQL Server Version and Edition did you run this on?

I’m getting slightly different results for 2008 R2 (SP3) – Standard.

(I also had to disable the Affinity Mask settings as SQL didn’t like a mask of 2147483647…)

access check cache bucket count

access check cache quota

cost threshold for parallelism

cross db ownership chaining

index create memory (KB)

max degree of parallelism

max server memory (MB)

max text repl size (B)

min memory per query (KB)

query governor cost limit

query wait (s)

remote query timeout (s)

2016 SP1 Developer Edition.

A bunch of smart people have told me to be very paranoid about clearing the plan cache. Having never been burned by this, these concerns seem overblown:

1) Yes, clearing the cache might cause different, worse query plans to show up. But that could just as easily happen due to an automatic statistics update. If a query is in danger of switching to a bad plan, that danger exists even without clearing the plan cache.

2) Yes, recompiling a query plan has a cost, but that cost is tiny compared to the cost of executing plans. In a data warehouse, I would expect compilation cost to be tiny compared to query cost, even for a single query (similarly, data import CPU costs are usually driven more by the amount of data being moved, rather than generating the plan itself). In an OLTP database, I would expect that most of the queries are cheap to compile, cheap to run, and are run frequently…but compilation only has to happen once per distinct query.

Unless your database is constantly bumping up against a CPU ceiling (i.e., not even enough head room to deal with micro-variation in demand), I would think freeing the procedure cache would just be a blip, lasting for under a second and being indistinguishable from blips caused by variance in demand.

What kind of extreme databases are people running where this is anything more than a minor footnote?

Just an example off the top of my head – let’s say you get asked to look into why this database you’ve never seen before has been running slowly over the last couple of days, but it’s not slow at this moment. Right off the bat, you notice that MAXDOP and Cost Threshold for Parallelism are using the factory settings and you want to change them based on the server specs. If you just go ahead and update them at the start, you lose the plan cache and with it all the related data you could have looked at for query performance.

(And yes, I spend a lot of time in sp_BlitzCache, often on databases I’ve never seen before.)

Wayne, you make an excellent point. I was primarily responding to the final sentence, where Brent warned that the configuration changes should be made during a maintenance window because the procedure cache would reset (rather than warning that changes can be made whenever, but performance statistics should be collected before they’re inadvertently lost due recompiles).

Personally, I have a tendency to (ab)use the procedure cache to collect simple database statistics, so the idea that making a change would reset such statistics is not very concerning; I’d usually want to compare stats before/after a change myself, so I’d probably have reset the procedure cache myself (and, obviously, all of the changes that reset the procedure cache are changes that tend to have significant performance impact).

Microsoft has a few more options documented that they say will wipe the plan cache, including Max/Min Server Memory:

https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-freeproccache-transact-sql?view=sql-server-2017