In our new 2-day hands-on lab course, Performance Tuning By Example, students have a series of performance tuning goals they have to accomplish. For example, in one scenario they have to fix the performance problem only using indexes, and in another they’re only allowed to change code (but not indexes.)

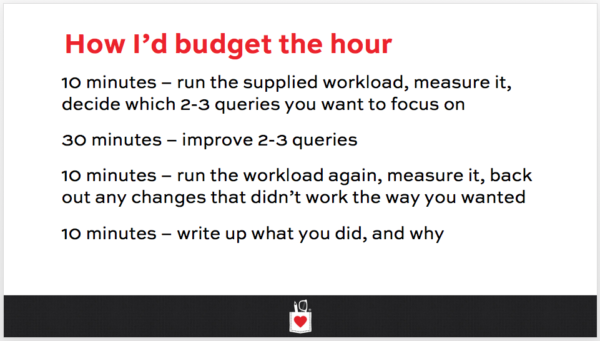

In each lab, they have just one hour to do their work. It’s way harder than it sounds, so I give them a time budget for starters. Here’s Lab 3’s budget – keep in mind that by now, they’re already used to running & measuring a workload very quickly, so they don’t have much time to do that portion:

Sketching out a high-level project plan of what you want to do when performance tuning helps you understand the depth to which you can go in the limited time you’ve got.

If someone hands you a 1,000 line stored procedure and asks you to make it go faster, think about:

- Getting a working set of parameters to run it

- Verifying that the parameters take the right amount of time to run in development (for example, make sure it’s as slow in development as it is in production)

- Measuring the code to see which part of it is consuming the most resources

- Reading through that part of the code to get a rough idea of what it’s doing

- Making a hypothesis about why that part uses so many resources, and what you could do to cut that resource consumption

- Testing that hypothesis – writing the code, and testing the performance difference

- If it works, getting other parameters for the proc and making sure their performance doesn’t get worse

- Testing the validity of the data output (making sure it still returns the same result)

After you’ve done this a lot, you’ll understand how much time each of those steps takes for your particular application, and you’ll be able to do better work estimates. However, when you’re just getting started, a lot of those can be shots in the dark.

When I first got started building the labs for this class, I thought I’d have to put together complex scenarios with hundreds of queries running concurrently, making students sift through all kinds of conflicting data in order to find the right next steps. The more I ran through the labs myself, though, the more I remembered just how hard this stuff is when you’re facing a database you may never have seen before!

3 Comments. Leave new

Frankly, I would not trust stored procedure of 1000 lines. It just seems so fundamentally wrong. The T-SQL is a simple one pass compiler that was intended as a simple tool for working with the database. It was never, never meant to be a full production language. Did you ever wonder why variable names can have a @, @@, #, or ## prefix? It’s so that the scan could be done in one pass, without a complicated symbol table.

That’s why we added the SQL/PSM to the standards, which is equivalent to the Oracle full language product (am I allowed to say it’s a name in a Microsoft forum?). These advanced languages were based on ADA and were intended to be computationally complete.

The old rule of thumb, back in the dark ages, was that a stored procedure in SQL Server should never be more than one page long, including comments. You were supposed to use GOTO-less programming, in spite of the fact that there is a GOTO in the language, part of this decision is a matter of style of the time, but it really does result in better performance.

Remember that, SQL is a database language which was never, never meant to be a computational or display language. Ideally, we want to do or query, get the data in a standard well understood format (let me stress “standard”) , and “throw it over the wall” to another tier in a tiered architecture where computations, statistics, display formatting or whatever the next thing we need is can be done with the data.

I’m gonna poke the bear here…

While interesting, the history lesson here doesn’t really do much to forward the discussion. That is unless you are expecting companies worldwide to halt production and refactor, well, everything? The fact of the matter is, this type of code exists, everywhere, and we have to learn and know how to make it perform in the best possible manner. And the only way to do that is to practice, practice, practice.

Now I’m gonna run and hope the bear is not hungry enough to chase me.

True, a stored procedure of 1000 lines is fundamentally wrong, and often a child of an environment that is imperfect to say the least. But as the doctor only see sick people I have seen worse. (For example take this application; in order to avoid people to modify the same row, the primary key value was stored in a special ‘ locktable’. If you want to update a row, you first have to check in that locktable if the row isn’t being modified. Hey! A do-it-yourself locking mechanism, that makes your product viable for both MSSQL and Oracle! Nice find!). And a 1000 row procedure is just one of these antipatterns in the field: I think we need more bears.