So far in this series, I’ve shown you how to corrupt a clustered index, then how nonclustered indexes can be corrupted independently. If you haven’t read those, you should start there first.

Let’s start again with our 50Ways database, but this time we’re going to be good and make sure that we’ve got checksums enabled, and that we’re in full recovery model:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

CREATE DATABASE [50Ways]; GO ALTER DATABASE [50Ways] SET PAGE_VERIFY CHECKSUM; /* To detect corruption more easily */ GO ALTER DATABASE [50Ways] SET RECOVERY FULL; /* To recover from corruption more eaily */ GO USE [50Ways]; GO CREATE TABLE [dbo].[ToLeaveYourLover]([Way] VARCHAR(50)); GO INSERT INTO [dbo].[ToLeaveYourLover]([Way]) VALUES ('Slip out the back, Jack'), ('Make a new plan, Stan'), ('Hop on the bus, Gus'), ('Drop off the key, Lee') GO SELECT * FROM [50Ways]..[ToLeaveYourLover]; /* Yep, we have data */ GO BACKUP DATABASE [50Ways] TO DISK='50Ways_Full_1.bak'; /* For insurance */ GO BACKUP LOG [50Ways] TO DISK='50Ways_Log_1.bak'; /* Building a log cabin */ GO USE master; GO ALTER DATABASE [50Ways] SET OFFLINE WITH ROLLBACK IMMEDIATE; GO |

Then fire open your trusty hex editor, like xvi32, and open up the MDF file just like we did in Part 1. Change Stan’s name to Flan, save the MDF, close your hex editor, and bring the database back online again:

|

1 2 3 4 5 6 |

USE master; GO ALTER DATABASE [50Ways] SET ONLINE; GO SELECT * FROM [50Ways]..[ToLeaveYourLover]; GO |

Thanks to the magic of checksums, SQL Server knows what you did last summer:

|

1 2 3 4 5 6 7 8 9 10 11 12 |

Msg 824, Level 24, State 2, Line 33 SQL Server detected a logical consistency-based I/O error: incorrect checksum (expected: 0x7f0b1ab2; actual: 0xff0b16b8). It occurred during a read of page (1:256) in database ID 8 at offset 0x00000000200000 in file 'M:\MSSQL\Data\50Ways.mdf'. Additional messages in the SQL Server error log or system event log may provide more detail. This is a severe error condition that threatens database integrity and must be corrected immediately. Complete a full database consistency check (DBCC CHECKDB). This error can be caused by many factors; for more information, see SQL Server Books Online. |

So the table is toast, right?

Try inserting more rows.

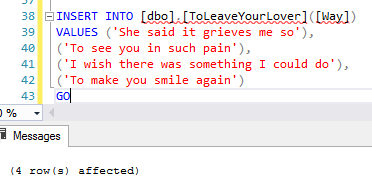

Let’s add another four rows:

|

1 2 3 4 5 6 |

INSERT INTO [dbo].[ToLeaveYourLover]([Way]) VALUES ('She said it grieves me so'), ('To see you in such pain'), ('I wish there was something I could do'), ('To make you smile again') GO |

And if the moon is right, it works just fine.

Adding more data doesn’t necessarily detect corruption. But if you try to select them back out, you’re still going to have a problem.

This is the worst part about database corruption – SQL Server will let your users keep right on databasin’, adding more data into a corrupt database. You have a serious time bomb on your hands here: the longer you let this go on, the more dangerous things get. Watch this.

Take a full and a transaction log backup of your database again:

|

1 2 3 4 |

BACKUP DATABASE [50Ways] TO DISK='50Ways_Full_2.bak'; GO BACKUP LOG [50Ways] TO DISK='50Ways_Log_2.bak'; GO |

And they work fine – no errors. And guess what happens when you try restores? You’ll need to change M:\MSSQL\DATA\ to your own data/log paths here (I try to keep scripts super-generic so they work everywhere, but restore ain’t so flexible):

|

1 2 3 4 5 6 |

USE [master] RESTORE DATABASE [50Ways_Restored] FROM DISK = N'50Ways_Full_2.bak' WITH FILE = 1, MOVE N'50Ways' TO N'M:\MSSQL\DATA\50Ways_Restored.mdf', MOVE N'50Ways_log' TO N'M:\MSSQL\DATA\50Ways_Restored_log.ldf' GO |

No errors there either.

By default, backups and restores don’t detect corruption.

So now think through the timeline of what we just did:

- We created a database

- We put some data in it

- We took a full backup #1

- We took a log backup #1

- We corrupted the data

- We took full backup #2 (which backed up a corrupt data page)

- We took log backup #2

If we need to recover from the corruption that just happened (and assuming we can’t do page-level restores, which is for another blog post), the proper sequence is:

- Take a tail-of-the-log backup, which gets the last of our transactions and seals the database as read-only

- Restore full backup #1 (from before the corruption happened)

- Restore log backup #1

- (Skip full backup #2 altogether, because its data page is corrupt)

- Restore log backup #2

- Restore the tail-of-the-log backup

But this only works if you actually have log backup #1. This is kinda horrifying because I bet you:

- Do CHECKDB once a week, like on Saturdays

- Do full backups every day

- Do transaction log backups multiple times per day, like hourly

- But due to limited drive space, you delete log backups older than 24-48 hours

So take this timeline:

- Saturday – DBCC CHECKDB runs, reports success

- Sunday – full database backup, and logs all day long

- Monday – full database backup, and logs all day long,

and we delete Sunday’s log backups - Tuesday – full database backup, and logs all day long,

and we delete Monday’s log backups - Wednesday – we find database corruption.

Depending on when the corruption happened, you may not be able to restore without experiencing serious data loss.

Thankfully, it’s easy to detect corruption.

Once you’re aware of this problem, you have a few great options.

You could keep your log backup files around longer. In theory, you keep them all the way back to your last clean CHECKDB. In practice, you’ll need to keep them longer than that. If you do CHECKDB every 7 days, and you delete log files older than 7 days, then when CHECKDB fails, a human being probably won’t disable the log-deletion job fast enough to keep the log backups online. In that scenario, 10-14 days of log backups might be a better choice – especially if there’s only one DBA, and everyone else just leaves the alert emails for the DBA to handle when they get back from vacation.

You could run CHECKDB more often, or on a restored copy of production. It kills me when I see people doing index rebuilds every night, but CHECKDB only once a week. Your priorities are backwards. DBAs get fired for lost data, not for slow performance. (Are you kidding me? You actually get extra training and tools budgets when your server is slow. Come to think of it, you should probably go hit the turbo button on the SQL Server just to make it slow down for a while. The turbo button doesn’t exist anymore? Maybe change your power plan down to Fair and Balanced instead of High Performance.)

Configure your alerts and failsafe operator. By default, SQL Server keeps its secrets and doesn’t tell you when it detects a corrupt page. It’s crazy easy to set up alerts – check out our SQL Server Setup Guide in the First Responder Kit.

Use the WITH CHECKSUM command on backups. When specified, this makes SQL Server check the checksum on pages while backing them up. It’s not as good as a full CHECKDB, but in the case of our toy database, it works like a charm:

|

1 2 |

BACKUP DATABASE [50Ways] TO DISK='50Ways_Full_3.bak' WITH CHECKSUM; GO |

You could implement mirroring or Always On Availability Groups. By themselves, these don’t make it easier to detect corruption, but they make it easier to deal with the aftermath. Sure, they have Automatic Page Repair, but they also have something simpler: a separate copy of your database’s data files. Instead of your data files being a single point of failure, now they’ve got redundancy from storage failures (assuming of course that they’re on separate physical storage, not on the same storage device.)

5 Comments. Leave new

Although trace flags have differing levels of acceptance, worth mentioning 3023 to force all backups to do a checksum without needing to change any backup code anywhere.

https://support.microsoft.com/en-us/help/2656988

Great article Brent.

As a consultant, I am always working on DB’s someone else has created. I have been using sp_Blitz for a few years and it is one of the best tools I have found. I also love sp_AskBrent and sp_BlitzIndex, these 3 tolls have saved me a lot of time and headaches. Thanks for all of your hard work

CJP – great, glad I could help.

Definitely a great serie of articles! Thanks a bunch! 🙂

Hi Brent,

After reviewing the section “the proper sequence to recover from the corruption” in the article, my friend asks if I could restore log backup #2 in step 5 without restoring full backup #2 in step 4. Thank you very much!