Oh, so you’re the one

While SQL Server 2016 adoption is still pretty low, and 2017 still quite isn’t out yet, that’s no reason to not invest a few days of dev time into a stored procedure that is only usable by the smallest portion of SQL Server users. Right?

Look, someone around here has to be future-proofing things.

With that in mind

I set out to write a stored procedure that does for Query Store (BOL) what sp_BlitzCache does for the plan cache, but with a new approach. sp_BlitzCache is glorious, don’t get me wrong! You ask it a question by giving it a sort order, and it gives you all the stuff in your plan cache ordered by that metric.

But, the plan cache is a fickle flower. Prone to clearing for various reasons, and often riddled with inconsequential items. I’m not saying Query Store isn’t also riddled with inconsequential items, but we can hopefully just do a bit more to avoid them.

I also didn’t want to reinvent the wheel – after all, there’s a GUI for Query Store. It has built in reports. I don’t wanna rewrite someone else’s query and dump it into a table and blah blah blah. That’s not fun for me, and it’s not helpful to you.

What we look for when we examine slow servers

When we come in to look at a SQL Server that’s in trouble, we wanna know what was going on when things were bad.

When you run sp_BlitzQueryStore, it basically:

- Looks at your workloads over the last 7 days (that’s configurable)

- Finds the highest resource consuming periods of time

- Finds the top 3 queries that used the most of that resource in that time (the top is configurable)

So, if your highest CPU usage was on 2017-05-31 between noon and 3pm, we’ll grab the top three queries that used CPU, then we’ll repeat that for all the other metrics we grab. As of now, that’s: duration, cpu, logical reads, physical reads, writes, memory, and rows returned. By sampling each of those, we can get a pretty well-rounded view of the worst stuff in your Query Store data.

After we have a list of plans to go after, we circle back to grab additional information. Doing this all at once wasn’t very efficient, especially considering the breadth of data available in Query Store, plus collecting query plans and text. Also a departure from sp_BlitzCache, we’re no longer using one impossibly wide table to store everything. Data is reasonably normalized across temp tables that have specific purposes. Weird, huh?

Parameters you pass in

Right now, these are configurable parameters:

- @DatabaseName NVARCHAR(128) — Database you want to look at Query Store for

- @Top INT — How many plans per metric you want to bring back

- @StartDate DATETIME2 — Start range of data to examine (if NULL, will go back seven days

- @EndDate DATETIME2 — End range of data to examine (if NULL, will default to today’s date, though this changes if @StartDate isn’t NULL)

- @MinimumExecutionCount INT — Minimum number of executions a query must have before being analyzed

- @DurationFilter DECIMAL(38,4) — Minimum length in seconds a query has to run for before being analyzed

- @StoredProcName NVARCHAR(128) — If you want to look for a particular stored procedure

- @Failed BIT — If you want to look for only failed queries

- @ExportToExcel BIT — Backwards compatibility, skips the generalized warnings, doesn’t display query plan xml, and cleans/truncates query text

- @HideSummary BIT — Hides the general warnings table

- @SkipXML BIT — Skips XML analysis entirely, just returns unanalyzed plans

- @Debug BIT — Prints out any dynamic SQL used for debugging

Example calls

--Debug

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Debug = 1

--Get the top 1

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @Debug = 1

--Use a StartDate

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @StartDate = '20170527'

--Use an EndDate

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @EndDate = '20170527'

--Use Both

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @StartDate = '20170526', @EndDate = '20170527'

--Set a minimum execution count

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @MinimumExecutionCount = 10

Set a duration minimum

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @DurationFilter = 5

--Look for a stored procedure name (that doesn't exist!)

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @StoredProcName = 'blah'

--Look for a stored procedure name that does (at least On My Computer®)

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @StoredProcName = 'UserReportExtended'

--Look for failed queries

EXEC sp_BlitzQueryStore @DatabaseName = 'StackOverflow', @Top = 1, @Failed = 1

For each of these, I’ve set @Top to 1, but you can change it to whatever. I’d ask you to be reasonable (say, less than 10), unless you’re skipping XML processing; it can be time consuming. Though we do de-duplicate collected plan_ids to reduce overhead, the higher number you use here does tend to increase the chances that we’ll have more distict plans.

What sp_BlitzQueryStore passes out

Maybe that was a bad choice of words. Anyhoo, here are some example of what output looks like, with some explanation.

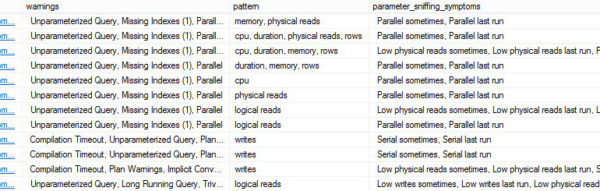

No Blitz proc would be complete without some thoughtful (hey, we try) analysis of collected data. This should look familiar to you if you’ve used sp_BlitzCache:

We have the database examined, query cost, query text, if it was a statement, or if it came from a stored proc or function, the query plan, any warnings we generated, and new here is the pattern column.

We also use similar routines to sp_BlitzCache to examine plan XML for actionable items. This is still limited to data in cached plans, but hey. It’s not like you’re used to better. Tee-hee.

One new feature in sp_BlitzQuery store is a column called ‘parameter_sniffing_symptoms’. Since we have fairly robust historical data about past runs of a query, we go and look for sharp discrepancies across a range of metrics, and given enough variation, we’ll list things out for you. This hits all the metrics we have data for (cpu, duration, et al.)

We’ll give you back a list of queries in a very familiar output table with all the analysis stuff, and important metrics. We also give you a generalized warnings table, just like in sp_BlitzCache, though with some new items in it.

Remember when I said we de-duplicate plan_ids? Before we do that, we set up a csv list of all the patterns a plan popped up in, since it’s possible that a query was running during more than one top metric. Also new is the parameter sniffing symptoms column. This is a csv list of all the large deviations in execution metrics, so you know how different parameters might be causing trouble.

Swipe right for more detailed metrics

We also show you a bunch of collected metrics from the query store views. These should’t need much explanation.

One thing to keep in mind is that data in Query Store is noted in microseconds, 8k pages, and KB. We standardize that to milliseconds and MB across the board. That makes things a bit easier for you human beings to consume.

If you’re on 2017 (or if the wait stats DMV here gets back ported to 2016), we’ll show you the top 3 wait stats for each query if they’re significant. Right now significant means >= 5ms. Maybe that’ll change, or be configurable in the future. Let’s see how this thing takes off first.

If you’re on a supported version (right now, 2017) this is what the top three waits column will look like. You’ll have to forgive this screenshot being boring, I haven’t done a lot on this particular VM lately. I promise, it’ll look snazzier when you do it 🙂

Introduce yourself

That’s a quick introduction post to our newest stored procedure. It’s available in our First Reponder Kit, which is still free. Please give it a shot and send me any feedback. This is v1, so the water is pretty blue for suggestions.

Thanks for reading!

21 Comments. Leave new

Amazing work, Erik. I am in a temporary holding pattern with regard to evaluating and migrating to SQL Server 2016 but this (along with column store index improvements and Partitioning in standard) have me REALLY excited to upgrade.

Thanks, Wes! Hope you eventually find this thing helpful.

Thanks for this, awesome work. I now work entirely in Azure though, so I hacked up your beautifully crafted script to make it work for me (basically just removed/altered version checks, and all references to @DatabaseName – it’ll need to be run from within the database to be examined). 🙂

Oh boy. Yeah, this is why the barrier to entry for a lot of our scripts remains so high for Azure, plus we never know when something will change. If you want to submit your changes over on our GitHub repo, I’d love to see what it would take to make it more adaptable overall.

Thanks!

I’ll flick it through, but basically, you can’t have cross-database gumpf, so need to create the proc on the DB you want to analyse, and therefore lose all the @databasename references (or replace with DB_NAME() where you want to output the name in the message).

To make it *compatible* would take a little more fiddling – but should be doable. Just check whether it’s an Azure DB and if so make the @DatabaseName parameter optional, and alter the various bits of dynamic SQL that reference it to handle not referencing a DB name. Otherwise, it just worked! 😀

Cool, thanks. I’ll take a look when I have a chance.

Testing it on AWS – SQL 2016 Express with 1 CPU and 1 GB RAM. Executing for 1h26mins so far… 😉

I wish you had said “running for 1h26mins” so I could say that’s the only thing around here doing any running.

This is awesome!

no big deal but see line 3630 has an invalid char. (scroll to the end of the script file)

Yeah, it’s a ® sign, and it’s there on purpose.

Enjoy!

I love the rest of the sp_blitz suite and was looking forward to this. Unfortunately, it doesn’t work for my use case. I get an error: “‘TRY_CONVERT’ is not a recognized built-in function name.” A little Googling reveals that the issue is that my database compatibility level is 100 (for Sql Server 2008), though I’m running 2016. If making it compatible isn’t in your scope, you might still want to add that quirk to the version-checking logic. Regardless thanks for your ongoing work!

Oh boy, that’s certainly an odd combo. You could bump your compat level up to 110 (SQL Server 2012), and it will work. I doubt anyone would notice 😉

I’ll see if there’s an easy way to change that in the next couple days when I’m working on the FRK release for this month.

Yep. I’m an accidental DBA who inherited the SQL Server along with the application code. No one left knows what sort of tragedy will occur on other people’s ancient code if we bump it. It’s on our backlog to write some test plans and prepare for that update, but… I’m sure you’ve heard it all before. Obviously I’d love a backwards fix for sp_BlitzQueryStore but I completely understand if that’s not your priority.

Hey Will,

I opened a GitHub issue for this, and created a branch to do some testing.

With my StackOverflow database set to compatibility level 100, the proc runs without error. Not sure where to go from here.

Since

TRY_CONVERTis only used once in the proc, go ahead and change it toCONVERTand see if it runs for you. If it does, I’ll try to think of what else could help.If you have a GitHub account, please comment on the issue I opened.

Thanks!

Hey, just got your latest version off the dev branch, but installing it on a Azure SQL db with the right compatibility worked, but running it gives no results; not even with @Debug = 1.

Is there some other dependancies perhaps?

Has anyone else run it on Azure SQL successfully?

Thanks for the effort and great work, as always.

For support, please use the GitHub repo. Thanks!

I did not want to run this on our production server, so I made a backup from our prod server and restored it to my local instance and ran EXEC sp_BlitzQueryStore @DatabaseName = ‘MyDatabase’.Will this have different results than when run on production (I see master db references in the stored procedure).

Thanks

Hari — check out the readme for where to go for support. We don’t do it in blog comments to keep it centralized.

Thanks!

Erik, did you consider adding a parameter that would allow us to search the cache looking for plans that use specific index? So it would be similar as searching for specific stored proc. Why would that be useful? Let’s say that I used sp_BlitzIndex and identified some indexes that have high write:read ratio and I would like to remove them. But before I do that, I would like to check which queries were using them and maybe tune them upfront.

Piotr – yes, people have suggested things like that, searching for text, or sorting the plan cache by cost. But parsing the entire plan cache xml performs terribly. Searching for a stored proc name is different. You should read the code sometime.

Thanks!