Listen, I can explain. Really.

We had a client with a 5GB database, and they wanted it to be highly available. The data powered their web site, and that site needed to be up and running in short order even if they lost the server – or an entire data center – or a region of servers.

The first challenge: they didn’t want to pay a lot for this muffler database. They didn’t have a full time DBA, and they only had licensing for a small SQL Server Standard Edition.

At first, that sounds like a perfect fit for Azure SQL DB. For a relatively small monthly cost, they could have Microsoft manage their high availability and disaster recovery. Want more replicas in more data centers? Just turn the knob, and your credit card bill gets warmer. RPO/RTO becomes the business question it was always meant to be – if you wanna be more available, you just change your billing level, and there’s no implementation project or staff training.

But there was a catch.

During due diligence, we unearthed another tricky challenge: a lot of the data was regenerated from scratch, every single day, during overnight ETL jobs. This regenerated data was projected to grow between 10x to 1,000x in the next two years.

- 50GB in a single Azure SQL DB isn’t a big deal.

- 50GB of data regenerated every night, however, is.

- And 5TB of that is simply untenable.

See, Azure SQL DB isn’t just priced by storage capacity and availability: it’s also priced by performance capacity. The more data you churn, the more cash you burn.

So we asked a question: which parts of the data really need high availability?

In this case, the data that was constantly churned was only for internal reporting purposes. It didn’t drive the web site. It could have a completely different RPO/RTO goal.

And the mission-critical data that drove the web site? It was actually fairly compact and stable, less than 1GB of data altogether.

The answer was to split the 5GB database into two parts:

- A small core subset of mission-critical data living in Azure SQL DB

- A skyrocketing but lower-value majority of data living on-premises in their already-paid-for SQL Server Standard Edition box, with easy-to-handle HA/DR technologies designed for less ambitious RPO/RTO

Here’s how you can make these same decisions.

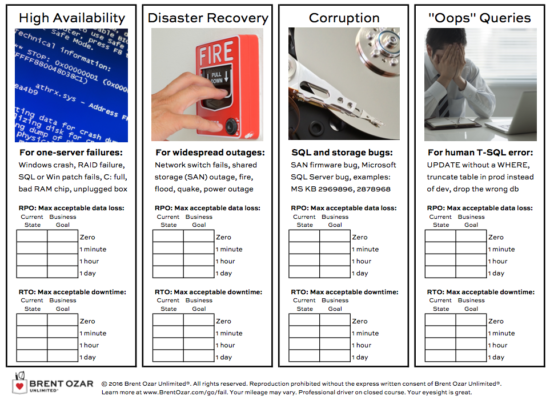

In our First Responder Kit, we’ve got a set of worksheets, scripts, and e-books that I teach you how to use during my Senior DBA Class. The RPO/RTO worksheet helps you and the business users determine your high availability goals:

After filling that out, if you need to buy new hardware (or rent new cloud space), then you use the hardware sizing worksheet:

You gather the business users and IT teams into a conference room, put this up on the projector in Excel, and talk them through each of the questions. You record everyone’s answer, and then come to a consensus.

Then, armed with these answers, you can build a better long term architecture design.

And yeah, sometimes that means splitting a 5GB database into two parts.

9 Comments. Leave new

Hi Brent,

5TB or 5GB? 😉

Thanks

GB, heh.

What would you have done, if no data was ever to leave premises?

So, what if cloud was politically no option?

Peter – I can’t really do justice to architecture questions like that in a blog post comment, sorry. (As you can see, it took me a whole post just to explain the basics of this particular scenario, heh.) It’s a fun thought exercise though!

Cool enough for me, bro. But maybe you can point fingers towards a place I might find what I’m looking for? 😉

Sure! Click on Training at the top of the page. 😉 We discuss this kind of thing in our Senior DBA Class. Come on down!

Seems very reasonable — right now. But will they really regenerate 5 TB every day? At some point they’ll have to look into doing the ETL incrementally, which will also generate much less traffic and resource consumption.

Also, is the regenerated data coming from the stable data? If yes, that means they still need to read everything from the cloud and transfer over WAN to on-prem on a daily basis…

It’s 5GB, Brent typo’ed (as noticed above), cause he’s not used to work with such low volume anymore 😉

Thanks, but I meant the 5 TB it’s expected to reach in the future 🙂