Microsoft just announced a new round of D-grade VMs that have 60% faster CPUs and local SSDs that can go up to 7,000 IOPS in a canned Iometer test. Before jumping to conclusions or, even worse, picking a cloud provider, it’s best to look at these numbers critically.

CPU Speeds

The new CPU is being advertised as 60% faster than the previous generation of processors. Clearly this has got to be some next generation hardware, right? Maybe we’ll get access to the new Xeon v3 – it’s not that outlandish of an idea; Amazon Web Services (AWS) had Xeon v2s in their datacenters before the chips were generally available.

Glenn Berry, a consultant who digs into computers for fun, did some initial testing with these new Azure instance types. In his investigations, he saw 2.2GHz E5-2660 chips. These aren’t even the slower end of the new generation of Intel Xeon v2 chips – they’re the previous generation of CPU… from 2012. Azure trades raw power for power efficiency.

If these not-so-fast CPUs are 60% faster, what are your current Azure VMs and SQL Database instances running on? Anecdotal evidence indicates that the current generation of A and P series VMs are running on older AMD Opteron hardware. Older AWS hardware is in the same boat, but it’s slowly being phased out.

SSD Speeds

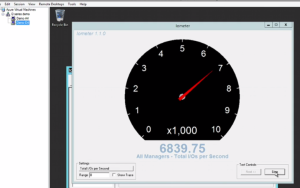

Microsoft are reporting performance of up to 7,000 IOPS per local Azure SSD, but persistent storage is still rotational. During the D Series SSD VMs interview, a screenshot of Iometer at 7,000 IOPS is shown, but no additional information is provided. Iometer tests typically use a 4KB read/write block size, which is a great size for random file access. It’s not awesome for SQL Server, but if we assume the drive is limited purely by bandwidth, we can divide that by 16 to get a representative SQL Server number…

437.5 64KB IOPS.

Or so the Azure Product Manager says in the original interview. I don’t believe what I hear, and you shouldn’t either, so I fired up an Azure D14 VM to see for myself. What I saw was pleasantly surprising:

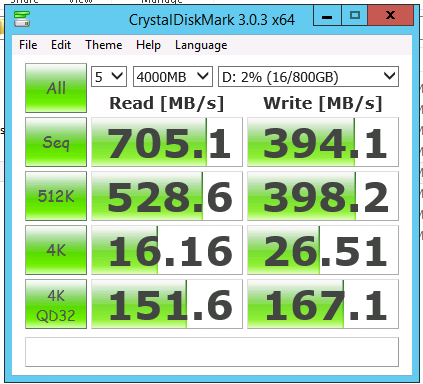

If we dig into the IOPS provided by Crystal Disk Mark, we see a decent looking picture unfold:

    -----------------------------------------------------------------------
    CrystalDiskMark 3.0.3 x64 (C) 2007-2013 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 byte/s [SATA/300 = 300,000,000 byte/s]

    Sequential Read : 705.103 MB/s
    Sequential Write : 394.053 MB/s
    Random Read 512KB : 528.562 MB/s
    Random Write 512KB : 398.193 MB/s
    Random Read 4KB (QD=1) : 16.156 MB/s [ 3944.4 IOPS]
    Random Write 4KB (QD=1) : 26.506 MB/s [ 6471.1 IOPS]
    Random Read 4KB (QD=32) : 151.645 MB/s [ 37022.8 IOPS]
    Random Write 4KB (QD=32) : 167.086 MB/s [ 40792.5 IOPS]

    Test : 4000 MB [D: 2.0% (16.2/800.0 GB)] (x5)
    Date : 2014/09/23 0:24:10
    OS : Windows Server 2012 R2 Datacenter (Full installation) [6.3 Build 9600] (x64)

What’s it really mean? It means that the 7,000 IOPS number reported was probably for 4KB random writes. It’s hardly representative of SQL Server workloads, but we also can see what kind of numbers the drives will pull under significant load.
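To make the arithmetic explicit, here is a minimal Python sketch of the two conversions used above. It assumes the drive is purely bandwidth-bound, which is the same simplification behind dividing by 16, and real devices won't behave that neatly. The function names are mine for illustration; they don't come from Iometer or CrystalDiskMark.

```python
def iops_from_throughput(mb_per_s: float, block_bytes: int) -> float:
    """Convert throughput to IOPS.

    CrystalDiskMark reports 1 MB/s as 1,000,000 bytes/s, as stated in its header.
    """
    return mb_per_s * 1_000_000 / block_bytes


def rescale_iops(iops: float, from_kb: int, to_kb: int) -> float:
    """Estimate IOPS at another block size, ASSUMING constant bandwidth."""
    return iops * from_kb / to_kb


# The advertised 7,000 4KB IOPS expressed as 64KB IOPS.
advertised_64k = rescale_iops(7000, from_kb=4, to_kb=64)

# Cross-check one benchmark row: 26.506 MB/s of 4KB (4,096 byte) random writes.
qd1_write_iops = iops_from_throughput(26.506, block_bytes=4096)

print(f"Advertised, as 64KB IOPS: {advertised_64k}")
print(f"QD=1 random write IOPS:   {qd1_write_iops:.1f}")
```

The second figure lands within rounding of the 6471.1 IOPS CrystalDiskMark printed for that row, which is a handy way to check that you're reading the block size and units correctly.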

Comparing AWS and Azure Performance

AWS offers an instance called the r3.4xlarge. It comes with 16 cores and 122GB of memory. The AWS instance type is about the same as the D14 (16 cores and 112GB of memory). The D14 is $2.611 / hour. The AWS instance is $1.944 / hour.

All prices include Windows licensing.

So far, the Azure D-grade instance costs 70 cents more per hour for 4.8GHz fewer clock cycles and 10GB less memory. Not to mention the computational differences between the current generation of CPU and what Azure is running.
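If you want to check the price math yourself, here's a quick Python sketch using only the hourly prices and memory sizes quoted above. The dictionary layout and variable names are my own, and the prices are the ones from this post, not a live price list.

```python
# Figures quoted in this post (Windows licensing included).
azure_d14 = {"price_per_hour": 2.611, "memory_gb": 112}
aws_r3_4xlarge = {"price_per_hour": 1.944, "memory_gb": 122}

extra_per_hour = azure_d14["price_per_hour"] - aws_r3_4xlarge["price_per_hour"]
premium_pct = (azure_d14["price_per_hour"] / aws_r3_4xlarge["price_per_hour"] - 1) * 100
memory_gap_gb = aws_r3_4xlarge["memory_gb"] - azure_d14["memory_gb"]

print(f"Azure costs ${extra_per_hour:.3f} more per hour ({premium_pct:.1f}% premium)")
print(f"Azure has {memory_gap_gb}GB less memory")
```

The gap works out to just under 67 cents an hour, which I've rounded to 70 cents.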

Surely the SSD must be amazing…

Not so fast. Literally.

Some AWS local SSD benchmarks have reported numbers as high as 20,000 16KB IOPS for random write and 30,000 16KB IOPS for sequential read. Sure, the AWS instance only has a 320GB disk, but it’s capable of performing 5,000 64KB IOPS compared to the 440 IOPS (I rounded up to be generous) that Azure supplies.

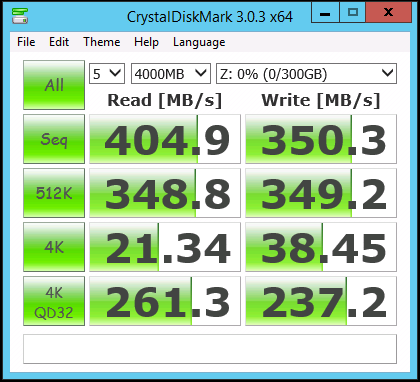

In my testing, the AWS local SSD beat out the Azure SSD on random I/O by a reasonable margin:

How about those IOPS?

    -----------------------------------------------------------------------
    CrystalDiskMark 3.0.3 x64 (C) 2007-2013 hiyohiyo
    Crystal Dew World : http://crystalmark.info/
    -----------------------------------------------------------------------
    * MB/s = 1,000,000 byte/s [SATA/300 = 300,000,000 byte/s]

    Sequential Read : 404.856 MB/s
    Sequential Write : 350.255 MB/s
    Random Read 512KB : 348.770 MB/s
    Random Write 512KB : 349.176 MB/s
    Random Read 4KB (QD=1) : 21.337 MB/s [ 5209.3 IOPS]
    Random Write 4KB (QD=1) : 38.448 MB/s [ 9386.7 IOPS]
    Random Read 4KB (QD=32) : 261.320 MB/s [ 63798.8 IOPS]
    Random Write 4KB (QD=32) : 237.201 MB/s [ 57910.4 IOPS]

    Test : 4000 MB [Z: 0.0% (0.1/300.0 GB)] (x5)
    Date : 2014/09/23 1:05:22
    OS : Windows Server 2012 R2 Server Standard (full installation) [6.3 Build 9600] (x64)

So… First – Azure offers really good local SSD performance if you decide to purchase the entire instance. Using a D14 instance type is a reasonable expectation for customers deploying SQL Server – SQL Server is a power hungry monster and it deserves to be fed.

Despite their truth, the Azure numbers aren’t all they’re cracked up to be. Here’s how it breaks down:

Cost: 34% more expensive

Sequential Reads: 74% faster

Sequential Writes: 12.5% faster

Random Reads: 42% slower/fewer IOPS

Random Writes: 30% slower/fewer IOPS
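The breakdown above can be reproduced from the two CrystalDiskMark runs. This is a small Python sketch with a helper name of my own choosing; the random I/O comparison uses the 4KB QD=32 rows, which appear to be the ones that match the percentages listed.

```python
def pct_diff(azure_value: float, aws_value: float) -> float:
    """Percent by which the Azure figure is above (+) or below (-) the AWS figure."""
    return (azure_value / aws_value - 1) * 100


# (Azure D14, AWS r3.4xlarge) pairs taken from the benchmark output in this post.
results = {
    "Sequential read (MB/s)": (705.103, 404.856),
    "Sequential write (MB/s)": (394.053, 350.255),
    "Random read 4KB QD=32 (IOPS)": (37022.8, 63798.8),
    "Random write 4KB QD=32 (IOPS)": (40792.5, 57910.4),
}

for name, (azure_value, aws_value) in results.items():
    print(f"{name}: {pct_diff(azure_value, aws_value):+.1f}%")
```

Positive numbers mean Azure is ahead, negative numbers mean AWS is.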

Azure has a history of mediocre performance, but it’s well-documented mediocre performance. Azure persistent storage currently maxes out at 500 no-unit-given IOPS per disk (compared to AWS’s 4,000 256KB IOPS for EBS volumes), but these limits are well-documented.

The Bottom Line

Not all clouds are created equal and 60% more doesn’t mean that it’s any better than it was before. It’s up to you, dear reader, to determine what 60% faster means and how that applies to your environment. For companies dipping their toes in the cloud waters, be very wary of the new, improved Azure performance. You may find that you’re deploying far more VMs than you thought, just to handle the same workload.

16 Comments

I assume that they are comparing the new 2.6 GHz cores to the really old and nasty 1.9 GHz CPUs when it says 60% faster.

I very much doubt that a 16 core “D-14” series will be any faster than the existing A9 image. They already have the higher end 2.6 GHz CPU and 1,600 FSB RAM.

One thing that confuses me is price. When I provision a new D-14 image it says “€768.48” per month which is half of the cost per hour quoted. I think the new MS portal now assumes images are turned off 50% of the time when showing prices – which is a bit naughty IMO.

I’m positive that’s what Microsoft is comparing current Azure performance to, especially since they go out and say it. I bring up AWS as a comparison because it’s important to compare across different platforms. For people about to make a choice seeing “faster azure” may lead them down a route that can’t perform. Just because this year’s cargo van is 60% faster than last year’s cargo van, that doesn’t mean a top speed of 96mph is going to win any races.

Which generation of chip is in that A9 image? Is it the Xeon v2 or is it an older v1? 1GHz of clock speed is not the same across all processors. Of course, you already knew that.

I’m not sure why you’re seeing that weird 50% cost. Maybe you get an amazing 50% discount for being Irish? When I did the math on the price, it quoted me the hourly rate x 24 hours x 31 days. Or Microsoft just knows I’m going to make noise and complain anyway, so why offer me a discount?

I’m chatting to the local MS guys here – I guess your prices are the right ones but some of the euro pages on the new portal are showing “too good to be true” prices.

Shame I was soooo excited when I saw the 769 euro per month for a 16 core image ;-(

Actually, all of the D-series virtual machines that I have looked at so far have had the older 32nm Xeon E5-2660 Sandy Bridge-EP processors, not the newer 22nm Xeon E5-2660 v2 Ivy Bridge-EP processors that you linked to (which actually bolsters your point).

It is a little puzzling why Microsoft is using processors that are two years old for this new offering.

The A8 and A9 “Compute Intensive” Azure VMs are using slightly faster Xeon E5-2670 Sandy Bridge-EP processors.

Thanks for catching that! I’ve updated the post.

“we can divide that by 16 to get a representative SQL Server number”

I don’t follow that logic. It is unclear how Azure throttles. If they are in their right mind they don’t throttle purely by bandwidth. If an Azure SSD gives 4000 4k IOPS then it is likely to give by far more than 2000 8K IOPS. A physical SSD would give 3800 or so.

You’ve lost me.

Typically, all other assumptions aside, you can assume that a device capable of moving X bytes per second can always do that. Ignoring other constraints. Testing with SSDs shows this time and time again. It’s also why SSD vendors report 4KB IOPS or, sometimes, 512 byte IOPS. The numbers look amazing when you fudge them.

For a reference, the SSDs in my laptop can get around 100,000 IOPS.

Those 100K IOPS are probably at 4K block size. At 8K block size you don’t get 50K IOPS. You get more like 95K IOPS because at small block sizes throughput is not limited by bandwidth but by block access times.

I understand that you were saying that we get 437.5 64KB IOPS from 7000 4KB IOPS. That does not follow.

I understand now. Yes, reality can get in the way. That’s why we assume a perfectly spherical cow. Thanks for dropping by!

I’ve just logged onto a customer’s sluggish 4-core A3 to find it’s an E5-2673 v3 running at a measly 800MHz (as reported by 7z b). Would this be considered normal?

Patrick – why not spin up another A3 to find out?

Sorry to follow up on an old thread, but I’ve been doing some research lately and this thread popped up. I’m confused about something. Microsoft seem to have (recently) tied IOPS to disk size and it’s frustrating. OK, I come from the old school of DBA thought whereby I could have multiple smaller disks with a high IO each, but Azure seems to have made these two “mutually inclusive”…. I am guessing their train of thought is that if you need 12,500 IOPS per disk “yer gonna need a lot of space”???

Microsoft best practice states that for production VMs you need “a minimum of 2 P30 disks” (1 for log and 1 for data). Does this mean I can head for a larger disk of high IOPS and just chuck everything onto it?

It’s cheaper you know…..

Glenn – great question, but server design is a little beyond the scope of what I can do for free in blog post comments.

I completely understand Brent. I actually think I just needed to vent. 🙂

Hi Glenn

Check out my somewhat old deck here on storage design for sql https://sqlbits.com/Downloads/566/Bob%20Duffy_SQL%20Storage%20Fundamentals%20for%20Azure%20IaaS.pptx

Some takeaways:

– lots of small disks in azure will still give U more bang for buck. Say p20 is sweet spot.

– BUT once you hit VM limit all bets are off and disk doesn’t matter.

– make use of cached and uncached pools if u hit VM limit to squeeze more out.

– don’t worry about IOPS. You’ll hit the throughput limit (MB/sec) first (unless your workload is doing 16k IO size!).

So I’d say it’s probably harder to design good storage in azure VMs than on premise (if u want the racing car).

Thanks Bob, much appreciated, I’ll have a read.