Update on 5/25/2014: At least parts of this improvement have been backported to SQL Server 2012 SP1 CU 10. Read the KB here. After applying CU10 to SQL Server 2012 SP1, the test query shown in this post performed as it did against SQL Server 2014.

I was excited to read Bob Dorr’s recent post about a hidden gem in tempdb performance in SQL Server 2014. I had a copy of SQL Server 2014 Developer Edition handy, as well as a workload that beat up tempdb, so I decided to do a quick performance test. I wanted to find out how much this might help performance when tempdb was already on SSDs.

The tempdb improvement (in a nutshell)

There’s been a myth for a long time that if you populate a temp table or table variable, the data just resides in memory. This hasn’t been true in the past, but in SQL Server 2014 temporary operations are not as “eager” to cause disk IO.

The test setup

I ran a simple workload using HammerDB. My workload “warmed up” for one minute, then ran for three minutes. The workload ran with 1 watcher thread and 6 worker threads. Each worker thread selects data into a temp table as fast as it possibly can, over and over again. The workload isn’t much like a normal production workload: it just beats up tempdb.

I ran this test a few times independently against two VMs on my laptop. Both VMs have 6000MB RAM, 4 vCPUs, Windows Server 2012 R2, and all their storage is on SSD.

- Mister: SQL Server 2012 SP1

- ChairmanMeow: SQL Server 2014 RTM

Both SQL Server instances were configured with ‘Cost Threshold for Parallelism’ set to 50, which effectively makes this workload single threaded. Each instance had 40 equally sized tempdb data files configured. (That’s a lot more than you’d usually have with 4 vCPUs! This is a contrived workload with an especially high amount of tempdb contention.)

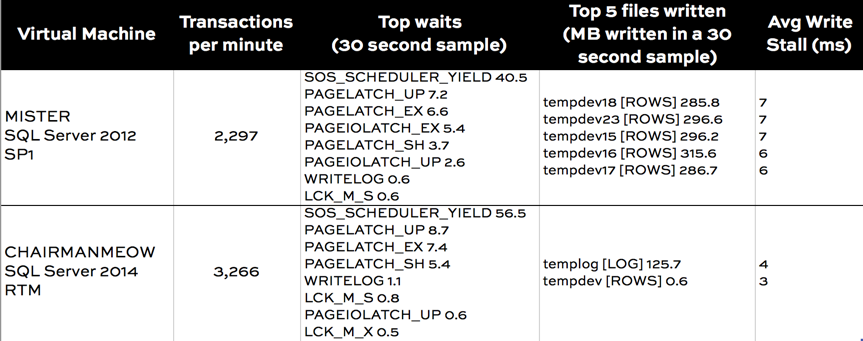

During each test run, I took a 30 second sample of waits and file io stats using sp_BlitzFirst®. (I ran: exec sp_BlitzFirst @ExpertMode=1, @Seconds=30)

The Test Query

The workload runs this test query. The execution plan costs out as 16.71 on both of my instances and the plan is the same shape.

|

1 2 3 4 5 6 |

SELECT TOP(5000) a.name, replicate(a.status,4000) as col2 into #t1 FROM master..spt_values a CROSS JOIN master..spt_values b; GO |

How did the instances do?

I got quite consistent results over multiple tests. Here’s a comparison:

Wait stats comparison

My SQL Server configuration isn’t perfect. Sure enough, there’s still some tempdb contention: I’ve got PAGELATCH waits because my queries are fighting over PFS and GAM pages.

PAGEIOLATCH show up more in the SQL Server 2012 sample. That’s the first tip that disk access is more of an issue.

MB written by file comparison

The real validation on what’s happening in this feature comes in on the two columns on the right. sp_BlitzFirst® peeks at your sys.dm_io_virtual_file_stats DMV, and when you run it in ExpertMode it lets you know the top 5 files you’ve read and the top 5 files you’ve written to.

We can clearly see that in SQL Server 2012, I was doing a lot of write IO. That write IO was going to every single tempdb file evenly (that’s expected if they’re evenly sized), as well as to the tempdb log. My average write latency was 7 ms, which isn’t really bad given what I was doing to my SSDs.

And sure enough, that IO just about disappears in SQL Server 2014! That adds up to a notable improvement in transactions per minute.

You’ll really love this feature if you can’t put SSD underneath all of your tempdb databases. But even for those who’ve already tuned up their storage, this feature should help you out.

19 Comments. Leave new

ChairmanMeow! 🙂 Seriously though, good news on reduction of tempdb i/o.

ChairmanMeow is working out really well as a VM.

I think so, too! This is a really cool little bonus.

Out of curiosity, are there any other implications to using “SELECT INTO #tmpX” vs “CREATE TABLE #tmpX -> INSERT INTO #tmpX” methodologies? Since the latter is not a bulk logged operation, would it be accurate to conclude that it may be more advantageous to starting favoring SELECT INTO #tmpX in 2014? Of course, I’m only speaking generally here.

You’ve psychically anticipated the next blog that’s on my mental list of “things to write”.

SELECT INTO gets some new perks in 2014, too. It can now Parallelize. ZOMG.

In a few very basic tests that I have done, I saw that INSERT INTO #tempX did capture the same benefits in terms of SQL Server choosing not to write to disk.

However, on a 30 million row data set the overall performance of SELECT INTO was almost 3x faster on my 4-core spinning drive machine due to the parallelism of the Table Insert operator. The parallel insert ability is not available for INSERT INTO (yet?).

I don’t believe that parallel insert has been backported. That improvement was implemented separately so I wouldn’t expect it to be backported with this, but that would certainly be nice!

It looks like SQL 2016 CTP2 also extends the same parallel insert capability to INSERT INTO WITH (TABLOCK).

I was able to confirm on my copy of CTP2 after reading about here: http://www.nikoport.com/2015/08/19/columnstore-indexes-part-62-parallel-data-insertion/

A little bit frustrating that SQL 2014 will have the parallel insert only for SELECT INTO, but it’s a great improvement in 2016 to be able to get the benefit with INSERT INTO as well (and even if the table isn’t empty!).

Thanks Kendra!

But one question! How do you support the below statement?

“I’ve got PAGELATCH waits because my queries are fighting over PFS and GAM pages.”

What is the percentage of signal wait time for PAGELATCH wait type? I’m asking this because SOS_SCHEDULER_YIELD wait time is high and that indicates some processing pressure.

Thanks again!

Hey Reza,

I verified that it was GAM/SGAM pages with sp_whoisactive– the screenshot isn’t in this post. (I also used this same workload for doing tests and validating that those waits decline with tempdb file configuration changes, too.)

The thing I was really interested in for this post was just proving that it was NOT doing the physical IO for this type of “busy small query in tempdb” workload, so I just didn’t include it here. I do have an upcoming post on tempdb contention where I have more detail and screenshots, coincidentally.

Kendra

Great post. Now if i could only somehow upgrade from SQL 2008 R2 which is really starting to show its age now.

Supporting PeopleSoft financials where performance is crtical and there are a lot of insert into select from where exists statements over and over. Tempdb seems to take a thrashing not to meniton the I/O on these tables. PeopleSoft uses regular database tables designated as its ‘temporary’ tables.

Glad you enjoyed it!

Ah, yeah, scratch tables in the production database can be really tricky, especially if you need to be in the full recovery model. Happens often with applications that support multiple types of platforms and aren’t SQL Server specific.

Thanks for the great post Kendra. I am eager to see the improvements in performance above this using the memory optimized temp variables in SQL 2014.

We have a giant reporting database that is getting incredible contention on tempdb, we recently upped it from 20 (1 for each CPU dedicated to SQL) to 40 data files and got a 25%+ bump in performance. I always cringe at going against the SQL teams advice, and am hoping I can convince our leadership to move to SQL 2014, in lieu of adding another 20 files and praying.

Hi Aaron,

The advice is to add more data files if you still see contention on PFS/GAM/SGAM pages: so if that what you resolved by adding files, you’re still following the guidelines. This KB has more info: http://support.microsoft.com/kb/2154845

That kind of contention isn’t related to physical IO at all, so the SQL Server 2014 improvement wouldn’t alleviate it. It’s contention on pages in memory.

Thanks. A decent number of the waits you are showing above in SQL 2014 and a significant portion of our waits (48%) are for latches. Don’t you think by replacing temp tables with memory allocated temp variables that we should pretty much eliminate all these waits? Also, for us, during our load testing we are maxing at 2000 IOPS for writes on the SAN drive tempdb sits on, which is why we are hoping the new all-memory temp variables with alleviate this bottleneck by eliminating most of the writes.

Hey Aaron,

I’m not entirely sure what you mean by Memory Allocated Table variables. Are you talking about Hekaton’s “Memory Optimized Table Variables”? If so, how are you going to monitor bottlenecks with those? (There aren’t any DMVs or metadata for you to use with them at this point, unfortunately.)

In the test workload above I was able to eliminate the latch waits by reconfiguring my tempdb data files. That’s just the topic of a different post– this was just showing the physical IO reduction in SQL Server 2014.

I’m a fan of 2014 as much as anyone else, but it sounds to me like you might be able to solve a lot of your problems with your current system. And that’s OK, too.

Kendra

Hi Kendra. Just an update for anyone reading this. If you want this in SQL2012 SP2 you’ll need Cumulative Update 1 for SQL Server 2012 SP2 (http://support.microsoft.com/kb/2976982). SP2 only contains SP1 Cumulative Updates one to nine.

Kendra,

I set the SET STATISTICS IO ON and run my query which has some order by and group by statement. I don’t see any physical IO, all logical. However, when I run the following query, I see the activities keep going up. Seems like the order by is running in tempdb. Can you explain why I cannot see in STATISTICS IO?

SELECT num_of_writes, num_of_bytes_written FROM

sys.dm_io_virtual_file_stats(DB_ID(‘tempdb’), 1)

GO

Thanks,

Chung.

This is awesome.

Hello,

thanx for the great post. I also would like to change some of my stored procs to use memory optimized tables.

Before i do this, could you help me to calrify some things?

#) parallel execution.

Somewhere i read, the tempdb doesn’t support it. I mean if i look at execution plan (using tempdb), there is no parallel exec for hashjoins and other operations.

#) memory underrun.

With memory optimized tables could it be possible, that i get an error, if the server is less of RAM?

In this thread (https://www.brentozar.com/archive/2014/06/temp-tables-table-variables-memory-optimized-table-variables/) i can read about this.

#) performance boost.

Memory optimized tables do the boost, or should i consider something?

This thread describes, that tempdb (starting with SQL 2014) can also reduce disk IO.