Business is booming; the sales team is bringing in more business and the software developers are successfully scaling out at the web server tier. There are signs of pressure on the database tier and you realize that it’s time to scale out. You’re using SQL Server 2012, you need to improve performance, and the first thought that comes to mind is the new hotness: SQL Server AlwaysOn Availability Groups.

Stop.

Get Your Bearings

Before making any infrastructure decisions you need to stop and consider your goals. Ask yourself: What pain am I trying to solve?

If you’re trying to scale out reads without changing much code, AlwaysOn Availability Groups are probably the right idea. With an AlwaysOn Availability Group we can scale out to a total of 5 servers to scale out reads across the board. This is fantastic for scaling out – we change a connection string or change a DNS CNAME and point connection strings at the Availability Group.

The hope and dream is that we can wildly scale with SQL Server AlwaysOn Availability Groups. Reality is different.

The Average of its Parts

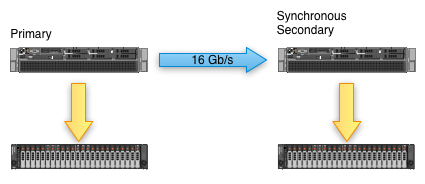

Think about this – in order for an AlwaysOn Availability Group to work for scale out reads, we have to get data from the write server to the replicas. The bottleneck isn’t how fast we can read; the bottleneck is how fast we can write.

Of course, you’re only going to use those secondaries for the same databases as the primaries, right? Nobody would ever want to add another database to one of those secondaries, right? Keeping in mind that we can only have two synchronous secondaries, how much faster can we make our SQL Server for synchronous data?

We can increase read performance, but write performance isn’t going to move.

The Average of its Parts… Over Time

The game changes when we bring asynchronous secondaries into the mix. With synchronous secondaries, all servers in the mix need to have the same write characteristics – if the primary has eight SSDs, the secondaries need to have eight SSDs (or at least the throughput of eight SSDs). When we’re using asynchronous secondaries, the secondaries have to be able to keep up with the primary’s workload on average.

To figure out what kind of hardware we need for the asynchronous secondaries, we need an SLA. Think about it like this: in order to make a hardware decision, we need to know how long our asynchronous secondaries can take to catch up. If we don’t know how far the secondaries can lag behind the primary, then we can’t accurately size the hardware. Conversely, once we have an SLA, we have to size all of the equipment to make sure that the async secondaries can never fall further behind than our SLA. Of course, that SLA is also going to have to include index rebuilds, bulk loads, and other activity that abuses the transaction log.

The Average of its Parts… with a Perfect Network

Assuming a perfect network, this all holds true. Once network congestion comes into play, performance guarantees go out the window.

During the design stage of building out an AlwaysOn Availability Group it’s critical that the network is taken into account as a critical component of the infrastructure. The network must be able to handle the load of multiple secondary nodes – network performance may cause more problems than poor disk performance.

Consider Your Environment in Aggregate

Before planning a SQL Server AlwaysOn Availability Group deployment, consider your environment as a whole. Make sure that you have the bandwidth available to stream regular operations between the primary and replicas as well as to stream under peak load. Remember – adding four additional servers may put considerable load on your network backplane. Also consider that these won’t be physically cheap servers; if you need performance from the primary server, the synchronous and asynchronous secondaries require enough power to meet your performance SLAs. This isn’t an activity for the faint of heart.

If you have an existing application and you want to scale with money, you may be able to get away with an AlwaysOn Availability Group deployment. You’ll be buying multiple servers, multiple SQL Server licenses, and a lot of high end switching equipment, but you’ll only have to change a few connection strings in your application.

If you’re considering scaling out and you’re willing to make code changes, consider Windows Azure SQL Database. In many cases, you’ll be able to scale out using database federations. Through the changes you’re likely to lose flexibility in how you tune T-SQL, perform database maintenance, and work within your schema. The end flexibility may be worth it, but there may be significant code changes to go along with the database changes.

If you’re considering scaling out and you’re willing to consider many options, take a step back and consider your application requirements. Think about the database features your application is using, how those features fit into different databases, and how those features can be spread across different services. There are a lot of distributed databases out there that may meet your requirements and provide better scale out functionality than Windows Azure SQL Database or AlwaysOn Availability Groups.

Learn more in our AlwaysOn Availability Groups resource page.

11 Comments. Leave new

Microsoft do seem to have missed a trick with the availability groups as well. The readable secondaries aren’t load balanced through the Listener so if anyone wanted to farm out reads to a number of servers (such as for negating the complexities of replication) the Listener doesn’t help. It looks like the option here is to use a load balancer across the readable secondaries instead which means bypassing the Listener for reads. This negates some of the benefits of setting the application intent.

Ryan – yes, but even the load balancing by itself isn’t enough in most scenarios. Most of my clients using AGs are doing it across multiple data centers. Say your production DC is in Chicago, and your DR DC is in Atlanta. You may have reporting needs in both locations, so you set up at least one secondary in each DC as a readable replica. If you’ve got a reporting web server in Atlanta, you don’t want it connecting to Chicago to run a query. Even simple load balancing in SQL Server wouldn’t be enough – you’d want geographic proximity awareness.

No matter how you slice it, you’re going to want to use a load balancer – but odds are, if you’re big enough to use read-only routing, you’re probably big enough to already have load balancers in the shop. No sense in building that kind of logic into a database server. Use the load balancer for what it’s good at, and use the database server for what it’s good at.

How does the number of DBs in the AG affect performance?

If, for example, async readable secondaries are required for a system that’s unfortunately spread across 20 DBs on one instance, how would that affect the performance of the primary?

Alex – the more databases you have, and the more replicas you have, the more threads become needed for synchronization. I’ve got a client running about a hundred databases per AG to a few replicas, and we ran into some worker thread issues there, but after increasing the number of max worker threads, we were fine. Of course, the amount of transactions, size of logged data, bandwidth, etc are all also concerns.

That’s a great tip about max worker threads! I’ll make sure to monitor it.

It’s also good to hear about the case of 100 DBs.

Thanks!

I have been working with SQL Server since quite some time and I am ashamed to say that I have been ‘blissfully’ ignorant about Always on availability group. Some really nice learning for me.

Hello,

not sure if this is the right article for that, but I have the following question:

I have found a great article about AlwaysOn Performance in synchronous mode:

https://blogs.msdn.microsoft.com/sambetts/2014/08/21/alwayson-asynchronous-vs-synchronous-commit-performance-for-sharepoint/

The short version of this artice is: updates are about x2 slower than standalone for a x2 node AlwaysOn cluster; reading data is about the same performance (which would make sense).

As we currently create an AlwaysOn Cluster SQL 2014 with one primary synchronous replica if have also done some performance tests. For this I have used a scripts which inserts 20000 rows. Please find the script at the end.

My results: (Script see below)

Single Database (standalone, not in Availabilty Group): 9seconds

Synchronized Database (AlwaysOn, synchronous mode): 19seconds

It would be very interesting for me if you get similar results and what you think about this: Is this high latency really a normal behavior, means by design?

CREATE TABLE dbo.TestTableSize

(

MyKeyField VARCHAR(10) NOT NULL,

MyDate1 DATETIME NOT NULL,

MyDate2 DATETIME NOT NULL,

MyDate3 DATETIME NOT NULL,

MyDate4 DATETIME NOT NULL,

MyDate5 DATETIME NOT NULL

)

DECLARE @RowCount INT

DECLARE @RowString VARCHAR(10)

DECLARE @Random INT

DECLARE @Upper INT

DECLARE @Lower INT

DECLARE @InsertDate DATETIME

SET @Lower = -730

SET @Upper = -1

SET @RowCount = 0

WHILE @RowCount < 20000

BEGIN

SET @RowString = CAST(@RowCount AS VARCHAR(10))

SELECT @Random = ROUND(((@Upper – @Lower -1) * RAND() + @Lower), 0)

SET @InsertDate = DATEADD(dd, @Random, GETDATE())

INSERT INTO TestTableSize

(MyKeyField

,MyDate1

,MyDate2

,MyDate3

,MyDate4

,MyDate5)

VALUES

(REPLICATE('0', 10 – DATALENGTH(@RowString)) + @RowString

, @InsertDate

,DATEADD(dd, 1, @InsertDate)

,DATEADD(dd, 2, @InsertDate)

,DATEADD(dd, 3, @InsertDate)

,DATEADD(dd, 4, @InsertDate))

SET @RowCount = @RowCount + 1

END

No, for random questions, go to a questions site like http://dba.stackexchange.com.

Hi Jeremiah/Brent – great post, thanks! Definitely things to consider here… we are about to implement an asynch AG for our data warehousing system. 1 GB dedicated link to DR. About 46 databases (not sure whether it would make sense to divide them up into a few AGs or just the one – any impact on performance at all?). All databases are in simple mode so we don’t do any t-log backups so don’t know what the log traffic will look like. How do i go about gathering stats to ensure we aren’t going into this blind? I’ve never looked into network performance monitoring so do you have suggestions for exactly what stats i should be looking at here? Perf counters etc? Any tips would be welcome…

Doodling DBA – that’s kinda beyond the scope of this blog post, so instead, for questions, head on over to a Q&A site like https://DBA.StackExchange.com. Thanks!

Noted – and done!