Do you want to make your database server faster?

If you answered no, you can go back to manually checking that all of last night’s backups succeeded.

If you answered yes, think about all the ways you can do this. From proper database design to the right hardware for your workload to proper indexes, you have many options. One step is to make sure that when your database needs to grow – whether a data file needs to expand, more files are added, or the database is restored – the growth happens quickly.

This can be accomplished by adjusting a security policy at the Windows Server level – Perform volume maintenance tasks. Giving your SQL Server service account rights to this policy allows it to take advantage of instant file initialization (IFI).

Normally, when you need to grow a file, the space to be used will be overwritten with zeros. Depending on how large your file needs to be, this can take some time. With rights to use IFI, SQL Server skips that step – the space is claimed on disk, and the stuff on disk is overwritten as needed.

It makes database growth faster.

Prove it

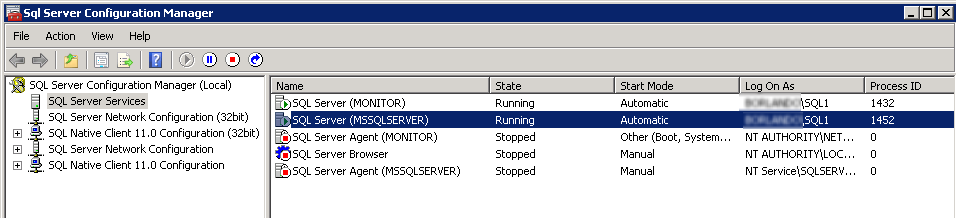

I have a SQL Server instance that is using SQL1 as the service account. This account is not a local administrator, nor is it a domain administrator. (Safety first!)

I open secpol.msc, go to my Local Policies and check User Rights Assignment. Only Administrators have permission to Perform volume maintenance tasks.

Now I’m going to create a database. How long does it take to create a file?

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SET STATISTICS TIME ON; CREATE DATABASE IFIOffTest ON ( NAME = IFIOffTest_dat, FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\IFIOffTest_dat.mdf', SIZE = 1000, MAXSIZE = 2000, FILEGROWTH = 100 ) LOG ON ( NAME = IFIOffTest_log, FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\IFIOffTest_log.ldf', SIZE = 100MB, MAXSIZE = 300MB, FILEGROWTH = 20MB ) ; GO |

Statistics Time tells me:

SQL Server Execution Times:

CPU time = 15 ms, elapsed time = 4665 ms.

Now I go to Local Security Policy and add SQL1 to Perform volume maintenance tasks, and restart the SQL Server service.

I create a second database.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 |

SET STATISTICS TIME ON; CREATE DATABASE IFIOnTest ON ( NAME = IFIOnTest_dat, FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\IFIOnTest_dat.mdf', SIZE = 1000, MAXSIZE = 2000, FILEGROWTH = 100 ) LOG ON ( NAME = IFIOnTest_log, FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL11.MSSQLSERVER\MSSQL\DATA\IFIOnTest_log.ldf', SIZE = 100MB, MAXSIZE = 300MB, FILEGROWTH = 20MB ) ; GO |

What does Statistics Time give me here?

SQL Server Execution Times:

CPU time = 15 ms, elapsed time = 659 ms.

I created two databases on the same server, the same hard drive, with the same file sizes. With IFI turned on, the database was created in 14% of the time. And that’s just with a 1,000 MB file! Imagine the time savings as your database scales up.

Like Growth Hormones, Without the Facial Hair

This is one of the fundamental steps I always have on a SQL Server database server setup checklist. If you’re not using this option yet, add it to yours!

25 Comments. Leave new

This is all good, but there is a bit of a security problem with the IFI. Since you are using IFI, and you expand a file, this means that you are not zero-ing out the newly acquired space. This means that the data which was written on that space previously is still available. This is how the NTFS works.

So, if you allocate extra space to a database file without zero-ing it out, then anyone who has permissions to the database file can actually read the non-zeroed out pages and see what they contained before they became part of the database file.

This is not too big of a deal for many environments, but for companies which have high priority for security IFI is a concern.

Feodor, you’re correct – there is a slight security risk when IFI is enabled. It’s up to the company to determine if the benefits outweigh the risks.

Only the SQL Server service itself should have permissions on the database file, so a curious or malicious human would have trouble getting to read the file. It’s likely that SQL would need to be shut down, and then the intruder could use a hex editor to read the file, but only if they got permissions somehow.

Also, it’s unlikely that someone was creating sensitive Word documents on the drive that SQL will be using. There would be a procedure in place to format the drive before putting it in SQL Server’s pool of drives,

Nevertheless, you are correct to weigh the security concerns against using IFI.

Mark – actually, it’s much easier than you think. Take the following scenario:

Payroll database is created in production

For some reason, the database file is moved (like restored to another drive, or another copy is restored)

An empty, non-confidential database is created in the same disk space

The non-confidential database is detached and given to someone

That backup file now contains payroll data, because it’s got the formerly “empty” pages from payroll.

Well, it’s not the backup file that contains payroll data, because the backup doesn’t contain an image of pages not in use, but the now detached database that does.

The lucky recipient of this database file would still need to use a hex editor, and be able to decode the page and record structure of the payroll pages to see salaries, but I take your point.

I think what Feodor had in mind was a confidential text file or CSV [or Word document?] that had been deleted in the OS, and then had a database file created on top of it with IFI turned on.

I have my own opinions here, but how would you say this affects the argument that you should never turn on autogrowth (only for data files since IFI doesn’t affect log growth) because it will slow down database responses during the auto growth?

I consider it best practice to turn on auto growth for database data files. If a large amount of data is inserted or updated and my database needs to grow, I want it to. I don’t want the transaction to fail, especially if there is space on disk for the file to grow. Even with IFI turned on, there is still a small performance penalty for the file growth, but as you can see in the above example, it’s much less than if IFI was not turned on.

I tried this on our test SQL 2012 server which is running Windows 2012 Standard. I didn’t get the improvement I expected. When only Administrators had the authority to perform volume maintenance task, I ran a script very similar to yours (same size data and log files) on our virtual server with a drive on our SAN and got:

CPU time = 62 ms, elapsed time = 14693 ms.

And then after I gave NT Service\MSSQLServer (the account under which the SQL Server service runs) the authority to perform volume maintenance tasks:

CPU time = 62 ms, elapsed time = 13077 ms.

Which is only about a 10% improvement, not what I expected.

Did you restart the sql service after giving permissions??

Thanks, the reboot did the trick. Before the reboot I got CPU of 62 ms and elapsed times of 14693 and 13007 ms. After the reboot I got CPU of 0 ms and elapsed time of 2544 ms, which is an elapsed time of less than 1/10. Thanks Jes!

You have to restart the SQL Server service for the changes to take effect.

Does the improvement apply when you have something like a Netapp filer for your data?

Randy – yep, it does! You can run your own tests to verify that, too.

“Like Growth Hormones, Without the Facial Hair” LOL…

I like my beard though!

How does virtualization affect this, if at all. I’d love to test it myself but I don’t have the opportunity to compare virtualized vs. metal.

This is at the disk level, so whether your SQL Server is physical or virtual, you’ll get the same benefit!

I am beginner in SQL, really very very awesome articles, i read today.

I’ve been doing a series of tests on instant file initialisation but focused on database recovery rather than day to day performance. I found that recovery completes in approaching half the time with it enabled. That alone should put it on the must do list for most outfits that do not operate in an extreme security environment

http://rule30.wordpress.com/2014/04/18/how-to-halve-your-database-recovery-time-in-60-seconds/

Cheers!

Thanks for this post, has been of great help.

However, I just noticed that the reported CPU time was fairly high (830 ms) when running the query. This is on SQL Server 2014 in a test environement with no load atm.

I distinctly remember the CPU time being way lower when I’ve previously run this – like single-digit low.

Any idea what could be causing this? How would I go about troubleshooting?

Thanks!

Peter – I’d probably double-check to make sure I actually had IFI on, try in the most current cumulative update of 2014, and try on a different 2014 box. Hope that helps!

Wow – that was fast!

I actually ran this query back when I first “enabled” IFI (which was a couple of weeks ago) and confirmed that IFI was indeed on – the execution times dropped significantly.

I re-ran the query today for some reason (let’s call it paranoia) and was a little dumbfounded by the high CPU times, because, as I mentioned, I remember them being way lower (both before and after enabling IFI), similiar to the ones in this post.

The SQL Server is up-to-date. It’s running on a Windows Server 2012 R2 VM, which is hosted on VMware ESXi 5.5.0, with 16 vCPUs.

Could virtualization play any part in this?

Thanks!

Peter – unfortunately, we’ve hit the end of the road where I can do personalized performance troubleshooting in a blog post comment. As I recommended earlier, I’d get up to date (your CU# isn’t mentioned in the comments) and try it on another SQL Server. Hope that helps!

I absolutely understand.

FWIW, I just restarted the server (the VM not just SQL Server) and I’m down to 10-20 ms CPU time again.

Glad it’s “back to normal” but this kind of leaves a bad taste in my mouth.

Oh and I’m on CU6.

What are your thoughts about adding the SQL Agent Service user to the “Perform Volume Maintenance Tasks” group? Should this help improve backup speeds from SQL Agent jobs for example?

Is this more of a security risk?

Colin – this sounds like a great thing for someone getting started with SQL Server to test out and blog about.

Thanks!