Imagine you work for a zoo, and you need to ship an animal to another zoo. If you are told one will be a mouse and one will be an elephant, you will need to handle them differently. The Detroit Zoo used a moving van to move two elephants, while mice can be put in a box and driven or flown.

Something Smells Funny

And it’s not the elephant. SQL Server uses a process called parameter sniffing when it executes stored procedures that have – you guessed it – parameters. When the procedure is compiled or recompiled, the value passed into the parameter is evaluated and used to create an execution plan. That value is then stored with the execution plan in the plan cache. On subsequent executions, that same value – and same plan – is used.

This is a normal, expected behavior in SQL Server. Because compiling queries is expensive, you want plans stored in the cache. You want SQL Server to re-use them as much as possible.

But what happens when the values in a table you’re querying aren’t evenly distributed? What if one value would return 10 rows and another value would return 10,000 rows, or 10 million rows? I call this the elephant and the mouse problem. You would handle one animal differently than the other; SQL Server might create different plans for the queries. But it doesn’t, because you’re using parameters.

What will happen is that the first time the procedure is run and the plan is compiled, whatever value is passed in is stored with the plan. Every time it’s executed, until it’s recompiled, the same value and plan will be used – regardless of whether it is the fastest or best plan for that value. If this is happening to you, and causing performance problems, there are ways to deal with it.

Parameter Sniffing in Action

I’m going to run a few queries to show you this behavior. I’ll be using AdventureWorks2012 on a SQL Server 2012 instance.

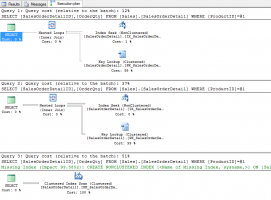

I’m going to query the Sales.SalesOrderDetail table. I want to know the order numbers and quantity ordered for specific products. My first set of queries will use literal values in the WHERE clause. When using literal values, SQL Server will compile each separately, and store a separate execution plan for each.

|

1 2 3 4 5 6 7 8 9 10 11 |

SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = 897; SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = 945; SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = 870; |

The same query was run three times. The queries returned 2, 257, and 4,688 rows, respectively. Two different execution plans have been created, even though the query remained the same!

At some point, the optimizer decided it was faster to do a clustered index scan, instead of a nonclustered index seek and a key lookup.

How does this behave when parameterized? I create a stored procedure to test this.

|

1 2 3 4 5 6 7 |

CREATE PROCEDURE Get_OrderID_OrderQty @ProductID INT AS SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = @ProductID; |

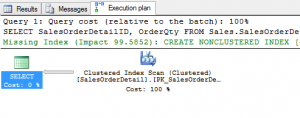

I’m going to execute this stored procedure for the first time with the “elephant” – product ID 870, which returns 4,688 rows.

|

1 |

EXEC Get_OrderID_OrderQty @ProductID=870 |

The data is retrieved through a clustered index scan once again.

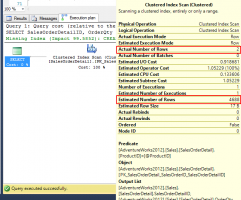

Now, I’ll execute this stored procedure with the “mouse” – product ID 897, which returns 2 rows.

|

1 |

EXEC Get_OrderID_OrderQty @ProductID=897 |

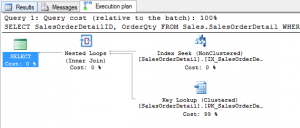

This time, instead of using a nonclustered index seek and a key lookup, the values are retrieved with a clustered index scan. Also note that the estimated number of rows and actual number of rows are very different!

What happened? This is parameter sniffing in action. One value is stored in the execution plan, and that is used to create the plan, regardless of what value is passed in. I can verify this by right-clicking the execution plan and selecting “Show Execution Plan XML”. In the XML, I search for “ParameterCompiledValue”. I find the following line.

![]()

The compiled value is 870. Until the stored procedure is recompiled, this is the value that will be used.

Is This Bad?

The real question is, “Is this bad?” It isn’t – until it is. Depending on the query and the data, each execution of this query may return the results in an acceptable amount of time. It is only when query performance reaches an unacceptable threshold that you may need to do something to prevent it from happening.

When Parameter Sniffing Stinks

When parameter sniffing is negatively affecting a stored procedure, what can you do to fix it?

The first option is to do nothing. You can explain the behavior of SQL Server to your end users. They might even listen! But they won’t be happy. They need their data, and they need it faster.

Other options you have are to recompile the stored procedure each time, use a query hint to produce a good enough plan, or do some creative coding. None of these are perfect options – they all have drawbacks. Understand the benefits and drawbacks of each option. Test the variations carefully in a development environment. The last thing you want to do is make the problem worse!

Recompiling

You can force SQL Server to recompile the stored procedure each time it is run. The benefit here is that the best query plan will be created each time it is run. However, recompiling is a CPU-intensive operation. This may not be an ideal solution for stored procedures that are run frequently, or on a server that is constrained by CPU resources already. Another thing to remember is that the plans won’t be stored in the cache, which makes them harder to find if they are problematic.

To show this, I’m going to alter my stored procedure to include the WITH RECOMPILE statement.

|

1 2 3 4 5 6 7 8 |

ALTER PROCEDURE Get_OrderID_OrderQty @ProductID INT WITH RECOMPILE AS SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = @ProductID; |

What plan will executing this with the “elephant” – product ID 870 – produce?

|

1 |

EXEC Get_OrderID_OrderQty @ProductID=870 |

A clustered index scan has been performed.

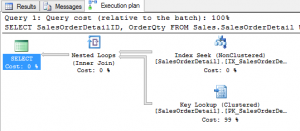

What plan will executing this with the “mouse” – product ID 897 – produce?

|

1 |

exec Get_OrderID_OrderQty @ProductID=897 |

The nonclustered index seek and key lookup are being performed, as expected. This is happening because WITH RECOMPILE tells SQL Server, “Don’t store a plan in cache for me. I’m smart enough to figure this out on my own each time.” Remember, the cost of this is increased CPU usage each time the stored procedure is run.

Query Hinting

Another option is to use the OPTIMIZE FOR query hint. This tells SQL Server to use a specified value when compiling the plan. If, through testing, you can find a value that produces a “good enough” plan each time, and the performance is acceptable for both mice and elephants, this is a good option for you.

However, understand that you are bossing the query optimizer around. You are telling it what you think is best. The biggest drawback with OPTIMIZE FOR is on tables where the distribution of data changes. The faster it changes, the more out of date this hint could become. What if the value you provide is not optimal in a month, or a year? You need to have a method in place to regularly review and revise this.

I know that using product ID 945 produces a “good enough” plan for this query, so I alter the procedure to include the OPTIMIZE FOR query hint.

|

1 2 3 4 5 6 7 8 |

ALTER PROCEDURE Get_OrderID_OrderQty @ProductID INT AS SELECT SalesOrderDetailID, OrderQty FROM Sales.SalesOrderDetail WHERE ProductID = @ProductID OPTION (OPTIMIZE FOR (@ProductID=945)); |

What plan will the “elephant” – product ID 870 – produce?

|

1 |

EXEC Get_OrderID_OrderQty @ProductID=870 |

A nonclustered index seek and key lookup has been performed. Viewing the XML shows us that the compiled value is 945.

The “mouse” – product ID 897 – has the same execution plan.

|

1 |

EXEC Get_OrderID_OrderQty @ProductID=897 |

Be Creative

Another option may be to create separate stored procedures for each value, or at least for those values you know will produce a specific execution plan. I’m not going to show this. Why? Because it’s ugly, it’s cumbersome, and I don’t recommend it. Who wants to maintain multiple stored procedures, when you can maintain one? What do you do when the distribution of data changes? How do you make the logic clear and easily understood?

When Parameter Sniffing Stinks

Understand that parameter sniffing is not a bad thing – it is an integral part of SQL Server. The data in your tables and your queries can lead to parameter sniffing not making sense, however. Understanding how to identify if it is a problem and knowing various ways to fix it when it is a problem are valuable skills to have. When parameter sniffing stinks, test methods to correct it and determine the best way to freshen the air in the server.

Watch Brent Explain It

From the session at SQLDay Poland:

Learn More in Our Mastering Query Tuning Class

We explain how execution plans are built, cached, rebuilt, and how to fix ’em when they go wrong.

90 Comments. Leave new

What is also useful at times is to add the option recompile at the statement level.

The added benefit I believe is that the plan is cached to the point it encounters such a statement and all _current_ values of the arguments _and_ local variables are taken into account.

Example:

SELECT …

Table1 Join Table2 ON

WHERE @Midnight <= col1

OPTION (recompile)

You’re correct – you can recompile at the procedure or statement level. When the WITH RECOMPILE statement is added at the stored procedure level, none of the statements in the stored procedure have plans cached. When OPTION (RECOMPILE) is added to an individual statement in the stored procedure, that particular plan will not be cached – but others in the procedure are not affected.

I ran into an issue like this a few days ago. One stored procedure was looping through a bunch of values and calling a second SP with those values as a parameter. This second stored procedure was taking about a minute to run. Examining the execution plan I could see that it was using the compiled parameter value to estimate the plan, rather than the current value. My solution was to assign the parameter value to a local variable, and change the queries in the SP to use the local variable rather than the parameter. Execution time came down to about 3 seconds.

Now that’s a terrific tip. Thank you.

I usually avoid it by loading a local variable with the parameter value at the start of the sproc. Is that a bad idea?

Nope – local variables are another way to manage it!

Thanks for the wonderful article. I am somewhat confused with the local variable discussion and would greatly appreciate your insights on this.

So, If you set the local variables and pass these as input to the query, SQL can only generate a ‘generic’ plan as per the statistics.

So, let’s say there is a ‘Names’ table and ‘id’ is the PK and ‘last name’ has Non clustered index. Assume there are total of 100000 rows out of which 90000

are ‘Smith’ and remaining are unique(let, ‘Santa’ be one of them). Now, if we run the procedure using the local variables method, it would use only

generic plan, i.e “clustered index scan” even while looking for “Santa”(Ideally this should be a Non clustered Seek).So, I am wondering, this is still in a way, parameter sniffing problem (Using the same plan for everything). Please clarify if I have misunderstood anything. Thank You.

I think you got it. The generic plan is better but still suboptimal. Look at my previous comment for option recompile at the statement level which does take into account the current value of a local variable. It was introduced with sql2005.

And I have found often faster than a recomple.

Old post I know.

This has been very useful, thanks for writing this. I think we have a case of parameter sniffing but with a slight twist due to some “interesting” coding. In this case we pass in three parameters, but one can be reassigned. To explain more clearly:

MyProc @VarA INT, @VarB INT, @SearchKey INT

….

IF @VarA = 0 SET @VarA = VarB

…

SELECT * FROM MyTable

WHERE Key1 = @VarA

AND Key2 = @SearchKey

In our database we dont have any data in MyTable where Key1 = 0, so I think what happens is that if the procedure is first called and compiled with a @VarA = 0 then it generates a plan using 0 for Key1, whereas infact the value to be used is really in @VarB. I then think that due to parameter sniffing it keeps the plan for subsequent calls.

I think this is what could be happening, but would welcome any thoughts on this. The assumption is that the query is compiled using the parameter values and doesn’t take into account the reassignment.

My fix for this is going to be to define a local variable and assign the relevant parameter to that and then use the local variable in the selection.

Thanks

Ian – well, once you get to the point where there’s code samples involved, you’re best off posting that at a dedicated question and answer site like http://DBA.stackexchange.com where lots of people can jump in to help refine your question and get you great answers fast.

Thanks for the quick reply, I’ll try there. I’ve run a basic test and the fix does appear to work, but I’ll see if anyone can shed some light on what the optimiser is doing.

Thanks for writing this, Jes. I think it’s the best explanation this DBA has seen yet on the topic and gave me better clarity as to how to find the “source of the smell”. 🙂 Thanks again!

Thanks Don!

Jes,

Great article! Thanks!! Never saw such a good explanation about plan parameterization.

If you excuse me, maybe I can add something here. When you are covering the hint OPTMIZE FOR, you could explain about OPTMIZE FOR UNKNOWN too.

I had never a case to use “OPTMIZER FOR @somevariable” and I think it’s a little risky if one can’t keep an eye on the plan. But I already used OPTMIZE FOR UNKNOWN more than once with absolute success.

Regards

Thanks! Yes, OPTIMIZE FOR UNKNOWN can also be a helpful hint.

OPTIMIZE FOR UNKNOWN Did that trick for us.

Thanks a lot.

i’m down to 40ms instead of 500ms !! YeY !!

That’s so great to hear!

Hi, this is very insteresting, What do you think local variables like this ?

ALTER PROCEDURE [dbo].[Get_OrderID_OrderQty]

@ProductID INT

AS

DECLARE @ProductIDLocal INT = @ProductId

SELECT SalesOrderDetailID, OrderQty

FROM Sales.SalesOrderDetail

WHERE ProductID = @ProductIDLocal;

Is this useful ? Why didn’t you describe this option ? Is bad option ?

Thanks a lot !

You can use local variables in stored procedures to “avoid” parameter sniffing. Understand, though, that this can lead to many plans stored in the cache. That can have its own performance implications. There isn’t a one-size-fits-all solution to the problem!

You mentioned that if you use local variables you will get a plan for each unique parameter. Could you do a mix of parameters and local variables? Thus getting a plan for the different local variables while using the other parameters for the initial plan? For example, I have @p_topic_ID and @p_participant_ID for parameters. I’d like to set the @p_topic_ID to a local variable to get the different plans for each topic, but I don’t want a plan for each @p_participant_ID, so I wouldn’t set that as a local variable.

Marc, I’m not entirely sure what you’re asking. Why don’t you post a sample over at http://DBA.stackexchange.com – include code, and there are a lot of other people there to answer the question and offer opinions, too.

Jes, I’m going to do that. And here is a sample for what I was asking. Once I here back for the other site, I’ll post the response. And thanks, this article has been very useful.

ALTER PROCEDURE [dbo].[stp_get_topic]

@p_topic_ID INT,

@p_participant_ID INT

AS

DECLARE @topic_ID INT = @p_topic_ID

SELECT t.topic_ID, t.topic_name, pp.current_step

FROM topic t

INNER JOIN participant_progress pp

ON t.topic_ID = pp.topic_ID

WHERE t.topic_ID = @topic_ID

AND pp.participant_ID = @p_participant_ID

What you’re describing will work similarly to the OPTIMIZE FOR UNKNOWN option. I didn’t cover that here, but it’s worth looking into!

Thanks again for such a clear article in this subject. The information about OPTIMIZE FOR… helped me solve a problem in our production database yesterday. My question for you is, what conditions in the data would suddenly make SQL Server start using parameter sniffing in a stored proc to decide to apparently optimize on that parameter as numeric when the query ran fine for months and months before that? Situation is that the values of the parameter in question are always stored in the database as varchar(8), but 99.99% of the time, the values themselves are numeric. The problem I ran into was that all of a sudden the query just started hanging and would never complete. It would gain CPU time perpetually but never finish. Flushing caches/recompiling/etc. had no effect. It appeared that SQL was in an internal loop when trying to build a query plan–but I can’t verify that. The query in question would run fine (1 to 2 seconds) when run as a script outside the stored proc. Your article gave me the idea to try “OPTION (OPTIMIZE FOR (@param=’ABC’))”, and the stored proc then ran in a second or so.

So the question is, do you think it was some kind of growth of the data over some threshold that triggered the server’s behavior? Have you had any luck with some kind of query that can predict when this sort of thing will come into play?

As data in your tables changes over time, the statistics on the table will be updated. As statistics are updated, the query plans are recompiled. The value passed in at the time the plan is recompiled is what will be saved in the execution plan (unless you use a hint like OPTIMIZE FOR).

This is the best explanation on Parameter sniffing I have read so far. Comments are equally helpful here as I got to know more ways to handle parameter sniffing by going through the comments.

very nice article…. In my project I am using output parameter everywhere.. I have a doubt like output parameter in stored procedure will create parameter snipping..?? If yes how we can avoid it

Rohan – output parameters are different, and they’re not affected.

I have a serious performance in some procedures where the parameters are not always used. This paremetros are tested in the WHERE clause like this:

WHERE (COL1 = @ PAR1 IS NULL OR @ PAR1)

AND (COL2 = @ @ PAR2 PAR2 OR IS NULL)

What you guys suggest to solve this problem? Dynamic query?

This a a common pattern to specify optional parameters and its also common to run into performance problem because of plan caching. You can rewrite the query (I can think of some strategies but they take time to explain). My advice is to disable plan caching (this is a sproc right?) with the downside that there will be some additional time due to plan construction but this is probably “amortized”.

Thanks for the reply

rewrite as a dynamic query would be one of your strategies or not?

What would you do?

Marcelo,

As you have code inside your stored proc it’s better to put conditions and split the OR

Example:

IF (@PAR1 IS NULL AND @PAR2 IS NULL)

BEGIN

SELECT …

WHERE (COL1 IS NULL)

AND (COL2 IS NULL)

END

ELSE IF (@PAR1 IS NULL AND @PAR2 IS NOT NULL)

BEGIN

SELECT …

WHERE (COL1 IS NULL)

AND (COL2 = @PAR2)

END

ELSE IF…

And go on spliting whatever conditions you have. As SQL brakes down query plans inside procedures you will have a query plan for each condition.

Splitting as Marcelo said is one of the strategies but its clear that this quickly becomes impracticable when the number of optional parameters grows

Dynamic is something to try but I don’t like working with strings. You can also write dynamic sql in many ways such as using a parameter placeholder or substituting a value. I’m pretty sure that a placeholder also uses plan caching.

An excellent resource for dynamic SQL is Erland Sommarskog’s blog The Curse and Blessings of Dynamic SQL.

It is a very good article about parameter sniffing.Now only i understand why my SP executed at different speeds for different stored procedures

Nice job on the topic. SQL can count really quickly when the query is fully sargable. Count the number of results for the given parameter and use that to determine which plan hint to use. That way you could limit this query to using either of two plans based on a setting you control. You would not have to recompile – losing any benefit of reused plans and incurring CPU load.

All code is nothing more than strings. Source control code-merging and compilers and sp_ExecuteSQL/EXEC take in strings and operate on it. If you split the code with CASE, you are typing a whole lot of extra text and introducing mistakes. SQL will still create/re-use separate plans for each case, so there really is no problem with dynamic sql inside the sproc.

Mike, not sure what you’re referring to. Can you post a code sample?

Hi Jes

It seems there are only two ways to optimize your original query, so using a manually set threshold, we can tell SQL Server which plan to use. I’m hopeful counting to see if we exceed the threshold is fast enough to compete with OPTION RECOMPILE, OPTIMIZE FOR UNKNOWN, variables etc. My understanding is the count should always be an indexseek since SQL stores the number of matches for each distinct key value in the index itself.

I’m concatenating @Hint into the SQL command, but that is not a risk for SQL Injection as @Hint was not passed into the sproc. I’ve parameterized @ProductID in the call to sp_executeSQL, so that is not a SQL Injection risk. The query will be exactly the same each time, so plans will be reused. The values for mouse, elephant and threshold puts optimization of this in the hands of a person.

ALTER PROCEDURE Get_OrderID_OrderQty

@ProductID INT

AS

DECLARE @Threshold Int,

@Counter Int,

@Hint Int,

@Params NVARCHAR(max),

@SQLQuery NVARCHAR(max)

SET @Threshold = 100

/*SQL will count really fast – but, is it faster than other methods?*/

SELECT @Counter = COUNT(*) from Sales.SalesOrderDetail WHERE ProductID = @ProductID

IF @Counter < @Threshold

/*Mouse*/

SELECT @Hint = 897

ELSE

/*Elephant*/

SELECT @Hint = 870

SELECT @Params = ' @ProductID INT '

SELECT @SQLQuery =

' SELECT ' +

' SalesOrderDetailID, OrderQty ' +

' FROM Sales.SalesOrderDetail ' +

' WHERE ProductID = @ProductID ' +

' OPTION (OPTIMIZE FOR (@ProductID=' + @Hint + '))'

EXEC sp_executesql @SQLQuery, @Params, @ProductID

I can see how this would work, and nicely – but I don’t ever want to leave decisions like “threshold for optimization” up to a person. I want to leave that up to what does it best – the query optimizer.

What happens when the data for both the “elephant” and the “mouse” increases? What if the “mouse” value exceeds the threshold? Is it thoroughly documented so that the threshold gets updated? What if the developer who created that left, and the person taking over for her had no idea that was there?

Leaving things like that to be managed by a person can be risky!

Hi Jess

I agree, except the optimizer didn’t do it best this time. Microsoft will have to add a new feature for this.

If the application server and the SQL server are not on the same server, where is the cache stored on? Is it cached on the application server, or on the SQL server?

The execution plans SQL Server uses are always stored on the SQL Server. The application may have its own cache elsewhere for query results that it can pick up again without talking to the SQL Server at all (memcached, app fabric cache are two common varieties of this).

Thanks for the quick response!

I did couple of testing in SQL Server 2012 and after looking into execution plan properties for SELECT, i see no parameter sniffing happening.

So i think that Microsoft did some enhancement by doing some Parameterization.

Thanks

Nice Topic. I did same of testing in AdventureWorks2012 on a SQL Server 2012 instance

and after looking into execution plan xml. The parameter value was always changed. I wonder why

I do not using the option recompile.

Hello Jes,

Nice job in this topic it is really helpful to many people.

I have issue with stored procedure execution from my application and working fine when i run from the SQL management studio. to check the issue i have start the SQL Profile.

I execute the stored procedure from the SQL Management Studio it execute in milliseconds and return 1000 records.

I have checked the SQL profile CPU : 156 , Reads : 10577 , Duration : 421 (Milliseconds)

Now I execute the stored procedure from application and it take around 24+ seconds and return same rows yes parameter values are same for both execution.

I have checked the SQL profile CPU : 24484 , Reads : 1748922 , Duration : 24900 (Milliseconds)

Can you please advice what is the exact issue ?

Thanks in advance.

Here’s a great resource on that exact topic: http://www.sommarskog.se/query-plan-mysteries.html

Very well written. Thanks for sharing this.

Hi Jes,

This article explains the problem very well. Thank you very much.

With regards to local variables, does this essentially disable parameter sniffing?

Is this the same as recompiling the stored procedure though?

One of the workarounds I’ve seen our team do is to rebuild the indexes, gather stats, recompile all procedures in the database; and also to alter the procedure so that it has the with recompile option. Very drastic…

Thanks.

Correct – local variables will not cause SQL Server to reuse the parameters. That is the same as recompiling the procedure every time. This can be very resource-intensive!

Recompiling all plans in the server by clearing the cache, or recompiling an entire stored procedure when only one statement in it is performing poorly, is indeed very drastic!

Someone in my company did the following to prevent parameter sniffing. I don’t think it does anything except waste memory. What do you think?

ALTER PROCEDURE [dbo].[SelectInternalReportItems]

@FiAccountId BigInt,

@IsReportQuery Bit,

@Filter VARCHAR(500),

@StartDt DateTime,

@EndDt Datetime,

@PageNumber Int,

@PageSize Int,

@StatusValues VARCHAR(MAX),

@SortColumn VARCHAR(50),

@SortDescending BIT,

@UserName VARCHAR(50)

AS

BEGIN

–Assign parameters to local variables to prevent parameter sniffing

DECLARE @FiAccountIdLocal Bigint = @FiAccountId,

@IsReportQueryLocal Bit = @IsReportQuery,

@FilterLocal Varchar(500) = @Filter,

@StartDtLocal DateTime = @StartDt,

@EndDtLocal DateTime = @EndDt,

@PageNumberLocal Int = @PageNumber,

@PageSizeLocal Int = @PageSize,

@StatusValuesLocal Varchar(Max) = @StatusValues,

@UserNameLocal Varchar(50) = @UserName,

@SortColumnLocal Varchar(50) = @SortColumn,

@SortDescendingLocal Bit = @SortDescending

Joshua – for questions about your code, the best bet is to post them on http://StackOverflow.com. We don’t really want to get in the habit of doing code analysis in blog comments. Thanks!

It hard to decide the best one, therefore looking for some input. As I think about the 3 main options we have

1. Recompile – CPU intensive to recompile but always uses best execution plan. Of course if plan is bad could consume considerably more CPU than what Recompile uses to have a good plan.

2.OPTION(OPTIMIZE FOR UNKNOWN) -This one I would think is very much the same as option 1 with exception if you have multiple blocks of code you can have some compliled and others not. If you only have 1 select then it should be same as option 1, right? Basically it is recompile at run time, right?

3. Local Variables – will not recompile at run time but will not have the most optimal plan for everything. This could end up using more cpu than option 1 do to the fact its not a great plan.

IN conclusion It would seem that the option 2 would be the safest general option for most things and then look at option 3 or 1 for one offs? I am not sure what benefit option 1 would have over option 2. I can see so many benefits option 1,2 has over 3.

Ken – as we discuss in the article, it depends on the query and the frequency that it’s being called. There’s no one-size-fits-all answer.

That’s a great Article, Jes. You have explained Parameter Sniffing simply.

After some hours trying to understand some procedures bad performance, this article sove the problem. Thanks.

Jes,

I recently came across this article and wanted to say great job. My initial questin is what’s causing the third query to choose a different plan. Its retrieving more records so one would think it would choose a better plan then an index scan.

Hi,

Our company has a Java application with an MS SQL database that i’m the Senior DBA for. We had performance issues which we tracked down to parameter sniffing (clearing the execution plan cache improved things) but our application doesn’t use stored procedures, it just throws SQL statements at the database.

Our best solution was to disable parameter sniffing. It was a little while ago but I believe this makes SQL build the execution plan based on the spread of data in the tables rather than the parameters, and it solved our issues, hopefully this may be of use to someone.

There is a trace flag 4136, this disables parameter sniffing.

Thanks,

Gary.

Gary – what would you say the drawbacks of this trace flag would be?

Well so far only one, and that is remembering to turn it on when you build a new environment 😉

We haven’t found any drawbacks other than this, it solved our mid-morning performance issues so that made me popular. We have a very skewed data spread, some customers are massive whereas others are little fish so this trace flag saved our bacon.

Thanks,

Gary.

Hi Jes,

I had faced similar issue in my environment, where store procedure variable was taking 33+ secs to run the complex query, one of my developer used the local variable and it is using 3 seconds now.

Does it mean, we should use the local variable for all the Stored procedures ? if not do we need to use OPTION (RECOMPILE) with all the queries ?

Thanks,

Sahil

Sahil – this is a little beyond something we can answer fast in a blog post. (I’ve got an hour-long module about it in our performance tuning class.)

Awesome Demo. Thanks much. If could learn little more about an indepth understanding of how to read the execution plan will be greatly helpful in future sessions.

Hi,

Thanks for the great post. Somewhere above using local parameter has been mentioned, I was wondering to ask can we use it as best practice for all stored procedure or it has to be used whenever we find parameter sniffing problem with specific code?

Neka – no, it’s definitely not a best practice. For more on how that works, click on Training at the top of the site, and check out our performance classes on statistics.

Re the “be creative” section, you ask this:

“How do you make the logic clear and easily understood?”

While I agree the whole suggestion ugly, I don’t see this being all that difficult. There would be a main sproc, and it would have the logic to select which of the mouse/elephant-specific sprocs to call. Which, most likely, would all have the same code, but with a different optimization hint.

I suppose an equally ugly method to avoid unnecessary recompiles, but without the separate sprocs, would be to store the last value used when determining an option recompile in a global variable created for that sproc. The sproc would then check if the current parameter(s) result in a different OPTIMIZE FOR value being used. This presumes the same general knowledge available as used to determine separate sprocs as above.

So, the one sproc would first determine if a recompile is needed by a presumably simple test that should be much quicker than recompiles. If it determines the last recompile was acceptable, it uses non-hinted queries. If it does determine a recompile, then the hinted version is executed, along with the current value of the parameter (which has been determined to be in the same class of likely plans as the last recompile).

As a last bit, of course after the recompile, the global variable for that parameter/sproc be updated.

This all gets even weirder when different parameters might control different query plans, but the same principle would apply.

And, yes, still ugly.

The above should read “(which has been determined to be in A DIFFERENT class of likely plans than the last recompile)”.

But after My test,

the problem already fixed by MS for the version 2017

Awesome! Sounds like you’re good to go. Enjoy.

Cannot get enough of your videos! Learn so much in each session! Thanks so much Brent!

Glad you enjoy ’em!

OPTIMIZE FOR took a 30-40 sec duration sp to 198 ms, amazing! Thanks FOR THIS POST! :()

Barring ETL and data warehouse background tasks, if you are still writing procs in 2019 you should have your keyboard taken away.

Pshhhh, who still uses keyboards in 2019?

Sorry I’m a few years late to this conversation but I’ve run across what seems to be the same issue. While I do understand the elephant vs. mouse analogy (the significant difference between the parameters), can this still be an issue even if the nature of the parameters much the same? Basically, as we create orders, the subsequent order line ids are passed into a procedure (which calls others). One day the system is fine, processing in under a second, the next day it’s slow: 5 – 6 seconds. Then fast again the following day. We do have a nightly maintenance job that rebuilds indexes for the tables involved. Everything I’ve read so far sounds like this. Just wondered if it may still be the case for order after order (again the nature of the parameters being the same – just newer values).

Thanks.

Matt – to troubleshoot that, we’d have to see the query plans – kinda beyond what I can do in a blog post comment. Best to start here: https://www.brentozar.com/archive/2009/03/getting-help-with-a-slow-query/

Thanks Brent. I’ll give that a look. I greatly appreciate the response!

I know this is a real old thread, but it feels like I read it over and over.

If my understanding of the cache is correct, it will do a hash on the SQL that is executed and if it finds that hash in the cache, then it will use that plan.

I have a multi-tenanted db and the results and data look wildly different for each client housed in the db. You can almost think of the CompanyID as a partition key in that the data will never touch.

Let’s pretend I have a simple sproc with the following signature:

spSearch @CompanyID bigint, @Filter nvarchar(50)

When I run this search for @CompanyID=1 it returns 20k records, but when I run it for @CompanyID=2 it returns 2 records.

This to me is a perfect candidate for the woes of sniffing. I don’t want to recompile every time, but I do want sql server to be smart enough to generate an appropriate re-usable plan that has been tailored for each CompanyID.

Knowing that the cache matches based on the exact sql, will appending a comment to the back of each call have an affect or is the cache based on the content of the store procedure?

exec spSearch @CompanyID, @Filter /*1*/

exec spSearch @CompanyID, @Filter /*2*/

I have tried to answer this question by querying dm_exec_sql_text, but I don’t like the answers I am getting 🙁

Am I missing something? Would this be applicable to other queries when our calling code is smart enough to know that different parameters will most likely trigger sniffing and a need for a different plan?

Say hash in the cache 10 times fast…

Sounds like you’re at the perfect point to attend my Mastering Parameter Sniffing class. I wish I could teach the whole thing in a blog post comment, but …

How does parameter sniffing db level settings affect to work load vs. changing the code with options ?

When to use DB level settings vs. code change?

That’s a great question, and a little beyond what I can cover quickly in a blog post comment. Check out my Fundamentals of Parameter Sniffing and Mastering Parameter Sniffing classes.

[[Another option may be to create separate stored procedures for each value,]]

What I used to do when I expected a limited quantity of discrete values that would normally result in significant parameter sniffing problem, was to code conditional logic into the SP so that the actual sql statements could have hard coded search argument.

The actual sql query would be repeated in each conditional section, the only difference (usually) would be the hard coded parameter. In this way the compiler at first run would build optomized plan for each hard coded parameter all in the one SP and the conditional test would determine at runtime which hard coded statement would be executed.

This works incredibly well for performance when there are a limited number of expected parameters that would cause performance problem, or when there are some expected parameters that are known will be huge problem for performance if other parameters happen to be compiled for first. For example, null parameter vs. any other discrete value passed in.

That’s true too! It’s just a bummer that it’s hardly ever an option since most code involves a lot more than just a couple/few parameter values.

Great article

Can a table type parameter cause Parameter sniffing ?

Here is solution provided by our DBA to avoid parameter sniffing: For each procedure input parameter, make *_copy parameter, and use these *_copy parameters in procedure queries.

Zoran – unfortunately, your DBA is wrong. When they get time, send ’em to my Mastering Parameter Sniffing class, where I explain why that solution doesn’t work. Cheers!

[…] https://www.brentozar.com/archive/2013/06/the-elephant-and-the-mouse-or-parameter-sniffing-in-sql-se… […]