A client said the coolest thing to me the other day. He said, “We talked before about why we would want to start using optimistic locking in our code. How do we get there?”

If you’re not a SQL Server geek, that comment probably doesn’t even make sense. But to some of us, when you get an environment to the point that it can handle optimistic locking AND you have developers who are ready to make a few code changes to use it to their advantage, it’s exciting!

I am a huge fan of optimistic locking in SQL Server and have seen dramatic improvements in performance when it’s implemented well. For the right database and the right team, a little hard work makes scaling the application easier in the long run.

Optimistic Locking 101: A Quick Review

If you’re new to Isolation Levels in SQL Server, this post may be hard to follow. Consider taking a quick break to browse through our big list of references on Isolation Levels in SQL Server around the web.

Here’s a very simplified refresher for those who know their isolation levels, but need to brush out the cobwebs:

SQL Server uses “pessimistic” locking in user databases unless you tell it to do otherwise. Your default isolation level in SQL Server is READ COMMITTED. Although that sounds reassuring– who doesn’t want a little commitment from their database?– it’s a form of pessimistic locking. Under the READ COMMITTED isolation level readers can block writers, and writers can block readers. Sometimes the default isolation level isn’t strong enough to provide the right level of consistency, so people need to use higher (“more pessimistic”, if you will) isolation levels to prevent phenomena like non-repeatable reads and ghost records.

Let’s say we have an Orders table, and we’ll add a row in it:

|

1 2 3 4 5 6 7 |

CREATE TABLE dbo.Orders (Id INT IDENTITY(1,1) PRIMARY KEY CLUSTERED, CreationDate DATETIME2 NOT NULL, ItemOrdered NVARCHAR(100) NOT NULL); INSERT INTO dbo.Orders(CreationDate, ItemOrdered) VALUES (GETDATE(), N'Jaguar XKR-S'); |

When our database isn’t under much load, it’s easy to query the open orders – just SELECT * FROM dbo.Orders, and you instantly see the one and only order we’ve had.

However, as load increases in a database, then tension between ensuring transaction isolation / correct results and also supporting concurrency increases. You start to battle with blocking and deadlocks.

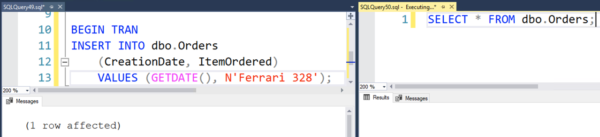

While someone is trying to load a new row into the Orders table:

|

1 2 3 4 |

BEGIN TRAN INSERT INTO dbo.Orders (CreationDate, ItemOrdered) VALUES (GETDATE(), N'Ferrari 328'); |

If someone tries to check our Orders at the same time, they’re blocked:

In the screenshot above, the left window is inserting a row, and the right window is blocked. That select will just hang there forever until the left query either commits their transaction, or rolls it back.

To work around that, people often use NOLOCK – which works in the sense that the query on the right can now finish instantly:

But that has disastrous results because NOLOCK will show you rows that never got committed, it’ll skip rows, it’ll read rows twice, and your query can outright fail. I explain and demo that over here.

Enter optimistic locking.

SQL Server 2005 introduced two new isolation levels to help you in your mission towards ever greater concurrency: SNAPSHOT and READ COMMITTED SNAPSHOT isolation (this second option is often called “RCSI”). These two isolation levels use versioning to allow some or all of your queries to speed on by “in flight” transactions and read a previously committed version of the data.

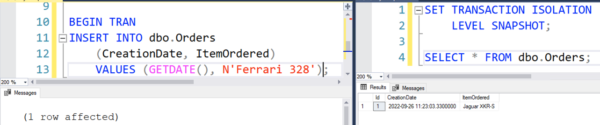

I just have to do two things: turn on optimistic concurrency (more on that in a second), and add a single line to my read-only queries that want to bypass blocking problems while still seeing accurate results:

|

1 2 3 |

SET TRANSACTION ISOLATION LEVEL SNAPSHOT; SELECT * FROM dbo.Orders; |

The result is, well, results! I can get results instantly instead of being blocked:

The select query sees only the true data – as if the insert on the left hasn’t committed yet, which is true!

Readers Don’t Block Writers, Writers Don’t Block Readers

The simplest, purest benefit from optimistic isolation levels in SQL Server is just this: you can allow heavier, reporting style queries to run without blocking your critical writes under OLTP. You suddenly have another option between tuning every expensive query, using dirty reads, or having to run queries against a secondary server.

Sometimes you can decide, “This query isn’t the greatest, but it’s OK to run if it doesn’t block anyone and just reads previously committed data.”

And yes, you can do more than that. You can use the SNAPSHOT isolation level for data modification queries, but then you need to start detecting and handling update conflicts. (Note: this does not apply to READ COMMITTED SNAPSHOT.) There’s nothing wrong with doing that, but in most environments it is not the “biggest bang for your buck” change to implement.

How Do You Implement Snapshot or Read Committed Snapshot Isolation… Safely?

Moving to optimistic locking sounds great, but it’s not a quick change. Rush in too soon and you may suffer big performance problems, loss of availability, and incorrect query results.

That list of potential problems scares off most people. That list makes a project manager think, “I’ve got 99 problems and optimistic locking ain’t one.”

But it really isn’t that hard to test and implement optimistic locking. It just takes a little time and a good plan. Here are three steps I always include when planning out an Isolation Level change.

1. Measure the Effect that Enabling Optimistic Locking has on SQL Server Performance

When you have an existing code base and you’re evaluating optimistic locking, at first it seems that you have a little bit of a chicken and egg problem.

- You’re not sure if your production server can handle the load

- Testing resources are required to make sure the change won’t create bad data

Nobody wants to invest in testing if the environment can’t handle the load. And people don’t know how to test the impact of the feature safely if it hasn’t been proven to not create bad data. It may seem like a project management deadlock.

Never fear– there’s a method to testing the load first! The key lies in understanding the difference between the two isolation levels. If you enable SNAPSHOT isolation on a database, SQL Server starts implementing versioning technologies so that queries can set their isolation level to snapshot. (Queries have to do this explicitly– as in run a command ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’.)

Here’s the bit that’s easy to miss. As soon as you enable SNAPSHOT isolation on a database, SQL Server waits for running transactions to complete, then immediately starts using versioning for data modifications. You start using an extra 14 bytes per row on tables in the database itself. Also, versions are created in the tempdb version store to hold the previous value of data for updates, deletes, and some inserts. This happens even if no queries are run using SNAPSHOT isolation. Seriously.

This may seem a little bit like a rip off. Yeah, you’ve gotta pay for the performance even if you’re not using SNAPSHOT! But it’s for a good reason– your SQL Server does not yet have the feature of being psychic. It can’t predict if at any instant you’re going to start a query using SNAPSHOT, and it’s got to make sure that the database is ready if you do that at any time.

But this can also be a useful feature. You can test the performance impact of versioning for a database just by setting ALLOW_SNAPSHOT_ISOLATION to ON. When you issue the command to change this, SQL Server will wait for all active transactions to complete before returning a status to you (Books Online explains why and how to handle it here.) You can then monitor performance for as long as you have planned and then reverse the process.

As long as you haven’t changed any code and are using the ALLOW_SNAPSHOT_ISOLATION option, no queries will actually USE the changed isolation level and you can simply monitor performance impact of optimistic locking.

One gotcha: I’ve mentioned a specific setting on purpose. The READ_COMMITTED_SNAPSHOT option is named similarly, but is very different! That setting changes your default isolation level to use optimistic locking without code changes on your queries. As soon as you turn it on, some queries could start getting unexpected results or writing incorrect data. (An example of this is below in #3.) The process of turning on READ_COMMITTED_SNAPSHOT also works differently. For a single instant the connection issuing the command to enable RCSI must be the only active command in the database. (I go into more detail on this in the “gotchas” section at the end of this guide.)

Because of both of these reasons, ALLOW_SNAPSHOT_ISOLATION is much more suitable to dip your toe in and find out how enabling optimistic locking impacts your workload. Still follow common sense: test first at low volume times and work your way up to busier times. Know that disabling and going back to “normal” may not always happen as fast as you want if you have long running transactions or a very heavy workload.

2. Plan to Prevent Disaster with Snapshot Isolation

The second step to identify what might get out of hand if something unusual happens– like a rogue session that leaves a transaction open. This isn’t a far fetched scenario. I’ve had this happen due to both human error as well as bugs in application frameworks. It’s really not that rare and it could happen to you.

Your plan needs to identify the biggest risks from the change for your business and what may be challenging to the team who responds to incidents. Then set things up so that if weird things happen, people get notified and know how to triage the issue.

Here’s an example of two things you may identify as your biggest concerns if you implement optimistic locking in SQL Server:

- Tempdb could fill

- Long version chains could be created, causing query performance to get super slow

How you handle these will vary depending on your monitoring system, tools available, and documentation. Even if you don’t have fancy tools, you can use SQL Server’s performance counter Alerts to let you know if used space in Tempdb rises above a given water line. You can also alert on performance counters for longest running transaction time, or use DMVs to monitor the version store in detail. (Note that not all these DMVs are lightweight to use when the version store gets large.)

Your mission is simple: identify the simplest way for you to monitor the conditions you’re concerned about. Test that it works on a non-production system. Most importantly, write down documentation for the alert that will help someone decode the problem if you’re not around to handle everything and take basic actions.

3. Choose Carefully Between Snapshot and Read Committed Snapshot Isolation (RCSI)

OK, now this is where the developers come in. You can use optimistic locking in SQL Server by using either of these database options– or both. Which one is right for you?

The biggest consideration with an existing code base is how much testing is available for the code. I think the READ COMMITTED SNAPSHOT isolation level is great, but it is going to change your default isolation level for every query right away. This may lead to incorrect results, depending on how your code is written. The worst-case example is if your code:

- Reads table data into variables or temp tables

- Uses the variables or temp table data to update table data, and the code just assumes no changes have been made between steps 1 and 2

This is called a race condition, and explaining this is beyond the scope of this post. Not all coding patterns are prone to this issue. However, with an existing code base, validating all the code and ensuring that you don’t have any legacy code that’s prone to this issue isn’t always a simple thing. If you have the ability to make changes to your code and you already know a class of queries which you’d like to use with optimistic locking, you may prefer to introduce SNAPSHOT isolation into your environment first, instead of READ_COMMITTED_SNAPSHOT.

To implement SNAPSHOT isolation on some statements, you need to first enable it using the ALLOW_SNAPSHOT_ISOLATION database option. You can then implement SNAPSHOT isolation for some queries– you do this by setting the isolation level with ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’. It’s easier to implement this at first for read queries which you’ve tested and know aren’t prone to data issues similar to what we showed above. This means that you may need to use separate connection strings for those queries, or make sure you reset and check the isolation level that you’re operating at after statements complete.

In other words, isolation level is a session level setting. You need to make sure that don’t use SNAPSHOT isolation by accident for more queries than you intend to.

Gotchas With Implementing Snapshot and Read Committed Snapshot Isolation Levels

There’s a few little facts that I’ve found over the years which I didn’t expect. They make total sense, I just hadn’t thought of them until I worked with optimistic locking in SQL Server.

-

- Hints in your code still apply. Let’s say you have a problem with locking. Over the years NOLOCK hints are added in many places to help make this better. You finally get confirmation from your dev team that READ_COMMITTED_SNAPSHOT is safe for your applications and your change is approved, so you turn it on. You’re spending all those performance resources on versioning, but guess what? Those NOLOCK hints are still causing queries to do dirty reads instead of using the data versioning! The NOLOCK hints gotta go.

- Writers still block writers in Read Committed Snapshot Isolation (RCSI), and depending on how you’ve written your transactions, this could change results in your application. Read more here.

- Update conflicts aren’t the same as deadlocks. Update conflicts are only possible when you use SNAPSHOT isolation for data modification queries– you don’t have to worry about these with READ_COMMITTED_SNAPSHOT. However, it’s often more practical for people to implement SNAPSHOT because of the testing issues I outline above. Even if you’re only implementing SNAPSHOT for read transactions, familiarize yourself with the error codes and messages for update conflicts and make sure your code handles error 3960 (“Snapshot isolation transaction aborted due to update conflict…”).

- Enabling READ_COMMITTED_SNAPSHOT on a busy system is harder than it sounds. As I mentioned before, turning READ_COMMITTED_SNAPSHOT on or off is a little unusual. You don’t technically have to put the database into single user mode, but to get the command to complete you need to be running the only active command at the moment. The simplest way to do this is to use the ‘WITH ROLLBACK IMMEDIATE’ clause of the ALTER DATABASE command. However, I have not found this to run predictably or easily on very high transaction systems. I recommend planning a change to turn the READ_COMMITTED_SNAPSHOT setting on or off in a database in a very low volume time if you need to keep things predictable.

- Rolling back and disabling SNAPSHOT requires more code changes. In order to stop row versioning, you need to disable SNAPSHOT — and as soon as you do that, queries that set the isolation level to SNAPSHOT and try to run will fail with Error 3292: “Snapshot isolation transaction failed accessing database ‘dbname’ because snapshot isolation is not allowed in this database”

Isolation Level Changes Are Not Actually Rocket Science

Isolation levels in SQL Server are complicated. But if you’re new to this, don’t think that it’s only optimistic locking that makes it complicated. It turns out that the read committed isolation level isn’t as straightforward and predictable as you might think, either!

As I work with SQL Server more and more, I continue to learn that isolation levels are complex, whether you’re using pessimistic or optimistic locking. The critical thing is to use a smart testing and implementation plan to safely find the settings that work for your team and applications.

Want help? Talk to Brent for free.

The problem probably isn’t fragmentation – you keep defragmenting and the problems keep coming back.

Our SQL Critical Care® is a quick, easy process that gets to the root cause of your database health and performance pains.

Learn more, see sample deliverables, and book a free 30-minute call with Brent.

145 Comments. Leave new

Your optimistic marbles look a little to much like Jack from the Jack in the Box commercials….{-:

Good article!

Maybe the marbles DO need a jaunty hat? If I do a presentation on this soon, I’m going to use that 🙂

Kendra – Great post!

We dove into snapshot and RCSI on a project last year…and luckily since it was still in the development phase, we didn’t have to worry about disrupting a production environment. But there was still a bit of code review involved.

Some of the tricky issues we came across dealt with the effects (of snapshot isolation) on trigger-based constraints. A detailed explanation (with simple examples) can be found in Defensive Database Programming with SQL Server, by Alex Kuznetsov…which is a free book, btw!

Thanks for the comment Bill, that’s great.

Having implemented optimistic locking and handled some tricky issues– what is your take? Would you recommend using snapshot isolation to others?

(Edit: I also added a link to those chapters by Alex K to our “Isolation Levels in SQL Server” links and references page— that’s an awesome resource!)

It may be overkill for some situations…ex. typical data warehouse with regular batch load window….but for us it worked well for the system we were designing (a hybrid OLTP/DW).

Yes, gr8 article. Before coming across it i spent 3 hours reading others and just ended up with major confusion. You explained it very clearly and succinctly.

Awesome article.

Thanks

Great article! It cleared up a question that I was asked today, and I appreciate it!

Hey David,

Thanks for the comment! Glad it was helpful. And nice to see you here on the blog!

Hi Kendra,

Must say a good article, beautifully describes both of these isolation levels and other reference material also add to its magic.

Regards

Gurpreet Singh Sethi

Thank you very much! Really appreciate your feedback.

Hi Kendra,

Great article thanks! Could you clarify one point for me please………. in your article you have said

“You start using an extra 14 bytes per row on tables in the database itself. Also, versions are created in the tempdb version store to hold the previous value of data for updates, deletes, and some inserts. This happens even if no queries are run using SNAPSHOT isolation. ”

Does this mean that once snapshot is enabled 14k immediately gets added to every row in the DB? (This is what I inferred from your article) This does not appear to be the case in my testing. Or does this mean that from this point onwards each newly updated row contains an extra 14k?

Thanks for your help!

Jonathan – it’s only added for new writes going forward, and it’s 14 bytes, not 14 kilobytes.

When we switched on RCSI on a large and very busy database we started getting a lot of deadlocks in certain areas of the system. What had been blocking locks no longer blocked, which allowed the code to continue on different paths until there was a deadlock. Ick.

However, the fix was not too hard. It involved creating new indexes and the deadlocks went away.

Excellent post…

Hi, quick question for you regarding SNAPSHOT isolation: I’m a bit confused by the “Update conflicts aren’t the same as deadlocks” warning in your “gotchas” section. If I’m understanding the SNAPSHOT isolation setting correctly, even though it’s always preparing data for snapshot queries, it only actually comes into play with an explicit ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’ command, either inline or in the connection string, correct? If that’s true, and you do not set that level on your connection, how could you get an update conflict on an update if you did NOT explicitly set that level? If your only use of SNAPSHOT isolation is when you explicitly turn it on for reads, would you ever get an update conflict error?

Thanks for your help! Great article — our system has grown rapidly over the past year, and I’m wrestling with the deadlock beast, so I need all the advice I can get!

Hi Landon!

Yes– you only get the UPDATE conflict when you are using SNAPSHOT iso (and have explicitly requested it) and you are modifying data. (Won’t happen if you’re using read committed snapshot, and won’t happen if you are using Snapshot and NOT doing a modification.)

Hope that helps,

Kendra

Kendra,

Yes indeed, that does help — thanks for the quick reply. I thought I was understanding it correctly, just wanted to make sure.

And, if you have the time, here’s a follow-up question. If I’m not ready to go very optimistic (RCSI), which is a more appropriate first line of defense: using NOLOCK in my reads, or enabling SNAPSHOT and using that explicitly in my reads? I mean, I’m working on a schema re-design right now that should alleviate some deadlock pressure by removing some ugly read/update combination procs, but I’d like to safeguard against the problem as much as possible.

Thanks again for your help — I really appreciate it.

Cheers,

Landon

I’m not the biggest fan of NOLOCK, but I think there can be a time and a place for it in small quantities. NOLOCK can be OK when:

* Dirty reads (incorrect data) is OK to return to the user

* It’s OK if a query sometimes fails with an allocation order scan error (this can happen with read uncommitted)

* You can go into the code and pull out the NOLOCKs later on when you move to optimistic locking

When you look at that list of three things, NOLOCK is pretty depressing. Queries can fail, can return wrong data, and end up with hardcoded hints that you have to clean up later when you do it a better way? That’s just kind of a drag. But it is a pretty quick fix AND it doesn’t require all the work of making sure that your tempdb can support versioning/ doesn’t run out of space/ etc.

So the answer to this depends on how well you can support versioning in your environment. Do you have a strong enough IO subsystem and enough DBA support to handle turning on optimistic locking for SNAPSHOT at this point (everything from monitoring down)? If so, I would start there.

Thanks for your advice — it’s a big help. I think my true first line of defense is going to be a schema redesign to try to keep application processes from stepping on each other, and if that’s not enough, we’re moving to a bigger DB server, so I can try SNAPSHOT isolation. Wish me luck!

Great Post, very informative

Excellent information. An interesting side effect of enabling Read Committed Snapshot (RCS) I’ve found that SQL doesn’t always release data when deleting data from tables that contain LOB data (varchar(max), varbinary(max) etc) . We have a table that gets purged on a monthly basis and contains large amounts of data in the LOB columns, as soon as we enabled RCS the space the DB (and table) used stopped decreasing after the delete. As soon as you turn off RCS sql starts freeing decreasing the space the table is using.

Thanks for the great article!

If i enable SNAPSHOT isolation on my database, does this mean that only queries that now specify ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’’ will always read the snapshot version of the data in tmpedb?

If so, then is it fair to say that usually only SELECT statements get re-jigged to use the snapshot so that ‘readers don’t block writers and vice versa’?

Thanks

Maybe i should have been more clear, my bad, i meant if i enable ‘ALLOW_SNAPSHOT_ISOLATION ‘ on…

Hi Mark,

This is a bit complicated. There are two settings, and they are named very similarly, so that’s the source of most of the confusion.

1. SNAPSHOT – This can be enabled for a database and it starts row versioning. Only queries that specify ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’ will use it (and the generated versions of data). This lets you use optimistic locking at a transaction level (can be more than one statement). You *can* use this one for update transactions, I don’t recommend starting there however.

2. READ COMMITTED SNAPSHOT – This can be enabled for a database and ANY query that uses read committed will automatically start using this isolation level and the generated version. This lets you use optimistic locking at a single statement level. If you have NOLOCK hints in your code or other hints setting isolation levels to things other than read committed, those will continue to be honored.

Hope this helps!

Thank you Kendra for the quick response! Without knowing too much more, i guess its safe to say (as you mentioned), TEST TEST first using ALLOW SNAPSHOT ISOLATION ON, and using SELECT queries and determining the performance impact first (tempdb, long version stores, etc..) before going any further.

Have a good weekend!

Hi Kendra,

Great article! I have a follow up question to this.

From (http://technet.microsoft.com/en-us/library/ms189050(v=sql.105).aspx):

When either the READ_COMMITTED_SNAPSHOT or ALLOW_SNAPSHOT_ISOLATION database options are ON, logical copies (versions) are maintained for all data modifications performed in the database. Every time a row is modified by a specific transaction, the instance of the Database Engine stores a version of the previously committed image of the row in tempdb. Each version is marked with the transaction sequence number of the transaction that made the change. The versions of modified rows are chained using a link list. The newest row value is always stored in the current database and chained to the versioned rows stored in tempdb.

>logical copies (versions) are maintained for all data modifications performed in the database.

This part worries me. If I set ALLOW_SNAPSHOT_ISOLATION to ON then will *all* data modifications have row versions in tempdb, even default READ COMMITTED ones? My ideal would be to have only SNAPSHOT ISOLATION transactions keep row versions. But if this were the case, I guess this would mean that an update under READ COMMITTED would still block a select under SNAPSHOT ISOLATION.

Anyway, so my question is: If ALLOW_SNAPSHOT_ISOLATION is ON, then will all data modifying transactions of any isolation type create row versions in tempdb?

Thanks

Yes, versions have to be created for all modifications, regardless of their isolation level.

To simplify it down, let’s just talk about ALLOW_SNAPSHOT_ISOLATION for a second. When someone is updating data, SQL Server has no idea if a transaction using SNAPSHOT isolation might start at any instant– so it has to do versioning for the modification just in case. Because how could it know?

READ COMMITTED transactions will escalate to snapshot isolation if you set the READ_COMMITTED_SNAPSHOT option as Kendra describes.

This works fine for single atomic queries but not so great if you want to do multiple reads on a set of snapshot tables, but it depends on whether you care about reading those published pieces of data as sets’

You can read my post on this for more details:

http://brettwgreen.wordpress.com/2013/03/21/reading-from-snapshot-databases-with-multiple-tables/

Thank you for a great article! I have a question about locking behavior when you use RCSI. If row level locking is used, I see no issues, since only the changed row snapshot will be created. But if sql decides to use page(s) locking, does it mean that it has to create a snapshot for every row in those pages? I hope the answer is no, because if yes, I would seriously consider disabling PLL. Could you please clarify?

Hi Ilya,

This is implemented as row versioning– and the rows versioned are the rows modified (not the rows locked). You can prove this yourself in a test database (far far away from production) by using modification queries with locking hints that force page locks or table locks, and snooping around in the actual version store records in sys.dm_tran_version_store. (Be very very careful with that in production, as books online notes it’s SUPER detailed.)

http://msdn.microsoft.com/en-us/library/ms186328(v=sql.105).aspx

Hope this helps,

Kendra

Hi Kendra,

Thanks for the post! Could you take a look at the question I have, please?

How does SQL Server know that he has to look a record up in the version store? Let’s pretend session A modifies a few records and escalates the row-level locks to a page-level one. At the same time another session B tries to read that page. It sees the page being under X lock but what happens afterwards? How does session B know which records on that page are modified and therefore in the version store, and which are not? 14 bytes pointers? But, to me, all the rows on the page can have them: some pointers can be relevant, but some can be just outdated.

Thanks!

Borka – Kendra doesn’t work here anymore. For random q&a, head over to dba.stackexchange.com

Blog comments aren’t a great place for this kind of help.

Thanks!

please send me new notes….

New notes on what?

Quick question… let’s say I have a stored proc that calls another stored proc that in turn implements “SET TRANSACTION ISOLATION LEVEL SNAPSHOT”; when the stored proc returns, is the isolation level still set to SNAPSHOT, or does it retain that of the current stored proc before the call? Or, is the scope of the isolation level… isolated … to the scope of the called stored proc?

Hey Greg,

Short answer: “Isolation levels or lock hints set in stored procedures or functions do not change the isolation level of the connection that calls them and are in effect only for the duration of the stored procedure or function call.” (From: http://msdn.microsoft.com/en-us/library/tcbchxcb.aspx)

So the scope should just be to the “child” stored procedure.

Longer story: I’ll demo this in an upcoming blog post to show how you can prove that it’s working yourself.

Hi Kendra,

Have you done that blog post ? I haven’t finished reading the comments yet

Zeeshan – no.

Thank you K for this article. I am going to do a 3 part training on locking, Blocking and noLocking for my developers (for me too.) would you suggest a book and maybe a Youtube Video?

Sure, check out our isolation levels resource page that has a ton of links and videos:

https://www.brentozar.com/isolation-levels-sql-server/

I think I’m in love!! 😉

This was a FANTASTIC article!! I’ve been struggling trying to understand the nuances of the whole snapshot isolation paradigm and couldn’t find anything that really broke it down for me in easy to swallow nuggets…VOILA!!

I can’t believe I haven’t run across your blogs in my ten years of working with SQL Server.

You have a new fan!!

A thousand thank you’s!!

Great article!! I noticed you mention that the isolation level can be set in the connection string. Do you have an example of how to set via the connection string?? I can only find examples directly setting within each individual query using “SET TRANSACTION ISOLATION LEVEL SNAPSHOT” or by using the ADO.NET SqlConnection.BeginTransaction method. For example: If I wanted certain reports to quit blocking DML operations, it would be much easier to add (or remove as part of a rollback plan) snapshot isolation in the connection string of a few shared DataSources on a reporting server vs. changing each query for each dataset in each report.

Hi Brad,

I believe that I misspoke and need to correct the article. I believe that you are correct based on this page: http://technet.microsoft.com/en-us/library/ms189542(v=sql.105).aspx

It does appear that resetting the connections doesn’t reset the isolation level, which may be why it seems like it’s set for the entire connection: https://connect.microsoft.com/SQLServer/feedback/details/243527/sp-reset-connection-doesnt-reset-isolation-level

The link to Alex Kuznetsov’s Defensive Database Programming book has moved:

http://www.red-gate.com/community/books/defensive-database-programming

this is a monograph please be brief when you explain something

Hey Miguel,

On complex how-to topics, we do sometimes choose to put lots of detail into a single post. Otherwise you end up with 12 part posts where folks have a hard time finding everything.

If you need a brief explanation of how to do what we describe in this article, I’m fairly certain the feature isn’t a good fit for you. There are a lot of gotchas, and you’re likely to end up in an outage situation.

Best of luck,

Kendra

Hi,

For implicit TSQL commands (INSERT/SELECT) that are not wrapped inside an explicit transaction, will simply turning on snapshot isolation be sufficient or Do I need to explicitly wrap it with a BEGIN TRAN ?

I don’t want to turn on read committed snapshot option as it applies for entire DB activity.

Thanks.

Hi Praveen,

To use SNAPSHOT, you must explicitly set the isolation level for the session to snapshot– but you don’t have to stop using autocommitted transactions as long as you do that.

One of the issues with RCSI referenced in books online (http://msdn.microsoft.com/en-us/library/ms188277(v=sql.105).aspx) is that “•Update performance can be slower due to the work involved in maintaining row versions”. You mention that this is also true for some inserts. What about bulk insert methods that are non-logged (BULK INSERT, INSERT INTO … WITH (TABLOCK), etc)?

Hey Tim,

Bulk inserts are sometimes minimally logged, but they do get logged. Typically an insert won’t generate new records in tempdb because there’s no “old version”, but there’s the extra overhead from the on-row pointer.

Still, I would look at data modification performance on a case by case basis. The amount of work the version store is doing varies by workload, and of course in many workloads data modifications may be faster with RCSI due to reduced blocking!

If you’re concerned about a specific case with bulk load then I would just do some testing with a restored copy of the database and time how long it takes with both settings and your specific bulk commands.

The most common risk I’ve found with optimistic locking is that long-running transactions cause version store pileup, and that causes query performance problems with anything using versioning (which is a lot!). So that’s still one of the main things I’d monitor for.

Hi Kendra,

Fantastic article, I really learnt alot from this. But I have some follow up questions:

If I enable RCSI, do I NOT have to set session level transaction isolation?

If my application use’s bcp to do an insert into temporary tables, will I need to configure the loader script to use bcp bulk insert with a TABLOCK hint in order for it NOT to conflict?

Do you have an example of bcp statements when RCSI is enabled?

If RSCI is enabled and I’m using bcp, should I consider modifying batchsize to reduce the amount of time bcp locks my table? Do you have any examples of how you would do this (optimum batchsize based on record count)

Hi Andrew– glad it’s helpful!

If you enable RCSI it changes the default isolation level for queries using the database. So you don’t have to set it on every session. However, if you’re already setting it to something like READ UNCOMMITTED with statements or hints, that’ll still take effect.

For your BCP question, if they are temp tables as in tempdb then I’m not sure they’d be impacted? Perhaps I’m misunderstanding. But either way I would just encourage you to do some testing.

Hi Kendra

Have added Transaction Isolation Level Read Uncommitted to all Stored Procedures.

Also, Have added WITH(NOLOCK) Table hints in SPs.

These are added to avoid Blocking and Deadlock – We are facing lot of issues because of deadlock.

If i go for above 2 implementations then what would be the Pros and Cons of Uncommitted isolation ?

Note : We cannot go to SNAPSHOT Isolation and at the same time i need to the better Concurrency.

Your help would be much appreciated.

Hi Praveen,

This post isn’t really about deadlocks or dirty reads specifically. Check out Jeremiah’s posts on deadlocks here: https://www.brentozar.com/archive/2011/07/difficulty-deadlocks/

There are a lot of downsides to read uncommitted. We have a video on that here: https://www.brentozar.com/archive/2011/11/theres-something-about-nolock-webcast-video/

Kendra

Hi Kendra,

That was really good article about deadlocks indeed. But i have few followup questions about No Lock.

1.To eliminate deadlocks, I am using no-lock/uncommitted in my queries,but again this will cost Dirty read and concurrency would be affected. I cannot go for RCSI as of now, cos that would need extensive testing and would be stuck in FULL Tempdb scenario. So, what is the best and quickest way to mitigate the deadlocks ?

Its for sure that i am going to work with few architectural patterns to use in an application to avoid deadlocks as stated in your links.

Thanks

Praveen – to get the right answer for your scenario, we would need to know more about the underlying tables and the queries involved. It’s a little beyond what we can do quickly in a blog post comment, but if you can post example schema, code, and deadlock graphs to http://DBA.StackExchange.com showing the problems, you might find folks willing to help for free. Enjoy!

Hi Kendra,

Just wanted to confirm my understanding of your marbles(!) example..

With READ_COMMITTED_SNAPSHOT ON, the reason why your marble is RED is:

– it uses transaction sequence numbers against the objects being updates, so Transaction 2 has a more recent transaction sequence number than Transaction 1, so when T1 gets committed, the real value is that of the latest transaction sequence number for that object (T2)

WITH READ_COMMITTED_SNAPSHOT OFF, the reason why your marble is WHITE is:

– T2 is blocked by T1, so when T1 commits, it changes the black marble to white. Then T2 is allowed to go through but there is no more black marble to update to red.

Do I understand correctly? Thanks in advance and happy holidays!

Hey Mark,

It may just be that it’s Friday afternoon after a long week, but— there aren’t any red marbles at all. So I’m just officially confused!

But yes, XSNs are used for this. If you wanna dig more into that and prove it, Kalen Delaney has info on a DMV that you can use to get your hands dirty here: http://sqlblog.com/blogs/kalen_delaney/archive/2008/04/03/how-sql-server-detects-the-correct-versioned-rows.aspx

Edit: it has clearly been a very long week because I have now found the red marbles in the example 🙂 But I think I may have lost my own!

kl

Hi Mark,

With RCSI on, you get that result because of the versions of rows that SQL Server is operating on, not because of a last writer wins race condition.

With RCSI off, and pessimistic locking in place, you’re correct – the lock taken out by transaction 1 is held until commit. This prevents transaction 2 from proceeding until transaction 1 is committed or rolled back.

For more fun with isolation levels, check out A Critique of ANSI SQL Isolation Levels.

Thank you both K and J.

We have implemented SNAPSHOT for only 3 main/long-running reports in our ASP.NET app which tend to cause deadlocks with other portions of the system (previously we used NOLOCK, but removed that and started using SNAPSHOT)

At first, everything seemed great. SNAPSHOT was used ONLY for read-reports (as the first line of the SQL query) and never for any inserts/updates.

Here is the problem: Even though our SNAPSHOT argument is ONLY used for the read-reports (as a first line in the query), we are getting occasional exceptions for other queries such as:

Snapshot isolation transaction aborted due to update conflict. You cannot use snapshot isolation to access table ‘dbo.tbl_Name’ directly or indirectly in database ‘db_name’ to update, delete, or insert the row that has been modified or deleted by another transaction. Retry the transaction or change the isolation level for the update/delete statement.

But, we are NEVER using the SNAPSHOT argument for these insert/update queries. I realize that the snapshot setting is kept per session, but we open/close our database connections properly for every individual query (This is an ASP.NET web application) so any insert/update query gets a fresh sql connection, always.

Can you offer any suggestions? I would really like to avoid going back to NOLOCK.

Thanks

Hi Chris,

Are you using connection pooling, perhaps?

“When a connection is closed and returned to the pool, the isolation level from the last SET TRANSACTION ISOLATION LEVEL statement is retained. Subsequent connections reusing a pooled connection use the isolation level that was in effect at the time the connection is pooled.” … http://msdn.microsoft.com/en-us/library/tcbchxcb(v=vs.110).aspx

Kendra,

Thanks for the response. Yes, we use methods like this to connect to the database and view data…

Dim SqlConnection As New SqlConnection(“connection string here…”)

Dim SQLstring as string =

“SET TRANSACTION ISOLATION LEVEL SNAPSHOT;” & vbcrlf &

“Select * From SomeTable;”

Dim SqlCommand As New SqlCommand(SQLstring, SqlConnection)

SqlConnection.Open()

Dim sqlreader As SqlDataReader = SqlCommand.ExecuteReader()

Do While sqlreader.Read()

‘// Some process to prepare data for viewing in aspx page

Loop

sqlreader.Close()

SqlConnection.Close()

If it is connection pooling, could you potentially just use a different pool for the snapshot transactions? Any change in connection string will use a different pool, so you could potentially use this workaround to split out those commands.

(This issue applies to all isolation levels and this workaround was suggested by a user on StackOverflow here: http://stackoverflow.com/questions/9851415/sql-server-isolation-level-leaks-across-pooled-connections)

Kendra,

We use the same connection string for everything (set in the web.config)

If I understand you correctly, for any snapshot transactions, I could simply add something to that connection string (even if I am adding an unnecessarily redundant option) to change the string so that it wont be used for the other queries which can’t use snapshot. Is that correct?

Thanks,

Chris

Yep– pooling is very finicky and variations in the string will cause different pools to be use. In the Stack Overflow item the poster suggests a couple of options (logins, an option on the pool), but even capitalization, ordering of options, and spacing can cause different connection pools to be used in .NET.

Kendra,

Thank you so much. You really helped me out of a jam.

Regards,

Chris

Kendra,

Unfortunately, through trial and error (and found MS documentation stating same) we found that changing a case or adding something to a connection string does not separate that unique connection string. It is a myth. The only way to accomplish this goal and separate the connection pool to only certain queries which use that connection string is to use another SQL Server Login/User.

Hopefully, this helps someone else.

Regards,

Chris

Hi Kendra,

Thanks for the article, I love reading your books and articles. I have one simple question. After doing some test on a dev server set on read committed and reading some other articles online, I’m having trouble finding a scenario where a reader blocks a writer in read committed. In one query window I am running queries like (TABLE_A has millions of rows)

BEGIN TRAN

SELECT *

FROM TABLE_A

WHERE COLUMN_A = 1

or

BEGIN TRAN

SELECT TOP 100000 FROM TABLE_A

and in another window I’ll run

UPDATE TABLE_A

SET COLUMN_B = ‘updated’

WHERE COLUMN_A = 1

and it always works even if I leave the previous transaction open, even if I put the select statement in a while loop. Shouldn’t it be putting a shared lock on the whole table and not allowing an update?

Mike – just because you have a select statement open in a transaction doesn’t mean you’ve locked the rows. You might be thinking of the REPEATABLE READ isolation level – in that one, you’re putting locks on selects because you want to be able to repeat your read again and get exactly the same data.

Hey Mike,

SELECT statements under read committed will take out locks, but I like to say it “Loves the one it’s with” (whether it be a row or page lock). So it is releasing the locks quickly (even with the transaction open), and it’s tricky to reproduce with just a couple of windows in management studio.

Just off the top of my head, for demo purposes you could inflate a really large table (add a lot of rows, maybe do something awful like rebuild it with fillfactor=1), make the select do a table level lock (by a hint or finagle a query that’ll do lock escalation), and then run an update and you should be able to see blocking if you time it right and the reads are slow enough.

I have occasionally written demos that rely on blocking, and using small demo databases and having SSDs on my laptop definitely makes it much harder. I’ve periodically put demo databases on rotational drives connected by USB to make it a bit easier by slowing down storage response time. (Also requires limiting the memory on the VM, of course.)

KL

edit: added more info on why this is tricky

Thanks so much for the quick response Brent and Kendra! I’m not sure if I needed the Begin Tran there for my little demo, but I’m just using ReadCommitted right now. I was reading an Erin Stellato (who is also awesome!) article http://erinstellato.com/2012/03/isolation-levels-effects-on-select-statements/

where she says that Readcommitted only locks until the resource is read. So amidst my confusion I ran the above query, except with no where condition (just SELECT * FROM TABLE_A) and then in another window I queried the tran locks DMO

SELECT * FROM sys.dm_tran_locks

and I could see two rows had been added – one with an IS lock for the OBJECT TABLE_A and an S lock for the data page. But I could still update.

So I added the hint WITH TABLOCK and the page lock went away and the IS lock for the object turned into S lock and I could not update.

So in short I think I’m coming away that it’s a little uncommon to have updates blocked by readers if readcommitted is isolation level because the locks are dropped so quickly that the update will be fine, And one of the ways the update can be blocked if its a table lock on a large table.

Thanks so much both of you for your help in understanding this!

Fantastic article Kendra. One question I have (I’m evaluating the use of Transaction level snapshots where a long read of an audit table for reporting is locking the audit table, so that it cannot be updated by other online transactions, and client connections are therefore timing out) is:

For how long does a version of a row exist in the tempdb version store? When is an old version deleted?

Great article Kendra,

I’m investigating the use of TRANSACTION ISOLATION LEVEL SNAPSHOT for a long-running read against an audit table for reporting purposes, as the current SELECT query is locking the audit table so that is cannot be updated by other online transactions, causing Client connections to timeout.

So my question is, for how long does a row version exist in the tempdb version store? When is an old version deleted?

Thanks,

Jools.

Thanks!

Cleanup will kick in based on the longest running transaction. So if you have transactions that might stay open for hours or days, that can cause quite the pileup if the system has lots of changes. There’s performance counters you can monitor for longest running transaction time and version store size.

Here’s what to do next: experiment on your test system by generating activity, leaving some transactions open, and watching those counters. Use that to design your monitoring around it.

Hope this helps!

Kendra

Kendra, great article!!! One question. so if you have Snapshot Isolation enabled on the DB and you still have NOLOCK in the queries, will the query bypass the versioning in tempdb?

Ooo, great question! I was 99% sure I knew the answer to this, but I love to test this stuff, so here’s what I did:

In my copy of the StackOverflow database, this returns 1 row:

SELECT COUNT(*) from dbo.Posts (NOLOCK) where Id=9346444

In session 1, I ran:

BEGIN TRAN

DELETE FROM dbo.Posts where Id=9346444

In session 2, I ran:

create proc #test

AS

SET TRANSACTION ISOLATION LEVEL SNAPSHOT;

SELECT COUNT(*) from dbo.Posts (NOLOCK) where Id=9346444

GO

exec #test

(The stored procedure wasn’t strictly necessary, I just did it that way.)

It returned: 0

So it read the dirty data of the non-committed delete, and also honored the NOLOCK hint.

Hope this helps!

There’s a lot of really great stuff in both the post and the comments/replies, will be a lot for me to ponder.. thanks for writing!

Something very simple that I’m still too much of a newb to figure out quickly — if I had a chunk of code running at READ UNCOMMITTED, part of which was a SELECT that didn’t care about the above-outlined issues with dirty reads, and afterwards there was an UPDATE or INSERT statement, does SQL Server ignore the TRANSACTION ISOLATION LEVEL and still grab the locks that it needs to do said UPDATEing / INSERTing? Or will this throw errors? Known issues?

Thanks again,

J

That’s a great question! Inserts, updates, and deletes have to take out exclusive locks, even if you’re in read uncommitted. (It’s implemented in a pretty fancy way involving intent locks, update locks, etc.)

Hi Kendra,

in this very interesting post you mention the overhead of using row versioning, which kicks in when you enable SNAPSHOT ISOLATION or READ COMMITTED SNAPSHOT support at the database level.

Is it worth mentioning that other features also turn on row versioning – in particular, if you have any triggers, or if you are using MARS (Multiple Active Result Sets) on any connection. Presumably if you are using either of these features there is *no* extra overhead in enabling optimistic transaction support?

David

Hi David,

Totally correct that those other features also use the version store. I would expect that you would see more overhead in the version store by turning on optimistic locking because it impacts all the tables in the database, but I suppose it depends how extensively you use MARS and triggers.

I have never looked into whether the version store usage by MARS is the same as optimistic locking. I hardly ever find MARS implemented!

Kendra

Hi Kendra, I read through your article and I have a questions about read committed snapshot.

If I select and sum a customer’s balance from Transactions and save the results into a parameter table in my understating I will be reading the row version from the TempDB which would committed snapshot of the customer’s data. If I then use the data in the parameter table to update the client’s balance I run the risk of setting the wrong balance with an old value if the same customer’s record was on the middle of an update of the Transactions table at the time I initially populated the parameter table. If my assumption is right then would changing the code to use a CTE for the update eliminate this concurrency problem? Eg.

Using a parameter table:

——————————–

DECLARE @ClientTotals TABLE (ClientID int primary key, ClientTotal money)

INSERT INTO @ClientTotals

SELECT ClientID, SUM(ISNULL(Balance,0.0000)) as TotalBalance

FROM Transactions

GROUP BY ClientID

UPDATE Clients SET CurrentBalance = TotalBalance

FROM Clients c

join @ClientTotals ct

on ct.ClientID = c.ClientID AND ct.TotalBalance c.CurrentBalance;

Using CTE

———————–

WITH ClientTotals (ClientID, TotalBalance) as

(

SELECT ClientID, SUM(ISNULL(BalanceEffect,0.0000)) as TotalBalance

FROM Transactions

GROUP BY ClientID

)

UPDATE Clients

SET CurrentBalance = t.TotalBalance

FROM ClientTotals t

join Clients c

on t.ClientID = c.ClientID

where t.TotalBalance c.CurrentBalance;

Great question. You’re correct that a table variable/temporary table changes the behavior.

One important thing to know about the CTE approach is that although writers don’t block readers, writers still block writers! So if something is currently locking the row for your update, it will get blocked and have to wait. I just wrote up a quick post demonstrating this that will go out in a couple of weeks, but it just basically proves that writers block writers.

Kendra,

This answer confuses me a little…

If the queries listed above are using snapshot isolation, and are part of a transaction, then using a CTE will *not* change the behavior, and will not solve the concurrency issue of the CurrentBalance becoming out of sync, because both the select into the table variable and the CTE select would be using row versioning to get the snapshot that existed *at the time that the transaction began*, correct?

The question is about Read Committed Snapshot isolation.

[Edit: don’t mean to be curt or too brief, just was in a hurry and I think this is the source of the confusion]

Hi Kendar, Thank you for your post on Read committed snapshot.

I will take into consideration your input and also Brad’s.

I am looking forward to you next post on writes block writes.

Regards Ignacio

Kendra,

While doing some perf tunning on a database, I noticed that both Snapshot and ReadCommitted Snapshot were turned on. What would occur in this state?

Hi Mark,

It’s totally allowable to have both of these features on. They use the same version store– so it’s not like you’ll get double versions or anything. The two isolation levels are different– SNAPSHOT can be used for updates, and concurrency is for an entire transaction (not just a given statement), so there are valid reasons that you might want both.

Cleanup of the version store is a bit more complicated for SQL Server, but it’s fully supported. You can monitor the version store size with perf counters or DMVs (just like if only one of them was on).

Hope this helps!

Kendra

Thanks Kendra!

Thanks Kendra for the wonderful article. I tried your code, but apparently the session 2 code is taking loading loading and loading to update and that’s because session 1 has an open transaction. Could you please guide what exactly is the problem ?

Thanks.

It sounds like your transaction in Session 1 is not committing. There’s a step to run a commit there. You may have accidentally run the ‘BEGIN TRAN’ more than once?

Hello Kendra,

Nice Name, Great Article. For the sample you tried out. Correct me if I am wrong, that scenario only returns different datasets if one of the transactions is not committed and RSCI is onand vice versa. However, they should both return the same sets if the transaction is committed, which of course cannot be controlled per say but could be said to be accurate the time t. I just want to ask, is okay to say that the data cannot be called dirty its inserted within a BEGIN and COMMIT block and hasn’t been committed therefore it cannot be read if selected?

That’s the first question :D. Second, what actually causes the update conflict? Can you shed a little more light on that

Hi James,

You’re correct that the differences are because the transactions are happening at the same time. In this example, there aren’t any dirty reads– we don’t read uncommitted data. The different isolation levels here are all reading committed data, it’s just working differently.

An update conflict occurs if a session using snapshot isolation does a begin tran, reads some data, that data is modified by another transaction, then the snapshot transaction comes back and tries to update the data.

“With READ_COMMITTED_SNAPSHOT OFF, you get two white marbles”

i agree for Session 1.

But Session 2 should see Black-White because the update in Session 1 was not committed?

Correction to my post:

But Session 2 is “READ COMMITED” and so does not see the uncommitted update in Session 1, so Session 2 will attempt to update the black to Red and wait for Session 1 to release the lock?

Hey Tom,

Run the code! Test it, it’ll make it more clear.

Kendra

Hey Kendra,

I’ve been wanting to experiment with Snapshot for quite some time. I was recently tasked with resolving some deadlock issues, and decided to see what would happen if adjusted a few of the queries to run in Snapshot. While I was pleased with the overall outcome (no more deadlocks in this case), I am frustrated by one issue.

This will *NOT* work:

CREATE PROCEDURE MyProc AS

BEGIN

SET TRANSACTION ISOLATION LEVEL SNAPSHOT;

BEGIN TRAN

CREATE TABLE #DataExtractItem

(

ItemID INT PRIMARY KEY CLUSTERED

,DataExtractResultsID INT

)

CREATE TABLE #MyTempTable

(

SomeID INT PRIMARY KEY CLUSTERED

,SomeOtherId INT

)

CREATE INDEX #Idx_MyTempTable_SomeOtherId ON #MyTempTable(SomeOtherID)

Do Some Stuff

COMMIT

END

Do you see the problem? The CREATE INDEX statement is a DDL statement that creates metadata. Metadata is not versioned in SNAPSHOT. So, the CREATE INDEX statement causes the procedure to bomb when run in snapshot.

The bottom line is that its not possible to create an index on a #Temp table inside of a transaction that is running in SNAPSHOT.

Indeed, MSDN docs provide a list of DDL statements that are not supported inside of an explicit transaction running in SNAPSHOT.

For the above example, there is a simple work-around, which is to create the #Temp table and index before setting the isolation to SNAPSHOT (the db’s default isolation is READ COMMITTED)l, or do these things before the BEGIN TRAN.

However, it strikes me that it would *not* be possible to do a SELECT INTO #MyTable and then create an index on #MyTable inside of a transaction running in SNAPSHOT. Here again, there is a work-around, but I am surprised that certain DDL operations would not at least be supported on #Temp tables. After all, they are not visible to other transactions, so its not really necessary to version that metadata.

Anyway, just wondering what your thoughts are about this.

In your example you wrote

With READ_COMMITTED_SNAPSHOT ON, you get one red marble and one white marble.

With READ_COMMITTED_SNAPSHOT OFF, you get two white marbles.

Now, when RCSI is ON the marbles are both changed because both select statements found the black marble, right ?

But when RCSU is OFF, is the second black marble not found because the select had to wait for the commit of the first session and then it was white, or did it find the dirty white marble ? I guess its the first option, but just want to be sure

What I am looking for is writers that do not block readers, so when I start a transaction, do lots of stuff there, and some other session wants to select a table being updated in this transaction it will not be blocked, but simply sees all records as if they where not being changed. Which I thought READ COMMITED should do, but it does not, it blockes readers.

I need my readers to read all commited records without beeing blocked by any writers, which is logical since the writers transaction has not commited yet.

You’ve got the concept right– with RCSI, readers read committed data and are not blocked by writers.

Another case where I’ve seen RCSI produce incorrect / undesired results is the case where an Oracle application that used sequences was ported to SQL Server. Those sequences were used to populate a PK column of another table. The developer chose to use a single row table to mimic the sequence rather than using SQL’s built in sequences. Multiple sessions concurrently queried the ID from the sequence table and tried to use that same value is the next sequence # for the PK resulting in PK constraint violations.

Kendra, I like this article a lot and learned a lot. One thing I’d like to add, that I didn’t see anywhere, is that the versioning tags can cause page splits, because as far as I understand, the versioning tags are added to existing data page which may be close to full already.

Arthur – that’s only a one-time thing. Once the version tags have been added to a record, they don’t need to be added again. You can mitigate it by rebuilding indexes.

Kendra,

Great article first off, and are does RCSI or SI cause any issues regarding DBCC CHECKDB, or INDEX REBUILD operations?

Eric – no.

Are there any issues with enabling snapshot isolation or rcsi level on a replicated table (subscriber)?

When I put on my developer hat I say:

“The reason I limit the size of my DB transactions is to reduce lock contention.

Snapshot isolation fixes all lock contention, so now I don’t need to limit my DB transaction size any more.

I can process this 50,000 line file in one transaction! ”

When I put on my Junior DBA hat I say:

“Lets hold on there a moment.”

What does the Senior DBA say?

Brian – can you rephrase your question and ask it in a more normal way? I’m so distracted by the question format that all I can think of is: https://www.youtube.com/watch?v=jofNR_WkoCE

When designing an application, there is a sweet spot for DB transaction size.

If a transaction is too small (1 update), then it spends most of its time waiting

for the DB log write to finish and the application has low throughput.

But if a transaction is too big (1,000,000 updates) then we get different problems

1. SQL server may run out of lock objects

2. SQL may use lock escalation and acquire a table lock

3. Other transactions may be blocked on one of our locks

4. If the transaction does a rollback, it will consume lots of server resources.

So when designing an application, I try to size my transactions in the 100 to

1000 changes range. But since snapshot isolation reduces lock contention

(item 3 above) and I can configure SQL Server to have more lock objects,

has the sweet spot size for the number of changes in a DB transaction changed

a lot?

(moo)

Brian – gotcha. Generally, during design, I tell people to design for batch, set-based operations. If you need to do a million updates in an OLTP environments at the same time end users need individual locks, there’s probably a bigger design issue – we need to step back and ask what we’re trying to do, and why.

“Never fear– there’s a method to testing the load first! The key lies in understanding the difference between the two isolation levels. If you enable SNAPSHOT isolation on a database, SQL Server starts implementing versioning technologies so that queries can set their isolation level to snapshot. (Queries have to do this explicitly– as in run a command ‘SET TRANSACTION ISOLATION LEVEL SNAPSHOT’, or have it set in their connection string.)”

How do i define the isolation level in the connection string?

I have checked https://www.connectionstrings.com/sql-server/ https://msdn.microsoft.com/en-us/library/system.data.sqlclient.sqlconnection.connectionstring(v=vs.110).aspx and there is no reference on how to do it.

Just use SET TRANSACTION ISOLATION LEVEL.

One question for me as a sum-up … is there / what is the impact of turning on ALLOW_SNAPSHOT_ISOLATION and doing only some read operations with snapshot isolation?

Goal is that big reports doesn’t block the system.

Norbert – go ahead and read the post – it’s inside there. You’ll need to test.

Coming from an Oracle world, it’s hard to understand why this needs to be so complicated.

I was a “master certified” Oracle DBA in the 90’s. When I first worked in a SQL Server environment, and came across SQL Server’s “isolation levels” and the fact that out of the box, with SQL Server, readers can block writers and writers can block readers, unless in each query or session, you use an “isolation level” that allows you to read uncommitted data! Talk about an opportunity for disaster! That data might get rolled back, it might not even be consistent with related data in other tables if your application enforces it’s own integrity by using transactions (as opposed to relying on things like cascading foreign key constraints)! I was shocked, horrified and appalled. I was flabbergasted. People pay beaucoup bucks for this thing! And gobs of people think it’s the best thing since sliced bread! And this is how it behaves!? What’s worse is, the developers and DBA’s in the environment seemed to think this was a sort of “normal” headache to deal with! And they were USING nolock WITHOUT understanding the implications! They were initially mad at me for NOT using nolock! I had to *prove* to them that nolock was bad joo joo!

If you’d asked me at the time, I’d have said that any serious RDBMS that is worth the paper one set of it’s documentation would be printed on implements the same standard thing. There aren’t many options around it, it is what it is for a whole bunch of reasons, that are summed up by the supporting documents for an RDBMS concurrency handling standard whose name I don’t remember off hand, but which was well known and understood and considered authoritative by nearly everyone. In Oracle (and, I believed, in Informix, Sybase, Ingres, Postgres and probably MySQL as well, when using the default ‘engine’), readers don’t block writers and writers don’t block readers. If you select a row that is being updated in an uncommitted transaction, you see the current version of the data (as of the time you started your query), not the version that will be there once that transaction is committed. If you update a row that is currently being updated in another transaction, you either get a row lock error, if you choose not to wait, or you wait up to some time out length, and your transaction goes through once the other transaction has committed, unless the timeout is reached first, in which you get a (slightly different) row lock error.

So in a “standard” RDBMS system, you should get one white marble and one red marble, unless, in your second session, you choose not to wait around, or, if in your first session, you didn’t commit for 30 seconds or whatever the timeout length has been set for the database or overridden in session 2. If you select the data in session 2, you should see black and white marbles until session 1 commits, then you should see white and white. Then after your update commits, subsequent readers should see red and white. You should not be blocked by session 1 when you go to select the data! And you definitely should absolutely not see white and white in session 2 unless session 1 commits before the select starts in session 2. (Assuming these are the only two sessions accessing that data during the time period in question.) And if the update in session 2 started after the update in session 1, then after session 2 commits, there should be no way at all that you end up with white and white. Because session 2 should not be able to commit until after session 1 either commits or rolls back, and everyone should definitely see the most recently committed version of the data at the time when they look at it.

The update in session 2 should block, if it starts after the update in session 1 but before the commit in session 1, until session 1 commits or rolls back. It should either block, or error. But if session 1’s session disconnects and/or idles out, in which case any uncommitted transactions in that session should get rolled back, at which point session 1 should be able to continue.

That’s what should happen. That’s the standard behavior. That practically everyone on the planet outside Microsoft takes for granted as being fundamental. Nobody even argues about it. All the arguments were made, remade, made again and hashed out long ago and practically everyone agreed on what the standard behavior should be and understood very well why. Until MS comes along and does something far worse for reasons people had already argued about and eventually practically unanimously decided against, but against all sanity MS still defends their way as being better and then all the MS fans who seem to somehow be completely ignorant of all that had already gone before argue once again for the previously repeatedly defeated arguments supporting the MS way. Gawd, just shoot me. Just freaking shoot me. I mean, ok, not everyone can pay for Oracle – but come on, even the free RDBMS’s get this right! And SQL Server ain’t exactly cheap!

Anyway, thank goodness they do at least implement “read committed snapshot,” even if they have to invent a fancy name for it. I hope they implement it well. (Detect dead sessions quickly and rollback their transactions, quickly unlock the right locks held by those transactions and transfer them to the correct waiting ones, minimize rollback segment space usage, do everything in the correct order, etc. etc.) I guess they don’t set it as the default because they don’t think they can trust their user base to make sure the MS equivalent of rollback segment space (tempdb space, basically) doesn’t fill up. I guess they very well might be right about that.

Oops, where I said “session 1 should be able to continue” I meant “session 2 should be able to continue.”

Hi, Digital Equipment Corporation DEC had a relational database system called RDB and it was used by the big organisation such as the Stock Exchange in some country. DEC RDB also used the same isolation level as MS SQL Server, so I am sure that both DEC RDB, MS SQL Server and possibly ORACLE RDB are using a standard set a long time ago, that as you have said (written by some one I cannot remember). The fact that other’s have decided to change it in their product does not mean that MS SQL Server only adheres to a standard that is only theirs. Let me put it this way, there are car manufacturers that are still using naturally aspirated engines and are doing very well, while every other manufacturer are using forced induction

What do you drive?

May I know that if deletes also cause versions in tempdb.

Please let me know.

thank You,

Vaibhav – rather than giving you a fish, I’d rather teach you how to fish. How might you run an experiment to get that answer yourself?

Hi Brent, how would turning on RSCI affect the database if it’s the subscriber of a lot of transactional replications? Any changes or issue would occur on the replication end?

Thank you!

Amy – for Q&A, head over to https://dba.stackexchange.com.

Anyone out there ever set RCSI as their default isolation level across an org without testing every app? I understand the implications, just gauging risk / reward. We’ve had many performance issues that would be solved by using RCSI, and I haven’t heard of or seen any issues with the 10-20 servers we’ve set it on by default. The due diligence part of having a full UAT validation is becoming time consuming enough that I am wondering if its worth it. Just looking to see if anyone out there in BrentOzar land bit the bullet and just enabled it by default on everything without a full set of testing. I keep hearing the “why not, its on by default in Azure” statement. Thanks.

Are there any advantages or disadvantages to enabling RCSI in an availability group environment with one synchronous replica? I know the primary replica has to be removed to enable it, but how will RCSI impact performance? It’s my understanding that the primary replica waits for the commit on the synchronous secondary replica to commit which may cause some latency; so will adding RCSI add any additional latency?

HI Brent, In this article Kendra indicated that if a query was still using a NOLOCK, it was still being honored.

One Question. If a DB had RSCI and an sp still had ” SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED;” in it, would this still be honored as well?

thanks Mark

[…] into using snapshot isolation. Snapshot isolation has been around since SQL 2005, but I haven’t seen a lot of developers using it. Overall, […]

Fantastic post, very helpful.

How should i address it if we talk about pre-made applications like sharepoint etc…?

Thanks! Check with the vendor for their support policy.

Why would someone implement bith Snapshot & Read Committed Snapshot together ? What is the benefit? Why is it even allowed?

Why would someone implement both Snapshot & Read Committed Snapshot together ? What is the benefit? Why is it even allowed?

For general questions, you’ll want to hit a Q&A site like https://dba.stackexchange.com.

> You can then implement SNAPSHOT isolation for some queries– you do this by setting the isolation level in a connection string

How to do this? What should I add to the connection string?

eg: “data source=(local); initial catalog = dbtest; Integrated Security = True”

Dario – sorry, that wasn’t clear. We were referring to the ability to pick a readable secondary, which then automatically gets you snapshot isolation. If you don’t have Availability Groups, that isn’t an option. If you do have Availability Groups, use the read-only intent option: https://techcommunity.microsoft.com/t5/sql-server-support/connect-to-sql-server-using-application-intent-read-only/ba-p/317758

I read here that if you have ACCELERATED_DATABASE_RECOVERY enabled (new in SQL 2019) that the version store will be in your local database, and NOT in TEMPDB — which unfortunately, erases the advantage of pushing READ_COMMITTED_SNAPSHOT version store workload into TEMPDB. 🙁 (in our case, we have TEMPDB on fast volumes, and sometimes in NvME) https://docs.microsoft.com/en-us/dotnet/framework/data/adonet/sql/snapshot-isolation-in-sql-server

Hello

in a SqlServer 2019 we have both READ_COMMITTED_SNAPSHOT and ALLOW_SNAPSHOT_ISOLATION ON in a production DB. We noticed a significant increase in the TEMPDB LOG file which is resolved ONLY by restarting the SqlServer service. Any ideas?

Howdy. For personal production support, click Consulting at the top of the page.

Hi, the Craig Freedman’s post has been moved to https://techcommunity.microsoft.com/t5/sql-server-blog/serializable-vs-snapshot-isolation-level/ba-p/383281

Actually, based on the old URL and link text referring to RCSI, I believe this is old Craig Freedman article possibly: https://techcommunity.microsoft.com/t5/sql-server-blog/read-committed-isolation-level/ba-p/383258

Hi

Thanks for this

If a statement that does an INSERT/UPDATE/DELETE on the primary…obviously.

The 14 bytes are added to each affected row on the primary – Is this 14 bytes then released after the INSERT/UPDATE/DELETE commits?

No, because it’ll be needed the next time a row is changed.

Thanks

The 14 bytes added to the row, as far as I’ve read contains the transaction sequence number that identifies that transaction that performed the change to the row. It also contains the pointer to the versioned row in TempDB.

So, if a transaction modifies a row and the DB is in one of the optimistic concurrency models:

1. Update transaction starts

2. Row is copied to version store

2. 14 bytes added to existing row to point possible read transactions to versioned row.

3. Row is updated

4. Transaction completes.

I have to be missing something by thinking that the 14 bytes is now no longer needed and will be recreated when another transaction comes and modifies that same row.

Where am I going wrong?

Thanks

just a heads up your site is jacked up in ie and chrome.

Working fine here – if you have more specific examples, feel free to email ’em to us with screenshots/urls/etc. Thanks!

This is a question. We have an application that reads data from a packaged accounting software using views to read the data. When developing we were allowed to keep our database on the same server as the database for the package. They are moving to Azure and on Azure we do not have access to their server except through linked servers. I have tried to get performance out of openquery and have been unable. We are now going to periodically refresh tables with the data from the views. Currently, of course, it is locking everything in the app for the 30 seconds or so that the refresh takes. I would like to have an isolation that works for just this transaction that allows the transaction to occur while the app is running then the new data appears at the time of the commit. I have been trying to find a way to set snapshot or read committed snapshot just for this stored procedure which is all done in one transaction. I don’t want to have to go through the testing needed if other transactions must be running in this new isolation level. The application is already developed and moderately complex

Bill – unfortunately this is pretty far beyond the scope of this blog post. For custom coding advice, you can click Consulting at the top of the screen to learn about how I work with clients.