I’m a bit of a researcher. When I want to know about a topic, I start off by doing a lot of reading. After I take a lot in, I want to make sure I understand the details. At that point, I try to think like a scientist. I come up with ways to test what I’m learning and see it in action. My process isn’t revolutionary, but it goes something like this:

- Formulate a question.

- Pose a hypothesis.

- Form a prediction.

- Run a test.

- Analyze the results.

I can’t do this for everything, but I love it when it works.

Today’s story is about how I used this process to learn about how SQL Server writes to transaction log files.

This won’t be a super-practical post that will change your life. I’m not going to make any recommendations here, other than widely published best practices. But I promise to show you a tool that may help you learn a lot about how SQL Server works.

The Question: How Large are the Writes to a Transaction Log File?

A widely published SQL Server configuration best practice is to format your log file drives with a 64KB allocation unit size. There are exceptions to this for certain storage subsystems— you should always check the documentation from your storage provider, and you can also run tests with tools like SQLIO to determine how you can get the best performance with your storage implementation. (Different SAN configurations and settings like RAID stripe size make a difference when it comes to performance with a given allocation unit configuration.)

My question was: what does the allocation unit size mean in terms of how much SQL Server writes to disk when I issue a write against a transaction log? Do I always have to do the same unit of work as my allocation unit size?

In other words, am I always going to do a minimum of 64KB of IO against my log file? That seems like a lot, so I doubt it! But if not 64KB, then how much?

First, Some Background on Allocation Unit Size

The “Allocation Unit Size” on a drive is also called the “cluster size.” These are pretty much synonymous, and the fact that we have two ways to talk about this can make things confusing.

The default allocation unit size on a windows drive is 4KB. When you format the drive, you can set that to a larger size— again 64KB is recommended for SQL Server data, log, and tempdb files. The allocation unit size is basically the smallest unit of storage that any individual file can take up. If I set the allocation unit size on a drive to 64KB and then create a tiny text file which only takes up 11 bytes, that one file will take up 64KB on disk.

So 64KB isn’t a great allocation unit size for a drive with many tiny text files, but our SQL Server log files are all much larger than 64KB—- each file takes up many allocation units.

Hypothesis: We’re Writing to the Transaction Log in Small Chunks

My initial guess was this: even though I’m setting my allocation unit size to 64KB, the way that SQL Server issues the writes doesn’t write out 64KB each time. That would just be too high!

I did some reading to learn more about this from the SQL Server perspective. In the “Determining I/O Capacity” section in the Best Practices Whitepaper, it states, “Log writes are sequential in nature with a varying size, which is dependent on the nature of the workload (sector aligned up to 60 KB).”

So we’ve got a varying size, and it’s “sector aligned.” Bob Dorr explains more about sectors and transaction log writes in his SQL Server I/O Presentation: “Any time the physical flush takes place it occurs on a sector boundary. The FlushToLSN will attempt to pack as many active log records into sector aligned boundaries and write on the sector size. The sectors each contain a parity bit that the log can use to detect the valid sectors flushed before a crash recovery.”

Now we’re getting somewhere! So, in theory, the smallest write should hit ONE disk sector. A disk sector is a physical sector on a drive— it’s configured by the storage manufacturer, and isn’t something you configure. Most drives contain sectors which are 512 bytes, but now new-fangled drives (called “Advanced Format” drives) contain 4KB sectors.

To recap our terminology and put things together: if you format a single (“old school”) drive with a 64KB allocation unit size, each 64KB cluster will have 128 physical sectors. Each sector will have 512 bytes.

Based on this, my prediction is that SQL Server will write 512 bytes to the log file for a single tiny write transaction. Ideally, I want to observe this IO outside of SQL Server, not inside of it. It might be the case that SQL Server thought it was doing a small IO, when Windows saw it doing more IO.

My Test: Watching Writes with Process Monitor

It didn’t take long to find a tool to monitor IO with. I am a big fan of the SysInternals Suite suite of tools. Over the years SysInternals tools have helped me solve many problems and learn a lot.

Process Monitor does just what the name says: it helps you monitor the behavior of processes. As it monitors it streams a lot of information to the client, and I always get a bit nervous if I have to run it in production due to the volume. (Make sure you apply very good filters!)

However, for a lab environment it’s just candy: easy to use, quite intuitive, and highly configurable. I fired up process monitor and filtered out all processes except for sqlservr.exe. (Protip: all the cool kids drop vowels near the end of words. Just call me “Kendr.”)

This first filter helps reduce the abundant flow of information coming from Process Monitor. It helped me focus in on my quarry.

I was just interested in seeing IO to a log file. Next, I filtered out everything except for writes to the log file for a database named InsertTest, which was located at the path “T:\MSSQL\Data\InsertTest_log.ldf.”

I also validated in a command prompt that my T drive is formatted with a 64KB allocation unit size, and Windows understands my drive to have 512 bytes per sector. Sure enough, 64KB!

Setting Up A Database to Test

I wanted to do a very small write and see how much IO was issued, so I created the following table:

|

1 2 3 |

Use InsertTest; GO CREATE TABLE lilbittytable (tinycol bit) |

That’s pretty darn small, right?

I started collecting data with ProcessMonitor and I ran an insert into my table:

|

1 2 3 |

Use InsertTest; GO INSERT lilbittytable (tinycol) SELECT 1 |

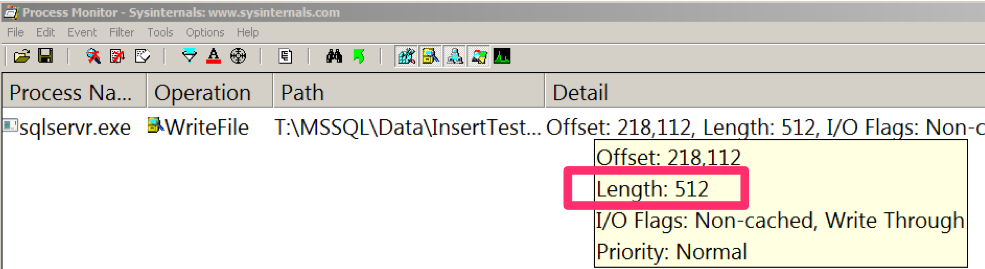

And, what do you know, here’s the write I saw:

As soon as I inserted the row, I hadn’t defined an explicit transaction, so it auto-committed. This required that the row be flushed to the log. This write went into my log file with a length of 512— and that length is reported by Process Monitor in bytes.

This verified my theory: tiny transaction log file writes are indeed written in a unit that corresponds to a single phyiscal disk sector, as defined by the drive itself. The answer to my original question is that the size of data written is NOT influenced by the allocation unit size you set when you format a drive.

Wrapping Up

There are lots of questions that the information I showed today may raise for you. What happens when you write larger records, or have multiple sessions doing writes at the same time? Does this all correspond to what you see inside the SQL Server transaction log when you read it with the (undocumented) fn_dblog function? What sizes of writes do you see happening against the data files after a checkpoint?

Good news: it’s really easy for you to find out! Firing up Process Monitor on a test environment is super easy, and will take you less time than reading this post did. Give it a shot, and happy exploring.

41 Comments. Leave new

If you know me, you know I love this stuff!!!

Good post, Kendra!

This information post helped me understand the fundamentals on disk usage for tran log writes and also introduced me to Process Monitor. Thank you very much Kendr!

I could be wrong…but I wanted to check with you on a possible typo:

To recap our terminology and put things together: if you format a single (“old school”) drive with a 64KB allocation unit size, each 64KB cluster will have 128 physical sectors. Each sector will have 512KB.

Is it 512KB or should it be 512 Bytes?

…and of course I meant to say “This informative post…” 🙂

That should be 512 bytes– thanks for the note!

surely 512 bytes.

64KB clustersize for TL for performance.

But if SQL can write only 512 bytes at a time in a transactionlog and the clustersize is 64KB, wouldn’t it be faster having a smaller cluster-size for an ERP-DB where most transactions contain only 1 or few records?

Hi Alain,

512 bytes is the *smallest* transaction log write size– but not the only size.

Here’s the quote from the Best Practices Whitepaper: “Log writes are sequential in nature with a varying size, which is dependent on the nature of the workload (sector aligned up to 60 KB).” (Link: http://technet.microsoft.com/en-us/library/cc966412.aspx)

You can see the larger writes with the same method I used. I just wanted to confirm the smallest possible write size using external tools in this test.

Hi Kendra,

One quick question on this – the log file virtually divided into VLF(s) > Log Block(s) >Log records. The Log block minimum size 512 bytes and maximum size is 60KB.

– Is there any relation between log block size and sector size? i mean to say log block size depends on the HDD sector size.

OR

– As per above scenario, Suppose, the HDD sector size is different (4096-byte), will it take still 512 bytes length or 4096 bytes length?

Thanks in advance for your reply!

You can get details on this in the “SQL Server I/O Basics, Chapter 2” whitepaper here: https://technet.microsoft.com/en-us/library/cc917726.aspx

There’s a whole section on 4-KB disk sector sizes.

Alain: The cluster size (or Allocation Unit Size) determines the size of the metadata required to store the allocations maps in the file system. It has no impact on the size of the write when doing unbuffered I/O. Because of this, you want the largest possible AUS – to reduce the footprint of the metadata.

Your SQL Server IDE is black – which program do you use to get color schemes?

Thanks

Hey Mike,

The highlighting in the post is from the “syntax highlighter evolved” wordpress plugin. I put the code in that way so that people can copy/paste it easily — wish I could use sceenshots and still get that, but no such luck.

That is Kendra. You write in so simple language and make it easy for us to understand.

Great post and Thank you very much.

That is very odd. I can see why writing as small of a chunk as possible would be helpful in that it gets the transaction committed as quickly as possible.

But, I obviously have some learning to do, this shouldn’t be possible in that SQL Server could want to do a 512B write but should only be aware of the smallest units presented to it… which is the allocation unit as defined when creating the NTFS volume. The volume is an abstraction layer. SQL Server can look up the BytesPerSector but it can’t address them.

A) How is a 512B write passed through a 4k allocation unit NTFS layer (assuming the NTFS volume was created at 4k)? With the fun follow up question of what is the point of the allocation unit if applications write based upon the disk sector size?

B) How can I change this behavior to only write at the size of the NTFS volume or, if not that, in the size that I want. Assume I have a good reason. Sybase has a “sp_logiosize” and Oracle has a configuration for its redo write block size. Anything for us SQL Server folk?

I realize that you are the messenger, not the code writer.. but still, thought I’d ask.

I now wonder the point of Microsoft recommending a 64k allocation unit size for log volumes.

Very informative, thanks.

Matt

Hi Matt,

I was writing very very tiny records in all the testing I did here– if you use transactions that write more data, you get larger write sizes. I was just trying to see what the smallest I could get are. If you check the paper I link to by Bob Dorr, you’ll see that the max size of the writes for the tlog is 60KB (not quite 64KB– just shy of it). I didn’t publish all the steps in my article but I can indeed see the larger writes.

The allocation unit size has to do both with efficiency in managing files for windows more than anything else. You generally want to use the largest allocation unit size possible that works with your application and your storage subsystem– and this is typically 64KB– but as I mention I always like to test new storage with a tool like SQL IO, as some fancy storage subsystems can show different results.

So I hope this explains more about why there’s the general recommendation and how you can test it here.

Hope this helps!

Kendra

Matt, once you have located a block to write, you can write parts of it in any multiple of the hard drive sector size (typically 512B as is the case for Kendra’s test, but it can be 4K for larger drives).

The write path only needs the offset on the disk, it does not need the actual block to write.

For the nitty gritty detail, refer to Windows Internals by Russinovich.

Kenra,

Thanks for the follow up. I was mostly hoping to find some sort of tunable that would allow me to force the transaction logs to write at a 4k minimum vs 512, even if the disk sector is set to 512. It sounds like SQL Server doesn’t have a traceflag or configurable to change this behavior. Thanks!

Matt

This is a great article! I am very thankful that there people like you who’d like to share knowledge to the public. This is a great start for me to learn how to design server hardware that uses SQL.

Hi Kendra,

I want to know when we read log file through fn_dblog, does it happen in the same way we read the data from data file(means first the data is fetched to buffer and then we get the results)?

Asif – can you take a quick step back and explain the problem you’re trying to solve? (I’m curious how the internals of fn_dblog matter for the project you’re working on.)

Brent- I agree with you,We generally don’t read the log file, and if we read any thing in SQL server it comes through buffer, so i am curious to know does this apply with reading the log file too.

You can certainly impact the performance of an instance by reading from the transaction log file, if that’s what you’re asking.

Hi Kendra,

thanx for your reply. my question is that do we use buffer pool when we read the log file means data fetched from DISK to MEMORY.

Why not perform a quick test and then report back? Here’s what I’d do:

Set up a VM with a set amount of memory– say 20GB and a database that’s bigger than 20 GB. Read all the data from the database into memory and also populate your transaction log file– maybe SELECT INTO from large tables into other tables (all in the user database). Verify that your buffer pool is full of user data using the buffers DMV.

Then do a large read from your log file and observe the impact on your buffer pool.

I don’t generally advocate for people to read from their log files in production, so this isn’t something that I’m really interested in testing myself, but I bet you could find it for yourself pretty easily! (And you’ll learn a lot more from exploring it yourself.)

Hi Kendra,

I performed test on my test machine which is having 8GB RAM, i created a database of 12 GB (6GB data and 6 GB log file) .

Created tables, did some bulk inserts, so that data and log file are more than 80% filled.

During insertion of records and reading data from tables memory utilization went upto 7GB and monitored the dirty and clean pages through sys.dm_os_buffer_descriptors, it remained same until I ran manual checkpoint and DBCCdropcleanbuffers.

Restarted SQL services and memory utilization came down to 2GB. started log file read operation.

During log file read i noticed there is very slight change in memory utilization around 100MB, but noticeable thing was disk utilization was 100%.

Aha! So it sounds like in your test it was able to stream that data pretty efficiently. Interesting!

Thanks Kendra for the guidance.

So i can say that while reading log file buffer is not used, direct disk read is there.

Very interesting. I have not been using SQL Server very long, having trained up when databases I support were migrated. In the old DBMS, log records were written from the first updated column to the end of the row, rather than logging the full row.

Does SQL log the full record?

Hey John,

Great question– and you can totally answer this one for yourself. Check out the end of the article where I talk about the fn_dblog function and link to a source on it!

The whole fun of this article is pointing out that you really can research this yourself, and it’s a lot more fun than taking someone’s word for it. Dive on in.

Kendra

Great post Kendra !

Hi Kendra

A few interesting bits to add:

First, the size of the log write depends on how busy the system is. As you say, easy to test. The largest you will get in 60KB (not 64K). For those people running a geographically distributed storage system, this is a number to be careful about.

Second, the MINIMUM size of the log write is actually dictated by the disk geometry that the log is situated on. Hard drives (including SSD and simulated hard drives like SAN) report the smallest possible write size that is guaranteed to be atomic to write and the log write is always a multiple of that. Today, this size is either 512B or 4K – but you could imagine drives in the future using larger sizes than that.

Third, the NTFS allocation unit size (AUS) ONLY dictates write sizes when you do buffered I/O. SQL Server uses non-buffered I/O, so the AUS really doesn’t impact the block sizes in any of the major write and read paths (it has other benefits though). You can validate the effect of different AUS on write size by running Analysis Services, which generally does buffered writes.

Retried for 2016 CTP2 on Windows Pro 10 Build 10130 with 4K Cluster Size, same results as expected!

Hi Kendra,

I know this post is from a few years ago, but I just stumbled onto it and was working through a test of my own. I used your same process from above but modified it to see how the logging changed if you pushed larger data sizes through and did not use IMPLICIT transactions. I was curious to see without the looping I hit the 60K limit, but during the loop I noticed 320K writes. Now I remember some years ago reading a Microsoft KB regarding that, but I can’t for the life of me find it. Anyway, the code I used is below. The Length I got back was 327,680 (320). Do you know what that is from?

DROP TABLE test

GO

CREATE TABLE test (col1 VARCHAR(MAX))

GO

DECLARE @X INT = 1

, @Y INT = 1000

BEGIN TRANSACTION

WHILE (@X <= @Y)

BEGIN

INSERT INTO dbo.test (col1) VALUES (REPLICATE('A', 8192))

SET @X = @X + 1

END

COMMIT

I also retested starting with 100 inserts and incrementing by 100 and didn’t see any larger writes until I hit 900. At that point I got 1,310,720 for a length value. I also see where the operations for positioning and flushing were called prior. (SetPositionInformationFile, QueryPositionInformationFile, setEndOfFileInformationFile, SetAllocationInformationFile and FlushBuffersFile) I am going to go out on a limb and guess that the larger IOs are from the FlushBuffersFile, but that is just a guess. All other writes are 60K or below.

John – sorry, this is beyond something we can troubleshoot fast in a blog post comment.

Does this mean all log, data, tempdb drives should be formatted to 64 Kilobytes

James – sure, check out our setup checklist included in our First Responder Kit. Generally speaking, we still start with 64K NTFS allocation units, but with modern storage, we see less and less benefits of it. Check out this post as an example:

https://www.brentozar.com/archive/2013/08/load-testing-solid-state-drives-raid/

Hey Guys, picked up a strange 1, I have a ms 2008 SQL cluster, combination of physical an virtual servers on Ibm storage, I have set the block size to 64k when I configured the volume on the physical server but when I fail over the instance to the virtual server, the block size changes. I am able to see the change in size in what we receive on our backend storage which is greater than 64k..

Any ideas why that could be…

For random questions, head here: http://dba.stackexchange.com

We recently migrated our databases to new servers. Unfortunately, the disks in the old servers had physical sector sizes of 512B, while the new servers have a physical sector size of 4096Bytes. This led to huge numbers of Alignment errors, especially in the tran log files.

This is not easy to fix. My best workaround is to add a new, secondary tran log file, move all data from the primary tran log file to the second one, shrink the primary one to the smallest size possible, and then set autogrow off for the primary tran log file.

In that way, I force SQL Server to use the secondary tran log.

But this is not the elegant solution that I am always striving for…

Has anybody got a nice solution on this?

Franck – for general Q&A, head to a Q&A site like https://dba.stackexchange.com.