The cloud is robust and reliable. The cloud solves all of our scaling needs. The cloud makes my poop smell like roses. While all of these statements are theoretically true it takes some effort to make them true in reality, especially when a database is involved.

Who Is Deploying SQL Server in EC2?

A question I hear a lot is, “Who is putting SQL Server into EC2?” Sometimes there’s a hint of incredulity in that question, hinting that people can’t seriously be deploying SQL Server into EC2. That’s far from the truth: many companies, large and small, are deploying SQL Server into Amazon’s cloud, with varying degrees of success.

Back to the question of who’s deploying SQL Server in EC2…

I work with a start up building a platform on a Microsoft stack. The front end is hosted on mobile devices, the middle tier is written in .NET, and the database is SQL Server. They’re just starting out and they don’t have the time to spend speccing out servers, ordering them, and waiting for delivery. It’s easy to spin up servers to test new features and functionality and if a feature doesn’t catch on the servers are turned back off. Traditional hosting facilities didn’t offer the the flexibility or response times that they were looking for, so they chose Amazon EC2.

Another company I work with is a ISV transitioning to a Software as a Service (SaaS) model. The ISV works in an industry where there is a lot of specialized knowledge and technical ability, but that knowledge and ability doesn’t have anything to do with keeping a server up and running. In the past they’ve added additional maintenance routines to the software that they sell to customers, but sometimes customers don’t have anywhere to host this mission critical software. The ISV has hosted a few customers on their own servers, but they don’t want to keep buying new hardware in response to customer demands – what happens when a customer leaves? The ISV hasn’t made the move to cloud computing yet, they’re feeling out the different options available, but EC2 provides a level of flexibility that they won’t get through traditional IT solutions.

What are some of the problems?

There are, of course, problems with every way that you can possibly deploy SQL Server. When you deploy on premises, you have to purchase hardware, wait for it to arrive, set it up, and then you have to maintain it. When you host your database with another hosting provider, you’re limited by the amount of server space they have available. When you host in EC2 there are a different set of problems.

Cost

One of the perceived problems with deploying into the cloud is the cost. You’re renting something by the hour (like that “friend” your uncle brought to Thanksgiving last year); and renting something by the hour can get really expensive when you want that something to be up and running all the time.

How much would it cost to keep a reasonable SQL Server running 365 days a year? $10,862.40 assuming that you’re using a Double Extra Large Hi-Memory instance (that’s 4x 2.61GHz Xeons with 34.2 GB of memory). You can really kick out the jams and get yourself a Quadruple Extra Large Hi-Memory instance (8x 2.66 GHz Xeons and 68.4GB of memory) for twice the price. Yeah, that’s expensive. Of course, you can also reserve the instance and just buy it outright for a fraction of that cost, but who wants to do that?

What would a similar server cost you from HP? You can get an HP ProLiant DL380 G7 with a pair of fancy pants Intel E5640 CPUs (that’s right around the same speed as the EC2 instance), 32GB of RAM, and 1.2TB of in chassis storage for about $8,600. That price doesn’t include an OS or any other licensing costs. It also doesn’t include power, cooling, or even a spare server sitting around ready to pick up the load if your primary server fails.

Storage isn’t tremendously expensive in Amazon’s cloud – 10 cents per GB per month of provisioned storage. Over the course of a year, 1 terabyte of storage is only $102.40 a month, and that storage is fairly redundant within a single data center.

Despite the cost, Amazon’s cloud is still incredibly popular with many businesses. Why? Simple: it’s easy to start with a small amount of resources and rapidly scale in response to customer demands. If something doesn’t pan out, you just stop the servers and delete the storage and you don’t have to worry about it anymore. The costs go away as soon as you stop using the compute cycles.

Noisy Neighbor

I used to live in an apartment. It wasn’t a great apartment. In fact, it wasn’t even a nice apartment. I could hear everything that my neighbors did. After a while, I knew about their girlfriends, their love of Super Tecmo Bowl, and I learned that they liked to listen to loud music on week nights when they didn’t have to work the next day.

My noisy neighbors made it difficult for me to get things done. When you’re sharing a host, noisy neighbors can make it difficult to get things done, too. This sort of thing can happen on a server right now – when one program is aggressive writing to disk, read performance will suffer. If you’re sharing a physical server with another guest operating system, you have no idea what that other OS is doing. It could be sitting there doing nothing, it could be chugging along doing 3d rendering, or it could be a BitTorrent server. You have no idea what’s going on in the next room and how it could be affecting your performance.

Unfortunately, there’s not a lot that you can do about noisy neighbors apart from moving. In the real world, you move to a new apartment or buy a house. It takes time and money to get a new place, but it’s feasible. In EC2 it’s a lot easier to get a new place: you just upgrade your instance. You pay more by the hour, but it’s easy to get a bigger place.

Update: This is nowhere near the problem that it used to be. In addition, customers can designate an instance as “Dedicated Tenancy” when the instance is created to ensure that any noisy neighbors are your own VMs.

Crashes in general

To be fair, you can’t do much about crashes if you’re using someone else’s hosting service; when the data center loses power your server is going to go down. So, how do you protect yourself from crashes? Redundancy, of course.

Unfortunately, redundancy gets expensive fast. If you want a redundant mirror for that 10k a year server, you need a second 10k a year server. If you want multiple servers spread across the country, you’re paying for each one. Thankfully the cost of the servers includes the Windows license, but it’s still a large cost for many people to stomach.

SQL Server has a number of options to help you keep your servers up and running in case something horrible happens. Traditional database mirroring will work in EC2. You are free to implement whatever Rube Goldberg machine you want. It’s important to keep in mind that you have little control over your storage (there are no SAN snapshots or SAN mirroring that you can control) and there is no dedicated network that you can rely on. All of your connectivity goes over the open infrastructure within Amazon’s data centers. You’re fighting for bandwidth with Amazon, Netflix, Heroku, and everyone else in the cloud.

Limits: Number of Drives

How many drives can you connect to an installation of Windows locally? 26 or so, right? That’s how many letters there are in the alphabet. You can go beyond that using mount points to boost the amount of storage you have. For most purposes, though, you effectively can attach an unlimited number of drives to a Windows machine. Certainly more than you’ll need. SANs make it easy to create huge volumes, after all.

In EC2, that’s not quite the case. You can only attach 16 drives (Amazon call them volumes) to a server. It’s important to keep this limitation in mind when designing your strategy to move into Amazon’s cloud. If you need to store more than 16 terabytes of data, you’ll need to find a way to spread that data out across multiple EC2 instances. Considering some of what we’ll cover later on, this is going to be a really good idea.

Limits: Network Throughput

Did you know that there’s a pretty solid limit on network throughput in EC2? I didn’t either until I start performing some storage benchmarks (more on this soon). It turns out that there’s a single active gigabit connection into each host. That’s right: a gigabit connection per host. You might be sharing that server with a lot of other servers. If there are 4 other SQL Servers sharing the same gigabit connection, you could end up with some very poor performance.

Amazon recently added instances with 10 gigabit network cards, but they’re limited to Linux machines in the US-East region right now. In the future there might be instances with 10 gig connections that run Windows, but there’s nothing in EC2 right now to make the path between a Windows server and the storage any faster. To keep up to date on which instances have 10 gigabit ethernet, visit the list of EC2 Instance Types and search for “I/O Performance: Very High”.

In short, if your applications are chatty, you’re going to need to learn to break them apart into many smaller pieces to keep throughput high.

Limits: Instance Sizes

We just briefly touched on this in the last section – not every size of instance is available in every region. Typically, the US-East region gets the newest fanciest servers, at least as far as I can tell. Other regions slowly get new hardware over time. The name of the instance type (m1.small, m2.4xlarge) will stay the same over time, but it may not be possible to bring up instances of the same size in every region.

Performance: Instances

Just like any other computer, there are a finite number of resources available on any host. Likewise, the VMs that you spin up have a finite amount of resources available to them. Unlike your local data center, you have no control over which VMs share the same host.

Normally you could put different machines together and stagger their functionality around the clock so that guests that did bulk processing at night would be on the same host as machines that record orders during business hours. When everything is under your control, it’s very easy to make sure that any host isn’t overcommitted during a certain time window.

The only way that you can guarantee performance is to allocate larger instances that take up all of the physical resources of the host but cost more. By doing this you’re able to eliminate the effect of noisy neighbors. It’s important to understand how to get the best performance out of your resources. This is still applicable, even when you can configure a dedicated instance.

Performance: Storage

If you want to keep data around for any length of time, it’s important to persist it somewhere. Persisting your data means that you have to write it and, if you aren’t writing it to your users’ computers you have to write it to the database. Hiding beneath all of that fancy database software is something as simple as a pile of disks for storing data in rows and tables. Making sure the disks are working as fast as they can is critical to keeping a database running at peak performance.

A Note About How Gigabit Ethernet Sucks and Infiniband is Your Friend

Gigabit ethernet sucks. If disk I/O is your absolute bottleneck, you only have two options – you need to write to as many drives as possible. We’ll find out why that isn’t entirely possible in EC2. The other option is to make the connection between your database server and the storage as fast as possible. Since you can’t get super fast storage in EC2, you’ll have to find another way to make your database fast.

Just How Fast is Gigabit Ethernet?

The question isn’t rhetorical. It’s important to understand how fast gigabit ethernet is. Gigabit ethernet can really only transfer, at most, 120 megabytes per second. That’s the theoretical maximum assuming that everything along the line is operating perfectly and there is no latency or additional hops to make.

We’re not likely to see this in the real world. You’re not likely to see this in your own server room and you’re sure as hell not going to see it in EC2.

Why is your storage going to be slow? You have keep in mind that not everything involved may be able to push data at 120 MB/s; the drives could be slow, fragment, or you could be sharing space with someone else who is doing a lot of reading and writing. Other people could be reading from the same host as you or they could be streaming movies from the host to a customer in the suburbs.

In an attempt to find an answer, I conducted several tests on an EC2 instance over the course of several days to answer the question, “Just how fast is gigabit ethernet?”

Testing Methodology

For all of the tests, I used the same m2.4xlarge instance. I installed it from a standard Windows Server 2008R2 SP1 AMI that Amazon supply. Nothing was changed with the operating system. This Windows was about as vanilla as it gets.

For my tests with the drives, I used EBS volumes that were allocated in complete 1TB chunks. I made two rounds of tests. In both cases, the drives were formatted with a 64k sector size. The first round of tests was done with drives that were quick formatted. The second round of tests were performed with drives that were not quick formatted. FYI, formatting 10TB of drives in EC2 takes about 2 days. I used a Large Windows instance to format the drives at a cost of about $26 in CPU time plus another $57.20 in I/O cost just to format the drives.

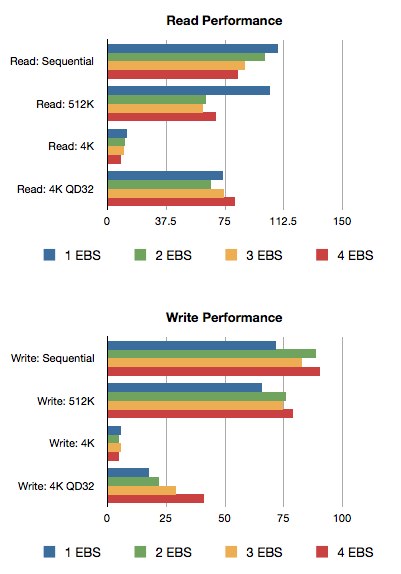

The first set of tests I ran involved using CrystalDiskMark. I used the default configuration and ran it across the quick formatted drives. Drives were configured with 1 EBS volume, 2 EBS volumes, 3 EBS volumes, and 4 EBS volumes. I conducted a second set of CrystalDiskMark tests using fully formatted drives with 1, 2, 3, 4, and 10 EBS volumes in a single drive.

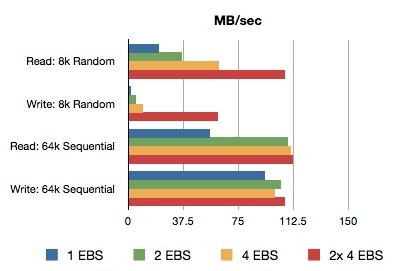

The second set of tests was more complex and involved using SQLIO to simulate sequential and random reads from SQL Server over a period of time. All tests were performed on a single EBS volume, two striped EBS volumes, four striped EBS volumes, and a pair of striped four EBS volumes.

EC2 Storage Test Results

Right away, we can see that performance quickly spikes for sequential reads and writes. While the results for read decrease, this isn’t a reflect of the storage itself so much as it is a reflection of trying to cram a lot of data through a tiny ethernet pipe. Overall, the quick formatted drives don’t show a lot of performance improvement no matter how many spindles we throw at the problem.

There are some things that we can conclude about the performance of disks in Amazon’s platform. First and foremost: format your drives. Don’t just use a quick format; use the slow format. The downside is that it takes time to format disks and time is money, especially in the cloud.By using a full format, disk performance improved by 30% for 4K writes with a queue depth of 32 and improved, on the whole, by around 15%. There were a few anomalies – specifically the 4k write performance spike with 4 EBS volumes and the sequential read performance spike for 2 and 4 EBS volumes. The only conclusion I can come to is that reads were cached and did not hit disk.

The SQLIO tests show a different story. Right away we can see that sequential I/O performance peaks with a maximum of two volumes, but random reads and writes keep scaling right up until the tests stop – the more spindles that were used, the more random I/O that we could perform. And here’s where things get interesting again. Looking at the graphs you can see that sequential I/O falls apart a little bit north of 1000 IOPS. That’s not a limit of the underlying disks, we’re just maxing out the ethernet connection. When there’s a finite pipe limiting how fast we can read, of course the number of IOPS is going to stop pretty quickly.

Looking at the throughput in terms of the amount of data (rather than the number of reads and writes) that we can move through EC2, it’s very easy to see where we hit the ceiling. With a theoretical maximum of 120 MB/s, it’s easy to see that sequential read and write max out when two volumes are use. It’s clear, though, that random reads and writes continue to scale as we add more drives. I suspect that if I had been able to add more spindles, the random write performance would have continued to scale up to 120 MB/s.

What does this mean for SQL Server in EC2?

You can get the random I/O performance that you want, but sequential read/write performance is not going to be good. Taking that a step further, here’s what you don’t want to do in EC2:

- Data warehousing

- Large ETL

- Bulk data processing that requires sequential reads

- Anything else that requires a lot of sequential read and write

On the flip side, what should you use SQL Server in EC2 for?

- OLTP

- Off site processing that isn’t time sensitive

- Distributed write loads (sharding)

SQL Server hosted in EC2 is going to be very effective for highly random loads and especially for loads where data can be kept in memory (currently less than 68.4 GB).

A Sidebar about Ephemeral Storage

Don’t use it. It goes away and may never come back. Well, it goes away when your instance reboots.

Because it’s the drives that live in your server chassis. You can’t predict where your server is going to be when you turn it back on again, so you can’t predict if that data is going to stay with you or not. This is a best practice for virtualization, if you think about it. When you’re setting things up using VMware or Hyper-V you don’t want to attach long-term storage that’s in the server chassis, it makes your virtualization more brittle. Using local storage, if any thing happens (a motherboard fails, a drive fails, a CPU fan goes out, or memory fails) you’ve lost that host. You can’t bring that VM up on another server because the OS is still on hard drives in that broken server.

Local storage is great for temporary (ephemeral) things. Make sure that you take that into account when you’re designing your infrastructure. Fast, cheap, temporary storage is great for fast, cheap, temporary operations like TempDB or local scratch disks.

First Touch Penalty

Amazon mention in a lot of their documentation that there’s a penalty, and potentially a large one, for the first write to a block of storage. When you look at the numbers below you’ll realize that they weren’t kidding. Amazon further go on to claim that if you benchmark the storage (as I did) you’ll be horrified by the results (as I was) but that you shouldn’t worry because the first write penalty is only really obvious during benchmarking and will be amortized away for time in the real world.

Here’s where I have to call bullshit. If your workload consists almost entirely of writing new data, you’re going to see a first touch penalty until the drive is full. It won’t end. Writes will suck until your drive is full. Then you’ll add more drives and the suck will continue. This is compounded for relational databases where the currently accepted best practice is to ensure sequential writes by clustering on an arbitrarily increasing integer.

The only way to guarantee acceptable performance is to format your drives and write to them block by agonizing block until the storage is full formatted.

Staying Up in the Clouds – HA/DR Options

Clustering: Sad Trombone of the Cloud

You can’t cluster in EC2. Don’t try. There’s no shared storage. EBS volumes can only be attached to a single server at a time. You could share the drives, but you really shouldn’t do that. You could use some Windows based SAN system that mounts drives and pretends it’s a SAN, but without any guarantees of performance or longevity, why would you want to?

We’ll just leave this one as: You can’t cluster in EC2… with SQL Server 2008 R2

Changes in SQL Server 2012 make it possible to host a clustering using shared disks on SMB file shares. Whether or not that’s a good idea is completely up to you, but at least it’s a possibility.

Availability zones and regions

In case you didn’t know, Amazon’s magical cloud is divided up into regions. Each region is made up of multiple availability zones. They’re all right next door to each other, but they’re in separate buildings with separate internet access and separate power.

Availability zones exist to protect users from localized failure. Hopefully, if there’s a problem in a data center, it’s only going to be a problem in one data center (one availability zone). If that zone goes down, the others should be up and running. If you want to keep your server up and running through local failures, you need to make sure you keep a copy of your data in multiple availability zones. If you want to keep your business up and running in the event of a regional failure (the smoking crater scenario), you need to keep copies of your data in multiple regions.

Region – national level.

Availability zone – local level.

Got it? Good.

Mirroring SQL Server in EC2

One of the easier ways to keep things up and running in the event of a failure is to use SQL Server’s mirroring. Using synchronous mirroring with a witness is going to put you in a great place in terms of disaster recovery. It’s both possible and advisable to put the primary in one zone, the secondary in another, and the witness in a third zone. We can feasibly survive some pretty heavy duty hardship by configuring SQL Server mirroring in multiple availability zones. The applications talking to SQL Server need to be able to fail over between the mirrors but that should be pretty easy for today’s modern developers to handle, right guys?

SQL Server Replication in EC2

Replication is always an option and is probably a better option when you want some kind of geographic fail over. Instead of diving into contentious issues around designing a replication topology, I’m going to hit on the pros and cons of replication in the cloud.

We’ve already seen that there significant limits to network throughput in EC2. We’re limited to, at most, a gigabit of storage throughput. We’re also limited by our ability to write to disk in 64k chunks – only a little bit more than 1000 IOPS are possible at a 64k write size. These limitations become a problem for transactional replication. In an on premise set up, transaction log performance is frequently a problem when replication is involved. If you think that the performance of the transaction log won’t be an issue in the cloud, think again. The I/O characteristics of Elastic Block Storage guarantee that the performance of the transaction log will become a problem under heavy load.

Replication latency is another concern for DBAs in EC2. Not only do reads and writes occur slower, but that read/write slowness means that transactions will be committed to the subscriber further and further behind the publisher. Of course most applications aren’t writing constantly, but it’s important to consider what might happen if the subscriber isn’t able to commit the load that’s being written throughout the business day – will it be able to catch up after hours? If it can catch up today, will it be able to catch up tomorrow?

Crashes happen in EC2, entire regions have gone offline. Unfortunately, at any kind of scale something is bound to be broken right now. What do you do when that happens? What do you do when that happens to the distribution database? (You are running the distributor on a separate server, right?) What do you do when the distributor goes offline and never comes back up, drives corrupted irreparably?

I don’t have the answers, but that’s something your recovery model must take into account when you’re deploying into the cloud.

SQL Server Backups in the Cloud

You gotta keep that data safe, right? Taking backups and hoping for the best isn’t going to cut it once you’ve moved all of your data up into the cloud. You need a retention and recovery strategy.

EBS Data Protection

Amazon’s Elastic Block Storage (EBS) looks like some kind of SAN drives that you can attach to your server. When you look more closely at the disks themselves, they look like drives attached to some kind of storage device powered by Red Hat Enterprise Linux. I’m overusing the phrase “some kind” because you just don’t know what’s back there.

There are, however, some certainties around EBS. An EBS volume lives within a single availability zone. There are multiple drives involved and multiple block level copies of your data. There could be a failure of a back end drive and you’ll never know about it.

The downside of EBS is that there’s no global availability of your data. There is no SAN replication, no global single back up of your data. If you want that, you’ll need to look into using Amazon S3 to provide greater safety.

Be Safe with S3

Amazon Simple Storage Service is a very simple storage service indeed. It’s a block level data store that refers to big binary chunks of data by name. It’s like a filesystem that exists everywhere. Sort of. The important part of S3 is that your data can be persisted at a very paranoid level over a very long period of time. In their own words S3 is “Designed to provide 99.999999999% durability and 99.99% availability of objects over a given year.”

Why mention S3 at all? It’s not very fast and it’s not very easy to mount S3 storage to Windows. There are two things you can do with S3:

- Store SQL Server backups.

- Snapshot EBS volumes.

Storing SQL Server backups in S3 should be a no brainer for anyone. S3 storage is cheaper than EBS storage and it’s far more durable. Since S3 is global, it’s also possible to push data up to S3 from one region and pull it down in another. Your servers will live on.

S3 can also be used to store snapshots of EBS volumes. Don’t be fooled by the word “snapshot”. The first time you take a snapshot you will likely make a very bad joke about how this isn’t a snapshot, it’s an oil painting. Don’t do that.

The first EBS snapshot to S3 is going to be slow because there is a block level copy of the entire EBS volume happening in the background. All of your data is zipping off into the internet to be safely stored in a bucket somewhere. The upside is that only the blocks of data that get changed are going to get snapshotted. If you perform frequent snapshots then very little data will need to be sent to S3, snapshots will be faster, and recovery should be a breeze. Keep in mind, of course, that these are not crash safe SAN snapshots, so they shouldn’t be used for live databases. I’m sure you can think of cunning ways to work with this.

A Plan for Safe & Secure SQL Backups in EC2

What’s the safe way to manage backups in EC2? Carefully.

Were it up to me, here’s what you’d do:

- Backup your data to an EBS volume.

- Mount an S3 drive to your Server.

- Copy the data to the S3 drive using xcopy with the /J flag

Of course, if it really were up to me, you’d have mirroring set up with a primary in one availability zone, a secondary in another, and the witness in a third. Oh, and you’d be using synchronous mirroring.

Scaling SQL Server in EC2: Scaling Up

This is, after all, why we’re here, right? You’re not just reading this article because you want to learn about database backup best practices and how many IOPS some magical storage device can perform. You want to know how to give your SQL Server some more zip in EC2. There’s a finite limit to how much you can scale up SQL Server in EC2. That limit is currently 8 cores and 68.4GB of RAM. Those 8 cores currently are Intel Xeon X5550s that clock in at 2.66 GHz, but that will have changed by the time you’re reading this. I wrote this post back in 2011, I updated it in 2015, and who knows what year it will be when you find it.

This is, after all, why we’re here, right? You’re not just reading this article because you want to learn about database backup best practices and how many IOPS some magical storage device can perform. You want to know how to give your SQL Server some more zip in EC2. There’s a finite limit to how much you can scale up SQL Server in EC2. That limit is currently 8 cores and 68.4GB of RAM. Those 8 cores currently are Intel Xeon X5550s that clock in at 2.66 GHz, but that will have changed by the time you’re reading this. I wrote this post back in 2011, I updated it in 2015, and who knows what year it will be when you find it.

In EC2, there’s a limitation on how much we can scale up. Commodity hardware really does mean commodity hardware in this case. If you can’t scale up, the only thing left is to scale out.

Scaling SQL Server in EC2: Scaling Out

It’s incredibly easy to create more instances of SQL Server in EC2. Within a few clicks, you’re most of the way there. You can automate the process further using Amazon’s developer tools to script out additional configuration, attach local storage, and attach EBS storage. The developer tools make it a breeze to create and deploy your own custom images so you have pre-configured instances up and running. You can even use tools like Puppet or Chef to completely automate adding more servers to your environment.

Summing It Up

So, there you have it – it’s possible to get great performance for certain types of SQL Server operations in EC2. If you’re looking for a cheap way to set up a data warehouse, you should look elsewhere. You might be able to slap something together in EC2 that performs well enough, but there will be a lot of man power involved in making that solution work. If you’re looking for a quick way to scale your application up and out without worrying about buying hardware and making sure it’s being used appropriately, then you’ve come to the right place.

Want to read it later? Download the PDF.

Learn more about our SQL Server in Amazon cloud resources page.

62 Comments. Leave new

Great article. Makes me wonder if Oracle on EC2 would exhibit the same characteristics.

Great Article! Thanks for taking the time to write such a in-depth article. Are you going to do one on Azure?

I’m glad you liked it. It was a lot of fun to research and put this together.

I won’t be doing anything like this for Azure. There’s a lot less that you can do on the administration/configuration side of things with Azure and a lot more that takes place in code.

Excellent Article….

Thank you! I hope you got a lot of useful information out of it.

Great article!

A few corrections:

1. Only 11 EBS volumes on 1gbit instances, but 17 on the new 10gbit cc1 for windows!

2. Mounting s3 as a filesystem is pretty slow for backups, maybe one of the s3 applications would be better? Cloudberry, s3sync, etc

3. $10k is shockingly cheap when you put the software licenses, colo, spares, staff, etc on top; plus you can turn it off when not using to save!

Keep up the great writing!

Thanks for your comment, Julian! I’m glad you liked the article.

I did just verify that I could attach 13 additional volumes to a t1.micro instance running in us-east-1c, so maybe you’re seeing something different based on a different AMI or instance type.

I’d never backup directly to S3 and I mention that a few times; copying off to S3 after the back up is complete is the only palatable solution. Unless you can verify that a vendor is actually using an unbuffered copy, I’d avoid third party software like the plague. This bug can cause a lot of memory problems on Windows Server 2008 R2. Until it’s fixed, it’s best to use unbuffered copy and roll your own solution.

Thanks for covering a very relevant topic. You praise cross-zone synchronous database mirroring as an availability option – not bad on the face of it – but do not mention the impact of principal-to-mirror network latency on performance. Have you done any benchmarking of this?

I wouldn’t say that I’d praise it as an availability option, it’s just an option that is available and easier to use than some of the other options. I haven’t done any benchmarking around it and I’d strongly recommend that anyone going down that path examine the network latency involved in a test environment before implementing synchronous mirroring.

Great read! Just the kind of info on IO throughput on EC2 I was looking for.

Glad you liked it. There are instances with 10 gig iSCSI now. I hope to have benchmarks for those available soon.

Thanks for a great article. I am considering the mirror option in EC2 for our SQL to improve the uptime and better automated failover.

At the moment what we have done which works slright if you don’t mind losing a little bit of data is:

– Full backup on a Sunday, with diff backups every other day. And hourly backs in between.

– The backups are done onto a separate EBS volume connected to the SQL instance. We then snapshot this entire backup volume hourly using the AWS api

(we also copy this down to our servers locally every night)

– We also have a pre-built and configured SQL instance of the same size in a different zone, which is shut-down and waiting incase the current live SQL zone has a major problem (it’s also shutdown so we dont pay for it)

So essentially if we have a problem in the zone the Live SQL instance is in, we can quickly recover by starting up the prebuilt SQL instance that’s in another zone. Then converting the snapshot of the backup drive into a volume again and reattach it to the prebuilt instance in the healthy zone (being in S3 its not stored in a particular zone). And then we just do a DB restore as normal.

Ofcourse the problem with this approach is the data loss of up to an hour in our case, but its a loss we are happy to live with at the moment. But we do want to move to the mirror option in the not too distant future.

Hello Brent,

Can you share the exact numbers or the output file for the SQLIO test that you did? I am comparing to my numbers on Amazon and this is what I get:

dir size block thread type rw IOo IOSec MBSec MinLat AvgLat MaxLat

S:\sqlio_test.dat 8 random Write 86.03 0.67 1 92 1151

S:\sqlio_test.dat 8 random Write 1 82.65 0.64 1 95 1706

S:\sqlio_test.dat 8 random Write 4 89.79 0.7 1 354 2677

S:\sqlio_test.dat 8 random Write 16 88.63 0.69 1 1432 7867

S:\sqlio_test.dat 8 random Write 64 92.78 0.72 381 5456 17029

S:\sqlio_test.dat 8 random Write 128 92.36 0.72 465 10918 25296

U:\sqlio_test.dat 8 random Write 128 87.07 0.68 1 91 593

U:\sqlio_test.dat 8 random Write 1 90.37 0.7 1 87 883

U:\sqlio_test.dat 8 random Write 4 89.37 0.69 1 356 1288

U:\sqlio_test.dat 8 random Write 16 90.73 0.7 2 1403 2832

U:\sqlio_test.dat 8 random Write 64 91.3 0.71 2 5562 9121

U:\sqlio_test.dat 8 random Write 128 92.39 0.72 4 10949 16769

Brian – no, sorry, I no longer have that available.

You may see wildly different numbers depending on where your storage is located. Storage isn’t necessarily located near the machine it’s attached to. If you don’t get the performance you want, I suggest destroying the volumes and creating new ones until you get the performance you want.

I used a 40GB file on pre-formatted disks. Apart from that, I don’t have the scripts around that I used – I deleted that VM after the test was done and foolishly didn’t save my SQLIO script.

Hi Brent/Jeremiah,

This is very informative and thank you. I do have a question, is it possible to set up a log shipping between on-premise instance of SQL Server and AWS SQL Server Instance?

I am not sure, but i needed to set up a log shipping between this two instance.

Thanks,

Abi

You sure can! You’ll need to figure out how you’re going to get the files to the AWS SQL Server (you can either use a VPN or some sort of hand rolled copy job). Once you have that part working, you should be able to take the backup, push the backup file to a network share, and then restore the backup on the log shipping secondary. You may or may not need to disable one of the log shipping copy jobs, but that entirely depends on how you implement it.

Great, Thank you. I will work on it and let you know if there are any questions.

Hi Jeremiah,

Great article! just have a quick question in mind.

Is it still impossible to build a SQL Server Failover Cluster (2008) in EC2? does the new feature VPC help to overcome the issue?

I have been searching for solution but with no luck.

Thanks,

Art

Hi Art,

There’s still no built-in way to do failover clustering in EC2. You could, in theory, run SQL Server off of a UNC path, but I haven’t tried it so I can’t say one way or another if it’s going to work or even perform very well (I doubt it will perform well at all).

What’s the problem that you’re trying to solve by virtualizing in EC2? If I know the question, I might be able to provide a better answer.

Hi!

You are correct this is not documented simply because the support for SQL clustering and WSFC not supported by AWS. VPC alone does not address the issue. SIOS Technologies had DataKeeper for Windows http://us.sios.com/products/steeleye-datakeeper-windows/ which run in conjunction with WSFC and we PoC’ed and planning to introduce a secret sauce that would provide the capability the failover of the SQL instance between nodes in WSFC and SQL. I am seeing a growing interest in this feature and we’ve successfully introduced the solution to Amazon architects. Would you and/or your company be interested in the solution?

The same applies to all interested.

Thank you!

Hi, Sergey. I’ve approved your comment but redacted the email address. We’d appreciate not using our blog for sales pitch purposes. Thanks, though.

Great article! I think some of this may be a bit outdated however. I would love to hear you opinion on the services to date. I really think they’ve made some improvements in the SQL in the Cloud design using RDS and they’ve added instances available with clustering services.

Good stuff!

If you want the low down on SQL Server RDS, you can find it over at https://www.brentozar.com/archive/2012/05/sql-server-rds/

A “cluster compute” instance is different from a SQL Server cluster or a Windows cluster. It’s an instance specifically designed for high throughput applications operating in a shared nothing cluster – think high performance computing or a shared nothing database like Cassandra. SQL Server clustering is still not possible because an EBS volume can only be mounted to a single SQL Server instance; there’s no shared storage.

It is possible to create multi-subnet AlwaysOn Availability Groups, but that’s the closest to clustering that you’ll get in AWS.

Thanks again for the great information!

Great article! I have actually implemented it all including the creation of a .Net service that performs all the work suggested for the backup and movement of files. The only part that is eluding me at this point is the “mounted” S3 drive. There are several applications out there like TNT drive and others that will mount the S3 storage as a drive but I don’t believe xcopy /j will perform as expected using these apps since those tools are doing some other form of transfer under the covers (does that sound right?). So my question is: What did you use to mount your S3 storage when you ran your tests?

As I mentioned in a previous comment, I never mounted S3 as a volume during testing.

Recent work with S3 has been through lits3. This library does use buffered writes, but they’re buffered in C#. You’d be better off asking on a vendor’s forums to figure out how they perform their work.

I am not a DBA so some of these terms may elude me.

Can you clarify the solution needed to scale a web application running on a single database?

If I have an ASP.NET web application that is a Software as a Service (SAAS),how can I configure Amazon to scale if the load gets too big? I have a Tenant architecture based web application that uses SQL Server.

If I need better performance due to the increase in the number of customers using this application, how can I scale this solution in AWS to meet the demand?

I have a good picture of how the web server would scale but not the sql server side?

Would a SQL Server db configured as a slave allow newer customers to use the same data from this single database but be able to handle the new load demands? Is this possible? if not, then how should this be handled?

Hi Nate,

Unfortunately, this is a bigger question than I can easily answer in a blog post comment. There’s a lot of complexity involved in scaling SQL Server; but, in short, SQL Server does not have anything like MySQL’s read slaves before SQL Server 2012, and even then it’s only available in Enterprise Edition as an AlwaysOn Availability Group. If you’d like to talk more, I’d be happy to arrange a time. You can head over to our contact form and drop us a line, I’d be glad to chat.

Outstanding document – that must have taken some serious work and is very much appreciated. Thanks for sharing.

PeterG

Hey Brent,

Many +1’s for the detail in this article. There’s only one part that rankles at me.. it’s the second paragraph of the ‘Cost’ section:

>> “How much would it cost to keep a reasonable SQL Server running 365 days a

>> year? [….] Yeah, that’s expensive. Of course, you can also reserve the instance

>> and just buy it outright for a fraction of that cost, but who wants to do that?”

Would a need for SQL Server running 365 days a year not qualify as a *great* use case for a reserved instance and the ~40% cost savings it can bring? (3-year contract) Paying the on-demand price is crazy when you absolutely want that server image to have as large an uptime as possible.

That said I can fully understand avoiding the ‘Dedicated Reserved’ category for single-tenancy since that’s not cloud. Keep up the great work – your writing is always authoritative to a non-DBA such as myself. (and I almost typed ‘mysql’ there… )

Cheers,

Gavin.

Hi Gavin, glad you liked it.

One of the concerns about a 3 year reserve is that your requirements might change. When you buy a reserved instance (say an m1.large), you’ve got that m1.large for the duration of the reservation. The upside of reservations is that they’re cheaper (about 1/3 the price for a reserved, dedicated, EBS optimized instance). The downside is that you’ve got those resources for the duration of the reservation.

Thankfully there is a reserved instance marketplace where you can sell off a reservation when you don’t need it.

So… yeah, there are a lot of different things you can do to save or spend money. Depending on projected growth and known performance, it can be better to start with on demand and then switch over to reserved instances once you have a handle on your load.

Ah, you’re one of Brent’s instances of multiple-personality-syndrome 😉 (ahem)

Thanks for the tip on the new marketplace – that looks super-useful as a back door to a contract, with only a 12% service fee.

I guess the other choice is to stick to 12-month reservations (for those machine types that support it) since Amazon’s prices only ever go down and play reduced investment now against future savings 🙂

I’m about to dip my toe into the world of MSSQL soon, probably with the (deprecated) mirroring in 2012 for simple HA since there’s no need for AD or shared storage – wish me luck =)

Hello thanks for the article. I see your point on ephemeral disks and TempDB “Fast, cheap, temporary storage is great for fast, cheap, temporary operations like TempDB or local scratch disks”.

We use the ephemeral disks as a location for our TempDB databases and it works fine (although not as a RAID 5 as the disks were originally set up!).

I would like to point out a trap I fell into recently that caused the SQL Service not to start after a reboot (maybe self-inflicted but a trap all the same).

Normally I keep my TempDB data file(s) in a manually created folder structure [MSSQL\Data] that resides on the respective drives.

I created this structure on the new ephemeral drive and moved the TempDB data files e.g TempDB data was “Z:\MSSQL\DATA\TempDev.mdf” etc

This all worked fine and dandy until we had a IO error with another drive. To fix this the EC2 instance was shut down and re-created on a new set of disks….but…when this happens the ephemeral drives are re-created so the original TempDB is gone as well as any files / folders on the disk.

This of course meant when the SQL Service started up it could not find the MSSQL\DATA folders because they were gone.

So to prevent any future issues the TempDB files on the ephemeral disks now reside at the top level e.g. Z:\TempDev.mdf

Also related to this, assuming the above is followed and the TempDB databases can be created I also noticed that the ephemeral disks may come online after the rest of the disks as they have to do some formatting or whatever.

This meant that again SQL failed to restart because the ephemeral drive location was not available early enough.

Just a matter of restarting the service manually to fix but not good if the DBA is on holiday and the junior does not know what is going on.

Hope this makes sense and may prevent a DBA getting the “SQL Service Could Not Start” message….thanks

Good catch! I’ve run into that trap before, too.

When I’ve run into this problem, I set up a new windows service to check for the ephemeral storage, format the storage if necessary, and create any directories (if missing). I then set up the SQL Server service to have a dependency on the service that I created. It does add a little bit of time to the SQL Server start time, but it also ensures that everything starts up the way I want and with the disk formatting that I want.

Hey Jeremiah,

Sounds like you’re successfully straddling the multiple beasts of MSSQL, Sysadmin and Software Development!

Can you share what/how you’re creating such a new windows service? srvany.exe has long been a friend but are you just running some PowerShell or is it a compiled .EXE ?

When I put this together, I just used a batch file. I tested for the existence of drive letters and looked at the output from diskpart and other tools to figure out if I needed to format disks. Since you can pass a set of commands to diskpart, I used that when the drives weren’t present.

And, yup, we used srvany.exe to turn the batch script into a service.

Nice one – I’ve been doing some tests recently using AWS’s own Provisoned IOPS volumes with a customer – no matter how high we cranked the provisioned IO value, a single Full backup would kill their application on an m1.large instance.

After multiple iterations the best performance / cost turned out to be 4 x standard EBS volumes in a RAID0 stripe. The database is mirrored so we are happy with that risk.

The service sounds like the right way to go, since then MSSQL can be set as dependent on the batch file completing successfully 🙂 Thanks!

PIOPS work when you want raw IOPS, but you have to make sure you’re using the EBS optimized instances. PIOPS also don’t make sense on smaller instances (like the m1.large) – throughput is limited by the smaller instance size. I got the best results with CC2 instances, but the m2.4xlarge does well enough for the 1Gbps instance types.

Disk performance in AWS is highly variable and I’ve found that it can rapidly become the most expensive part of any AWS deployment.

thank you for the reply, Just to clarify, to set up a dependency on a service is this done on the SQL Service via services.msc as opposed to a setting in SQL Server Configuration Manager (where I normally manage the service)?…thanks

You are correct. I can’t remember exactly what steps you need to take, off the top of my head, but it’s in the Services MSC snap in.

Great article! Just stumbled upon this researching some EC2/SQL topics. Thanks for sharing such a thorough run-through.

So what do you recommend as an alternative block size to maximize throughput on the general SSRS that max out at 160MB/s for an OLTP DB? I have a mix of 100GB & 200GB Luns that I stripe files for a given file group over. From the AWS docs I see my 100GB Luns will have a max throughput of 300 IOPs and the 200GB Luns max out at 600IOPs (they have a figure of 3 IOPs/GB for the general purpose SSDs).

I have done the full format at 64kb block size but I’m wondering if I need to back that down to 32kb or even 16kb.

Thoughts?

AWS SSD IOPS are measured at 256 KB or less (see EBS I/O Characteristics). I would keep the block sizing at 64 KB. A 512 KB read ahead (SQL Server Standard Edition) counts at 2 IOPS and a 2 MB read ahead (Enterprise Edition) counts as 8 IOPS.

I’m hoping I’m misreading your statement, but it sounds like you’re mixing EBS volume sizes in your RAID volume. If that’s the case, I would create a new set of EBS volumes that are all the same size and use a uniform EBS volume size for all of the RAID volumes that you configure in Windows.

If you need additional throughput, you’ll need to increase the instance size and make sure you designate the instance as EBS optimized.

Sorry, I meant I have several LUNs that I spread a filegroup over, for example, FIlegroup DATA1 has one file on each of the following drives E:, F:, G: Then Data2 Has one file on each of H:, J:, K:. So these are not set up as Raid Sets.

I should have added – do you think you’re waiting on disks? Are you seeing any wait types that indicate you need to change things?

The throughput just seems very low, I do have waits but hard to pin them down to disk IO in general when I have so many “opportunities” for performance improvements.

Seems is a relative term – you will only see 160 MBps per volume if your AWS instance itself can keep up. You haven’t said what instance size you’ve chosen, so I can’t comment on speeds you’re hinting at.

If you head over to http://www.ec2instances.info/, you can look at the speed rating of instances given in relative terms. Since everything is iSCSI, the Network Performance column directly relates to disk performance, as would checking the EBS optimized box when you created the instance (you did remember to do that, right?).

SQLIO numbers are helpful, too.

Your questions sound more complex than I can reasonably answer in a blog comment.

If you’d like someone to look at actual numbers, feel free to post a question over at http://dba.stackexchange.com and dump a link back in here. Or you can contact us over at help@brentozar.com and we can set something up.

That’s awesome, I’ve been looking for something like this for a while now.

The instance is an M3.2XLarge, so it looks like its rated at 125MBs throughput.

I find it curious that they publish the MAX EBS Optimized 16k IOPS as a relative performance figure.

I will dig in some more.

Thank you again for you time

Indeed a great article Jeremiah, very informative. It kind of confirms my understanding that shared storage is not possible in EC2 and hence an active/active cluster is not possible. But I don’t understand how clustering is possible using 2012 with a file share (probably I don’t understand that specifics here). Would you please explain it a bit more?

Your two SQL Servers would use the file share on a third file share server as the shared storage mechanism. You have to reference the shared storage using a UNC path, but it’s entirely doable. Check out SQL Server Can Run Databases from Network Shares & NAS.

Also – active/active clusters aren’t a thing, just ask Allan Hirt 😉

Hi Jeremiah, This is very informative but is it still true today as this is almost 6 years old article now.

Hi! Jeremiah is blogging over here these days.

To answer your question, a lot of it can be summed up by checking out prices here. There’s still a premium on higher network and IO throughput, hardware size, etc.

This is great, but I wonder how things have changed with all of AWS advancements and SQL 2014/2016 since this was written… Have you since written an update to this? We’re likely to move our DBs into AWS this year, and I’m in the beginning steps of finding resources to plan it out. My biggest uncertainties are about how to correctly/reliably migrate our five, three (or four) node availability groups located in two data centers (or what other strategy might cover our redundancy/DR needs, and Service Broker (with both local and server-to-server routes). My understanding is that those two things rule out RDS, and we’ll need to go with SQL on EC2.

We’re probably going to get some consulting help, but I want to be as able as I can be to make good judgments about their recommendations.

The first thing I found was this: https://aws.amazon.com/windows/resources/whitepapers/alwayson/ but as I said, I just started looking. Any other recommended reading?

Corey – for personalized guidance on complex systems like 3-4-5 node AGs across multiple data centers, that’s where consulting comes in. You can’t expect an off-the-shelf guide to work well for that.

Thanks!

If you want to be sure you have researched the option, you should also look at Azure Virtual Machines for hosting SQL Server. There is lost of info available on running SQL in Azure VMs, including HA/DR scenarios, For example:

https://docs.microsoft.com/en-us/azure/virtual-machines/virtual-machines-windows-sql-server-iaas-overview

https://azure.microsoft.com/en-us/services/virtual-machines/sql-server/

https://docs.microsoft.com/en-us/azure/virtual-machines/virtual-machines-windows-sql-high-availability-dr

https://docs.microsoft.com/en-us/azure/virtual-machines/virtual-machines-windows-portal-sql-availability-group-tutorial

https://docs.microsoft.com/en-us/azure/virtual-machines/virtual-machines-windows-portal-sql-alwayson-availability-groups

It is actually possible to be up and running with a full AG deployment in less than an hour using a template, at least as a starting point for exploring and learning. Azure VMs running SQL was not available when this post was originally written, but now it is a very good option.

How did your deployment go? We’ve been running an AWS EC2 Always On Cluster for the past 1 1/2 years and haven’t had any issues with the AWS Environment

Great article.

This relates to building your own SQL env in Ec2.. and not the ready made, AMis with SQL already setup.

Have you checked these out and if so how do they compare with building your own?

Thanks!

I think EC2 nowadays still has a “IOPS credit” , meaning that instance will not provide the claimed IOPS in the beginning.

We had m4.x2large EC2 instance running SQL SERVER 2012 R2. We can see that copying files can reach the claimed speed. However, ETL can only reach to 80Mbps on average.

The claimed IOPS https://aws.amazon.com/ebs/details/ are full of it. They are max value. It does not work for large ETL.

For newest information you have to pay a visit the web and

on web I found this web page as a most excellent web

site for newest updates.discount basketball jerseyshttp://army.clanfm.ru/viewtopic.php?f=1&t=3365https://dpya.org/wiki/index.php/Usuario_discusi%C3%B3n:Baidai66http://ontobedia.hegroup.org/index.php/User_talk:Baidai66

I think the following articles show a lot of progress in terms of the restrictions and possibilities: https://d1.awsstatic.com/whitepapers/best-practices-for-deploying-microsoft-sql-server-on-aws.pdf