When you need SQL Server to handle more load, there’s two big-picture ways to do it:

- Scale up – use a more powerful SQL Server

- Scale out – use more SQL Servers

Quick disclaimer first – before you try either of these solutions, the easiest thing is to circumvent the whole problem – don’t ask SQL Server to handle more load. Tuning your existing queries, schema, and server settings is almost always faster and cheaper than scaling up or scaling out. For today’s discussion, though, I’m going to assume you’ve already tuned the bejeezus out of your existing SQL Server setup and you’re worried about running out of options. Today, we’re going to focus on scaling up.

Scaling up means using a SQL Server with more oomph – more CPU power, more memory, or more storage throughput.

You have to fix the right bottleneck. Don’t go writing checks to hardware vendors without knowing the real thing holding back your server. SQL Server 2005 & newer track the bottlenecks for all queries, all the time, and they’re yours to discover by querying wait stats. Glenn Berry’s diagnostic queries are a useful tool for this job because they’re well-commented – you can run his queries step-by-step, read the comments, and understand what your SQL Server is telling you.

If Memory Is Your Bottleneck

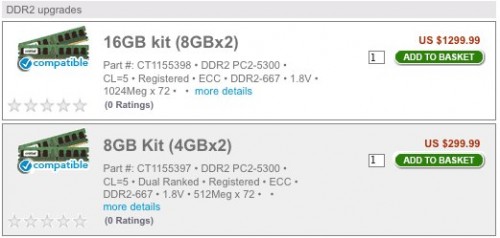

If your bottleneck is memory, the good news is that memory’s easy to scale. Scaling up memory is one of the easier scaling solutions because in most cases, your boss didn’t max out the memory when he originally bought the server. The max amount of memory is usually leaps and bounds more expensive than the second-highest amount of memory. Take these memory upgrades for an HP DL785 server from Crucial:

16GB of memory is $1,300, and 8GB is $300. To double your memory, you have to spend four times as much. The good news is that memory prices drop over time, so by the time a server is a year old, it’s often more cost-effective to go back and double the memory. Unfortunately, the older a server gets, the reverse happens – it’s harder to find warranted memory for a long-out-of-production server.

If you can fit your entire database in memory, plus space for sorts & joins, you can get away with a cheaper IO subsystem. This is the approach StackOverflow takes with their database servers. The challenge is that the moment your data stops fitting in memory, performance falls off a cliff. Queries that used to fly through memory suddenly crawl because they have to fetch data off disk, which is exponentially slower than moving data around in memory. You have to stay keenly aware of your memory metrics and be willing to write checks to support new database tables and indexes, adding memory as you need space for data.

Every server has a maximum amount of memory that it can handle. Once you hit that max, you have to upgrade to a newer server, typically one with more memory slots. Physically small servers (like 1U rack mount pizza boxes) have a limited amount of real estate devoted to memory slots. Larger servers have more space for memory slots – but they also have more CPU sockets as well, and that’s where even more money comes in.

If CPU Power Is Your Bottleneck

If your bottleneck is CPU power, brace yourself to write big checks. Scaling up works fairly well from one CPU socket to the four-socket tier, but once you break past four CPU sockets, you’ll be spending significantly more on hardware. Getting an eight-CPU-socket server is usually much more than twice as expensive as a four-CPU-socket server.

CPU scaling challenges have these phases:

- Replace the existing server with one having the same number of sockets, but more modern processors. Thanks to Intel and AMD pumping out the jams, we can usually get dramatically faster CPUs every year with more cores.

- Switch from a 2-socket server to a 4-socket server. At this jump, we double our licensing costs, but we’re still using commodity off-the-rack hardware.

- Switch from a 4-socket server to an 8-socket server. Suddenly, the pricetags get serious. 8-socket servers are enterprise-only. Thankfully, though, we can still use SQL Server Enterprise Edition.

- Switch from an 8-socket server to a monster. Now we’re in the price tag tier where server manufacturers say, “Please call for details.” It gets worse, though – starting with SQL Server 2008 R2, database servers with more than eight CPU sockets require SQL Server Datacenter Edition at roughly $60,000 per socket. That’s half a million dollars just for the SQL Server license.

The first few steps are easy, but we have to figure out a solution before we get to phase 4. Thankfully, the multitudes of zeroes involved make it easier for managers to justify fixing bad code. If we have to choose between buying a Datacenter Edition class server or hiring a couple more programmers to fix the code, sometimes it’s easier to do the latter. (This is often where I come in – managers are out of options before they have to write a very, very large check, and a week of me performance tuning can help avoid a seven-figure purchase.) Speaking of seven-figure purchases….

If Storage Is Your Bottleneck

When dealing with a server that only has local storage (not a Storage Area Network), scaling storage throughput is the opposite of scaling memory. Your boss probably loaded the server up with storage throughput already. He might have gotten cheap with the quantity (meaning, bought 146GB drives instead of 300GB drives) but in most cases, swapping in drives twice as big isn’t going to make the server twice as fast. There are some niche cases where we can isolate a poorly designed database to its own drives, but that’s the exception rather than the rule.

If all of the server’s drive bays are filled up, there are three solutions to improve storage throughput:

- Add storage that bypasses the drive bays altogether – like Fusion-IO or OCZ solid state drives on PCI Express cards

- Swap out the storage for solid state drives – like Intel X-25 consumer drives as StackOverflow is doing

- Reduce the amount of storage throughput required – by tuning indexes, queries, or procedural code

Notice how that last one keeps sneaking in? Over and over during scaling exercises, the cheapest answer is manpower. It’s so often safer to make code improvements rather than introduce new pieces of hardware or software into the mix. As a DBA, whenever I did performance tuning, I made sure to point out to my management that my work directly saved us from buying more hardware. Sometimes I would even avoid performance tuning altogether until the project managers and developers asked for my help. They’d say, “Brent! Our CPU use on the SQL Server is constantly high! What should we do?” That gave me the ability to answer with a choice: they could either spend $X upgrading to new hardware, or they could give me two weeks of uninterrupted time to tune the application. After those kinds of interactions, managers understood that my time really was worth money, and it helped to free up my agenda to do what I love most – performance tuning.

30 Comments. Leave new

>If you can fit your entire database in memory

Are we talking ‘enough memory to fit the whole physical MDF file into’ here e.g. your MDF is 6Gb, so you’ll be needing 8Gb of memory, or a different figure?

>plus space for sorts & joins

Is there a rule of thumb for how much space you’ll want to leave for this?

Phil – about the memory for the data, it depends on how full the MDF is. If it’s 100% full, then yep, you’d want 6GB of buffer pool space at the bare minimum to fit the database in memory. For a general guesstimate, I’d tack on another 4GB there for the OS and SQL Server plus any other services that might run, plus for those bozos who RDP into the server and start something like SSMS or VS.

For the space for sorts & joins, no, there’s not a rule of thumb. It depends on things like tempdb object needs and the types of queries you’re running.

Hope that helps!

Brent

@Brent

When it comes to the size of the memory vs the MDF, Nick Craver brought an interesting idea that I am wondering if you can verify. He was saying (hopefully I am repeating this correctly — and I can’t remember which SQL/Windows versions he was talking about) that In order to avoid data going over the QPI some memory will be duplicated in multiple processor systems. So for instance if each CPU has 48GB of RAM some parts of the MDF will exist in the 48 GB for CPU A and are then duplicated for CPU B. Do you know if this is true? If that is the case it seems it would be pretty hard to properly size memory based on the MDF.

-Kyle

Yep, that is indeed true! That’s for systems with multiple NUMA nodes. I’m jumping into a client call, but you can learn more about it in our book, Professional SQL Server 2008 Internals and Troubleshooting. 😀 Buy yours today….

Yikes. Suddenly my 8Gb two-processor server feels a bit under-powered!

Thanks Brent!

Thanks for the detailed post on this subject. I believe your emphasize on doing a proper tuning by adding proper man power before rushing to buy additional hardware will be a great advice and cost saver for many business. (Because your opinion really counts 🙂 )

If memory or storage is causing the bottleneck, are there cases where it might be smarter to switch to cloud storage than to upgrade?

Amazing what a re-review of the basics can uncover. Great article! Looking forward to the Scale Out followup.

Wow, I gotta say Brent, this is a great post.

What may not be clear to the casual reader is that there is an awful lot of valuable information here, based on years of in the field experience, being presented in a VERY concise and easily consumable fashion.

Nicely done sir.

Thanks, sir!

Just a quick note – if you do any sort of “multi-tenant” type model where multiple customers share one DB, I’d seriously recommend either looking into Azure or start investigating the “scale out” model sooner rather than later. Trying to just scale up in those cases can be painful. I really look forward to reading the scale out part and would love to see if you have some advice for starting a scale-out strategy. I know that you can get all sorts of fun when dealing with SQL < 2008 and getting a query plan cached that might work well for one customer, but not all. 🙂

It does seem like performance tuning always seems to sneak its way into these sorts of discussions, though. Amazing how that happens. Who would ever think that this could be a root cause of problems?

I agree with everything you say here but I continually run into the problem of not enough developers to fix the bad code. Additionally the argument is that it’s too risky to touch it so we continually just get more hardware. I guess it’s the nature of having all the hardware I could possibly need in the warehouse next door.

That’s where you really need someone to step in and say “it’s time to address these issues”. It may not be often, but eventually someone needs to realize that if it’s risky to touch all that code, you have a much larger problem that will eventually bite you. We have similar problems here, but people have been stepping up and saying – “Let’s do something about this legacy code” so we can move forward. We still have a lot of legacy code, but we’re chipping away at it instead of using it as an excuse.

I’m kind of surprised that nobody is asking whether or not you’ve tried refactoring rather than buying more hardware. Eventually you’ll run into problems that more hardware won’t solve and by that time that legacy code will likely be an even bigger problem. Besides, even if you have all of the hardware you need in the warehouse, what about the costs of running that hardware? It would seem that these costs would increase at some point.

We started addressing it by looking at poorly performing or costly queries – either queries that ran for a long time or cumulatively ate up a lot of server resources. From there, we can look at the code to see if it might be a somewhat straightforward refactor of the query or checked to see if an index would help. Additionally, check out pages that make multiple calls to the DB instead of working in a set. We found some older code doing this and could replace it with a set-based solution that saved SQL and app server resources. It also improved the speed quite a bit. 🙂

Peter – when you say, “I’m kind of surprised that nobody is asking whether or not you’ve tried refactoring rather than buying more hardware” – that’s what I mean in the very first section of the blog post that begins with, “Quick disclaimer first.” Is that what you were looking for?

Pretty much. Even in our organization where it is often easier to upgrade hardware than dig into legacy code, we ask the question whether it makes more sense to “get us by” or “do it right”. (or at least differently)

I think that your thoughts did prompt that response, but I know we were discussing some things around IO recently and as much as we know that getting a SAN full of SSDs would help us immensely, it’s not really the solution to our problems. It would mask the current problems and cost a small fortune. Thus, we turn to refactoring and tuning our code.

Technical debt is one of the biggest problems I’ve encountered in my career. On a previous job, we said “enough is enough” and lobbied for time to identify the parts of the application and database that were causing code problems because of technical debt that we’d never paid down. We figured out how long it would take to fix and put together a cost benefit analysis for each one. It’s amazing how easy it was to get approval to drop all new development for two weeks and fix old problems once we could say “fixing the old problems will save us over a month of work this year alone AND will gain some performance estimates around X%”. Once we were done, we were able to keep prioritizing more technical debt reduction in every iteration. It was a great feeling and it made working with the application and database code a lot nicer.

Refactoring and removing technical debt can save you a ton of time in the long run. Avoiding technical debt in the first place is, of course, much better.

Good article. We adjusted the Virtual Memory yesterday from 2gb to 24gb per an article that stated VM should be 1.5 x physical memory (16gb in our case for SSRS server). Would you concur?

We also moved the MDF file for the ReportServerTempDB to an isolated drive per the same article.

Will monitor server for increased performance…

Jon – thanks! About the virtual memory – I don’t work with SSRS, so I couldn’t say. When the engine’s by itself with no other services, I only use 2GB – the amount Windows needs to write a minidump file. SQL Server shouldn’t be paging to disk if it’s configured correctly. Plus, these days with servers having 128-256GB of memory, a page file that big isn’t practical.

Brent: I totally agree with you in principle. Spend the time finding the long-running queries, missing indexes, etc…that are slowing you down. However, most managers I have worked for want the problem solved immediately, and happily throw hardware at the problem. In their eyes, its cheaper and faster to add a few RAM sticks than to spend two man-weeks fixing the problem.

Jeff – that’s an interesting question. In physical servers, I don’t usually find it faster to “add a few RAM sticks”. Adding memory generally causes a server outage because we have to shut most servers down in order to add more memory. That has to be coordinated through change control processes, and generally can’t happen during business hours. Furthermore, if the table’s still too big to fit in memory, it doesn’t speed anything up.

Index changes, on the other hand, can be done earlier, without taking the server down, especially if you’re using Enterprise Edition with its online index processes.

Again, totally agree. My experience has been that managers are willing to trade off ~15 minutes of downtime to add RAM sticks against hours of poor performance for end-users. Even if it does not solve the problem, it seem to make them feel better about it.

Jeff – I see. I usually say it this way to managers: “You go ahead and get with IT to find out about adding more memory, because that’s a business issue (money and downtime), not a technical issue. I’ll start working on fixing indexes. Let’s regroup in two hours and see what kind of progress we made.” That way I’m out of the loop on the things I can’t fix (money and downtime politics) and I get to just concentrate on the things I can fix. It’s pretty rare that I don’t win that battle, but then I do a lot of index tuning so that’s usually easier for me to do quickly.

I’ll add to what Brent has to say here. Even if you have the money, what is the procurement process? You generally are not going to go to Fry’s or Microcenter to get this stuff. You may have to go through a PO process, etc. Once you get the part you can finally even think to start to schedule the outage. Immediate fix? Hardly.

Is that server racked where you can’t slide it out? Not a 15 minute fix.

Plus, on very large systems, they don’t reboot in 2 minutes.

As Jeremiah so elequently put, at some point technical debt isn’t worth it. It’s better to fix the problem instead of trying to keep putting band aids on it.

We’ve had to make these decisions lately and we opted for the beefier hardware route, mainly because the SQL instances that were problematic hosted databases that support vendor applications. We found we have very little leverage with a few vendors in particular to force them to make schema changes, etc. that would improve performance. On the plus side we’ve managed to isolate the worst offenders to a single instance and now that we’ve upgraded hardware we won’t budge on adding more when the performance issues invariably reappear!

Brent, you said “Tuning your existing queries, schema, and server settings is almost always faster and cheaper than scaling up or scaling out”

Some serious DBAs take 100 dollars per hour to do the above….. if it takes them 50 hours to tune everything , it is not cheaper than scaling up…imagine the scenario that the GURU DBA tunes everything and we still have performance problems.

what will be his advice?

“Buy more/faster cpu,memory,hard disk”

Obviously he will still get his fee on top of the hardware expenses….

Even throwing 50 hours of time at $100/hr at the problem, you’re still at $5000. That’s a pretty cheap server chassis. When you start adding in ECC RAM, OS licensing, and SQL Server licensing, that $5000 for 50 hours of time starts to look pretty cheap.

When you dive deeper into scaling issues and the problem turns out to be physical I/O, $5000 starts to look like an incredibly cheap price tag. SANs aren’t cheap. While the cost of SANs has dropped in recent years, it certainly hasn’t dropped to a point where a week of an expert’s time is cheaper than sizing, buying, and provisioning entirely new gear.

Scaling out has its own set of costs – now you have to pay for twice as many OS and database licenses. If you’re attaching dedicated storage to each of your SQL Servers, that’s more money you need to spend there, too. Your network costs will increase and you may end up putting fiber channel or Infiniband gear in your data center to decrease the time it takes to ship data around between different nodes in your scale out cluster.

The point isn’t necessarily that you should hire someone to tune your databases, but that you should exhaust the options of tuning your existing set up before you look into scaling up or out. A scale out system with a lot of problems is still a system with a lot of problems; you’ve just spread them around.

As of Mar 7, 2013, the 16GB kit (8GBx2)* is $821.99 from Crucial. That’s a $478 price drop in 2 years.

* DDR2 PC2-5300 • CL=5 • Registered • ECC • DDR2-667 • 1.8V • 1024Meg x 72

Source: http://www.crucial.com/store/listmodule/DDR2/list.html.

It’s actually much cheaper than that – you can buy current modules much cheaper. Sample – 32GB for under $500:

http://www.crucial.com/store/mpartspecs.aspx?mtbpoid=B54D4175A5CA7304