All databases are not created equal.

Over the last couple of years, IT management pressured database administrators to cut hardware costs and licensing costs. We had a few choices:

- Consolidation – take databases that used to live on separate SQL Server instances and merge them onto one

- Instance stacking – install multiple SQL Server instances onto less operating systems

- Virtualization – keep the SQL Server instances separate, but merge their OS’s onto less hosts by turning them into virtual servers

All of these have their pros and cons. The bottom line, though, is that all of them end up with multiple databases sharing less physical hardware. The more databases we have on a server – whether virtual or physical – the more we have to monitor the resource utilization of each database. SQL Server doesn’t know which databases are more valuable to the business, or if one database is supposed to be more active than other databases.

When Tuning, Look for Greedy Databases

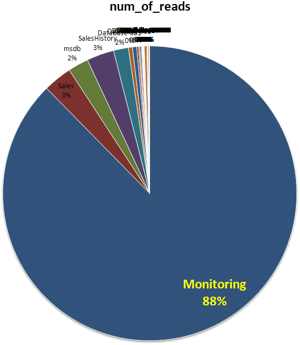

When I start tuning a server with more than a handful of databases, I like running sp_BlitzFirst @SinceStartup = 1. It lists each database file and the basic IO stats like number of reads & writes, size of reads & writes, and IO stalls. I don’t look for specific thresholds, but rather an imbalance – is one database dominating the IO?

If I see one database absolutely dwarfing the read/write statistics of other databases, I bring that to the attention of the server manager. To drive the point home, I copy the query output into Excel and whip up a quick graph. In the example shown here, one particular database was responsible for 88% of the reads on the server – more than all of the other >80 databases combined.

I’ve changed the database names to protect the innocent, but the one database was a enterprise-wide monitoring program’s repository (and no, it wasn’t a Quest product.) While that database did have a legit business value, it didn’t justify draining the server’s resources. In comparison, the Sales and SalesHistory databases (not really their names, but renamed for illustration) only used around 3% each. Those two databases had a vastly higher business value, and they were suffering due to the monitoring application.

The IT team had done the right thing by consolidating databases down to less servers, and when they needed a home for the new monitoring software repository, they used a shared instance. At first everything went fine, but over time the repository’s needs dwarfed even the mission-critical sales application.

The problem turned into more than just storage; since the monitoring app was doing a stunning amount of reads, it was pushing memory out of SQL Server’s buffers. The page life expectancy metric – the length of time data stays in the buffer cache – was averaging under 10 seconds. For reference, Microsoft recommends this metric be 180 seconds (3 minutes) or higher. Clearly, we were running into a problem with this database.

So how do we mitigate it? In my Perfmon & Profiler 101 presentation, I talk about defining “mitigations”, not fixes, because sometimes we can only afford to duct tape the problem.

Option 1: Fix the Database

Ideally, I’d pop the hood on the database, rework the indexing strategy, and help improve the performance of the queries. However, this was a third-party application with a sealed hood – no query changes here.

We could build a list of recommended changes for the vendor, but you know how those vendors are. They never listen to anybody, do they? <sigh> I know, I know.

Option 2: Move the Database to a Standalone Server

Since the database was a non-mission-critical app, we could build a new SQL Server – preferably a Standard Edition box (as opposed to the pricey Enterprise Edition box it was sharing) with cheap storage. No matter how much money that we’d throw at the hardware, the application still wouldn’t perform well, so it wouldn’t make sense to buy an expensive box.

We could also move it to a new virtual server and use our hypervisor’s resource throttling to minimize the impact on other servers. Some hypervisors can set a “max” amount for CPU, memory and storage throughput for a given virtual server.

Option 3: Move the Database to a New Instance

We could install a separate instance of SQL Server onto this existing server and set its max memory to just a couple of gigabytes. That way, it would only penalize itself for bad performance. We should also use a separate drive array for this database so that its IO performance wouldn’t take down other databases.

Unfortunately, since this server was on the SAN, this database’s IO would still be traveling over the same Host Bus Adapters (HBAs) that the other databases used to access their data. Depending on the quantity of IO, this could still present a bottleneck.

Option 4: Throw Money at the Shared Server

We could upgrade the bejeezus out of the memory and storage used by the SQL Server. This would speed up the monitoring application along with the other applications on the server. However, since the monitoring app ran 24/7, the other apps would never really see the full benefit of the additional hardware.

Which Option is Right for You?

Making the right choice requires knowing the business value of the resource-intensive database. If you don’t know what the database is used for, email your management with a copy of your pie chart and let them decide.

In this particular case, the client chose to move the database to its own server. The results speak for themselves – the server did 93% less reads per second on the data drive, meaning less IO saturation:

And page life expectancy, the length of time data stays in memory after it’s read off the disk, improved almost 100x:

The server was suddenly more responsive, more usable, and the mission-critical applications started flying again. Mr. Nastydatabase was confined to his own sandbox, hurting no one else but himself until he could learn to play well with others.

9 Comments. Leave new

Brent, many thanks for this insight, I will be reviewing the results across all of my servers very soon. Interestingly, on the first server I have executed the script from Louis Davidsons blog and my tempdb is high up – not the top one but in the top 5 for reads and writes.

I dont have an option to move that one off the server!!

Am I right in thinking that I need to look to the other databases spilling to HDD for queries etc?

We dont have performance issues – that is, the systems are doing as well as they ever have (but maybe they can go faster …), I’m simply aiming to benchmark at the moment but wondered if I need to take action now I have found this?

cheers

Jonathan

You don’t necessarily need to move it off the server – but you can start by putting it on separate spindles from the rest of your databases. You’re exactly right in that you want to check for any other databases on those same spindles, and just get ’em off so they don’t suffer.

Thanks,

What is your gut reaction that its TempDB that high across all num_reads, num_writes, bytes_read, bytes_written?

Would a busy (50 ish database) server always have such a busy TempDB or are we seeing a lot of applications that are poorly optimised?

Should I expect TempDB to be high on the other server too?

I agree with Brent here, you really need to move tempdb onto its own set of spindles. We are in a similar situation where I work, tempdb is about 50% of all the IO on the server so we are moving it onto solid state disks freeing up our main drive arrays and boosting throughput in tempdb dramatically.

I’d look for code that uses temp tables, if you are on 2005 if you are using snapshot isolation levels. Also sorts, group by’s and other ops use tempdb quite a bit. If this was a OLTP server with high tempdb usage I’d start digging into it. If it is a OLAP maybe they are using tempdb to spool data as it is going through the ETL process.

If it is a shrink rap app that you don’t have any control over moving it onto its own server may help as well. I had to deal with a billing application that created 3 temp tables every time someone logged into the system, we had 1600 people logging in and out all day, on a SQL Server 2000 installation. Needless to say, moving tempdb onto its own disks made a huge difference in performance.

Unless you are dealing with a vendor that has a “touch my database and you’ll void your support contract” mentality (MS Sharepoint, anyone??), I’m surprised you dismissed “Option 1: Fix the Database” so quickly.

Even if you can’t touch their code (I’d prefer not to even if I could), there are still several fairly “safe” ways to improve performance without much chance of problems:

1. Index tuning, especially covering indexes for common queries.

2. Plan Guides. Allows you to change how a query will execute, without having access to change the original query.

Testing and documentation are important here, especially when it comes time to apply vendor upgrades/patches.

Your changes could be reverted the next time an upgrade patch is run, or in extreme cases, your changes may cause the vendor’s patch to fail. In that case, you just make a “UNDO/REDO” script than can revert all your changes so you can safely apply the vendor’s upgrade.

In my experience, there are quite a few vendors out there that give you an “adequate” database out-of-the-box for “normal sized” installations, but when that particular installation starts to grow past a certain point, it really takes an experienced DBA to tune the database properly for best performance.

Ouch – I disagree strongly with the suggestions of index tuning and plan guides.

For index tuning, the problem I’ve seen (disturbingly often) is that 3rd party apps will come out with an upgrade, and that upgrade script will include several database tweaks. The tweaks assume that the database is exactly as the vendor installed it, down to index names. If you change an existing index, or add one with a name that the vendor later decides to add, the vendor’s upgrade package can explode. Guess who gets the blame? The DBA. I’ve also seen vendors flat-out refuse to support databases when they’ve noticed schema changes done by the client’s DBA.

For plan guides, this is a great idea – but only when you can focus on the database often. Every single time the vendor comes out with a new version with better indexes or changed queries, you have to devote DBA attention to your plan guides. I have a motto that I never want to put something into place that requires me to come back to it regularly. If I put in more than one solution like that, I’m basically backing myself into a corner, allocating my future time every time I implement a solution like that. I’ll only implement solutions that work without my intervention.

You’re completely right that these solutions work – but you have to make sure that you’re willing to marry yourself to your fixes and babysit what you’ve produced.

I’ll certainly agree that it isn’t ideal, but sometimes its necessary.

One specific vendor I have a lot of experience with (*cough*SageSoftware*cough*) seemed to provide barely-adequate indexes in their out-of-the-box product anyway, plus their software was so customizable that any larger install would need some production DBAs to look at all the custom tables/joins/fields and make sure they weren’t going to cause problems.

More of a combination DBA/application support role than a pure DBA, actually.

Brad, I havent dismissed any options. What I was surprised at was the appearance of TempDB on a server that I thought was doing OK in terms of performance and I wondered if its the case in general or if I really do have a problem. What I have dismissed is moving TempDB to another server(!).

We have plenty of systems on that server so I think any performance issue is a “death from a 100 cuts” issue rather than one single culprit. This will make it harder for me to locate the quickest win(s).

I am still interested to know what % of activity on the files stored on a server would be the TempDB files – < 20% | 20-40 | 40 – 60 | more ? … ?

Some vendors have said for me to get in there and let them know what indexes work for their application, others have said the polar opposite and we lose support if we change anything. Others are in the middle.

Boy this reminded me of this post, where even within MSDB there is a lack of good indexing strategy.I have read many posts similar to this suggesting adding indexes to the backup tables. Always shows up on my missing index reports and is very distracting.

http://weblogs.sqlteam.com/geoffh/archive/2008/01/21/MSDB-Performance-Tuning.aspx