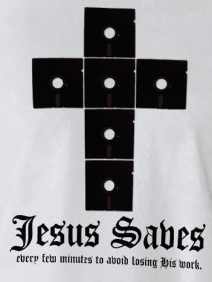

Ran across a funny situation in my test lab, and it’s only funny because it was my test lab.

The bad news: backups started failing a few days ago. One of the databases had a filegroup that wasn’t online, and as my maintenance plan looped through the list of databases, it died when it couldn’t back up that database. Unfortunately, it was going in alphabetical order, and that database started with a B.

The good news: the separate cleanup jobs still worked great. They were dutifully cleaning out any backups older than a few days.

The worst news: database mail had failed – of course, a few days before the backups started failing. My DNS servers in my lab had decided to take the week off, so email wasn’t making it out of my lab. I didn’t get notified about the backup jobs that started to fail.

The cleanup jobs worked better than the backup jobs, and the right hand didn’t know what the left hand was doing. In this particular case, the left hand had been amputated at the wrist. In a perfect world – or at least, in a world where my job depended on this data – the maintenance plan jobs would be interconnected so that they wouldn’t delete backup files if the backup job failed. That perfect world would not be my server lab.

No real data was harmed in the making of this blog post, but times like this remind me of just how hard it is to be a good database administrator, and how easy it is to lose data. Have you tested your restores lately? Do you really think you’ve got something more important to do?

7 Comments. Leave new

Love the shirt reference. and yes, I test my restores on a monthly basis.

This also illustrates the need for success emails as well as failure emails. Had you been receiving “backup was successful” messages and they suddenly stopped, you would have caught the DNS issue earlier. Great post.

Thanks! One of the monitoring systems I use, ServersAlive from Woodstone, has the ability to send an SMTP email every few minutes and then check a POP3 mailbox to make sure it arrived. You can set it up to send the email using your corporate (or in my case, lab) email server, address it to a Google account, and then pick it up via POP3. If it fails, then you know there’s an email problem. I really like that product, but I didn’t have that configured in my lab. Time to go do it!

This is the reason I no longer use a simple “delete anything older than x days” cleanup script. It’s been a bit of a pain, but I wrote perl scripts that ensure there are always X number of old backups, regardless of how old they actually are. Restoring to a week old backup is better than not being able to restore at all.

Oooo, nice. Would you be willing to share that? I could post it as a code example on SQLServerPedia if you want.

Sure. It’s kludgey, but gets the job done. There are probably better / cleaner ways 🙂

Specifically, it relies on a naming convention for the files that it deletes. My backups look like this, with one file for each day:

DB20C-MyDatabase-FullBackup-2009-10-14.zip

DB20C-MyDatabase-TranLogs-2009-10-14.zip

My Linux tar files look similar, so the same script works on both.

backup-web17a-2009-10-14.tar.gz

# .

# . This script prunes the rsync_area directory.

# . It takes arguments:

# . 1. The number of revisions to keep locally.

# . 2. The path to the rsync_area directory.

# . This path will be recursively searched for all files.

# .

use POSIX qw(strftime);

use Socket;

use Sys::Hostname;

# get the computer name

$compname = hostname();

# get the number of revisions

$revs = int(@ARGV[0]);

if ($revs == 0) { die "revs cannot be 0\n"; }

# get the rsync_area path

$rsyncdir = @ARGV[1];

$rsyncdir =~ s/\\/\//g;

# recurse through the rsync_area path

&smash("$rsyncdir");

sub smash {

my $dir = shift;

undef my @subdirs;

undef my @files;

undef my @sortedsubdirs;

undef my @sortedfiles;

opendir DIR, $dir or return;

while ($item = readdir(DIR)) {

if (-d "$dir/$item") {

if ($item !~ /^\.\.?$/) {

push @subdirs, "$dir/$item";

}

} else {

push @files, "$dir/$item";

}

}

@sortedfiles = sort { $b cmp $a } @files;

@sortedsubdirs = sort { $b cmp $a } @subdirs;

$lastfile = "";

foreach $file (@sortedfiles) {

# an ugly regexp that shoudl probably be tuned.

if ($file =~ /^(.*)\d\d\d\d-\d\d-\d\d\....(\.gz)?$/) {

if ($1 ne $lastfile) {

# new filename, start the count over and reset $lastfile

print " new file: $file\n";

$count = 1;

$lastfile = $1;

} else {

# still the same file

$count++;

if ($count > $revs) {

print " delete file: $file\n";

unlink $file;

} else {

print "ignore file: $file\n";

}

}

}

}

foreach $dir (@sortedsubdirs) {

print "processing subdir: $dir\n";

&smash($dir);

}

}

Call the script like so:

perl backup_area_clean.pl 3 \\backupserver\sqlbackups

would keep 3 copies of each database/tranlog backup archive. I usually pipe the output of this script to a log file for debugging purposes.