Last week, I blogged about the basics of SQL Server index fragmentation: why it happens, how to fix it, and how often people are fixing it. I left you with a cliffhanger: it seemed that the frequency of defrag jobs didn’t appear to affect fragmentation levels:

- Databases with no index defragmentation were an average of 5% fragmented

- Monthly – 17% fragmented

- Weekly – 3% fragmented

- Daily – 6% fragmented

At first glance, that would seem to indicate that your database got worse off if you defragmented! But like all good novels (and most bad ones), the plot thickens.

Enter Data Mining with Excel and SQL Server

Data mining is a lot like diamond mining, only there’s no monopoly on the market, and the ladies don’t seem to appreciate a quality KPI. Otherwise, they’re identical: there’s a whole lot of money in it, but that money doesn’t usually go to the people who do the actual mining. It goes to the executives and salespeople who take advantage of the mined products to make better decisions.

The people doing the mining, on the other hand, are forced to spend their lives in tiny, dark caves (or “cubicles”) trying to extract beautiful gems (or “data”) while risking painful lung ailments (or “carpal tunnel”) due to toiling with terribly unsafe and outdated hardware (or “hardware”).

For today’s demo, I will be the miner, and you’ll be the executive who takes advantage of my work. (It’s okay, I’m used to it – I work for a vendor now.)

In my podcast Data Mining with Excel in Four Minutes, I explained how to set up Microsoft’s free data mining add-ins for Excel 2007. It’s an Excel plugin that hooks up to any SQL Server Analysis Services server on your network, either SQL Server 2005 or 2008, and makes data mining a point-and-click affair. It doesn’t require high-end horsepower – even a desktop or laptop works great for this. If you can’t be bothered to set up an SSAS instance, then check out my Data Mining in the Cloud writeup on how to get started without using a server at all.

Help SSAS Help You: Explain Your Numbers

While data mining is really easy to set up, you can get much better results if you “prequalify” your data and turn some of the numbers into basic categories.

If I was working with United States salary data, for example, my source data might have a column for Hourly Wage. I would add another column and call it Tipped Employees:

- Under $6.55 per hour – Tipped Employees = Yes. You can pay someone less than minimum wage if they get tips, and in that case, you really just can’t go by their hourly wage alone.

- $6.55 per hour and over – Tipped Employees = “Unknown.” In a perfect world, I’d have enough data to find out if these people get tips, but that’s not always the case.

By adding a new attribute to my data, something that’s not clear from the numbers alone, I might get better insight from my data mining efforts.

By the way, if you’re reading this and it’s after July 2009, the minimum wage has risen to $7.25 per hour. If you’re a VB developer, you should immediately ask for a pay increase to match the new standard – unless of course they’ve got a tip jar by your desk.

Explaining Our Index Fragmentation Numbers

In the case of our index fragmentation numbers, one of the source data fields is Page Count – the number of pages that an object has. Size matters with fragmentation: small objects with only a handful of pages may appear to have very high fragmentation numbers, but they can’t actually be defragmented. There’s only so much defragmentation you can do when a table only has three pages. I’ve actually been on support escalation calls where customers demand to know why a defrag job doesn’t reduce all types of fragmentation to absolute zero, even for tables with just one page.

Microsoft’s best practices on SQL Server 2000 index defragmentation notes that:

“Generally, you should not be concerned with fragmentation levels of indexes with less than 1,000 pages. In the tests, indexes containing more than 10,000 pages realized performance gains, with the biggest gains on indexes with significantly more pages (greater than 50,000 pages).”

With that in mind, I added a Page Count Group column and calculated it with a formula:

|

1 |

=IF(Table1[[#This Row],[page_count]]>50000,"Large",(IF(Table1[[#This Row],[page_count]]<10000,"Small","Medium"))) |

That adds a text label for Small, Medium or Large depending on the size of the table.

Suddenly, The Data Makes More Sense

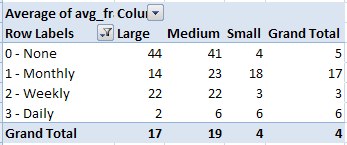

Even before doing data mining, if we just add a Pivot Table, we can suddenly make more sense out of the numbers.

For Large tables, we see an average 44% fragmentation when the database has no defragmentation jobs set up. Monthly defrag drops that to 14%, and daily drops it to just 2%! The Weekly data is a bit of an outlier here, but it’s still less than no defrag jobs at all, so we’ll have to dig deeper.

For Medium tables, we see the type of data distribution we would hope for: the more often we defrag, the lower our fragmentation gets.

For Small tables, the data is all over the place, but we know why: it has to do with the way smaller tables behave.

Adding this bit of human interpretation helped us get better results from our data – and we haven’t even started mining!

Learning More About SQL Server Data Storage

Want to learn more? We’ve got video training explaining it! In our free 90 minute video series How to Think Like the SQL Server Engine, you’ll learn:

- The differences between clustered and nonclustered indexes

- How (and when) to make a covering index

- The basics of execution plans

- What determines sargability

- How SQL Server estimates query memory requirements

- What parameter sniffing means, and why it’s not always helpful

11 Comments. Leave new

Brent,

Great post and series! Looking forward to part 3.

The small / medium / large categories helps put priority and focus where it is needed most – kind of like "don't waste your time" / "look a little closer" / "really pay attention!".

While I understand the stated index size thresholds of 10,000 pages and 50,000 pages (and that page count is the metric which was collected), it may also be helpful to offer a relative perspective by stating these thresholds for reference purposes as storage capacity metrics of about 80 MB (10,000 pages x 8 KB page size) and about 400 MB (50,000 pages x 8 KB page size). The "about" caveat depends on whether your preferred storage metric unit measures are decimal (MB = 1,000,000 bytes or 10^6 bytes) or binary (MB = 1,048,576 bytes or 2^20 bytes) – roughly a 5% difference for MB and 7.3% for GB.

Scott R.

Brent, does this scale down at all?

I have 3 productions servers. Only one database has indexes that are over 10000 pages, only 3 others have indexes over 1000 pages.

I have scheduled jobs that defrag indexes on the main databases (HR, Accounts, CRM etc) as I know they cause slowdowns if they are not kept neat and tidy.

Doesnt the frag %age have a vote in whether the index should be rebuilt/reorganised? Or does a 500 page index that is 90% fragged not cause the problems that a 5000 page index that is 2% fragged …

Jonathan

Jonathan – everything is “Your mileage may vary.” Depends on the random speed of the drives, the read vs write mix of the data, the frequency that the data is queried, etc. My approach is to start with Michelle Ufford’s excellent index defrag script, set it with very high thresholds (like only rebuild indexes when things are extremely fragmented) and then see how long it takes to run. Then I turn it down lower and lower over time so that I defrag as much as possible in as little time as possible. Whatever performance gains I can get from it, great, but I don’t go to the individual table to look at fragmentation vs size vs read/write mix and so on.

Scenario: 200GB database, 24×7, 2005 std edition, can’t use rebuild-online.

using the sys.dm_db_index_physical_stats in “limited” mode reveals high index fragmentation on index_level=0.

using the sys.dm_db_index_physical_stats in “detailed” mode reveals very HIGH index fragmentation on index_level 0, 1, & 2. 3 is zero

after re-organize indexes, the index_level 0 AVG decreases significantly but index_level 1 & 2 AVG remains very high

Please help me understand what is the index_level?

Padre – you can read about that in Books Online here: http://msdn.microsoft.com/en-us/library/ms188917.aspx Do a control-F to find the index_level explanation.

Hi.

Thanks for a great article. Oldie but goodie, right?

2 questions.

1.. Does the less than 1000 pages guideline apply to server 2008 and up?

2.. Considering the index is covering, am I better off having no index than having a 99 % fragmented index

Once again thanks for the article.. Your site are my #1 resource for SQL related matters..

Lars:

1. Yes, but it’s just an arbitrary number. It’s not set in stone.

2. It depends on whether your queries are better off with or without the index. The more indexes you have, the more overhead you experience during inserts/updates/deletes. I’d start by going to https://www.brentozar.com/go/scripts and look at the Index Tuning section. Run all four of those queries in order, and watch the videos to understand what the scripts are doing.

With resepect to the following quote from the article:

“At first glance, that would seem to indicate that your database got worse off if you defragmented!”

According to the last 9 months of serious testing on index maintenance, that’s actually true if you use the current “Best Practices” of don’t do anything for less than 10% (logical) fragmentation, REORGANIZE between 10% and 30%, and REBUILD greater 30%. The reason is that REORG actually removes critical free space above where the FILL FACTOR is and that space is critical to reducing “Bad” page splits, WHICH IS THE PRIMARY CAUSE OF LOGICAL AND PHYSICAL (Page Density) FRAGMENTATION. More fragmentation means more frequent index maintenance which means more fragmentation, wash, rinse, repeat. It’s like a bad drug habit… the more you do it, the more you need to do it. The culprit in all of this is REORGANIZE. There’s way more reasons than I can articulate in a single post but, like I said, it actually removes the most critical free space from an index and that causes perpetual page splits.

I’m giving a 2 hour presentation on it all at SQL Saturday #770 in Pittsburgh on the 29th of September, 2018 and it’s still not enough time to cover everything going on with indexes, fragmentation and, in particular, REORGANIZE, which I now refer to as “Death by Defragmentation”. 😉

p.s. The new methods I’m working on actually make Random GUIDS one of the best ways to avoid page splits and even avoids supposedly “good” page splits, which are also bad. In a simulation of inserting 1 row at a time, 10,000 rows per simulated day, for 365 simulated days (3.65 million rows total) on a 123 byte wide Clustered Index, the new method goes over 4 WEEKS with ZERO page splits (not even good ones) at an 80% FILL FACTOR and more than 8 WEEKS at 70%. A less narrow non-clustered index of 24 bytes goes more than 7 WEEKS at 80% FILL FACTOR and more than 11 WEEKS at 70% with ZERO page splits.

Except for the readability problems and the fact that GUIDs live in the 16 byte UNIQUEIDENTIFIER datatype, GUIDs are great for index maintenance and are NOT the maintenance nightmare that everyone doing it the wrong way makes it out to be.

Shifting gears a bit, ever-increasing/unique/narrow/immutable indexes should never need defragging except for one thing… “Expansive Updates”, which cause horrific numbers of page splits and fragmentation, and most people don’t even know they have such a problem. There are methods to fix even that kind of problem, though.

Why on a table with less than a 100 page count but with >50% fragmentation do I have a query that times out and rebuilding the indexes on that table fixes the timeouts if the low page count makes index optimization unimportant? Should you periodically rebuild all indexes even those with a small page count? Is it getting a bad execution plan?

Hi! To learn more about that, check out my Fundamentals of Query Tuning and Mastering Query Tuning classes.