Nodes Offline: Why Your SQL Server VM Can’t Use All Its Virtual Hardware


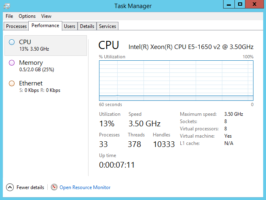

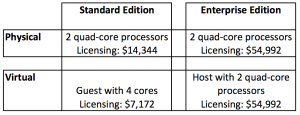

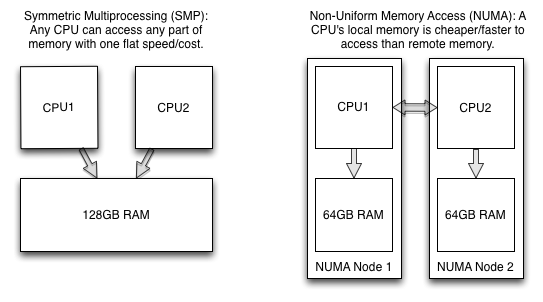

When you install SQL Server Standard or Web Edition in a virtual machine with more than 4 cores, everything can look fine when, in reality, you’re not using anywhere near your server’s potential. These editions have a tricky limitation. Let’s look at the documentation:

Standard and Web Edition Limitations

Here’s…
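To make the socket limit concrete, here is a rough Python sketch. It assumes the compute capacity limits Microsoft documents for SQL Server 2016 and later: Standard uses the lesser of 4 sockets or 24 cores, and Web uses the lesser of 4 sockets or 16 cores. Check the figures for your own version. `VmTopology` and `usable_cores` are hypothetical names for illustration. The model is deliberately simplified: it counts only the first 4 sockets, then applies the core cap, and ignores hyperthreading and NUMA layout.

```python
from dataclasses import dataclass

# Assumed edition limits (SQL Server 2016+): (max sockets, max cores).
# Verify against the documentation for your SQL Server version.
EDITION_LIMITS = {
    "standard": (4, 24),
    "web": (4, 16),
}


@dataclass
class VmTopology:
    """Hypothetical description of how the hypervisor presents vCPUs."""
    sockets: int
    cores_per_socket: int

    @property
    def total_cores(self) -> int:
        return self.sockets * self.cores_per_socket


def usable_cores(vm: VmTopology, edition: str) -> int:
    """Simplified model: only the first max_sockets sockets are used,
    then the edition's core cap applies. Ignores hyperthreading/NUMA."""
    max_sockets, max_cores = EDITION_LIMITS[edition.lower()]
    visible = min(vm.sockets, max_sockets) * vm.cores_per_socket
    return min(visible, max_cores)


if __name__ == "__main__":
    # Same 8 vCPUs, presented three different ways.
    for vm in (VmTopology(8, 1), VmTopology(2, 4), VmTopology(1, 8)):
        print(f"{vm.sockets} socket(s) x {vm.cores_per_socket} core(s): "
              f"{usable_cores(vm, 'standard')} of {vm.total_cores} cores usable")
```

Under these assumptions, an 8-vCPU VM presented as 8 single-core sockets leaves Standard Edition with only 4 usable cores. Presenting the same 8 vCPUs as 2 sockets of 4 cores makes all 8 available.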
