Change Tracking is a developer tool introduced in SQL Server 2008 to help you sync data between SQL Servers, or between SQL Servers and devices. It’s designed to help you answer the request, “I need to get the most recent value for rows that have been modified since I last grabbed data.”

First, I’ll give you the top three reasons people use Change Tracking. Next, I’ll give you queries! If you’re using Change Tracking, these will help you document how it’s configured and measure how big your secret internal tables used by Change Tracking have grown to.

Then we’ll peek into query plans for queries using Change Tracking and talk about some common pitfalls that can impact performance.

Finally, we’ll talk blocking and data consistency. There may be some tradeoffs you need to make to get consistent results out of change tracking.

Why do people use Change Tracking?

Here are the top three reasons that Change Tracking with SQL Server sounds attractive to people:

1. Change Tracking doesn’t send every change over the wire. With a technology like transactional replication, every change that’s made is sent off into replication. If I update a single row a million times, that usually means at least a million “commands” head off into my distribution database. But I don’t always want all of those values. Often, I just want the most recent value, so Change Tracking offers a lightweight solution.

2. Change Tracking doesn’t care what platform your client is running. With a technology like merge replication, all the partners need to run merge replication. With Change Tracking, your client application just needs to come in and talk to a web server. The web server then queries out data that’s changed and sends it back to the client. The client doesn’t need to know a thing about SQL Server. (Clarification: there’s a part of merge replication itself which is also called “Change Tracking”. That’s totally different than what I’m talking about in this post.)

3. Change Tracking is cheap. It works in every edition of SQL Server (Standard Edition, Web Edition, you name it). This isn’t true for all your replication options – Change Data Capture requires Enterprise Edition, for example. Licensing SQL Server can be a huge part of your IT budget, so features that work with Standard Edition are often very attractive.

But as you’ll see in this post, there are tradeoffs, and Change Tracking doesn’t fit every scenario.

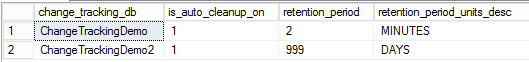

Are you cleaning up, and do you have a retention period set?

Change Tracking isn’t free. As you’ll see up ahead, it creates secret tables in your database. The first thing to find out is where you have change tracking on, if you have cleanup enabled, and what it cleans up. Check that out by running this query against any database on your SQL Server instance:

|

1 2 3 4 5 6 7 8 9 10 11 |

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED; GO SELECT db.name AS change_tracking_db, is_auto_cleanup_on, retention_period, retention_period_units_desc FROM sys.change_tracking_databases ct JOIN sys.databases db on ct.database_id=db.database_id; GO |

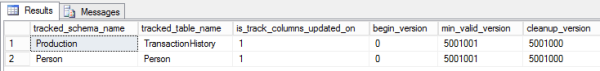

Are the right tables in Change Tracking?

You need to know which tables are being tracked. The more tables you track changes on, the more SQL Server has to hoard in other secret tables, and the more it will have to clean up. (Assuming you’re cleaning up. If you don’t clean up, that could be bad.)

Run this query against every database that has Change Tracking enabled to find out which tables have changes tracked:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED; GO SELECT sc.name as tracked_schema_name, so.name as tracked_table_name, ctt.is_track_columns_updated_on, ctt.begin_version /*when CT was enabled, or table was truncated */, ctt.min_valid_version /*syncing applications should only expect data on or after this version */ , ctt.cleanup_version /*cleanup may have removed data up to this version */ FROM sys.change_tracking_tables AS ctt JOIN sys.objects AS so on ctt.[object_id]=so.[object_id] JOIN sys.schemas AS sc on so.schema_id=sc.schema_id; GO |

How many committed transactions show in sys.dm_tran_commit_table?

We’ve looked at which tables you’re tracking and how long you’re retaining changes. But how many changes have been committed in that time period? This is the number committed across all the tables in Change Tracking (so the more tables you’re tracking, the bigger this can get).

|

1 2 3 4 5 6 7 8 |

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED; GO SELECT count(*) AS number_commits, MIN(commit_time) AS minimum_commit_time, MAX(commit_time) AS maximum_commit_time FROM sys.dm_tran_commit_table GO |

How big are your hidden Change Tracking tables?

There’s two primary places where Change Tracking keeps data about what’s changed:

- sys.syscommittab – this is the system table behind the sys.dm_tran_commit_table view, which you saw above

- sys.change_tracking_objectid tables – these are per each table tracked.

This query will show you all the internal tables with their size and rowcount:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 |

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED; GO select sct1.name as CT_schema, sot1.name as CT_table, ps1.row_count as CT_rows, ps1.reserved_page_count*8./1024. as CT_reserved_MB, sct2.name as tracked_schema, sot2.name as tracked_name, ps2.row_count as tracked_rows, ps2.reserved_page_count*8./1024. as tracked_base_table_MB, change_tracking_min_valid_version(sot2.object_id) as min_valid_version FROM sys.internal_tables it JOIN sys.objects sot1 on it.object_id=sot1.object_id JOIN sys.schemas AS sct1 on sot1.schema_id=sct1.schema_id JOIN sys.dm_db_partition_stats ps1 on it.object_id = ps1. object_id and ps1.index_id in (0,1) LEFT JOIN sys.objects sot2 on it.parent_object_id=sot2.object_id LEFT JOIN sys.schemas AS sct2 on sot2.schema_id=sct2.schema_id LEFT JOIN sys.dm_db_partition_stats ps2 on sot2.object_id = ps2. object_id and ps2.index_id in (0,1) WHERE it.internal_type IN (209, 210); GO |

Change Tracking Cleanup: No, you’re not crazy

We’ve just looked at two things that might not completely line up: your minimum commit time, and your cleanup/retention period.

If these don’t match, the root cause might be a few things:

- You’ve hit a bug in SQL Server 2008 and cleanup just isn’t running. See KB 973696, “FIX: Change tracking cleanup does not clean up the sys.syscommittab system table in SQL Server 2008”

- The Auto Cleanup Thread hasn’t run yet. This is a background task. I have observed that if I change the retention period on an existing database with change tracking it can take quite a while for the CHANGE_TRACKING_MIN_VALID_VERSION to update for that table. It takes about 30 minutes. The best documentation I can find for this is the comment from Jan 5, 2010 this Microsoft Connect Item.

If you adjust cleanup thresholds and you don’t see cleanup happening right away, you’re not crazy.

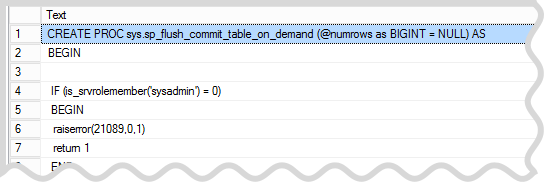

sys.sp_flush_commit_table_on_demand

I bet I know what you’re thinking right now. “How do I make cleanup run when I want it to?”

Well, I can’t give you a documented way to do it. However, Nic Cain will show you how to use the undocumented sys.sp_flush_commit_table_on_demand procedure. Nic has also written on some limitations that he found in Change Tracking cleanup here, which might require you to run cleanup manually if you have a lot of changes flowing through your system.

This is undocumented, so test carefully and use it at your own risk. You can review the code it runs by running:

|

1 2 |

exec sp_helptext 'sys.sp_flush_commit_table_on_demand'; GO |

As Nic warns, your manual cleanup could conflict with automatic cleanup. So if you choose to use this undocumented procedure, you may need to disable auto-cleanup for a time period. That requires running an ALTER DATABASE command, which might impact your execution plan cache and will require its own locks.

Note: I have not seen this automatically start cleaning up rows immediately after I change the retention period on a restored database. The cleanup procedure relies on results from the hidden internal functions change_tracking_hardened_cleanup_version() and safe_cleanup_version(), and I have not found a way to force updating the value used by that function on SQL Server 2012 SP1, I’ve had to wait 30 minutes like I mention above.

So again, even using this procedure, you might at times wonder if you’re crazy, or if Change Tracking is haunted. Be patient, be careful, and write some lightweight code to monitor and alert you if those internal tables get too big for your tastes.

Is your code using the CHANGE_TRACKING_MIN_VALID_VERSION function?

Now that you know if cleanup is turned on and how it’s working, check if your code is calling CHANGE_TRACKING_MIN_VALID_VERSION() at any point.

This is important to make sure the data you’re pulling is correct. Let’s say I’m using Change Tracking to move data to a secondary SQL Server. Normally it polls data every hour, but there’s a hardware problem and my secondary server is offline for 3 days. The next time it polls, it needs to make sure that all the data it requires is still on the publishing server.

This means your clients need to store off the highest version they pull down, and check it against CHANGE_TRACKING_MIN_VALID_VERSION() each time they poll.

Your client may also need to use a special isolation level called SNAPSHOT. (More on this soon!)

How are you querying with CHANGETABLE?

Here’s an example of the columns you can work with when querying:

|

1 2 3 4 5 6 7 8 9 |

SELECT p.FirstName, p.MiddleName, p.LastName, c.SYS_CHANGE_VERSION, CHANGE_TRACKING_CURRENT_VERSION() AS current_version FROM Person.Person AS p JOIN CHANGETABLE(CHANGES Person.Person, 2) AS c ON p.BusinessEntityID = c.BusinessEntityID; GO |

For each row that’s changed in the Person.Person table since revision number 2, I get the latest version of the FirstName, MiddleName and LastName for that row. I can see what the highest version number of those changes were, and I can also see the current highest version number for all tracked changes in the current database.

![]()

Could Trace Flag 8295 Help Your Performance? (TF 8295)

Trace Flag 8295 is documented in KB 2476322 and applies to SQL Server 2008 R2 CU7 / SQL Server 2008 SP2 CU4. After the trace flag is turned on, an additional nonclustered index is created on internal tables when you enable Change Tracking for that table.

There’s a big old disclaimer on that KB that says you need to test thoroughly in a non-production environment before making this change. (Hey, that’s a good general rule for trace flags in general.) I personally have not tested this trace flag, but it is used to support Change Tracking features by default by some System Center / SCOM products. This blog post by Santos Martinez gives some details.

(Thanks to Adam Machanic for letting me know about this trace flag, and to André Kamman and Edmund Poillion for helping track down the relevant documentation.)

What do your execution plans look like?

I’ve been giving you lots of ways to look at the internal objects for Change Tracking for a reason: the number of rows in the internal tables impacts performance.

Let’s say that my client last synced at revision number 1 million. A lot of changes have happened since then– 4 million in this table, and a handful of changes in other tables. I run a query like this to see what’s changed:

|

1 2 3 4 5 6 7 |

SELECT th.TransactionID, th.ProductID, th.TransactionDate FROM Production.TransactionHistory AS th JOIN CHANGETABLE(CHANGES Production.TransactionHistory, 1000000) AS c ON th.TransactionID = c.TransactionID GO |

The execution plan looks like this:

All of the tables with thick lines to the right side of the plan are the change tracking related tables. Looking at our STATISTICS IO and STATISTICS TIME output, here’s where we spent all our CPU usage:

|

1 2 3 4 5 6 7 |

Table 'TransactionHistory'. Scan count 0, logical reads 3, physical reads 0, Table 'Worktable'. Scan count 0, logical reads 0, physical reads 0 Table 'change_tracking_574625090'. Scan count 4001000, logical reads 12795765, physical reads 0 Table 'syscommittab'. Scan count 1, logical reads 16158, physical reads 0 SQL Server Execution Times: CPU time = 7735 ms, elapsed time = 7755 ms. |

(For readability, I removed the printed messages for readahead, lob, etc– those were all 0.)

Here’s the story the execution plan told me as I stepped through it:

- First, SQL Server pulled the commit_ts (it’s a timestamp!) and xdes_id (transactional identifier) columns for every commit that occurred since our revision number from sys.syscomittable. It used a function, an index scan, and a merge join to do this. It found 4,001,000 rows with these columns.

- SQL Server then looked for the corresponding TransactionID values in the sys.change_tracking_574625090 table. Unfortunately it did an index seek 4,001,000 times to do this. It found 4,000,000 values matching the commit_ts and xdex_ids. (The other 1K were changes made for a different tracked table.)

- The 4 million rows were pushed into a hash operation to find the unique TransactionIDs for all those revisions. It was a single row. (It had just been changed 4 million times.)

- The TransactionID key was then used to look up the related columns in a single, efficient, clustered index seek. Hey, that part was fast!

The whole process took 7.5 seconds. Of this, 99% of the work was just identifying what had changed.

Oops.

This may seem like an extreme example, but it’s really not. If you’re using Change Tracking on very frequently modified table or you’re not cleaning up very often (or at all), your overhead may in fact be far greater. I’ve seen undersized hash joins and tempdb spills in queries like this where revisions have piled up.

Change tracking, statistics, and parameter sniffing

Sometimes Change Tracking can be smart enough to give you a better execution plan based on the revision number you pass in. Whether or not it can do this well depends on two things:

- The freshness of statistics on your tables, including the internal change tracking tables

- Whether or not your revision number might be having a problem with parameter sniffing

If you have highly variable runtimes on reads from your Change Tracking tables, either of these might be be part of the problem.

Blocking, Cleanup, and Snapshot Isolation

Let’s talk about two problem scenarios that can happen with Change Tracking. They’re both related to that pesky background cleanup thread that you need to run so that your tables don’t bloat terribly, but which you can’t schedule.

Problem 1: blocking. When the cleanup thread runs, things get slow and you see locking and blocking. Investigation shows that it’s on the Change Tracking tables.

Problem 2: inconsistent results. A client comes in and says, “Hi, I need everything that changed after revision 99!” It checks the minimum revision and it’s below 99, so it begins a transaction and starts pulling down data from multiple tables. While it’s happening, new data is coming into some tables. And also, cleanup starts before it ends, and cleans up through revision 105 on some tables. Oh, we’ve got problems — we will have pulled down MORE revisions for some tables than others. And we will also have missed some revisions for some tables. We’re out of sync.

Because of these two problems, Microsoft strongly recommends that you use transactions and Snapshot isolation when reading and synchronizing data. It will protect you from those inconsistencies, which makes coding simpler, but of course there are tradeoffs. Learn more about implementing Snapshot isolation here.

Backups, Checkpoints, and Change Tracking

There’s a bug where duplicate rows in change tracking tables can cause checkpoints and backups to fail. Get information on the fix in KB 2682488.

Takeaways: Performance Tuning Change Tracking

Here’s the steps we’ve covered that will help you make Change Tracking go faster:

- Make sure you’re only tracking changes on tables that needed it. Extra tables in change tracking will bloat internal tables, and this can impact query performance.

- Make sure that your code properly checks CHANGE_TRACKING_MIN_VALID_VERSION so that you can run cleanup (or you’ll get troublesome bloat in internal tables).

- Validate that your cleanup jobs can keep up (and monitor it).

- Review and test using the undocumented procedure SYS.SP_FLUSH_COMMIT_TABLE_ON_DEMAND for cleanup if needed, but be sure to get approval since… well, it’s undocumented.

- Use query execution plans and STATISTICS IO and STATISTICS TIME to measure how much of your query runtime is being spent on Change Tracking tables.

- Look out for out-of-date statistics on Change Tracking internal tables, and parameter sniffing on procedures using Change Tracking.

- Use SNAPSHOT isolation to ensure you get consistent sets of data, even with cleanup running and new data coming in. It will also help you avoid pesky blocking situations.

Should you use Change Tracking?

As you may have guessed from this post, Change Tracking isn’t something that just configures itself and works perfectly for every application. But do you know of any other built-in data replication features which are like that?

Synchronizing data is hard. Change Tracking offers you some built in features that may save you time from writing custom code, but it’s not a great fit for databases with a very high rate of transaction commits to the tracked tables. For these databases there are major downsides when it comes to cleaning up those internal tables. You should also consider that the changes written to all the internal tables are also fully logged, which adds further overhead if you have a high level of writes. So you’ve got to choose wisely.

Could writing entirely custom code do better than Change Tracking? That’s up to you!

26 Comments. Leave new

“(Clarification: there’s a part of merge replication itself which is also called “Change Tracking”. That’s totally different than what I’m talking about in this post.)”

This is true. When I started analyzing Merge Replication and Change Tracking, I first thought that Merge Replication worked on ‘this’ Change Tracking feature. Maybe if someday Microsoft is renewing Merge Replication, they’ll make SQL Server use their own alleged better features.

Indeed, MSDN encourages us to use Change Tracking for synchronizing tasks, instead of triggers and timestamps or tracking tables: http://msdn.microsoft.com/en-us/library/cc305322(v=sql.105).aspx

Jaime

Yeah. I think their perspective is “we baked this cake for you professionally, why make your own cake?”

But maybe I just need a cupcake. Or maybe I’m making changes so often that their cake explodes. (My metaphor is clearly breaking down here!)

Agreed with you Jaimn.

But I also find this fantastic Library from MSDN team that looks perfect to make this tracking task pretty easier : http://msdn.microsoft.com/en-us/library/bb726006(v=sql.105)

However, I would also like to share another useful software named Lepide SQL server auditor i.e., (http://www.lepide.com/sql-server-audit/) that seems to be a very comprehensive approach while need to monitor SQL server database with real time alert at granular level.

Oooo, I wouldn’t use Change Tracking for Auditing purposes at all — that’s not what it’s designed for.

RE: “…. it’s not a great fit for databases with a very high rate of transaction commits to the tracked tables. For these databases there are major downsides when it comes to cleaning up those internal tables…..”

For those folk that fall into this category (needing to track changes in a set of high volume transaction tables) – any advice? [In the SQL 2012 environment]

Thanks!

–Erik

Writing your own code tailored to your specific needs tends to be the most scalable option.

Qlik Replicate is a great alternative to CT.

Just a follow up – I think Nic’s articles have moved here: http://sirsql.net/content/2014/05/

Thanks for great article! Got many answers by reading this!

Any impact for having change tracking enabled on the database but not enabled for any tables? I think this happened in one of my databases which uses CDC but not change tracking. CDC retention was supposed to be set but change tracking retention period was set instead.

Jeff – that’s a great question, but it’s not something that we’ve benchmarked. Generally I’d only recommend enabling features you’re going to use. 😀

Thank you for this Kendra! If we had change tracking enabled for the same table in multiple databases (same schema, just customer specific)… Is there a way to select all of the changes at the SERVER level? If that’s not built-in, what would your recommendations be to track changed records for any one table that is tracked in multiple databases? Dynamic SQL (ugh) or maybe a system view of some sort or maybe a trigger on a change tracking table to insert a record into a shared database or maybe service broker?

Ha.. I know each has it’s pros and cons but would you lean toward one over the other to accomplish the task?

There’s no system wide view to pick up all the changes at once. As you can tell from the article, change tracking has a lot of baggage, isn’t documented very well, and has a lot of gotchas, so I wouldn’t be eager to recommend it without a pretty heavy evaluation against other option. Same thing with Service Broker. But there is no general, “this is always better” option in this case, it does require doing a pretty lengthy evaluation to recommend the best architecture.

Thank you!!! And wow – that was FAST! 🙂

Thanks a lot for sharing such valuable information. I am in a situation where my CDC jobs ( 5 of them ) are spiking CPU to +95 % and Disk Queue Length is getting High. We don’t need CDC data immediately ( we have a process that sends CDC tables backups to developer every night ) my question is what can i do to avoid CPU spikes, should i change pooling or lower number of @maxtran ( we are using defaults now). Will really appreciate your help.

Thanks

Hi Abbas,

Couple observations– you’re using some non-SQL metrics for performance which can be misleading, and you’re grasping at setting changes in a way that shows you’re a bit lost. You don’t want to just go bashing at buttons in a case like this, you need to analyze more closely what’s happening at the time to find the root cause (and to establish if it’s even a problem– high CPU itself is a symptom but might be harmless).

I would start with the checklist here for incidents like this: https://www.brentozar.com/responder/

Kendra, my colleagues and I are working on a solution revolving around change tracking…

Our goal is to use SQL Server change tracking to track changes to be pushed to another non-SQL system.

Our database is a subscriber (transactional replication). Numerous publishers are pushing transactions into numerous tables.

During my testing, We observed that the entries recorded by change tracking has records grouped into ‘chunks’. I.E. There are many records with the same value of SYS_CHANGE_VERSION.

This is based on the query of select * from changetable(CHANGES my_table, 0)

Observation: When we execute the above query, some of the rows are ‘interleaved’.

For example, we may have 50 row with SYS_CHANGE_VERSION = 100, 1 row with SYS_CHANGE_VERSION = 101 and then more rows with SYS_CHANGE_VERSION = 100.

Our question:

When rows are inserted into the change tracking table/mechanism, can rows be inserted with a lower value of SYS_CHANGE_VERSION than the current maximum value?

We are trying to determine if all we need to do is have a sort order on SYS_CHANGE_VERSION and simple ignore records for the current max value of SYS_CHANGE_VERSION.

Furthermore, once data is read from the change tracking table, can subsequent reads contain entries which have the same ‘maximum’ SYS_CHANGE_VERSION which was read previously.

We are worried about whether or not we can continue/resume the process of pushing transactions to the non-sql system.

As an alternative, we have considered using the replication stored procedures on my subscriber to push changes to my non-SQL system.

Any answers greatly appreciated.

Angelo – for personalized system guidance, rather than leaving a comment on an unrelated blog post, your best bet is to use a Q&A site like http://DBA.StackExchange.com.

Will do, thank-you.

For a three-tier financial application (Sql Server, Asp.Net application server, http/json clients), we are looking for the best (read: lightest weight) solution for one-way cache validation and synchronisation Sql Server –> client .

From what I’ve read, Sql Server Change Tracking is overkill, since we only need the most recent date/time (or version) of the Sql Server table, which can then be compared with the version in the client cache.

We’ve also looked at

select top 1 user_updates from sys.dm_db_index_usage_stats where database_id=@dbid and object_id=@objid

and also

select top 1 leaf_insert_count + leaf_delete_count + leaf_update_count + nonleaf_insert_count + nonleaf_delete_count + nonleaf_update_count from sys.dm_db_index_operational_stats(@dbid, @objid, default, default)

which both might serve our purpose, but we’re not convinced by the performance.

Any thoughts here?

A rectification and partial answer to my own question: whereas the sys.dm_db_index_usage_stats performs very badly, the sys.dm_db_index_operational_stats() performs like a miracle, at sub-microsecond! So it would seem the latter is perfect for our purpose 🙂

Any other thoughts are still welcome…

Nick – for general questions, head on over to a Q&A site like http://dbastackexchange.com.

Those indexes are unreliable for change detection. They cant handle “slow moving sql updates”. UPDATE TAB SET COL=GETDATE() for lots of records causes the index to be updated immedately, but record keep getting modified after that. (For large tabs if it runs for minutes)

I never found better way than EXISTS CHANGETABLE(CHANGE TAB, XXX). We have 1000 tabs in our db, and sometimes checking them all from 6 threads finishes between 0.5s and 1.5s.

I would really like to find a quicker way,but there just isnt.

[…] Looking back, even if we had Change Tracking, we benefited from the granular control we had over this custom system, and the elegant simplicity of the implementation. The Change Tracking feature in SQL Server has remained somewhat of an ‘edge feature’, and people using it have had to deal with a variety of issues over time – a need to customize the indexes on the change tables, statistics estimate problems with change functions, and problems with cleanup. (I wrote about these a while back over here.) […]

Is this Thread still relevent

I have a Microsoft Support guy I cant reach as the DBA here because of a system not managed by us

Microsoft Congiuration Manager that uses change tracking

the Microsoft guy sends this to the admin and now he he is asking me?

”

After checking the information you provided, I was able to see that the watermarks are effectively updating with each run of the cleanup thread, which means that the issue is not related to watermarks, however, it is possible that the size of the tables to clean can interfere with the cleanup process, so I may require you to implement the following index:

CREATE NONCLUSTERED INDEX [si_commit_time]

ON [sys].[syscommittab] (

??? [commit_time] DESC,

??? [commit_ts] DESC

)

WITH (SORT_IN_TEMPDB = ON);

To implement it, you may require to do it thru a DAC, and once it is in place, please proceed to runt eh SP [dbo].[sp_ManualChangeTrackingMetaDataCleanupProc].

”

how to do you add an index to a in memory system table that is my question for the love of god why is this so complicated ….

any help or hints appreciated

Stephen – sorry, we don’t do free support here. You may want to contact Microsoft or ask a Q&A site like https://dba.stackexchange.com.