Temp tables are like real tables, just a little tricker.

When you’re starting out writing TSQL, it’s easy to want to do all your work in a single query. You learn about derived sub-queries, CROSS APPLY statements, and common table expressions. Suddenly, each of your queries is so complex that you hardly know what you’re doing.

But complex TSQL can be quite fragile. Small mis-estimations in parts of the plan can cause things to go very much awry in other regions of the plan.

Temp tables can help performance!

One treatment for slow, complex TSQL is to break it into multiple steps. You create a temp table, populate it, and reference it in future queries. This can lead to improved estimates and reliability, because temporary tables can have column and index related statistics. Those statistics help the optimizer estimate how many rows will be returned at different points along the way.

But this tuning technique isn’t foolproof. It’s easy to forget that statistics can be tricky– just like with real tables. Let’s take a look and prove that statistics issues from “normal” tables also matter on temp tables.

Out of date statistics on temp tables can hurt performance

The first step is to load up a temp table with some rows:

|

1 2 3 4 5 6 7 8 |

INSERT #temp (TransactionID, TempValue) SELECT TOP 100000 TransactionID, 1 FROM Production.TransactionHistory; GO INSERT #temp (TransactionID, TempValue) SELECT TOP 10000 TransactionID, 2 FROM Production.TransactionHistory; GO |

We now have:

- 100K rows where TempValue=1

- 10K rows where TempValue=2

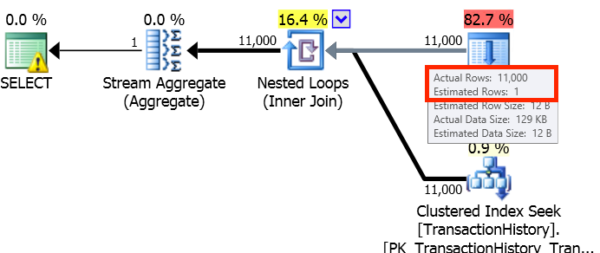

Now let’s say I query the temp table in some way. My where clause is for a specific TempValue. The act of running this query causes a column level statistic to be created on the TempValue column:

|

1 2 3 4 5 |

SELECT max(th.Quantity) FROM #temp as t JOIN Production.TransactionHistory th on t.TransactionID=th.TransactionID WHERE TempValue=2; GO |

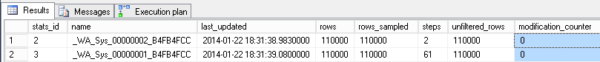

We can see the statistic, too. This code is modified from Books Online and uses a DMV available in SQL Server 2012 SP1 and higher:

|

1 2 3 4 5 6 7 8 |

use tempdb; GO SELECT sp.stats_id, name, last_updated, rows, rows_sampled, steps, unfiltered_rows, modification_counter FROM sys.stats AS stat CROSS APPLY sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp WHERE stat.object_id = object_id('#temp'); GO |

I continue on, and insert 11K rows for TempValue=3:

|

1 2 3 4 |

INSERT #temp (TransactionID, TempValue) SELECT TOP 11000 TransactionID, 3 FROM Production.TransactionHistory; GO |

At this point I have:

- 100K rows where TempValue=1

- 10K rows where TempValue=2

- 11K rows where TempValue=3

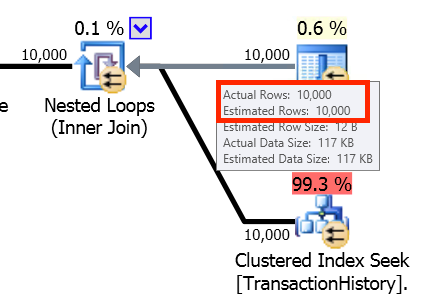

Now I run another query which wants to look ONLY at the 11K rows where TempValue=3. SQL Server completely mis-guesses how many rows will be returned– instead of 11K rows, it guesses that I’ll just get a SINGLE row:

|

1 2 3 4 5 |

SELECT max(th.Quantity) FROM #temp as t JOIN Production.TransactionHistory th on t.TransactionID=th.TransactionID WHERE TempValue=3; GO |

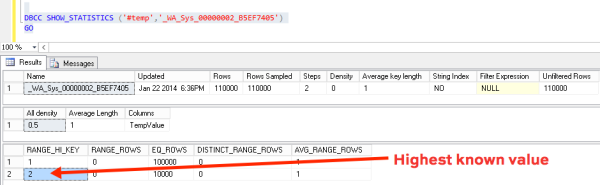

Looking at the statistics, we can see why– the statistic that was created when I ran my first SELECT query wasn’t triggered to update:

Let’s look at the histogram

When we look at the histogram for the statistic, it confirms that it doesn’t know about any rows with a TempValue higher than two:

Bad Statistics can lead to bad optimization

If I was joining from this temp table to other large tables, it might cause a very large data skew in my plan– and it could cause big problems. The optimizer might select inefficient joins, not allocate enough memory, and choose not to go parallel if estimates are skewed too low.

Temp tables are a great tool to use, but remember a few key rules:

- Column level statistics will be created for you when you run queries using the temp table

- If you modify the temp table in multiple steps, those statistics may get out of date

In complex procedures, manually creating and updating statistics on columns or indexes can save you time and improve execution plan quality.

Could Trace Flag 2371 Help?

If you find that you have a lot of existing code that has this problem, Trace Flag 2371 may help. This trace flag is available as of SQL Server 2008R2 SP1 and higher, and it changes the default threshold for when statistics automatically update.

The net effect of the trace flag is to cause statistics updates to happen more frequently on large tables. You must turn it on server wide for it to take effect — it doesn’t work for individual sessions.

I’m not a huge fan of enabling any trace flags unless you’re solving a specific problem, and documenting it. This trace flag is not very widely used in my experience, so flipping it on puts you in a small subset of people. If you start hitting wacky issues and need to resolve them, suddenly the trace flag gives you a whole new dimension of complexity. So please don’t think of this trace flag as “preventive medicine” — only use if it you don’t have other good ways to solve the problem.

18 Comments. Leave new

Great article as usual Kendra! Thank you. 🙂

Couple of clarification questions.

1. Did the statistics not get updated for the temp table for the same reasons as a permanent table would not have had their statistics updated (Statistics are updated after 500 rows or 20% growth)?

2. At the beginning, you had 2 insert statements and the statistics used by the SELECT statement included all of the data. Was this due to the statistics being generated at the time of the SELECT statement instead of at the time of the insert statements?

Thanks,

Mickey

Hey Mickey,

1) Yes– exactly. The temp table’s stats follow the same rule as real table’s stats, it’s just harder to observe as an administrator because the temp tables come and go.

2) Yep, you got it. Automatic stat creation is caused by queries doing reads on the tables, so that first select statement generates a new stat which is nice and fresh and correct.

kl

Hi Kendra, thanks for the post, very interesting as usual. Is there an equivalent to Trace-Flag 2371 for SQL Server 2008 (Non R2)? Thanks, Ben

Hi Ben, there’s not an exact equivalent, but there were some older trace flag for statistics. They’re lesser known / might have weirder performance impacts. I can’t recall the numbers offhand, but I’ll look up some links a bit later today and add ’em in here.

Hi Ben– the trace flags I was thinking of are 2389 and 2390. They are specific to the “ascending date” problem with statistics, so they don’t help with every situation.

The best place to start with these is Ben Nevarez’s great article here: http://www.benjaminnevarez.com/tag/trace-flags/

He mentions at the end that these do seem to be officially documented. They are lesser used, so of course test and use with care.

Thanks Kendra, that is very interesting. Ben Nevarez’s article is gold too. Might be worth giving it a try. Have a nice day, Ben.

Thanks Kendra, great article!

All the information is very useful although sometimes I find hard to remember the zillion different things that may come into play when a query is not responding the way I anticipate (no one to blame here but my own lack of ability to persist all this valuable info in my brain for more than a few days…). I think that a very interesting topic could be “how to systematically tackle estimation issues”. This is, some sort of work-flow that can help DBA’s tune those specific kind of problems in a systemic manner to make sure you are not leaving things behind… things you may have learn in the past about tuning but are buried somewhere below the recipe for burritos you just learn in a cooking class :).

The only thing I did not fully understand is why the problem of outdated statistics was somehow associated to an article regarding temp tables which may give the occasional reader the wrong impression that this symptom is characteristic of temp tables ONLY when it is really an infamous typical cause of statistics skewing in ALL types of tables.

Thanks. I really appreciate all your great articles!

I love that idea for a presentation concept!

I tried to convey that the issue wasn’t specific to temp tables with the title. (Statistics matter on temp tables, too!) It’s easy to forget that the rules with statistics for normal tables ALSO apply on temp tables, and that was my point. The issue can be harder to diagnose since the tables come and go in the scope of the caller.

I just updated the text in the top paragraph to try to make this a little more clear for others.

Thanks!

I think the HTML in part of this (great) article is broken – there seems to be a formatting issue where lines are running off the edge of the page – I tried viewing it in several browsers

Thanks for this note– it looks like our syntax formatter got in a fight with WordPress, and WordPress won! I just went through and I think I have it all fixed.

I think, it is good practice to first try Table Variable to reduce complexity of your queries. They are much harder to get wrong and do not have statistics issue outlined here.The only thing to keep in mind though – table variables are good only for small amount of rows.

Viktor – can you provide some evidence for how table variables reduce complexity, are harder to get wrong, and don’t have statistics issues?

Very useful post!

Someone I interviewed recently talked about temp table statistics. Now I’ve got more questions to ask around that topic after reading this post 🙂

I’m guessing that the same rule applies to the global temp tables regarding stats creation and updating, correct?

As for dropping statistics, I’m guessing they’re dropped either when the session ends (for local temp table), or if the temp table is explicitly dropped right?

Thanks!

Thanks much, Kendra. This article and a complimentary article from Paul White (http://sqlblog.com/blogs/paul_white/archive/2012/08/15/temporary-tables-in-stored-procedures.aspx) turned on a whole bunch of lights for me. Very exciting! ?

Our SQL developers make extensive use of temp tables and many are updated constantly during the execution of our procs and an awareness of the issue with stale stats may help us a bunch.

One question: Would you consider running “UPDATE STATISTICS #TempTableName” as a “best practice” in a proc following changes or queries to a temporary table that are likely to cause the existing stats to be out of date?

Thank for your post. In my opinion, as same as @John Shahan, update statistics of #TempTableName manually will solve this problem.

[…] https://www.brentozar.com/archive/2014/02/statistics-matter-on-temp-tables-too/ […]

Hello, thanks for your nice post. Please, is there any reason to not auto create stats even with auto create stats enabled? I have a plan with a warning about a temp table without stats, after insert and select, why it could be? Thanks.

Glad you like it. For questions, head to a Q and A site like dab.stackexchange.com.