Answer: Yep, sometimes it can.

I used to think that higher degrees of parallelism followed a law of diminishing returns– you could add more threads, but the benefits would taper off. But it’s a bit more complicated than that. Microsoft’s recommendation to be careful when setting maxdop to values over 8 is a warning worth heeding.

Lowering maxdop can cut CPU without sacrificing duration

We tend to think that reducing the number of threads available MUST make a query slower– but that we sometimes need to do it so other queries can run concurrently.

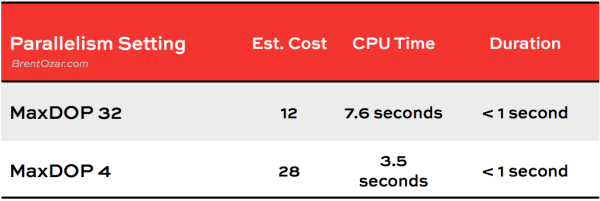

It’s great to support concurrency, but lower DOP doesn’t necessarily mean a longer runtime, even if it does lower CPU consumption. Here’s a simple example of an OLTP query run with two different DOP settings. CPU time and duration were measured using “SET STATISTICS TIME ON”. For both runs all data was in memory (no physical reads or read aheads).

Duration in both cases was around 700 milliseconds. The lower DOP didn’t make execution time longer. It changed the way SQL Server ran the query, but it cut overall CPU usage while keeping the runtime about the same. This was great in this case, because the query in question needed to run frequently on an OLTP system.

Higher maxdop can slow down large queries

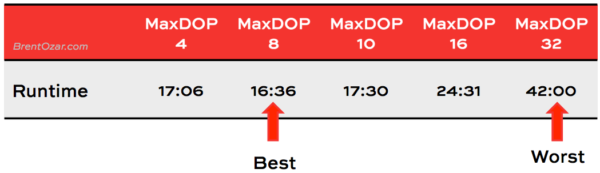

In the case of large queries, higher DOP can slow down query execution. The impacts here vary a lot by processor, memory type and amount, and whether or not your SQL Server is virtualized— not to mention based on the execution plan of the query in question.

Here’s a totally different query tested on totally different hardware. In this case the query reads in hundreds of gigabytes of data, but as in the previous example all tests were run against a “warm” cache and were not doing physical reads or read ahead reads. The server used in this test had 8 physical cores per NUMA node.

Changing maxdop changes query plans

When you tune maxdop, it’s worth watching the execution plans for the top queries on your server. I’ve seen changing the amount of threads available for a query make the optimizer change its mind about how to run a query immediately– and been able to reproduce it switching the plan back as soon as I hint a different DOP.

Since impacts can be complicated I recommend changing maxdop rarely and monitoring your plan cache and wait stats for at least a week or two after the change.

Parallelism is a good thing

Don’t get me wrong– I ain’t saying parallelism is bad for SQL Server. Multiple threads can make many queries faster!

One of the gotchas with SQL Server is that the default value of “0” for the “Max Degree of Parallelism” setting can lead to poor performance– because it lets SQL Server use all your processors (unless you’ve got more than 64). Fewer threads can not only reduce CPU usage, but may also be faster.

So check your maxdop settings, and keep reading to learn more about CXPACKET waits.

13 Comments. Leave new

Hi Doubt about the correct configuration about Max Dop, Some says that the perfect one is for the default one with ) but I think the correct one is 8, I going to give you a little feed back about the server operation on day execute a lot of transactions, and in the night they have schedules and process a lot of batch process. do you think we should configure different in day and other in night or doesn’t matter??

Changing the degree of parallelism for the whole instance will cause all plans to need to recompile, which isn’t always OK.

But if all the plans are recompile, is only one time and later everything is better isn’t? But you think is better only changed for some queys? because my CPU is increasing and the cxpacket wait is really big.

Hi Rafael,

Whether or not all the recompiles are worth it varies widely.

Testing the batch processes with specific settings and then setting it on a query by query basis for the largest queries is the safest bet, although it’s more work. The right max degree of parallelism varies widely both by workload and by hardware, so it is really specific to your SQL Server.

I know this is an old post, but I just wanted to throw a little extra HINT to Rafael as well – if the MAJORITY of your processes work best with one specific MAXDOP (8 for example), but a small number of others work better with a different MAXDOP (1 for example), I would not reconfigure the server nightly to handle the 2 different workloads. I would use a query hint to set the MAXDOP to what it should be for those few queries.

That way, no recompile and you don’t end up arguing with the DBA about what the “best” value is for the day and the night. It MIGHT be that 8 works best for most processes during the day and 1 is best at night today, but will that be the case in 6 months? In a year? in 5 years?

At my workplace, we have a FEW queries (thinking 10 or fewer stored procedures) on a system that run best with MAXDOP set to 1. So our approach was to use a query hint on those and it works great. Pain in the butt to find all of the stored procedures that work best with a specific query hint, but is safer than making a global config change.

Hello,

I would like to ask about how to calculate the appropriate MAXDOP setting.

I have read the Microsoft KB guidelines found in http://support.microsoft.com/kb/2806535.

Our server has 2 CPUs, 12 logical cores each with hyperthreading (6 physical cores), giving us a total of 24 logical cores. NUMA and Hyperthreading are both true so according to the MS KB, our MAXDOP setting should be 6.

Another DBA shared with us the following script that he uses to calculate MAXDOP value and it gives a different value. It calculates a value of 8.

I am trying to determine which advice is accurate… What do you think of the script below as compared with the Microsoft KB? There are varied views on this on the web so I am having a hard time picking an advice to stick to…. Thank you very much

DECLARE @CoreCount int;

DECLARE @NumaNodes int;

SET @CoreCount = (SELECT i.cpu_count from sys.dm_os_sys_info i);

SET @NumaNodes = (

SELECT MAX(c.memory_node_id) + 1

FROM sys.dm_os_memory_clerks c

WHERE memory_node_id 4 /* If less than 5 cores, don’t bother. */

BEGIN

DECLARE @MaxDOP int;

/* 3/4 of Total Cores in Machine */

SET @MaxDOP = @CoreCount * 0.75;

/* if @MaxDOP is greater than the per NUMA node

Core Count, set @MaxDOP = per NUMA node core count

*/

IF @MaxDOP > (@CoreCount / @NumaNodes)

SET @MaxDOP = (@CoreCount / @NumaNodes) * 0.75;

/*

Reduce @MaxDOP to an even number

*/

SET @MaxDOP = @MaxDOP – (@MaxDOP % 2);

/* Cap MAXDOP at 8, according to Microsoft */

IF @MaxDOP > 8 SET @MaxDOP = 8;

PRINT ‘Suggested MAXDOP = ‘ + CAST(@MaxDOP as varchar(max));

END

ELSE

BEGIN

PRINT ‘Suggested MAXDOP = 0 since you have less than 4 cores total.’;

END

So, bad news – there is no single formula that will guarantee you have the right setup for your workload. It just doesn’t work that way.

If you don’t need to fine tune this, just use the Microsoft guidance.

If you need to fine tune this, you need to understand multiple settings as well as how to look at SQL Server waits. Start with Brent’s post here: https://www.brentozar.com/archive/2013/08/what-is-the-cxpacket-wait-type-and-how-do-you-reduce-it/

Thank you very much for your answer.

I feel safer following the Microsoft guidelines so I think that will be my route.

I was just confused with the script provided by another DBA and where he might have gotten it from and if it has Microsoft’s blessing.

This is very helpful. Thank you very much.

We have a server that is struggling and I am not the official DBA for that server so I am not sure if it is disk issues or cpu, but it does have lots of ram. SQL server licesnse are less then the number of CPUs on the box so we have only 16 cores licensed. But my question about MAXDOP is this. We have seen that MAXDOP 1 has helped many many queries on this server, so what does that tell you about the health of the server?

Does that make it seems like the CPU’s is the bottle neck?

[…] Here’s a nice post here from Kendra Little talking about how having higher settings can actually slow down your query execution time: https://www.brentozar.com/archive/2013/12/q-can-high-maxdop-make-a-query-slower/ […]

Amazing article Kendra!!

I ran into a scenario on a WMware machine with Sql Server 2014 and 32 logical CPU assigned and one NUMA a really large query takes

MAXDOP 8 (As receommended by Microsfoft) Takes 7 minutes

MAXDOP 16 Takes 3 minutes

My experience confirms this. We just moved a SQL Server instance from a box having 8 logical processors, MAXDOP 0, to one having 40 logical processors. MAXDOP still 0. Things seemed fine for a day or two, and then the four horsemen of the apocalypse rode us down and ground us under their hooves. Blocks, deadlocks, wait times shooting through the roof, high durations. Every measurable stat got worse. Angry users, angry management. Yesterday one of us (not me) had the insight that maybe having more CPUs can make things slower. Counterintuitive, right? We did the research which found this article and MS’s KB article. We’ve made MAXDOP much lower, starting with MS’s KB recommendation (10 for this box), and the wait times for every query plan I’ve examined in the query store drove right down to near 0 and have stayed there since. The too-high MAXDOP was much more ruinous that I would have guessed. We had frequent, terrible pileups of queries in enormous blockjams with a concurrent rise in CPU usage. I saw a genuine deadlock that persisted for over a minute. Crazy. The relief from lowering MAXDOP was immediate and dramatic, at least in the query store stats. I’m hopeful that this week will be much better for our poor users. Note: Not a real DBA, I just play one at work.