5 Things About Fillfactor


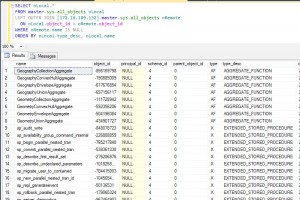

Are you a page-half-full or a page-half-empty kind of person? I’ll never think “I’ve seen it all” when it comes to SQL Server: there’s always someone waiting around the corner who’s found a weird new thing to do with it. But there are some things I really wish I could…
