When someone brings me a query to tune, I start with a few questions:

How long does it take to run now? I’m looking for a time at first, and later as I progress through tuning, I’ll measure it more scientifically with logical reads and CPU cycles.

How long would you like it to take? Meaning, when do I know I’ve crossed the finish line and can stop tuning? Sure, I’d love to make every query take 5ms, but sometimes users are happy if it just doesn’t time out.

Am I allowed to change the query? Is it a third party ISV app that I’m not allowed to touch, or can we fiddle with it? Can I split it into multiple queries? Can I move it into a stored procedure? No, stored procs aren’t inherently faster, but if you care enough about it that speed matters, then moving it into a stored proc gives me the ability to tune it faster. I can run through more iterations more quickly without having to recompile an app.

How often does it run? Are we talking once a day as a scheduled job, or thousands of times per second as part of a page-rendering process?

How often does the data change? I try to deduce this by looking at the tables and fields involved, but I like getting confirmation from the developers. Does it change every time it’s queried, or about once a minute, or once an hour, or maybe…never?

When we get to this point, sometimes I get to ask my favorite question: why are we querying this at all? Why not cache it in the application and only fetch the updates periodically, like on startup? Often we store application parameters in the database because, well, it sure beats an INI file, and it lets people change application config on the fly without restarting things. Another common culprit is geographic data – what’s the exact latitude and longitude of a city, and what other cities are nearby? What stores are near each other?

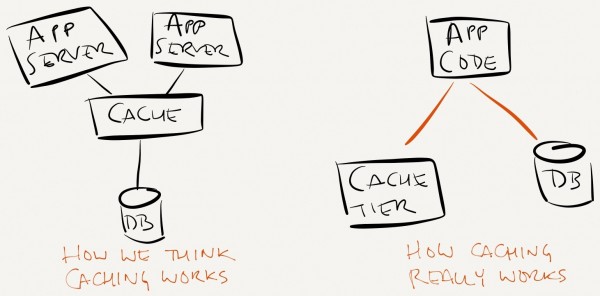

This starts a conversation about caching: temporarily saving things in memory in the app or web tier. The most basic version of this is in-memory variables in the app – this is the fastest way to get started, but the least scalable. You can’t persist large chunks of data here, and you end up with duplicate caches across all the app/web servers. Instead, build a caching tier with software like memcached, redis, Amazon ElastiCache, Windows AppFabric Cache, and Windows Azure Caching. These allow you to scale out your cache quickly and cheaply using commodity hardware and no fancypants licensing.

Caching does not sit in front of the database server – it sits alongside:

The application code has to be written so that when it needs data:

- The app checks to see if a valid copy of the data is in the cache tier

- If not, the app queries the database

- The app adds the data to cache to avoid hitting the db again – and maybe includes an expiration date.

(Note: some caches actually do purport to sit between your app and the database. Personally, I’m not a big fan of that because now your app is tied into a specific cache provider, and that’s risky today.)

In step 1, I glossed over a whole difficult concept by saying a valid copy of the database. Cache invalidation is notoriously hard – how do we tell the cache tier when something has been changed in the database? That’s not something we’re going to cover here, but don’t let that stop you from implementing caching. There are sure-fire, no-brainer cacheable wins right now in your environment. Cross that bridge now, and then cross the cache invalidation bridge when you get to it.

Once you’ve implemented a caching tier, performance tuning becomes a whole new ball game. Instead of trying to make a query 10% or 20% faster, you’ll avoid querying the database at all.

Five things to remember about building your cache:

- Try to write your app’s caching code in a way that will let you easily change cache providers. It’s just a key-value store, and key-value stores change fast.

- Size your database server so that it’ll survive your loads if the cache falls over. This is made easier if you also…

- Build emergency switches into your app to disable database-intensive features if necessary. If the cache dies, this will give you room to breathe.

- Monitor your cache tier. Pick metrics that will help you determine when it’s time to add horsepower.

- Look for data that maybe doesn’t belong in the database – ever. As you grow more comfortable with your cache server’s reliability, start picking elements of your web app like “Today’s Popular Items” or “5 Most Recent Hits” that should only be persisted in cache.

16 Comments. Leave new

Our application has its own custom-built cache tier, and we have recurring issues with reconciling the cache and the database. I guess these issues will remain as long as there is a cache tier, whether custom or off-the-shelf. But I’m looking forward to things like Hekaton, which may just make this entire discussion (and tier) redundant.

Hakim – hmmm, can you elaborate on how Hekaton would eliminate the need for caching?

I would guess that he is referring to the supposed latch-free technology with the multi-version concurrency operations and almost entirely in-memory table operations.

That is nice and good, but depending on the purposes of your database, not even hitting it is a pretty nice approach.

The company I work for needs to revisit a number of approaches to come up with something drastically different than what we have right now.

“This starts a conversation about caching: temporarily saving things in memory…” If the purpose of the cache tier is ultimately to make the app perform faster by avoiding hitting the database and its disks, and if SQL Server provides you a way to store your most frequently sought data in RAM, then you could possibly do without the cache tier altogether. Use SQL Server’s in-memory offerings instead of the cache.

To me if you are going to use a cache then you want to avoid having to even make the calls to the DB, regardless of using SQL Servers caching abilities.

That’s a load SQL Server does not have to deal with and it is much easier to scale out app servers than it is SQL servers.

Very nice. Caching is often forgotten, especially in the MS space. Thanks for the reminder though: as we’re building completely on AWS (and having to deal with AWS’ performance limitations), ElastiCache is going to come in handy.

Many years ago I designed a caching mechanism I’ve used several times since – firstly there’s a set of triggers on each cached table which increments the it in that table’s row in a cache_register table on each update. (some also maintain a register row for joined caches also)

Client apps maintain local in-memory caches for dropdowns & lists, along with the int value for the version of the data in each cache – when one is used the register int is compared – if different the cache and its int are refreshed.

When first implemented on customer and product tables in a large OLTP system it resulted in a dramatic performance gain, with some window opening dropping from 30 seconds + to <1 second most times.

James,

Good concept – SQL Server has a feature by the name of rowversion which provides a good built-in mechanism to assist with doing this:

http://technet.microsoft.com/en-us/library/ms182776.aspx

Sincerely,

Ross

Hi Brent,

Great article. A strong reminder to always keep an open mind, to look for the problem or solution ‘elsewhere’ – i.e. outside of the db or even the current project. It pays to take time to understand the whole system (including the people involved) before jumping to any rash conclusions.

Rob

Surely food for thought. But one sentence bothered me.

>>

Instead of trying to make a query 10% or 20% faster, you’ll avoid querying the database at all.

<<

SOMEBODY has to write the queries to load the cache. If your cache hit is the victim, meaning you are the one that needs the cache refreshed rather than taking advantage of someone else's calls, they you are at SOMBODYs mercy. You still need people capable of writing high performing queries.

Otherwise you just magnify the problem.

@Hakim makes a valid point. Are we caching on an application/web server simply because we will not devote the resources to supply a reasonable infrastructure for SQL Server and for contacting it? If you use badly configured drive space and a limited bandwidth connection, anything local is going to seem blazing fast. So you have a high performance band-aid if you do not analyze and solve the underlying issues first.

G Bryant – you wrote “devote the resources to supply a reasonable infrastructure for SQL Server” – in a high volume environment, this usually means Enterprise Edition at $7,000 per core. Each core I can avoid at the SQL Server level pays for a *lot* of app/web servers.

Good

Trimming the CPU and memory work for repetitive identical queries (with identical result sets) is why I hope that SQL Server pursues a feature set equivalent to the Oracle result cache. Some data warehouse implementations have benefitted from this result cache tremendously without the overhead of designing their own cache layer (and assuming the responsibility of cache coherence).

Lonny – have you thought about submitting that request at http://connect.microsoft.com? That’s where Microsoft tracks requests from the user community. I don’t hear a big outcry for this feature, so I doubt it’s on Microsoft’s radar.

You can also use Change Tracking, which is light and free in standard edition of SQL SERVER since a long time.

Iberserk – this post is about fast queries, like having your application ask for data and get it instantly returned. How does change tracking help in that scenario?