I’m proud to say that I’ve only lost one job in my life. I was a grocery store bagger when the store came under pressure to increase their percentage of minority workers. They laid off all of the Caucasian baggers, but I’m not bitter. I actually loved bagging groceries, and if I hadn’t been laid off, I’d still be the guy asking you “Paper or plastic?”

I probably didn’t deserve to lose that job, but I’ve deserved to lose others. I’ve just been lucky. Through my career, I’ve seen a lot of database administrators go through some dicey situations that cost them their jobs. Maybe not right away – but once these situations happen, it’s only a matter of time until management finds a legally defensible reason to swing the axe. These situations often involve just nine letters.

The First Three Letters That Get you Fired: RPO

Recovery Point Objective (RPO) measures how much data you’d lose if your server went down and you brought up the secondary server. It’s measured in time, not megabytes. The less time you have, the more frequently you have to get the data to a secondary location.

In theory, if you’re doing transaction log backups every 5 minutes to a network share, so you’ve got a 5-minute RPO.

In theory, “in theory” and “in practice” are the same. In practice, they are different.

In practice, you’re unlucky, and your transaction log backups will fail before the engine itself does. I know this because it’s happened to me and my clients. In one case, the volume for backups filled up and the transaction log backups stopped working. Since the log backups didn’t run, the transaction logs didn’t clear, and they continued to grow. The Gremlin of Disaster arrived, sowing corruption in his wake. By the time the dust cleared, we’d lost over an hour of data – permanently.

If you’re backing up to a local drive and the RAID controller fails, check out this helpful article. You’ll be needing it, because with a failed RAID controller, you can’t access any of the data on the local drives. Before you try pulling the hard drives out of the failed server and putting them into another server, check out this article. After all, you’re gambling – gambling that the RAID controller firmware matches between servers and that it’ll be able to read and rebuild the newly inserted drives. Guess wrong, and it will format the drives, thereby erasing your backups.

Savvy readers will detect a sense of acerbic humor in the post so far – even more biting than my usual pieces. Brace yourself, because it’s going to get worse.

The Next Three Letters: RTO

Recovery Time Objective is how much time you’ve got to get your server’s act together. Some companies measure it from the time you learn about the problem, some measure when the service is first unavailable.

The clock’s starting point matters a lot. When things go wrong, SQL Server Agent might just email the failsafe operator – if you’ve gone to the trouble of setting one up and configuring alerting. Even then, you probably won’t notice the email because you’ll be drunk at the pub having a nutritious dinner with your lovely family. An hour later, when you realize that your cell phone is set on vibrate, you’ll panic, run to the car, fire up your laptop, and try connecting over your phone’s 1-bar internet connection. Should we start the clock when the server started failing, when the email was sent to you, when you saw the email, or when you finally connected to the VPN? If the business wants to start the clock when the server stops, you have to account for the meatbag reaction time, and with most of us, there’s a pretty long delay. (If you’re the kind of DBA who sets up rules to move automated alerts into a separate email folder, you’re off to a bad start.)

If our idea of disaster protection is restoring the databases onto another server, we might assume that the RTO just needs to cover the length of time to do a full restore. Not so fast, Speedy McPinto – here’s a few things you’ll need to do to get the server online:

- Provision enough space to do the restore – do you know how much space you need on each drive?

- Make sure it’s on the right version/build of SQL Server – sometimes we have to install service packs, cumulative updates, or hotfixes to get production to work right. There’s no guarantee our standby box has those same binaries.

- Get the sp_configure settings right – if production had a certain maxdop, for example, it might fail terribly on the new hardware.

- Get security working correctly – you did script out the logins and passwords, didn’t you?

- Configure TempDB correctly – I’ve seen people restore production databases onto dev environments where TempDB was just configured with a few gigabytes of storage, and it instantly started growing when handling production load. TempDB ran out of space, and the server went Pete Tong.

- Restore the database – and hope to hell it restores correctly the very first time.

The less time you have, the more tasks you have to perform ahead of time, and the more you have to document, test, and rehearse the steps you’re going to perform at the moment of disaster.

The Most Important Three Letters: CYA

Right now, all of your users believe your RPO and RTO is zero. They believe you’ve got everything under control, and that when disaster strikes, you’re going to instantly bring up another server with no data loss whatsoever. We both know the truth, though – you spend your time at work checking blogs, not backups, so we’ve got some work to do.

You have to put it in writing – and that’s where our worksheet comes in:

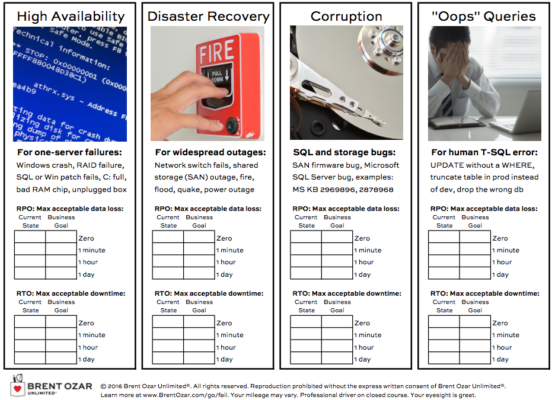

There are four columns: high availability, disaster recovery, corruption, and “oops” queries.

For each column, you have to define the maximum amount of data you’re willing to lose, and the amount of downtime you’re willing to have. Start by filling out the current state. (If you’re not familiar with calculating your current RPO/RTO, that’s where our DBA’s Guide to HA/DR class comes in.)

I know, you’re going to think the business says “ZERO!” but that’s where the next page of the worksheet comes in:

Down the left side, choose how much data loss you’re willing to tolerate. Across the top, choose your downtime. Where they meet, that’s the cost.

When the business users understand the costs, they’re able to make better decisions about RPO and RTO. But it all starts with getting them in writing. To learn more about how to actually pull it off, check out our online course, the DBA’s Guide to High Availability and Disaster Recovery.

37 Comments. Leave new

And for anyone who thinks (or insists) that RAID controllers don’t fail in SANs and, even if they did they’re teamed so there’s redundancy.. Well, I have a story of a 600GB database completely lost with no recovery that you might like to hear

Yep, I agree wholeheartedly. I’ve lost entire racks of storage. Good times…

Gail, that reminds me of a major disaster I dealt with. We had a drive fail in an array and when they opened it up to replace the failed drive, they discovered the hard way that Dell had forgotten to screw the drives in place and all drives came tumbling out.

Ow, ow, ow!

One of my servers had a punctured RAID and DELL advised rebuilding the machine from scratch. The problem was we couldn’t copy our database files off the machine. We were able to use disk2vhd to write an image to an external drive that we then ran on VirtualBox while the original was rebuilt.

I’d like to hear it.

…I’m sorry, I was reading your post, but got completely distracted by the aptly captioned Pinto, reminding me of driving that around in high school, and then a Mercury Bobcat (knock-off Pinto) once the first died. What were you saying? 😉

When I first read the title, I thought you meant written letters, and got very excited. But acronyms are cool, too!

Tough luck on the grocery bagging job loss 😉

Great advice! I am definitely attending your webinar.

Well I have been the lucky one. The most data I have ever lost was a 10 minute window. I always lobbied hard and successfully for high availability resources and using RPO and RTO as my basis, never had to CYA.

The key is picking the right hardware and setting it up correctly. If you are using a Fibre Channel connection to your SAN do you have a redundent FC switch?

Does you Array/SAN have Dual Controllers that are hot swapable?

Do you have extra NICS in the server going to different swiches?

Clustering has always been the most reliable solution if it is setup correctly. I have had to go into shops where they thought Mirroring would solve all thier issues, but in fact, it complicated the situation because the Users and Security settings were not synced between the servers.

It is all about a firm discipline on setup and configuration, and implenting the proper alerting system.

By the way, the 10 minutes I lost was not due to hardware or server issues, it was a careless developer who deleted the entire customer table by accident, 10 seconds after my last log backup.

Why did I lose 10 minutes? Well that is becuase I was in my car on the way to the datacenter to build another production cluster. And it took them that long to figure it out and call me.

The tool to make life so much easier here is one that does object level restores. It found the backup and all the rans action logs and fixed the Customer table in 4 minutes. I am not sure I can propmte tools here so if I hear from Brent etc that it is accpetable, I will post it.

Build the infrastructure right, and you can recover in a very short time.

Sorry for the typos……

David, there are many levels of “doing right” and “firm discipline”. We just had major issues with NetApp and Brocade. Was it NetApp’s fault? Was it Brocade? Well, from our customers perspective, it was us. Lesson learned

Was the Brocade Fabric load balanced and set for failover?

Was the NetApp setup for High Availability?

I am not saying hardware failures do not exist, but the infrastructure can be built to minimize that occurance.

There are, as you say, many levels that need to be considered down to the type of RAM in the Server and the Multipath client used for the HBA connection to the Fiber Fabric.

The real issue is the difference of management weighing the costs of doing it right versus the potential costs of taking shortcuts. I have to make the best of what I am given to work with like everyone else. Sometimes I get what I think is needed and sometimes not. But I try to follow the same approach as best I can in all cases.

Very highly doubt it was NetApp’s fault. Their software is absolutely meticulously tested and they will spam the heck out of you if there is a hardware problem, bug, or misconfiguration.

Brocade – I have a experienced a couple storage fabric outages with but I am pretty sure those were from layer 8 problems.

The biggest problems I see in fiber channel infrastructures are that a lot of organizations think they can get away with having your storage adapters, storage fabrics and storage appliances with unqualified firmware versions or even use stuff that isn’t certified at all to work together.

Great post Brent! As you had said in one of your early webcasts,its hard time when the CTO taps on your back when you are dealing with a downtime and reminds about RPO and RTO:)

I’ve always told my customers that you have three options:

– you can have it done fast

– you can have it done cheap

– you can have it done right

… now pick two.

Invariably, they pick fast and cheap. So I get a physical signature on a document where they accept the risks associated with their choices, and I don’t start work without that signature.

Amazing how often they now want to discuss “right”.

I’ll often ask embarrassing questions like:

– When is the last time you tested your restore process to a bare-iron machine?

– How many configurations (OS, Database and associated service packs) do you have in production, test and development?

– How do you measure RPO and RTO?

– What is your target for RPI and RTO?

– What measures have you put in place to achieve your stated goals?

– Have you historically met your stated goals?

– What are the costs associated with not meeting your stated goals (lost transactions, lost business, man-hour costs, good will costs)?

– Is your business prepared to absorb those costs?

Usually, I’m dealing with someone non-technical. So I ask them what happened the last time they didn’t have access to their primary mode of transportation (cars for suburbanites, public transportation for city-dwellers). They’ll tell me how they rented a car, were supplied with a “loaner” car, or instead of taking the bus, they took the subway.

I bring up the point that they had redundancy options, with associated levels of cost and convenience.

I’ll then ask them what redundancy options they have in place for their business or primary mode of making money. .

Aside from whatever local HA/DR measures have been put in place, I’m looking options for offsite replication to “cloud” databases like Azure. Has anyone else explored this as an option? I know there are some limitations (database size, costs, etc), but I haven’t had a chance to dig deep yet. Any guidance?

Marc – typically pure cloud databases like SQL Azure have too many limitations to function as HA/DR to onsite SQL Servers. You can’t do transaction log backups or database mirroring to SQL Azure. You can use these techniques with hybrid cloud solutions like Amazon EC2. The pros & cons of these are beyond what we can cover in comments, but you can start your research with this post by Jeremiah:

https://www.brentozar.com/archive/2011/09/sql-server-ec/

AWESOME comment. I’ve been searching for ways to present the options for RTO and RPO to the business, and I like your analogies.

Somehow, I’ve never lost any data yet due to crashes. The closest I came was when an really bad apple, who happened to be our lead Windows guy, converted a smallish manufacturing-related server from local hard disk storage to local raid (yeah, this was back in the day). He took a log copy of the database without grabbing a baseline, took an OS copy of the database without stopping SQL Server, and then re-used the original disk in the raid array, destroying its contents. If we would have restored to the most recent backup we had that was usable, we would have re-fulfilled orders. Since I was clever enough to not trust this guy at all, and the database was small, I had a redundant set of backup jobs that backed it up to my workstation, so I restored from those.

I have, however, lost data through my own ineptitude. I once truncated a 1-billion-row production table instead of the scratch table I was building from the production table. Hey, you know, let’s start over … I’ll just insert the words “TRUNCATE TABLE” here before the table name, highlight that part, hit Ctrl-E … oh, that wasn’t the right table, was it? Oops.

I have, however, caused data to disappear

ah… proper dba procedures for executing DML queries.

1) You shouldn’t be executing queries on a production server because the programmers should be writing good code.

2) Umm 1 isn’t always the case… maybe never.

3) Destructive code… such as truncate… put it between /* */ and then put —- before it.

test it on a dev db first.

4) delete and update statements. Put them in a transaction statement.

Don’t execute them without being able to roll them back. Do it on a dev db first.

5) know that top * doesn’t work with delete. Use CTE. If you are doing this, there is probably poor data architecture.

6) non DML select queries, use (nolock) hint on tables or isolation level of READ UNCOMMITTED.

Paul – interesting thoughts. Thanks for stopping by!

That post was supposed to have more in it – here’s the rest in a nutshell.

I was going to say that I have, however, caused data to disappear due to my own ineptitude, such as the time I truncated the wrong table. And by “wrong table,” I mean “one-billion row production table.”

Oops – you can delete that last one and this one if you’d like. Sorry for the wall spam – the trailing partial sentence on the first post fooled me.

See how easy it is to mess up data?

This is a great article but Mark J. stole my reply. In my experience, regular backups are not nearly as important as being able to actually restore the systems and databases after a disaster. Consider that if your systems are hardware-based (as opposed to Virtual or HyperV platforms), you roll the dice when trying to recover them. Ok, you build a new system but economize by buying a 2-year-old MB. It’s fine. It works, but when it gets smoked by an errant lighting strike or an overloaded power supply, you’re pooched. You return to the supplier only to find that MB is no longer available so the system images you created won’t boot on newer (probably better) hardware.

The solution: HyperV. System backups are dramatically easier (albeit more expensive). Restoration is not hardware dependent; just build up a state-of-the-technology platform and install a HyperV host. To that, you restore from your normal backup.

BUT, folks you gotta do it for real. Get a binder and record (on paper) the steps needed to start rebuilding from a credit card. Who can supply the hardware, who ELSE can supply the hardware, and the rack, the broadband connection and the UPS. Document everything from the A/C wall plug and where the broadband comes into the building. What steps EXACTLY does it take to set it all up and get the OS running and updated? Document it. Document performing the restore as if you were not there to do it (you probably won’t be). Have a place to note what didn’t work when you tried all of this in a practice run. Now photocopy the binder pages, scan and save them somewhere–like email it to yourself. Get a copy of the binder off-site. This does not mean two floors below you on 2 Wall Street–it means in another county.

Get the binder out every year or so and practice it again. Rinse and repeat as necessary.

I’m a data guy and while retired, I have seen too many good companies go under because of a bad power supply that the supplier got on the cheap.

Or put another way: Good resume, good backup. You only need one.

These stories are much better than anything on TV. Can’t wait for the next episode. Educational and entertaining. DBA 🙂

Great post and great responses.

The link for Backup & Recovery Step by Step seems to be dead

Mike – great catch! I’ve removed that. Thanks!

hi, nice post!

I’ll just pray that it won’t happen when I’m on call duty 😉

BR

Gerald

[…] Get the RPO/RTO HA/DR worksheet […]

[…] Here is a script you can use to check the backups on your server. When you run this and don’t see any dates, or the dates are from 3 years ago you have a problem. Current dates are a good sign that your backups are succeeding. Even if the dates are current you should still investigate further to understand the backup solution that is in place and how it is meeting your RPO and RTO goals. […]

We are currently using “Commvault” as a backup solution. Backups are being performed nightly but when sp_blitz is executed, it shows “Backups Not Performed Recently”. Is there a way to get Commvault to update SQL Server when it runs each evening?

Your best bet there would be to check with your Commvault admins and support. It sounds like they’re doing dirty snapshots rather than app-aware backups.

[…] much as possible. We want redundant storage, redundant servers, redundant networks. We agonize over RPO & RTO. But how much of that is necessary? Are we actually worrying about the right things? Are we […]

There are three columns: high availability, disaster recovery, corruption, and “oops” queries.

Am I missing something? It looks to me like there are four columns? Or was this just a trick to see who is paying attention?

Hahaha, great catch! Fixed.