StackOverflow went through a few infrastructure upgrades this week. Jeff Atwood blogged about the changes, and Kyle Brandt goes into more detail. Here’s a short recap of some of the SQL Server changes:

- Changed physical server models (from IBM/Lenovo to Dells)

- Went from two quad-core Xeons to two six-core Xeons

- Enabled hyperthreading (so it looked like 24 cores)

- Went from SQL Server 2008 to SQL Server 2008 R2

- Went from 6 SATA drives to 8 SATA drives (yes, they’re really doing over 2 million page views a day using a handful of cheap drives, but it’s all in the l33t programming, because they do their own caching)

- Did some index tuning

- Moved from one datacenter to another

That’s a lot of moving parts. When the dust settled, the CPU use on the SQL Server had gone up, not down. So where do we start troubleshooting?

I love good developers because they do unit testing. (Yes, readers, that means if you’re not doing unit testing, you’re not a good developer.) The most reliable way to troubleshoot is to boil things down to the simplest repeatable test possible. They identified two different queries that had dramatically different results between the old server and the new server. I looked at the execution plans produced by these queries on both the old and new servers. Everything was identical – same plans, similar reads, similar results – except for one thing: CPU time. You can measure CPU time for a query by executing SET STATISTICS TIME ON in SQL Server Management Studio. From that point forward, all queries in that session will have a bonus set of metrics in the Messages tab listing the milliseconds of CPU time used.

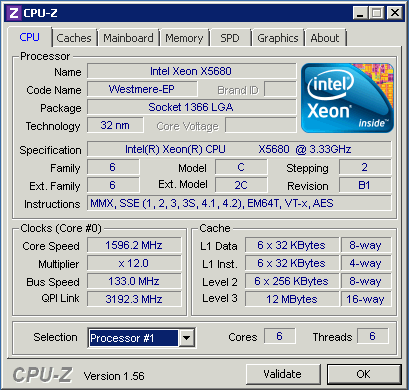

The same query doing the same amount of work took about twice as many milliseconds on the new server as it did the old server. That meant we had two possibilities: either something under the hood of SQL Server 2008 R2 had gone horribly awry and was doing twice as much CPU work for the exact same execution plan, or the new CPUs were twice as slow. The server manufacturers said the new CPUs ran at 3.33GHz – but did they really? I busted out the trusty free CPU-Z tool and ran it on the new servers:

Compare the “Specification” line to the “Core Speed” line. As we say in the business, wah wah wah waaaaah.

The new CPUs were Intel’s diabolical power-saving Nehalems, which have given me no end of grief. My hat is off to them for saving the planet by using less power, but my pants are down to them for killing performance without informing the users. In the last few weeks, I’ve seen several cases where server upgrades have resulted in worse performance, and one of the key factors has been throttled-down CPUs. In theory, the servers should crank up the juice according to demand, but in reality, that’s rarely the case. Server manufacturers are hiding power-saving settings in the BIOS, and Windows Server ships with a default power-saving option that throttles the CPU down way too often.

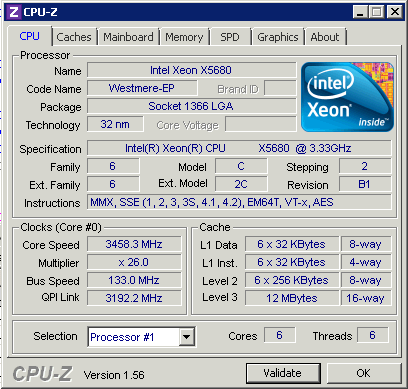

I was able to prove the problem by running several simultaneous load-test queries that push SQL Server’s CPU hard. When running just one or two of them, the processors didn’t speed up, but if I ran enough of them, the server said, “Alright, alright, I get it. I’ll speed my cores up. Jeez.”

The instant I reduced the number of running queries, the CPUs got lazy again. If you think that’s bad, there might be servers in your datacenter right now running just 800MHz, and they won’t even speed up when they’re under load:

There’s a few morals to the story:

Periodically test your CPUs with CPU-Z. Know their spec speed and their current speed. Saving power by throttling down is fine, but understand that they may not run full speed until they’re under very heavy load. StackOverflow was consistently running 50-60% CPU load, and the processors still weren’t speeding up. Since the power-saving speed was 1.6GHz, their CPU-intensive queries were literally running half as fast as their old server.

When you change your infrastructure, minimize variables. If you change half a dozen things at once, troubleshooting performance is much more difficult. I used to work at a company that did periodic outages to fix infrastructure issues like battery backups and air conditioning, and one of our IT team loved to take advantage of those excused outages to upgrade all his servers. More memory, different network cabling designs, new software versions, you name it, he’d do it. When the power came back on and his servers didn’t work, his users kept asking, “But I thought all we were doing was repairing the air conditioning?”

A/B test your solution with the smallest unit test possible. In StackOverflow’s case, we narrowed it down to just two queries, and we set up a separate testing environment where we could try out different solutions. When we narrowed it down to the CPU clock speeds, we were able to instantly say with confidence, “Yep, we found it, and now we can take production down to fix it.”

42 Comments. Leave new

Brent,

Good info on this issue – very important for DBA and infrastructure staff to be aware of the impact and the resolution.

FYI – Glenn Berry also covered this subject in two posts earlier this year (see links below), and spoke of the Windows Server power management configuration changes to resolve the issue:

http://glennberrysqlperformance.spaces.live.com/blog/cns!45041418ECCAA960!7101.entry

http://glennberrysqlperformance.spaces.live.com/blog/cns!45041418ECCAA960!3642.entry

Of the 3 provided power management profiles in Windows Server (“power saver”, “balanced”, “high performance”), it appears that the default (and “recommended” – out of the box) power management profile is “balanced”. Changing this setting to “high performance” can make a measurable difference, based on Glenn’s results. Seems like a relatively simple change – who knew?

My personal opinion: Aggressive power management profiles (less than “high performance”) are great for laptops (where battery life is a concern) and maybe some servers (where power savings is a greater concern than high performance), but should be avoided on servers having a greater concern for high performance over power savings (like DB servers, VM host servers, etc.). It would be great if Microsoft offered a revealed dialog for the power management setting during OS installation, with perhaps the same default setting of “balanced” but also with the ability to change the setting at OS install time, so that these choices are more explicit and revealed (and less implicit and hidden as they may be now – with the resulting downstream issues).

Kind of like the “black art” of disk volume alignment (awareness and resolution) prior to Windows Server 2008 and the automatic creation of 1 MB alignment disk volumes (due to inappropriate default OS settings or actions of the past) – no?

My thanks to you and Glenn for revealing this issue to DBAs everywhere!

Scott R.

Great post Brent, good to know these things.

Im curious if you have looked into the Windows 2008 R2 core parking feature. I would expect it would behave in a similar way but as yet I haven’t had the opportunity to test.

No, I haven’t touched core parking at all.

This is a great post, and a problem I will be keeping an eye out for in the future.

I’m curious your unit testing and how you’re using them. I’ve grown up with the approach that Unit tests should have no external dependencies, including the database. Are your code unit tests calling into the database or did you pick this up with SQL Server based unit testing?

Adam – I’m guessing by your comment that you’re a developer. Developers do unit tests of their code, which is good, and database developers do unit tests of their work as well – stored procedures, views, queries, functions, and the like.

Brent,

I am seeing similar problem on my server where CPU-Z reporting lower numbers than what spec says. Is there particular BIOS setting that has to be tweaked to make sure CPUs always operate at full capacity?

Thanks,

Igor

Igor – yes, the settings depend on your server manufacturer, plus you’ll want to go into the Control Panel in Windows, go into Power, and set it to High Performance. Both the BIOS and Windows settings are necessary.

Thanks Brent!

Hi Brent,

Thanks for the post, i’m also seeing lower numbers than the specs. The server is running some background processing. However if I run a query the core speed jumps to the full speed, which sounds as works as designed. I’m still going to search for the bios settings though and change to maximum powerrrr.

Thanks a lot!

Remco

Remco – if you run just *one* query and the server goes to full CPU speed, that’s great, but that isn’t what most customers are seeing.

Interesting, I have that exact HP model server and problem you describe! The server is two years old now and has run (mostly) great; fixing this should make it feel new again! Thanks!

Great article and web cast too, thanks Brent.

I tested out 3 servers. All 3 had balanced power plans. Two of them were running at 1.6 GHz while having Xeon 2.53 GHz CPUs. The 3rd one (also balanced) has 2 Xeon 3 GHz CPUs and was measured by CPU-Z several time running at top core speed, even though the CPU usage was at single digit percentage.

Tim

Great post. I recently took over as Sys Admin with a department that has a fairly beefy computational server.

I ran the tool on our “beefy” server and all processors were running at 800MHz…needless to say I’ve made some changes ;}

Thanks!

Very useful post. We’re auditing our servers to confirm and adjust their settings if necessary.

A couple of quick questions:

1.) Does higher-performance settings reduce the lifespan of the hardware? (thinking along the lines of heat and wear) Just something to consider.

2.) What kind of extra power-consumption is involved? With one or two servers and a monthly fee, who cares. But with thousands of servers, increasing your power consumption by 10% can get expensive.

3.) What effect does power consumption settings have in virtualized environments like EC2?

Thanks!

Rob – unfortunately, those are *anything* but quick questions, heh. They’re great questions, beyond what I (or anyone) could answer off the tops of our heads. These are interesting questions, but typically beyond the realm of SQL Servers that are performance-focused. If you’re performance-focused, you’re not running the hardware for more than 3 years tops anyway, and you’re completely okay with extra power consumption. Hope that helps!

Congratulations on having the most optimized DB I/O I’ve ever heard of. I’ve never before heard of a 8 core system with 6 SATA drives that needed CPU expansion. I’m guessing your data set is so small and read dominant that 97% of your data is buffered all the time?

Ryan – yep, the StackOverflow guys have worked *really* hard at optimizing IO, plus they do some CPU-intensive things that are a little outside of the norm for most SQL Server implementations. Your guesses would make total sense for most SQL Server implementations, but StackOverflow is indeed outside the norm of what most folks see.

Windows Server 2008 R2 has some new perfmon counters under Processor Information that allow this kind of information to be teased out – particularly % of maximum frequency…

Pah! My system admins would never setup our corporate SQL Server ESX cluster system like that.

http://serverfault.com/questions/200792/

Oh, I really hope I’m wrong…

In the big picture though isn’t this correct behaviour? I mean you’re looking at detailed stats and unit tests but under genuine load with real users isn’t the server doing what it’s supposed to do and all will work as it should?

John – unfortunately, no. Our production servers weren’t going up to full CPU speed even under load.

The problem is that the power management features do not react quickly enough to a change in the workload for the processor to increase the clock speed of the core in a timely manner, especially for typical OLTP queries.

Thus, you see a pretty significant performance hit when you use the Balanced power plan.

The extra power usage per processor is fairly low, especially compared to the overall power usage of the server

Brent, there is a MS KB on this subject:

http://support.microsoft.com/kb/2207548/en-us

Thanks for the link!

My i7-920 on Win7 throttles down to ~1.6ghz at home and parks idle cores, but it will jump back to full speed the instant I need it.

I wonder if the server has a bios issue that is causing the CPU to not ramp back up to full speed when needed.

Intel documentation shows clock speed changes timed in single digit micro-seconds. There’s no good reason why your chips aren’t ramping up when needed.

When I look around the web, Nehalem and Win7/2k8R2 speed issues caused by power savings are random. I would assume the randomness is caused by driver/bios issues.

I know my cousin recently was testing some potential i7 servers for his datacenter and he said the new xeons were about twice as fast as the previous generation of xeons for their SQL tests. But he does run Solaris/Linux, so it’s not MS-sql.

Brent, Does this issue apply to Windows 2003 as well?

Praveen – yep, absolutely, this is a hardware issue.

In order to get core speed eq to spec, did you change your power scheme to high performance, or was this strictly a BIOS change?

NM – I use the BIOS exclusively because in every server I’ve worked with, the hardware has had a setting that will override the OS. That way, if someone rebuilds the OS down the road, I don’t have to worry about them getting the setting right.

Brent, thanks for the help. Hey, I went to the download site for CPU-Z and came across what to do if when running the utility your computer BLUE SCREENS!!! 🙂

I’m usually liberal about running utilities like this, but this worried me. Have you ever had any issues with this utility?

Thanks,

Mark

I haven’t, but that doesn’t mean there aren’t any. 😉

Helpful article. It did provide us with some additional information about a server that was under-performing, so now we’ll need the hosting to try and change the setting to see if it helps.

Thanks.

We ran into a similar issue on a much newer HP server. There is a BIOS setting as described here that overrides the Windows setting: http://serverfault.com/a/196329/6390

We changed this, and updated the BIOS / iLO, and used High Performance, and our performance significantly increased (40% in our end of day process).

Changing back to Balanced had minor effects – the big wins were apparently in the BIOS/iLO. (We didn’t have enough of a window to change one thing at a time, alas).

I’m running into an interesting issue with a client server. It’s a HP ProLiant BL460c Gen8 Server with 2 Intel Xeon E5-2667 v2 processors. I ran CPU-Z and found that the processors were running at less than half their rated frequency, even under load.

I don’t have access to the BIOS settings, so I altered the Windows Power Plan to the “High Performance” plan and the first processor immediately jumped to it’s correct speed.

However, the interesting thing is that the second processor continues to run at the throttled frequency. I don’t know if a re-boot will address that or if I need to get IT involved to change the BIOS.

I just thought you might find that interesting. Thanks for the article.

David – yeah, stuff like that is always tricky. You’re best off getting onto the latest version of the server’s firmware too – there’s often bugs with this type of thing.

You came to our offices back in 2011 and the first thing you did was run CPU-Z which revealed that our database server was running at half power. Was I surprised! It turned out to be because our very lazy infrastructure guy set it to that to conserve power because there was not enough available power to our rack for all the servers in it. He’d never thought to mention that to me through all our performance troubleshooting.

Heh, yeah! Gotta love those easy, free fixes.

Hi Brent! I was wondering why on sp_Blitz you get the power plan warning as a 250 priority and only with the flag “CheckServerInfo”. Don’t you think it would be better to show it as a priority 50 or something “easier” to spot?

Because not everything can be priority 1, hahaha, and because it requires a pretty serious outage to change (because you also have to hit the hardware settings in the bios), and because not everyone is CPU-bottlenecked.

But at the same time istant file initialization is a priority 50 warning and requires a service restart as well.

In my opinion unusued CPU power sounds scary as hell (at least that’s how i reacted when i found out this warning when running sp_blitz in my environment).

I understand that not everything can be priority 1, but maybe this warnign deserves something more.

What do you think?

Should I go and submit a feature on github?

Also, thanks as always for everything you do for the community!

Mattia – I hear what you’re saying, but we’re not going to change the existing output unless there’s a really compelling reason to do so. People around the world have built tools on top of sp_Blitz, and I don’t take changing the output lightly.

I totally hear that one particular warning is important to you, and that’s great! Lots of folks find different things important in the result set. You know how to get this data, and you know how to read the result. You’re getting what you need out of the tool.

In terms of being opinionated about what matters more or less to YOU in the result set, that’s cool, but…as the maintainer, I can’t really go around changing the results every time someone has an opinion.

I hope you get value out of the free tool. Thanks!

I understand, crystal clear as always sir!

Also, I’m going to get a lot of value from paid tools of yours as well ?!