People love to search.

Google has us addicted to fast, easy search functions. Users expect every application to have a built-in blazing-fast search functionality. To pull that off, developers build search queries that let users enter a string, and we ask SQL Server to find matches. For example, say our users need to find some nuts. We take their string, put percent signs on either side, and then search for that field in the product names:

|

1 2 3 |

SELECT * FROM AdventureWorks.Production.Product WHERE [Name] LIKE '%nut%' |

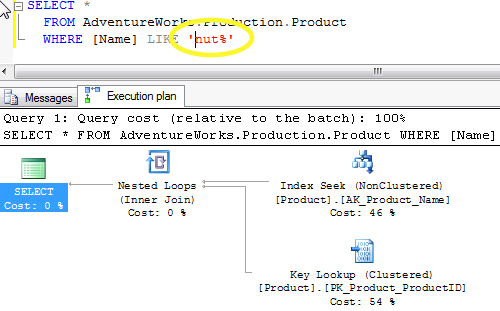

This works great on our machine – but when we scale it up to hundreds or thousands of simultaneous users, or if we ramp up the number of products, our response time sucks. Let’s find out why by checking the execution plan. In SQL Server Management Studio, click Query, Display Estimated Execution Plan, and we get:

There’s only one operation, which would sound like a good thing, but that one operation is a clustered index scan. That means SQL Server has to read every row out of the Product table, check to see whether it’s got “nut” anywhere in the name, and then return our results.

But wait – there’s an index on Production.Product for the Name field, and SQL Server will use that index if we have a slightly different query:

If we take the leading % wildcard out, notice that this time it does two operations. First, it does an index seek against our Name index, then it looks up the corresponding rows in the base table (Product). Two operations might sound more expensive, but if we looked at the total cost for each query, the second one would be faster in situations where we’re only pulling a small number of search results back. If this table was for a company called Nothin’ But Nuts, on the other hand, we would probably still need to scan the entire table, but that’s a discussion for another day.

So why doesn’t SQL Server use the index for the %nut% query? Pretend for a moment that you held a phone book in your hand, and I asked you to find everyone whose last name contains the letters HAM. You would have to scan every single page in the phone book, because the results would include things like:

- Beckham

- Chambers

- Hamilton

- Thames

If, on the other hand, I asked you to find everyone whose last name began with the letters HAM, you could turn straight to the H page in the phone book, scan through a few lines, and quickly get the information you need.

When I asked you for everyone beginning with the letters HAM, my query was sargable. When I asked you for all last names containing HAM anywhere in the name, my query was not sargable – meaning, you couldn’t leverage the indexes to do an index seek. (Yes, sargable is sort of a real word – it stems from the phrase Search Arguments.)

Since we can’t talk users out of using search queries, and since non-sargable queries don’t scale, we need to find a better way to search for text. That’s where SQL Server’s Full Text Search comes in. Unfortunately, your queries must change – normally when you add an index, your queries just magically become faster. Full text indexes don’t work that way. You have to tweak your queries to use operators like CONTAINS instead of LIKE. If you’re dealing with an old boat anchor of a database and the developers are long gone, you might be out of luck.

%String% is just one thing that will cause SQL Server to do slower scans instead of high-performing index seeks. To learn more about sargability, check out:

- Beginner-level: StackOverflow question: What makes a SQL statement sargable?

- Advanced-level: Rob Farley on SARGable functions

- Book: SQL Server 2008 Query Performance Tuning Distilled

- Implicit conversions are another common sargability problem. Read Jonathan Kehayias’ post on finding index scans due to implicit conversions, Tibor Karasazi’s post on the performance implications, Jonathan Kehayias’ post on implicit conversion performance issues, Craig Freedman’s post on errors caused by implicit conversions.

46 Comments. Leave new

Would non-sargable logic apply to non-SQL Server databases also? For example MySQL?

Yes, great question. Different database platforms have different abilities tonuse search arguments on indexes – check your MySQL documentation to see what applies to your database, because it definitely does apply.

Great post and reminder. I think the phone book is a good analogy for effective searching. I don’t present as much as some folks (like certain MCMs I know… 😉 ) but when I do a talk on database performance and SARGability comes into play I normally hand out two phone books to help illustrate the point with a search race. I enjoy seeing lightbulbs go off… I also take a ripped page of the phone book and say, now do a contains search… Sometimes you need to do ’em… But can you limit your data before that point?

lmao, that’s great. you linked to my SO post about sargable queries.

Just digging into full text searching a bit more. I realize that full text indexing indexes words, or combination of words, (as opposed to substring). So the clause

CONTAINS(‘firstname’, ‘linda’) returns all Lindas, but no Melindas. 🙁

Do others see this behavior to?

I pointed out to my favorite DBA that we should place indexes on those columns we were searching with wildcard characters and he promptly pointed me to this link where someone “in the know” explains the “phone book” analogy. The author’s theory is that SQL Server does not use the index for a query involving “LIKE ‘%nut%'” and he backs it up with execution plans, etc.

I can poke holes in that “phone book” analogy but we can leave that for another day (or not).

So I read the article and pointed out to my favorite DBA that if the statements made on this article are true then SQL Server is, disappointingly, not the great product I have always thought to be.

Having been the author of a successful embedded database engine in the 90’s I know a thing or two about optimizing queries inside a database engine and I couldn’t believe that SQL Server would not use an available index for a ‘%something%’ wildcard search. IMHO, it would simply be reckless for a database engine to ignore the index.

I will not bore you with the details of why and how an index speeds up ALL wildcard queries but I did a simple test and SQL Server showed its true quality.

1) I added 500K records to a table with 25 columns, all populated with completely random and unique data.

2) I then performed “SELECT * FROM TestTable WHERE C7 LIKE %something%’” which returned no results (as expected) in about 32 seconds. I repeated this operation several times to cause hydration of SQL Server’s cache and the query execution time leveled off at around 29 seconds.

3) I then added an index on column C7 and submitted the same query; the results? no results (as expected) returned in about 1 second. Subsequent resubmissions showed sub-second execution time.

4) I then removed the index from C7 and re-submitted the search several times; the results? 29 seconds.

Am I missing something?

Carlos – yes, SQL Server will indeed use the index on a wide table like the one you suggested, because it’ll use the index for scanning – but not seeking. If you’d like to post the execution plan XML somewhere public, I’d be happy to walk through that with you.

Hi Brent — Thank you for the prompt reply. I look forward to learning something from you. I uploaded the execution plan to http://www.simple-sw.com/Wildcards.sqlplan

Carlos – thanks for uploading that! It shows exactly what this article explains – you’re doing an index SCAN, not an index SEEK. SQL Server is ripping through every single page of the index and parsing every single record on every single page. The only advantage of the index is that it’s narrower (contains less fields) than the base table. It’s like having a copy of the phone book that only contains one column – if you’re looking for %ham%, you still have to examine every single field.

If you still don’t agree, check out Grant Fritchey’s excellent book, SQL Server 2008 Query Performance Tuning Distilled. It explains the differences between index seeks versus scans, and why SQL Server might choose to use the index versus the base table. I reviewed it here:

https://www.brentozar.com/archive/2009/04/book-review-sql-server-2008-query-performance-tuning-distilled/

Hope that helps!

BTW, I dropped all columns except C7 and reapeated the experiment with almost identical results except instead of 29 seconds it dropped to about 25 seconds without the index and <1 second with the index.

Hi Brent – It is not a matter of agreeing or disagreeing. The article basically says: “Don’t waste your time with an index because an index will not help expedite a wildcard query such as ‘%nut%'” and goes on to cite the “phone book” stuff.

Despite your explanation about execution plans, SEEK versus SCAN, etc., etc., do you at least agree that the gist of the article is incorrect? Actually, what is this article about after all? What am I missing?

My favorite DBA pointed me to this article as “definitive proof that indexes don’t work with wildcard queries such as %nut%’… Please enlighten me… My experiments show otherwise, with 1 column, with 25 columns, etc., etc.

Carlos – no, the gist of the article about sargability, meaning how SQL Server can parse your search arguments. SQL Server can’t parse %string% searches to a form where an index can be used for seeks. All it’s doing is using the narrowest copy of the data – for that matter, it could use an indexed view if that had the right fields, but they’re still not sargable.

Again, I hate doing this to you, but reading Grant’s book will help open your eyes as to what’s going on under the hood. I really do hope you take the time to pick it up – I promise that even a senior person such as yourself can learn some things about how indexes work. I still find myself learning new things about them all the time, even after the MCM program.

Have a good one!

Also, while I’m thinking of it – if you’re in a rush to learn it, you don’t even have to wait for the book. Check out the supporting links I put in the post, read through those, and that should help a lot.

Hi Brent — If I hadn’t already bought a Brooklyn bridge this morning I would gladly buy one from you. LOL.

If I were you I wouldn’t withdraw the article but at the very least I would suggest that you change its title to “Why %string% is lightning fast with SQL Server when you index the column, regardless of how many columns are present in your table”

This exchange has been very informative and I hope others will benefit from it as well.

Take care!

Carlos – (sigh) – I hate doing this to you, but again, you’re wrong.

The reason you think %string% is lightning fast is your test was wrong. You created a table with a bunch of columns, then deleted those columns and got similar test times. But what you forgot to do was do a dbcc dbreindex (or a similar alter index statement) after dropping those columns. You need to defrag/compact your table so that it takes less physical pages, and then you’ll get a more accurate test result. When you dropped those columns, SQL Server didn’t go back and repack your table into the right amount of space on disk – it still has to read through tons of empty space on each page.

And again – you would know this if you’d read Grant’s book.

I really hope for your sake (and definitely for your DBA’s sake) you read that book before you keep drawing conclusions like this.

Ok, this is my last reply because you appear to be in some kind of trance or state of denial.

Yes, you are correct in that I simply dropped the columns from the table to test with a 1-column table.

So, to get to the bottom of this argument, I created a new database from scratch with a single table with a single column and added 1,000,000 records to it. The SQL statement “SELECT * FROM TestTable WHERE C1 LIKE ‘%something%’” takes approximately 3.2 seconds without the index and 2.1 seconds with the index.

A 15% performance gain is significant but, even more important than that, how useful is a table with one column? Who in their right mind is going to be submitting wildcard queries against a 1-column table? Is that the point you have been trying to get across all along? I hope not because %string% is not slower even in that case.

By the way, defending your side of an argument by sending the other guy to read a book almost always backfires. There is no substitute for common sense. In the end your readers decide…

If you are going to bill yourself as an expert you better be prepared to defend the stuff you spew.

All the best!

Carlos –

I should just ignore this post but since I am subscribed to the comments, I couldn’t not respond. You started of with one-upmanship, ignored the main point in the article and then ignored the point Brent was trying to make. Brent is most certainly an expert and is one of a select few who have taken and passed the Microsoft Certified Master exam for SQL Server. He knows his stuff and has been backed up….

At any point… You seem to be missing the point….

It is no surprise at all that a table with an index will perform better even on the wildcard… Brent never stipulated that point in his article. He simply said that doing a wildcard more or less makes the index useless from a SEEK point of view… Doing an index scan can definitely be more efficient than doing a table scan.. But you are STILL doing a scan…

To illustrate Brent’s point you would actually try and do a search on a begins with search or equals search. ‘value%’ instead of ‘%value%’… Depending on how unique the rows in your table are, you may get an index seek and return far fewer pages of data which means less work which means a faster query.

Your “testing” doesn’t point out that wildcard searches are fast and it doesn’t point out that wildcard searches use an index (as someone who apparently developed a database system themselves, you should understand that just doing a scan isn’t really the “using” we refer to when we talk about using an index)… Do a query for “Select * from tablename” on that table and your results will be the same as far as the number of pages that have to be scanned. Sure, depending on your search it may take a bit longer to come back to your client but in terms of I/O —> It’s the same….

The phonebook analogy is a great one here… If I were to say to you “go find me all the last names that contain ‘als’.” There is no way that you can do an index seek on the last name column. Instead, you have to run your thumb over every name, and see if it contains ‘als’ and make a list of them… Think of that as an index scan… If I said, find all the last names that start with ‘wals’, you can seek directly to where the ‘wals%’ names start and stop when you get to ‘walt%’ —> Kind of like an index seek, no???

So your DBA is right in that you aren’t gettin the benefit of an index seek because you are having to scan through all records… You are right in that an index scan can be better or less work than a table scan or even a scan on a wider or different index (if all you are bringing back is one column and that column is indexed, for example)… Brent is right in what he says but you seem to miss the main point of the article, I fear.

I’ll also add that Grant’s book is an excellent book. Another great book on SQL Server is the book Brent co-wrote with some other SQL Server experts. Since you are now stuck working on a database system developed by a bunch of fools that aren’t as intelligent as you, check those books out. It will help you come down to our level….

😉

Well said, Mike! u da man!

For all the SQL Server experts here, but mainly for other users who will visit this article in the future, I need to support Carlos here. The article is partially incorrect and misleading, because it sounds like SQL server would do a table scan, not just an index scan (which is, usually, significantly faster). I don’t deny that index scan vs index seek are different operations 🙂

I’m talking about this sentence

“That means SQL Server has to read every row out of the Product table, check to see whether it’s got “nut” anywhere in the name, and then return our results.”

Mirek – no problem, you’re certainly welcome to support him. An index scan may be faster – but it’s still nowhere near as fast as a seek, and the problem gets dramatically worse as your table gets more data.

Well thanks a lot Brent for sharing such a great stuff with immature readers like me in a fantastic way and i really appreciate being a critic but for sentences like “this is my last reply because you appear to be in some kind of trance or state of denial.” and “The article is partially incorrect and misleading” i am deeply hurt. The experts of such levels should not comment like this way rather they should bring some facts and figures to support their points and most importantly try to understand what the author is trying to convey. I hope i am not being offensive here. Keep the good show up Brent 🙂 and my dear critics.

Thanks, glad you enjoyed the post!

Great artical Brent, I have been following you for years and I know you know your stuff. This issue has been a pain for me and I’d like to get it resolved instead of running queries like this at night when performance is better and I have less users on the server. I’m going to list what I’m purposing to do in this case.

I have a wildcard of ‘AMG/%’ (thank god it’s at the beginning of the field)

e.g.: where addressName not like ‘AMG/%’

I’m going to have the DBA or Developers create a clustered-index the fields as needed…

And review the processing time after these changes have been implimented…

I wanted to know how the CONTAINS() would act in this case as a replacement for the “not like ‘AMG/%'” keeping in mind the wildcard will still be in play…?

This is a very important topic to everyone who uses MS T-SQL, performance in such cases are killing me…

Thanks again Brent….

Tyrone – thanks, glad you like our work.

Why not try it on your own development server to find out exactly how it’ll perform with your data? I’m all about learning via experiments – it’s a great way to teach yourself more about how things work. Enjoy!

Thanks for this post. FYI the link to Rob Farley’s site appears broken. I would imagine you want http://blogs.msmvps.com/robfarley/2010/01/21/sargable-functions-in-sql-server/

Boy those comments were fun on this old post 😉 Wasn’t sure they were going to stop digging…

I landed here after searching because I am seeing kind of an odd and misleading operator in a plan where this is indeed the case…I have a query doing WHERE IndexedColumn LIKE ‘%’ + @param + ‘%’. Unsurprisingly it is slow, then I open the plan and see….Index Seek?? Actually a compute scalar adding the % on each side, then a Index Seek. Per stats IO, it still is scanning the whole thing, as I expected, but before I had reviewed the code, the execution plan looked innocuous, nice quick index seek, no high rowcounts (I’m on 2014 but don’t have the Actual Rows Read hotfix in yet). Have you guys ever seen SQL Server do this? I would’ve expected an Index Scan…

Nic – thanks, glad you liked it! Rather than guess, though, the only way I troubleshoot execution plans is to paste ’em:

http://pastetheplan.com

We built that so people could paste their plan and then ask questions about it on Stack. Give that a shot!

Sure, its not a problem per se, more just a curiosity, that it shows up as a seek and not a scan (even though it is de facto scanning…). Again, don’t need it fixed, just wondered if you guys have seen a change in operators with v12 and on…or if older versions do the quasi-seek as well.

I mocked up a “safe to share” version here, very simple version, and resulting plan:

https://www.brentozar.com/pastetheplan/?id=rkNlxx4yx

Setup to duplicate:

CREATE TABLE SampleTable (Col1 int, Col2 int, Col3 int, Col4 VARCHAR(12))

GO

INSERT INTO SampleTable

SELECT CAST(RAND() * 1000 as INT), CAST(RAND() * 1000 as INT), CAST(RAND() * 1000 as INT), SUBSTRING(CONVERT(varchar(40), NEWID()),0,12)

GO 10000

CREATE CLUSTERED INDEX cix ON SampleTable (Col1 ASC)

CREATE NONCLUSTERED INDEX ncix ON SampleTable (Col4 ASC)

GO

DECLARE @var VARCHAR(12)=’12’;

SELECT Col4 FROM SampleTable WHERE Col4 LIKE ‘%’+@var+’%’

Huh, same behavior same all the way back to 2008R2 at least. It’s a good way to disguise an expensive operation in an estimated plan, that’s for sure! 🙂

sargable isn’t a word.

This query is fully optimizable. It can use a trigraph index. Because your crappy inferior and overpriced database doesn’t support that doesn’t make any of these generalizations correct. It’s just an implementation detail that you don’t yet have a kosher indexing method.

http://dba.stackexchange.com/a/161543/2639

Allllllrighty then. I’ll be over here: https://en.wikipedia.org/wiki/Sargable

Totally a word. Sargable is an adverb, it has an etymology.

It’s a “speech sound or series of speech sounds that symbolizes and communicates a meaning without being divisible into smaller units capable of independent use.” So by use and definition, it’s a word.

It’s just not a very pretty word.

I found this post and it relates to a problem I am dealing with. I am working on converting a heap table to a clustered table by adding a primary key to the identity column:

TestTable

——————-

ID

ItemCode

Descr

(20 more columns; etc.)

Right now the table is a heap and I have a query that does a wildcard against the ItemCode column (varchar(250)):

SELECT ID, ItemCode, Descr, etc. FROM TestTable WHERE ItemCode LIKE ‘%something%’;

On a fresh SQL restart, if I run this query, it takes about 25 seconds, but subsequent queries take 1-2 seconds. After I add the primary key to convert the heap to a clustered table, if I run the same query as above, it takes about the same amount of time to get the first result, but subsequent results take 5-10 seconds.

The execution codes basically shows TestTable Scan for the first, and Clustered Index Scan for the second. Why would the heap return faster results on subsequent queries than the clustered?

Also, adding an index to the ItemCode table and changing the query to LIKE ‘something%’ is super fast for both.

I really want to get this table over to cluster but doing so slows the query that is used. Any suggestions?

For questions, head over to https://dba.stackexchange.com/questions

Thanks!

https://en.wikipedia.org/wiki/Sargable looks like its no longer valid.

Thanks for the heads up. Removed the link.

Very interesting article which explains “sargability”. I think the core issue is clear, if you search for ‘%thing%’ then SQL Server must do a full scan of the clustered index (or non-clustered index if there is one) to find the matches. One interesting thing I noticed, if you search a large number of rows SQL Server may do the scan in parallel. This speeds up the results, although at the cost of more CPU.

I have a table with 3,000,000+ rows and I need to do wildcard searches against one column. Ultimately I need to do starts with, ends with, and contains queries, but I am trying to optimize a step at a time.

I found an article that suggested a strategy for doing “ends with” searches. I added a second search column and stored the reverse of each string. I can then reverse the string I am searching for and do

where column = reversedstring + ‘%’

This, of course, is just as fast as the “starts with” query, as long as I apply indexes the same ways to both columns. A drawback is it takes twice as much storage, but it’s worth it to speed up searches.

Do you have any suggestions on how to improve the performance of %string% searches, or alternative approaches?

Patrick – unfortunately, architecture questions like this are pretty far beyond what I can do for free in blog post comments.

No problem. Thanks for the quick reply.

Hi Patrick, if you properly handle typed terms related with empty spaces, string cleansing exact matches, ordering and so on, here is the idea:

DECLARE @Input VARCHAR(100) = ‘Patrick Richards’

;WITH X(x) AS (SELECT 1 UNION ALL SELECT x+1 FROM x WHERE x<LEN(@input))

SELECT TermFromStart = RIGHT(@input,(LEN(@input)-x)+1), TermFromEnd = LEFT(@input,(LEN(@input)-x)+1) FROM X

After, you can do like: SELECT columns FROM your main table

JOIN String Index Table with your key column WHERE String Index Table.index column LIKE @Input+CHAR(37)

I’ve only worked in one IT job (the one I currently have), so I am often curious when I hear statements like “if the developers are long gone, you might be out of luck” — because they alert me to what might be industry trends that I had no idea about. Why would we be out of luck if the developers are gone? I assume this article is targeted to DBAs and/or DB Developers. Why would a DB professional be unable to write a query using a full text index?

Because the developers may have built the query into the app’s source code.

[…] Sargability: Why %string% Is Slow […]

[…] since our query isn’t sargable, that makes no difference on logical reads. We have no idea whether the <sql-server> tag is […]

[…] https://www.brentozar.com/archive/2010/06/sargable-why-string-is-slow/ […]