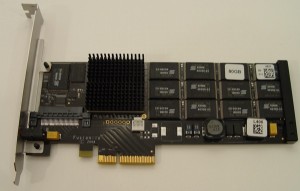

I don’t talk about hardware much here, but an SSD review hit Engadget this morning and made me take notice. Fusion-io gave their new 80GB ioDrive to TweakTown for a review, and –

Wait, hang on. Don’t close the browser yet. I know, everything about this post reeks of some tiny manufacturer with borked products and a one-page pamphlet instruction manual written in Engrish. TweakTown is not exactly the world’s most trusted source for enterprise-class database hardware, but bear with me for a minute. And for the record, Fusion-io is based in Utah.

So anyway, here are the stats that TweakTown (cough) is reporting:

- 80GB = $2,995, 320GB = $14,995

- Around 460 MB/s reads (for reference, their RAID 5 array of eight 15K drives got around 500 MB/s)

- Around 350 MB/s writes (reference array was around 370 MB/s)

- 0.05 ms read latency (reference array was around 5 ms)

- 0.02 ms write latency (reference array was around 2 ms)

Okay, it’s quick.
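To put rough numbers on "quick," here's a small back-of-the-napkin sketch that uses only the figures TweakTown reported above. The function and variable names are mine, invented for illustration, and none of this is a benchmark: it just does the division.

```python
# Figures as reported in the TweakTown review quoted above.
# All names here are illustrative, not from any vendor tool.

PRICES_USD = {80: 2995, 320: 14995}  # capacity in GB -> list price in USD

# Latency in milliseconds: (ioDrive, reference 8-drive RAID 5 array)
LATENCY_MS = {"read": (0.05, 5.0), "write": (0.02, 2.0)}

# Throughput in MB/s: (ioDrive, reference 8-drive RAID 5 array)
THROUGHPUT_MBPS = {"read": (460, 500), "write": (350, 370)}


def cost_per_gb(capacity_gb, price_usd):
    """Dollars per gigabyte for one card."""
    return price_usd / capacity_gb


def latency_speedup(iodrive_ms, array_ms):
    """How many times lower the ioDrive latency is than the array's."""
    return array_ms / iodrive_ms


def throughput_ratio(iodrive_mbps, array_mbps):
    """ioDrive throughput as a fraction of the array's throughput."""
    return iodrive_mbps / array_mbps


for capacity_gb, price_usd in PRICES_USD.items():
    print(f"{capacity_gb}GB card: ${cost_per_gb(capacity_gb, price_usd):.2f} per GB")

for op, (iodrive_ms, array_ms) in LATENCY_MS.items():
    print(f"{op} latency: {latency_speedup(iodrive_ms, array_ms):.0f}x lower than the array")

for op, (iodrive_mbps, array_mbps) in THROUGHPUT_MBPS.items():
    print(f"{op} throughput: {throughput_ratio(iodrive_mbps, array_mbps):.0%} of the array")
```

The takeaway from the reported figures: sequential throughput is slightly behind the eight-drive array, latency is about two orders of magnitude better, and the bigger card actually costs more per gigabyte than the small one.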

The Drawback: No RAID

TweakTown’s review was nothing short of glowing, but as a SQL Server DBA, I immediately had an issue with it. Notice that this isn’t a SATA drive – it’s an internal PCI-Express card. How do I protect against failure? Sooner or later it’s going to fail, because everything fails, and I want some level of redundancy. I can plug two of them into a server, but then I have two drives, and I have to implement software RAID. Ugh. Let’s say I’m okay with that (which I’m not) and I implement it – what kind of speed hit do I take?

Where the Fusion-io Drive Makes Sense

If I had a SQL Server with excessively high TempDB load, to the point where TempDB was the biggest bottleneck in the system, I’d buy one of these and put TempDB on it. After all, if TempDB fails, I haven’t really lost much. Heck, my SAN admins would probably buy it for me to get my TempDB off the SAN if the load was that bad.

If I had a SQL Server in a colo datacenter, and I needed more storage speed, this would be a fast way to get it without paying more monthly rental fees for space for a SAN. SANs suck up a lot of rack space, and a couple of these in each database server (most likely the larger ioDrives) would probably cost less than two years of colo space rental. Your mileage may vary.

And if I had a million dollars, as the Barenaked Ladies so elegantly said, I’d buy one for my girlfriend.

6 Comments

Is the advantage to this that it doesn’t run on the same bus as a regular RAID array? Otherwise, what would be the point of offloading the TempDB to it rather than a separate RAID array anyway?

I’m not arguing, just trying to understand.

These guys claim 800 MB/s of write throughput on their prototype. Do yourself a favor and fast-forward to about 2:00. Hardware geeks are painfully nerdy.

http://advancedstorage.micronblogs.com/2008/11/iops-like-youve-never-seen/

David – great question. The advantage is that there’s near zero latency as you jump around doing random access. If you have a lot of users hammering TempDB doing reports or sorting, you can see some heavy random access, and that’s where conventional RAID arrays really start to fall down. Since this solid state drive doesn’t have a latency penalty for random access, and since its latency is 100-200x lower than the spindles’, there’s a big performance gain there. I’d love to back it up with real-life testing, but at $3k apiece, I won’t be springing for one of those anytime soon.

Jason – hahaha, man, I’m starting to second-guess my video podcasting. I can just imagine what I look like to non-SQL people. Heck, even to SQL people!

Another place you might use it would be for tables or indexes that can be regenerated (I don’t know if that is possible).

Quick note about mirroring ioDrives:

Using the OS volume manager (MS Dynamic Disk or Linux LVM / MDADM / VxVM) multiple ioDrives can be RAID'ed for fault tolerance (RAID-1 mirroring), performance (RAID-0 striping), or both (RAID-10).

Because the software volume manager is just directing traffic, not touching any data, it consumes basically no CPU. Better yet, it doesn't slow things down like a HW RAID controller would – by being a central choke point in the data path.

The nice thing about being on PCIe is that each ioDrive has direct bandwidth into memory over the northbridge. The northbridge acts like a full crossbar, non-blocking switch.

Indeed, with 8 ioDrives in a box one can sustain over 6 GB/s of bandwidth and 400,000 IOPS from a single RAID'ed volume. That's in one box and represents over 50% of the memory bandwidth (it was an older Xeon).

With a hardware RAID controller you couldn't even get 1 GB/s.

A HW RAID controller only really helps with RAID-5 where parity must be calculated, but that's not generally used where IOPS are needed since it cancels out the additive effect across drives.

This goes to the classic adage of splitting the control path from the data path and accelerating the data path.

-David Flynn

CTO Fusion-io

dflynn@fusionio.com

David – thanks for the reply. Do you have any whitepapers or tests from independent sources to back that up? My experience with software RAID has been nowhere near that pleasant in terms of both manageability and performance.